Deep Learning Scooping Motion using Bilateral Teleoperations


Oct 24, 2018

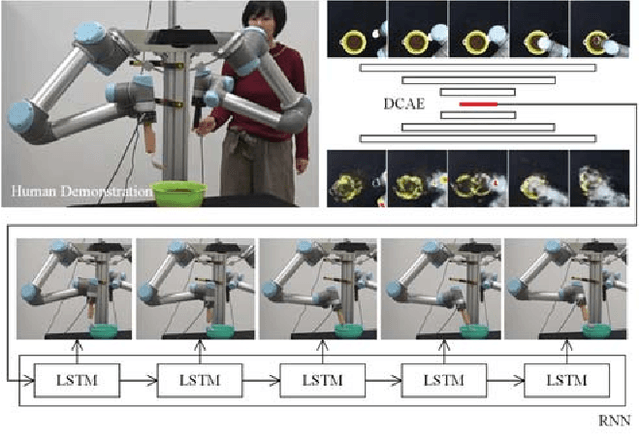

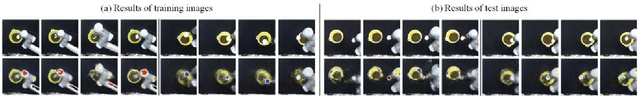

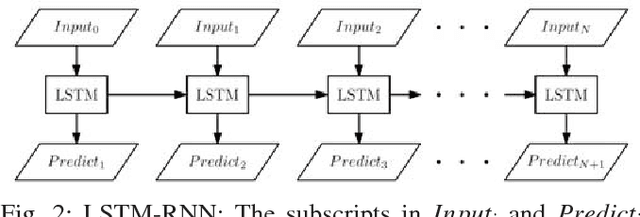

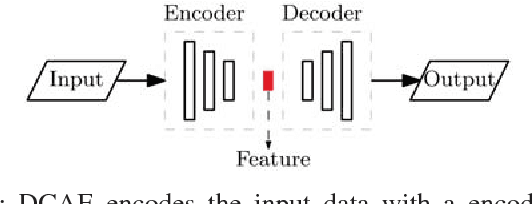

We present a bilateral teleoperation system for task learning and robot motion generation. The system consists of a bilateral teleoperation platform and deep learning software. The software learns from human demonstrations performed on the teleoperation platform, during which visual images and robot encoder values are recorded. It uses these datasets to learn the inter-modal correspondence between visual images and robot motion. Specifically, the software combines Deep Convolutional Auto-Encoders (DCAE) over image regions with a Recurrent Neural Network with Long Short-Term Memory units (LSTM-RNN) over robot motor angles to learn motions taught by human teleoperation. The learned models are used to predict new motion trajectories for similar tasks. Experimental results show that the system can adapt to generate motion for similar scooping tasks. A detailed analysis of the failure cases is performed, and insights into the capabilities and limitations of the system are summarized.
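The data flow described in the abstract (image features from an auto-encoder, concatenated with motor angles and passed through an LSTM that predicts the next motor angles) can be illustrated with a minimal, dependency-free sketch. This is not the authors' implementation. Every name here (`encode_image`, `TinyLSTMCell`, `predict_trajectory`) is hypothetical, the encoder is a simple average-pooling stand-in for a trained DCAE, the LSTM weights are random rather than learned, and feeding predicted angles back in at the next step is an assumption about how trajectories might be rolled out.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def encode_image(image, feature_dim):
    """Hypothetical stand-in for a trained DCAE encoder.

    Average-pools a flat list of pixel values into feature_dim numbers.
    """
    chunk = len(image) // feature_dim
    return [sum(image[i * chunk:(i + 1) * chunk]) / chunk
            for i in range(feature_dim)]


class TinyLSTMCell:
    """A single LSTM cell with random (untrained) weights, for illustration."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        n_in = input_dim + hidden_dim
        # One weight matrix and bias per gate:
        # i = input, f = forget, o = output, g = candidate cell state.
        self.w = {gate: [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                         for _ in range(hidden_dim)]
                  for gate in "ifog"}
        self.b = {gate: [0.0] * hidden_dim for gate in "ifog"}

    def _gate(self, name, activation, z):
        return [activation(sum(w * v for w, v in zip(row, z)) + bias)
                for row, bias in zip(self.w[name], self.b[name])]

    def step(self, x, h, c):
        z = x + h  # concatenate the input with the previous hidden state
        i = self._gate("i", sigmoid, z)
        f = self._gate("f", sigmoid, z)
        o = self._gate("o", sigmoid, z)
        g = self._gate("g", math.tanh, z)
        c_new = [fi * ci + ii * gi for fi, ci, ii, gi in zip(f, c, i, g)]
        h_new = [oi * math.tanh(ci) for oi, ci in zip(o, c_new)]
        return h_new, c_new


def predict_trajectory(images, start_angles, cell, readout, feature_dim):
    """Roll out motor angles, one prediction per camera image.

    readout is a (num_joints x hidden_dim) linear map from the hidden state
    to the next motor angles. Predicted angles are fed back as input at the
    next step (an assumed closed-loop scheme).
    """
    h = [0.0] * cell.hidden_dim
    c = [0.0] * cell.hidden_dim
    angles = list(start_angles)
    trajectory = []
    for image in images:
        features = encode_image(image, feature_dim)
        h, c = cell.step(features + angles, h, c)
        angles = [sum(w * v for w, v in zip(row, h)) for row in readout]
        trajectory.append(angles)
    return trajectory
```

In a trained system, the encoder and LSTM weights would be fitted to the recorded teleoperation demonstrations; here they only show how the visual and motor modalities are joined at each time step.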
