Muhammad Nabeel Asim

Dynamic Weight Adjustment for Knowledge Distillation: Leveraging Vision Transformer for High-Accuracy Lung Cancer Detection and Real-Time Deployment

Oct 23, 2025

Abstract: This paper presents the FuzzyDistillViT-MobileNet model, a novel approach for lung cancer (LC) classification that leverages dynamic fuzzy logic-driven knowledge distillation (KD) to address uncertainty and complexity in disease diagnosis. Unlike traditional models that rely on static KD with fixed weights, our method dynamically adjusts the distillation weight using fuzzy logic, enabling the student model to focus on high-confidence regions while reducing attention to ambiguous areas. This dynamic adjustment improves the model's ability to handle varying uncertainty levels across different regions of LC images. We employ the Vision Transformer (ViT-B32) as the instructor model, which effectively transfers knowledge to the student model, MobileNet, enhancing the student's generalization capabilities. The training process is further optimized using a dynamic weight adjustment mechanism that adapts the training procedure for improved convergence and performance. To enhance image quality, we introduce pixel-level image fusion improvement techniques such as Gamma correction and Histogram Equalization. The processed images (Pix1 and Pix2) are fused using a wavelet-based fusion method to improve image resolution and feature preservation. This fusion method standardizes images to a 224x224 resolution, uses the wavedec2 function to decompose them into multi-scale frequency components, and recursively averages coefficients at each level for better feature representation. To address computational efficiency, a Genetic Algorithm (GA) is used to select the most suitable pre-trained student model from a pool of 12 candidates, balancing model performance with computational cost. The model is evaluated on two datasets, LC25000 histopathological images (99.16% accuracy) and IQOTH/NCCD CT-scan images (99.54% accuracy), demonstrating robustness across different imaging domains.
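
As a rough illustration of a distillation weight that varies with confidence rather than staying fixed, the following sketch computes a per-sample KD loss. The triangular membership functions, the rule outputs (0.2, 0.5, 0.9), the per-sample (rather than per-region) granularity, and all function names are assumptions made for this example; the abstract does not specify the fuzzy rule base.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def fuzzy_kd_weight(teacher_confidence):
    """Map teacher confidence in [0, 1] to a distillation weight.

    Hypothetical rule base: low / medium / high confidence memberships,
    defuzzified as a membership-weighted average of assumed rule outputs.
    """
    c = min(max(teacher_confidence, 0.0), 1.0)
    low = max(0.0, 1.0 - 2.0 * c)                 # peaks at c = 0
    medium = max(0.0, 1.0 - abs(2.0 * c - 1.0))   # peaks at c = 0.5
    high = max(0.0, 2.0 * c - 1.0)                # peaks at c = 1
    weighted = low * 0.2 + medium * 0.5 + high * 0.9
    return weighted / (low + medium + high)


def kd_loss(student_logits, teacher_logits, label, temperature=2.0):
    """Blend soft-target KL and hard-label cross-entropy with a dynamic weight."""
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Teacher confidence is taken as its top probability at temperature 1.
    alpha = fuzzy_kd_weight(max(softmax(teacher_logits)))
    cross_entropy = -math.log(softmax(student_logits)[label])
    kl = sum(t * math.log(t / s)
             for t, s in zip(teacher_soft, student_soft) if t > 0)
    # The T^2 factor is the usual scaling for softened KD targets.
    return alpha * temperature ** 2 * kl + (1.0 - alpha) * cross_entropy
```

With this rule base, a confident teacher pushes the loss toward the soft targets, while an uncertain teacher leaves the student to rely mostly on the ground-truth label.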

FloraSyntropy-Net: Scalable Deep Learning with Novel FloraSyntropy Archive for Large-Scale Plant Disease Diagnosis

Aug 25, 2025

Abstract: Early diagnosis of plant diseases is critical for global food safety, yet most AI solutions lack the generalization required for real-world agricultural diversity. These models are typically constrained to specific species and fail to perform accurately across the broad spectrum of cultivated plants. To address this gap, we first introduce the FloraSyntropy Archive, a large-scale dataset of 178,922 images across 35 plant species, annotated with 97 distinct disease classes. We establish a benchmark by evaluating numerous existing models on this archive, revealing a significant performance gap. We then propose FloraSyntropy-Net, a novel federated learning (FL) framework that integrates a Memetic Algorithm (MAO) for optimal base model selection (DenseNet201), a novel Deep Block for enhanced feature representation, and a client-cloning strategy for scalable, privacy-preserving training. FloraSyntropy-Net achieves a state-of-the-art accuracy of 96.38% on the FloraSyntropy benchmark. Crucially, to validate its generalization capability, we test the model on the unrelated multiclass Pest dataset, where it demonstrates exceptional adaptability, achieving 99.84% accuracy. This work provides not only a valuable new resource but also a robust and highly generalizable framework that advances the field towards practical, large-scale agricultural AI applications.
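
For readers unfamiliar with federated training, the sketch below shows a generic FedAvg-style aggregation round on flat parameter lists. It is not the FloraSyntropy-Net procedure: the abstract does not describe how client cloning works, so `clone_global` is only one plausible reading, and both function names are hypothetical.

```python
def clone_global(global_weights, num_clients):
    """Give every client its own independent copy of the global parameters."""
    return [list(global_weights) for _ in range(num_clients)]


def federated_average(client_weights, client_sizes):
    """Average client parameters, weighting each client by its sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(weights[i] * size
            for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# One illustrative round: clone, pretend each client trained locally, aggregate.
clients = clone_global([0.0, 0.0], num_clients=2)
clients[0] = [1.0, 2.0]   # stand-in for client 0's locally updated weights
clients[1] = [3.0, 4.0]   # stand-in for client 1's locally updated weights
new_global = federated_average(clients, client_sizes=[1, 3])
```

Only parameters leave each client in this scheme, which is the basis of the privacy argument; raw images stay local.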

FOLC-Net: A Federated-Optimized Lightweight Architecture for Enhanced MRI Disease Diagnosis across Axial, Coronal, and Sagittal Views

Jul 09, 2025

Abstract: The proposed framework is designed to improve performance in the analysis of combined as well as single anatomical perspectives for MRI disease diagnosis. It specifically addresses the performance degradation observed in state-of-the-art (SOTA) models, particularly when processing axial, coronal, and sagittal anatomical planes. The paper introduces the FOLC-Net framework, which incorporates a novel federated-optimized lightweight architecture with approximately 1.217 million parameters and a storage requirement of only 0.9 MB. FOLC-Net integrates Manta-ray foraging optimization (MRFO) mechanisms for efficient model structure generation, global model cloning for scalable training, and ConvNeXt for enhanced client adaptability. The model was evaluated on combined multi-view data as well as on the individual axial, coronal, and sagittal views to assess its robustness in various medical imaging scenarios. Moreover, FOLC-Net tests a ShallowFed model on different data to evaluate its ability to generalize beyond the training dataset. The results show that FOLC-Net outperforms existing models, particularly in the challenging sagittal view. For instance, FOLC-Net achieved an accuracy of 92.44% on the sagittal view, significantly higher than the 88.37% accuracy of the study method (DL + Residual Learning) and the 88.95% of DL models. Additionally, FOLC-Net demonstrated improved accuracy across all individual views, providing a more reliable and robust solution for medical image analysis in decentralized environments. FOLC-Net addresses the limitations of existing SOTA models by providing a framework that ensures better adaptability to individual views while maintaining strong performance in multi-view settings. The incorporation of MRFO, global model cloning, and ConvNeXt ensures that FOLC-Net performs better in real-world medical applications.

Robust & Precise Knowledge Distillation-based Novel Context-Aware Predictor for Disease Detection in Brain and Gastrointestinal

May 09, 2025

Abstract: Medical disease prediction, particularly through imaging, remains a challenging task due to the complexity and variability of medical data, including noise, ambiguity, and differing image quality. Recent deep learning models, including Knowledge Distillation (KD) methods, have shown promising results in brain tumor image identification but still face limitations in handling uncertainty and generalizing across diverse medical conditions. Traditional KD methods often rely on a context-unaware temperature parameter to soften teacher model predictions, which does not adapt effectively to the varying uncertainty levels present in medical images. To address this issue, we propose a novel framework that integrates Ant Colony Optimization (ACO) for optimal teacher-student model selection and a novel context-aware predictor approach for temperature scaling. The proposed context-aware framework adjusts the temperature based on factors such as image quality, disease complexity, and teacher model confidence, allowing for more robust knowledge transfer. Additionally, ACO efficiently selects the most appropriate teacher-student model pair from a set of pre-trained models, outperforming current optimization methods by exploring a broader solution space and better handling complex, non-linear relationships within the data. The proposed framework is evaluated using three publicly available benchmark datasets, each corresponding to a distinct medical imaging task. The results demonstrate that the proposed framework significantly outperforms current state-of-the-art methods, achieving top accuracy rates of 98.01% on the MRI brain tumor (Kaggle) dataset, 92.81% on the Figshare MRI dataset, and 96.20% on the GastroNet dataset. These results surpass the existing benchmarks of 97.24% (Kaggle), 91.43% (Figshare), and 95.00% (GastroNet).
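
To make the role of the temperature concrete, the sketch below derives a temperature from the three context factors named in the abstract and uses it to soften teacher predictions. The hand-written linear rule, the [1, 5] temperature range, and the function names are assumptions for illustration; the paper's predictor is presumably learned and is not described here.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; higher temperature gives a flatter output."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def clamp01(value):
    """Restrict a value to the interval [0, 1]."""
    return min(max(value, 0.0), 1.0)


def context_temperature(image_quality, disease_complexity, teacher_confidence,
                        t_min=1.0, t_max=5.0):
    """Hypothetical rule: a harder context yields a higher temperature.

    All three inputs are assumed to be normalized to [0, 1].
    """
    difficulty = (
        (1.0 - clamp01(image_quality))
        + clamp01(disease_complexity)
        + (1.0 - clamp01(teacher_confidence))
    ) / 3.0
    return t_min + (t_max - t_min) * difficulty


teacher_logits = [4.0, 1.0, 0.0]
easy_t = context_temperature(1.0, 0.0, 1.0)   # clean image, simple case
hard_t = context_temperature(0.0, 1.0, 0.0)   # noisy image, complex case
easy_targets = softmax(teacher_logits, easy_t)
hard_targets = softmax(teacher_logits, hard_t)
```

A fixed temperature would apply the same softening to both cases; here the difficult case receives flatter targets that expose more of the teacher's relative class preferences.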

AI-Driven Diabetic Retinopathy Diagnosis Enhancement through Image Processing and Salp Swarm Algorithm-Optimized Ensemble Network

Mar 18, 2025

Abstract: Diabetic retinopathy (DR) is a leading cause of blindness in diabetic patients, and early detection plays a crucial role in preventing vision loss. Traditional diagnostic methods are often time-consuming and prone to errors. The emergence of deep learning techniques has provided innovative solutions to improve diagnostic efficiency. However, single deep learning models frequently struggle to extract key features from complex retinal images. To handle this problem, we present an effective ensemble method for DR diagnosis comprising four main phases: image pre-processing, selection of backbone pre-trained models, feature enhancement, and optimization. Our methodology begins with the pre-processing phase, where we apply CLAHE to enhance image contrast and then Gamma correction to adjust the brightness for better feature recognition. We then apply the Discrete Wavelet Transform (DWT) for image fusion, combining multi-resolution details to create a richer dataset. Next, we select the three best-performing pre-trained models, DenseNet169, MobileNetV1, and Xception, for diverse feature extraction. To further improve feature extraction, an improved residual block is integrated into each model. Finally, the predictions from these base models are aggregated using a weighted ensemble approach, with the weights optimized by the Salp Swarm Algorithm (SSA). SSA intelligently explores the weight space and finds the optimal configuration of base architectures to maximize the performance of the ensemble model. The proposed model is evaluated on the multiclass Kaggle APTOS 2019 dataset and obtains 88.52% accuracy.
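
The final aggregation step can be sketched as weighted soft voting over per-model class probabilities. Note that the weight search below is plain random search, used only as a simple stand-in; it is not the Salp Swarm Algorithm, and the function names and toy probabilities are invented for this example.

```python
import random


def weighted_ensemble(prob_sets, weights):
    """Fuse one probability vector per model using normalized weights."""
    total = sum(weights)
    normalized = [w / total for w in weights]
    num_classes = len(prob_sets[0])
    return [
        sum(normalized[m] * prob_sets[m][c] for m in range(len(prob_sets)))
        for c in range(num_classes)
    ]


def accuracy(all_probs, labels, weights):
    """all_probs[m][i] is model m's probability vector for sample i."""
    correct = 0
    for i, label in enumerate(labels):
        fused = weighted_ensemble([model[i] for model in all_probs], weights)
        if fused.index(max(fused)) == label:
            correct += 1
    return correct / len(labels)


def random_search_weights(all_probs, labels, trials=200, seed=0):
    """Stand-in optimizer (random search, NOT SSA) over ensemble weights."""
    rng = random.Random(seed)
    best_weights = [1.0] * len(all_probs)
    best_accuracy = accuracy(all_probs, labels, best_weights)
    for _ in range(trials):
        candidate = [rng.random() + 1e-9 for _ in all_probs]
        score = accuracy(all_probs, labels, candidate)
        if score > best_accuracy:
            best_weights, best_accuracy = candidate, score
    return best_weights, best_accuracy


# Toy validation set: two models, two samples, two classes.
toy_probs = [
    [[0.9, 0.1], [0.4, 0.6]],   # model 0
    [[0.2, 0.8], [0.3, 0.7]],   # model 1
]
toy_labels = [0, 1]
weights, score = random_search_weights(toy_probs, toy_labels)
```

Any population-based optimizer can replace `random_search_weights` as long as it maximizes the same validation objective.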

PassionNet: An Innovative Framework for Duplicate and Conflicting Requirements Identification

Dec 02, 2024

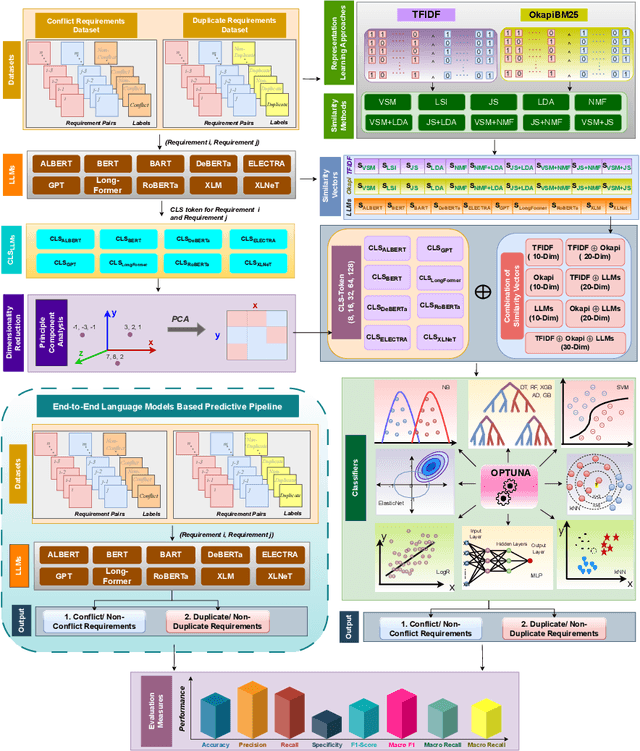

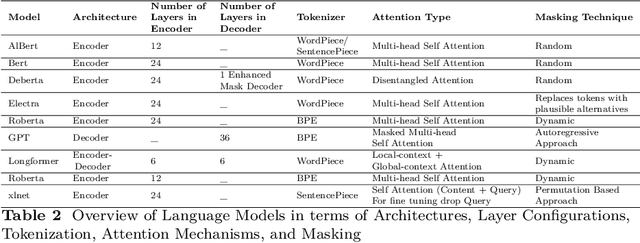

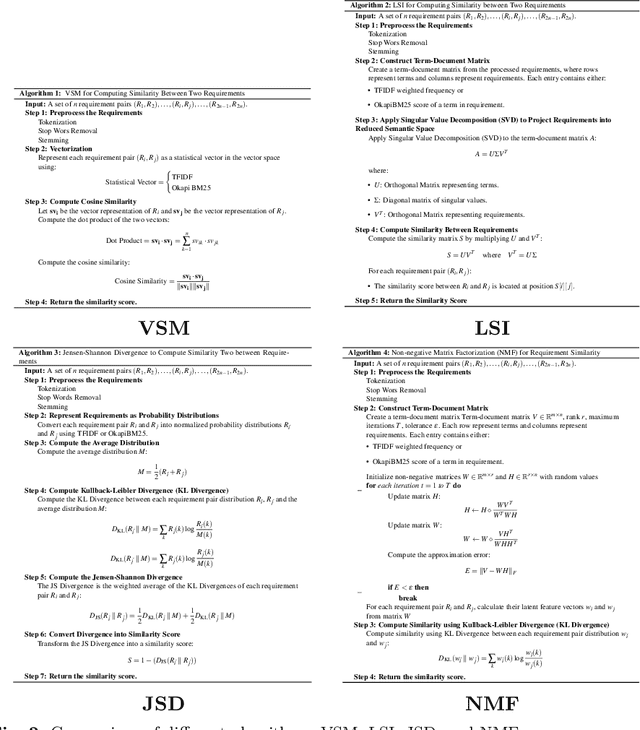

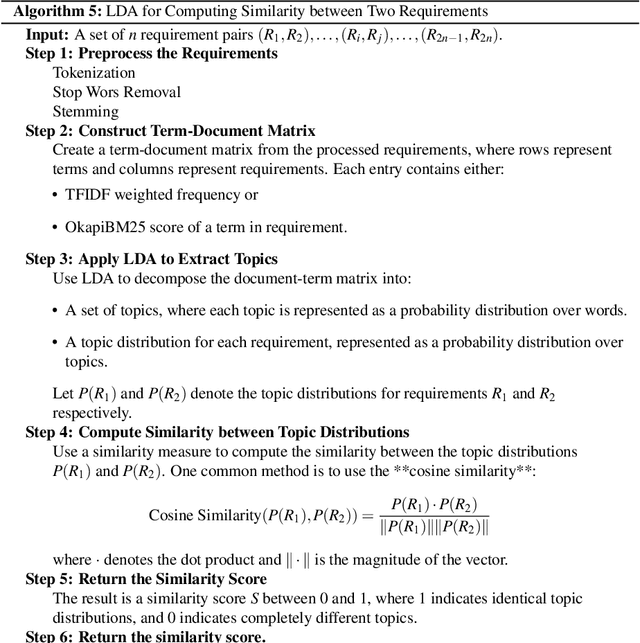

Abstract: Early detection and resolution of duplicate and conflicting requirements can significantly enhance project efficiency and overall software quality. Researchers have developed various computational predictors that leverage Artificial Intelligence (AI) to detect duplicate and conflicting requirements. However, these predictors underperform, and more effective approaches are required to empower software development processes. To meet the need for a predictor that can accurately identify duplicate and conflicting requirements, this research offers a comprehensive framework that facilitates the development of 3 different types of predictive pipelines: language model based, multi-model similarity knowledge-driven, and large language model (LLM) context + multi-model similarity knowledge-driven. In the first type, the framework facilitates conflicting/duplicate requirements identification by leveraging 8 distinct types of LLMs. In the second type, the framework supports the development of predictive pipelines that leverage multi-scale and multi-model similarity knowledge, ranging from traditional similarity computation methods to advanced similarity vectors generated by LLMs. In the third type, the framework synthesizes predictive pipelines by integrating contextual insights from LLMs with multi-model similarity knowledge. Across 6 public benchmark datasets, extensive testing of 760 distinct predictive pipelines demonstrates that hybrid predictive pipelines consistently outperform the other two types in accurately identifying duplicate and conflicting requirements. The hybrid predictive pipelines outperform existing state-of-the-art predictors by an overall margin of 13% in terms of F1-score.
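
As a small illustration of the "traditional similarity computation" end of the second pipeline type, the sketch below turns a pair of requirement statements into a vector of similarity scores that a downstream classifier could consume. The choice of Jaccard and bag-of-words cosine, the whitespace tokenizer, and the function names are assumptions for this example, not the measures used in the paper.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenization (deliberately simplistic)."""
    return text.lower().split()


def jaccard(a, b):
    """Overlap of the two token sets divided by their union."""
    set_a, set_b = set(tokenize(a)), set(tokenize(b))
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def cosine(a, b):
    """Cosine similarity between bag-of-words count vectors."""
    counts_a, counts_b = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(counts_a[token] * counts_b[token] for token in counts_a)
    norm_a = math.sqrt(sum(v * v for v in counts_a.values()))
    norm_b = math.sqrt(sum(v * v for v in counts_b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_features(a, b):
    """Stack several similarity scores into one feature vector for a pair."""
    return [jaccard(a, b), cosine(a, b)]


req_1 = "The system shall log all failed login attempts"
req_2 = "The system shall record all failed login attempts"
features = similarity_features(req_1, req_2)
```

Lexical scores like these can flag near-duplicates but cannot tell a duplicate from a conflict, which is one motivation for adding contextual representations.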

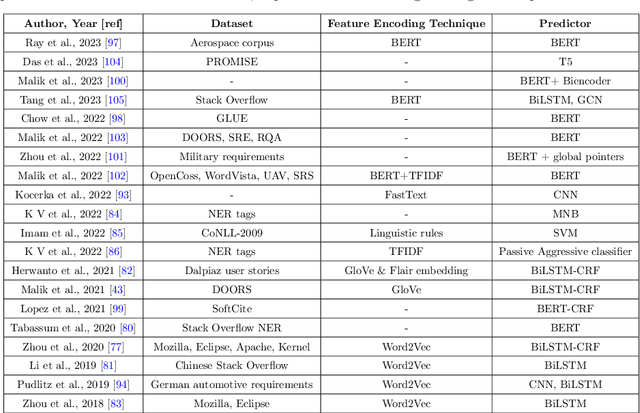

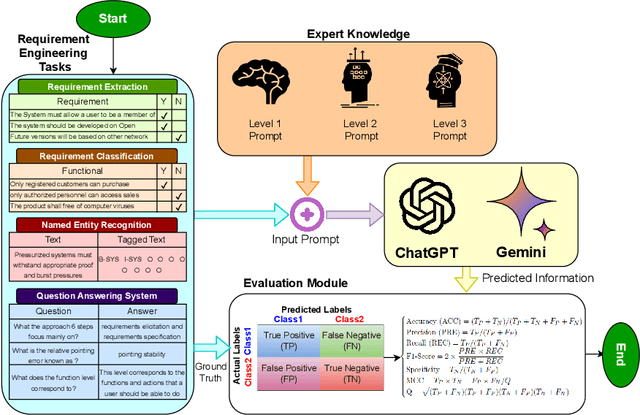

Generative Language Models Potential for Requirement Engineering Applications: Insights into Current Strengths and Limitations

Dec 01, 2024

Abstract: Traditional language models have been extensively evaluated in the software engineering domain; however, the potential of ChatGPT and Gemini has not been fully explored. To fill this gap, this paper presents a comprehensive case study that investigates the potential of both language models for the development of diverse types of requirement engineering applications. It explores in depth the impact of varying levels of expert knowledge in prompts on the prediction accuracy of both language models. Across 4 different public benchmark datasets of requirement engineering tasks, it compares the performance of both language models with existing task-specific machine/deep learning predictors and traditional language models. Specifically, the paper utilizes 4 benchmark datasets: Pure (7,445 samples, requirements extraction), PROMISE (622 samples, requirements classification), REQuestA (300 question answer (QA) pairs), and Aerospace (6,347 words, requirements NER tagging). Our experiments reveal that, in comparison to ChatGPT, Gemini requires more careful prompt engineering to provide accurate predictions. On the requirements extraction benchmark dataset, the state-of-the-art F1-score is 0.86, while ChatGPT and Gemini achieve 0.76 and 0.77, respectively. The state-of-the-art F1-score on the requirements classification dataset is 0.96, and both language models achieve 0.78. In the named entity recognition (NER) task, the state-of-the-art F1-score is 0.92, while ChatGPT achieves 0.36 and Gemini 0.25. Similarly, on the question answering dataset, the state-of-the-art F1-score is 0.90, and ChatGPT and Gemini achieve 0.91 and 0.88, respectively. Except for question answering, both models underperform current state-of-the-art predictors.

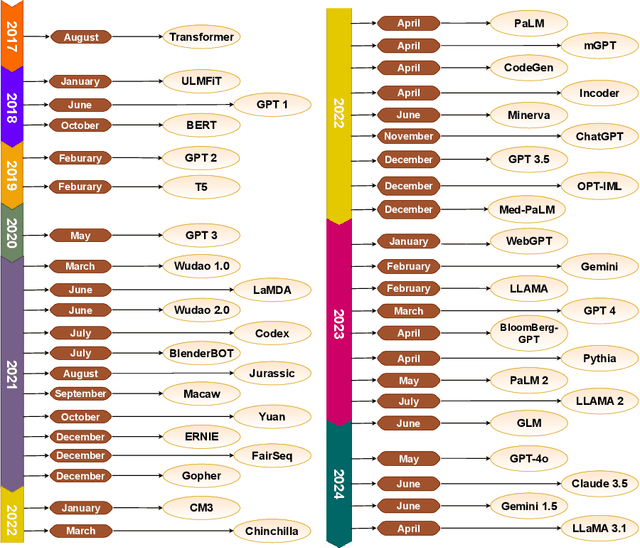

Quantitative knowledge retrieval from large language models

Feb 12, 2024

Abstract: Large language models (LLMs) have been extensively studied for their ability to generate convincing natural language sequences; however, their utility for quantitative information retrieval is less well understood. In this paper we explore the feasibility of LLMs as a mechanism for quantitative knowledge retrieval to aid data analysis tasks such as elicitation of prior distributions for Bayesian models and imputation of missing data. We present a prompt engineering framework, treating an LLM as an interface to a latent space of scientific literature, comparing responses in different contexts and domains against more established approaches. Implications and challenges of using LLMs as 'experts' are discussed.

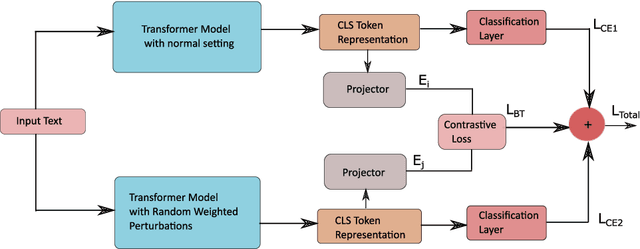

A Unique Training Strategy to Enhance Language Models Capabilities for Health Mention Detection from Social Media Content

Oct 29, 2023

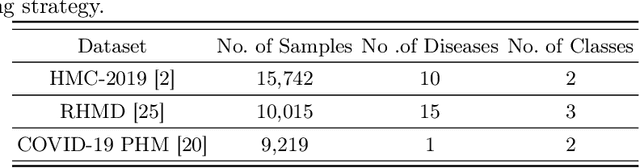

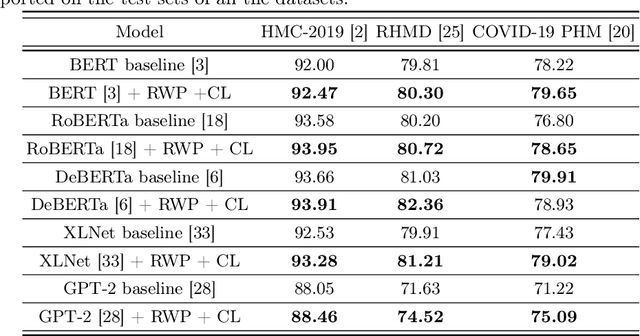

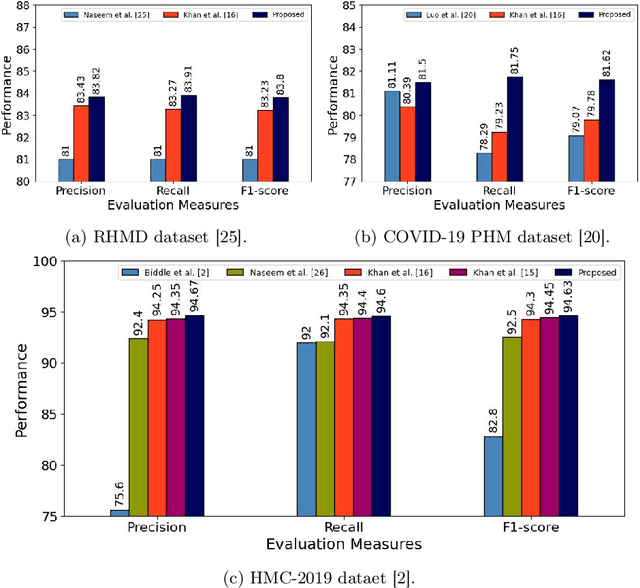

Abstract: An ever-increasing amount of social media content requires advanced AI-based computer programs capable of extracting useful information. Specifically, the extraction of health-related content from social media is useful for the development of diverse types of applications, including disease spread, mortality rate prediction, and finding the impact of diverse types of drugs on diverse types of diseases. Language models are competent in extracting the syntax and semantics of text. However, they struggle to extract similar patterns from social media texts. The primary reason for this shortfall lies in the non-standardized writing style commonly employed by social media users. To meet the need for a language model capable of extracting useful patterns from social media text, the key goal of this paper is to train language models in such a way that they learn to derive generalized patterns. This goal is achieved through the incorporation of random weighted perturbation and contrastive learning strategies. On top of this unique training strategy, a meta predictor is proposed that reaps the benefits of 5 different language models for classifying social media posts into non-health and health-related classes. Comprehensive experimentation across 3 public benchmark datasets reveals that the proposed training strategy improves the performance of the language models by up to 3.87% in terms of F1-score, as compared to their performance with traditional training. Furthermore, the proposed meta predictor outperforms existing health mention classification predictors across all 3 benchmark datasets.
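
The two training ingredients named above can be sketched in a few lines. This is a generic illustration, not the paper's recipe: modelling the perturbation as Gaussian noise added to a weight vector, using an InfoNCE-style contrastive loss, and every name and hyperparameter below are assumptions.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def perturb(weights, scale=0.01, seed=None):
    """Add small Gaussian noise to each weight (assumed perturbation form)."""
    rng = random.Random(seed)
    return [w + rng.gauss(0.0, scale) for w in weights]


def contrastive_loss(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss: small when the anchor is closest to its positive."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Embedding of a post and of a noisy-spelling variant should end up close,
# while an unrelated post acts as the negative.
aligned = contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
misaligned = contrastive_loss([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

Minimizing such a loss pulls representations of equivalent posts together, which is one way a model can become less sensitive to non-standard spelling.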

A Precisely Xtreme-Multi Channel Hybrid Approach For Roman Urdu Sentiment Analysis

Mar 11, 2020

Abstract: In order to accelerate the performance of various Natural Language Processing tasks for Roman Urdu, this paper for the very first time provides 3 neural word embeddings prepared using the most widely used approaches, namely Word2Vec, FastText, and GloVe. The integrity of the generated neural word embeddings is evaluated using intrinsic and extrinsic evaluation approaches. Considering the lack of publicly available benchmark datasets, it provides a first-ever Roman Urdu dataset which consists of 3241 sentiments annotated against positive, negative, and neutral classes. To provide benchmark baseline performance over the presented dataset, we adapt diverse machine learning (Support Vector Machine, Logistic Regression, Naive Bayes), deep learning (convolutional neural network, recurrent neural network), and hybrid approaches. The effectiveness of the generated neural word embeddings is evaluated by comparing the performance of machine and deep learning based methodologies using 7 and 5 distinct feature representation approaches, respectively. Finally, it proposes a novel precisely extreme multi-channel hybrid methodology which outperforms state-of-the-art adapted machine and deep learning approaches by 9% and 4%, respectively, in terms of F1-score.

Keywords: Roman Urdu Sentiment Analysis, Pretrain word embeddings for Roman Urdu, Word2Vec, Glove, Fast-Text
