Moussab Bennehar

SAIL: Self-supervised Albedo Estimation from Real Images with a Latent Diffusion Model

May 26, 2025

Abstract:Intrinsic image decomposition aims at separating an image into its underlying albedo and shading components, isolating the base color from lighting effects to enable downstream applications such as virtual relighting and scene editing. Despite the rise and success of learning-based approaches, intrinsic image decomposition from real-world images remains a significant challenge due to the scarcity of labeled ground-truth data. Most existing solutions rely on synthetic data in supervised setups, limiting their ability to generalize to real-world scenes. Self-supervised methods, on the other hand, often produce albedo maps that contain reflections and lack consistency under different lighting conditions. To address this, we propose SAIL, an approach designed to estimate albedo-like representations from single-view real-world images. We repurpose the prior knowledge of a latent diffusion model for unconditioned scene relighting as a surrogate objective for albedo estimation. To extract the albedo, we introduce a novel intrinsic image decomposition fully formulated in the latent space. To guide the training of our latent diffusion model, we introduce regularization terms that constrain both the lighting-dependent and independent components of our latent image decomposition. SAIL predicts stable albedo under varying lighting conditions and generalizes to multiple scenes, using only unlabeled multi-illumination data available online.

Pose Optimization for Autonomous Driving Datasets using Neural Rendering Models

Apr 22, 2025

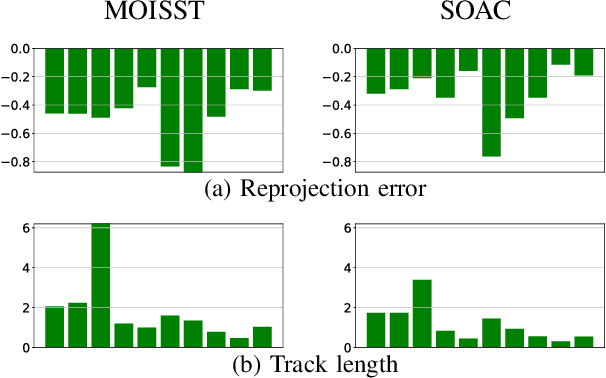

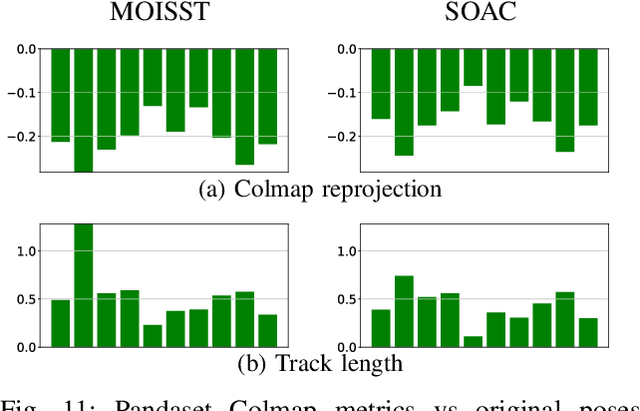

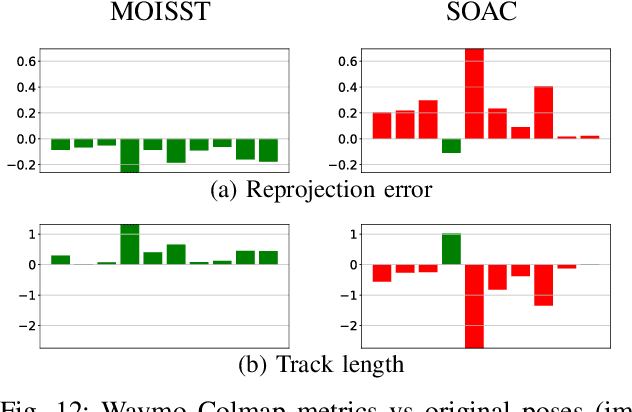

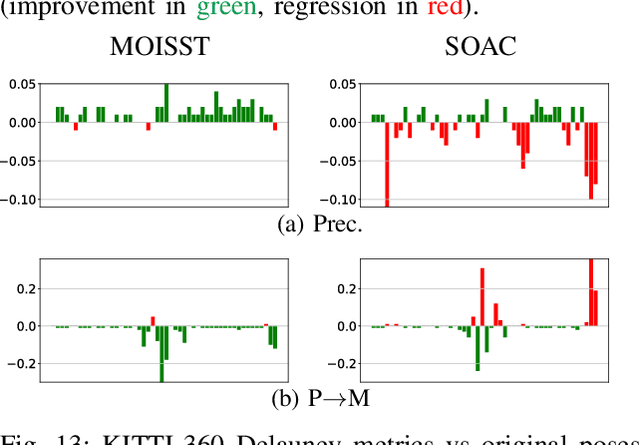

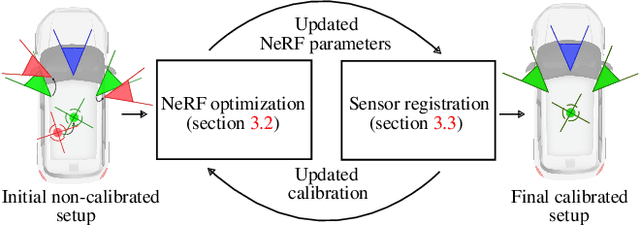

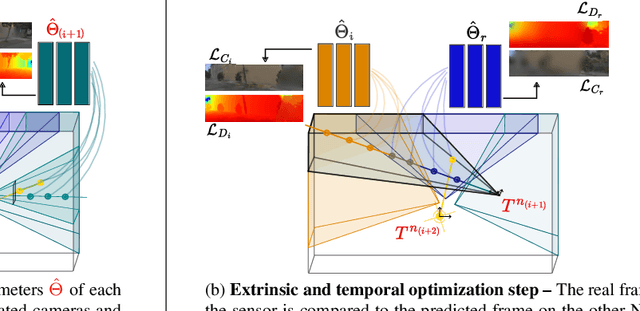

Abstract:Autonomous driving systems rely on accurate perception and localization of the ego car to ensure safety and reliability in challenging real-world driving scenarios. Public datasets play a vital role in benchmarking and guiding advancement in research by providing standardized resources for model development and evaluation. However, potential inaccuracies in sensor calibration and vehicle poses within these datasets can lead to erroneous evaluations of downstream tasks, adversely impacting the reliability and performance of the autonomous systems. To address this challenge, we propose a robust optimization method based on Neural Radiance Fields (NeRF) to refine sensor poses and calibration parameters, enhancing the integrity of dataset benchmarks. To validate improvement in accuracy of our optimized poses without ground truth, we present a thorough evaluation process, relying on reprojection metrics, Novel View Synthesis rendering quality, and geometric alignment. We demonstrate that our method achieves significant improvements in sensor pose accuracy. By optimizing these critical parameters, our approach not only improves the utility of existing datasets but also paves the way for more reliable autonomous driving models. To foster continued progress in this field, we make the optimized sensor poses publicly available, providing a valuable resource for the research community.

CoStruction: Conjoint radiance field optimization for urban scene reconStruction with limited image overlap

Jan 07, 2025

Abstract:Reconstructing the surrounding surface geometry from recorded driving sequences poses a significant challenge due to the limited image overlap and complex topology of urban environments. SoTA neural implicit surface reconstruction methods often struggle in such settings, either failing due to small vision overlap or exhibiting suboptimal performance in accurately reconstructing both the surface and fine structures. To address these limitations, we introduce CoStruction, a novel hybrid implicit surface reconstruction method tailored for large driving sequences with limited camera overlap. CoStruction leverages cross-representation uncertainty estimation to filter out ambiguous geometry caused by limited observations. Our method performs joint optimization of both radiance fields in addition to guided sampling, achieving accurate reconstruction of large areas along with fine structures in complex urban scenarios. Extensive evaluation on major driving datasets demonstrates the superiority of our approach in reconstructing large driving sequences with limited image overlap, outperforming concurrent SoTA methods.

Pointmap-Conditioned Diffusion for Consistent Novel View Synthesis

Jan 06, 2025

Abstract:In this paper, we present PointmapDiffusion, a novel framework for single-image novel view synthesis (NVS) that utilizes pre-trained 2D diffusion models. Our method is the first to leverage pointmaps (i.e., rasterized 3D scene coordinates) as a conditioning signal, capturing a geometric prior from the reference images to guide the diffusion process. By embedding reference attention blocks and a ControlNet for pointmap features, our model balances generative capability and geometric consistency, enabling accurate view synthesis across varying viewpoints. Extensive experiments on diverse real-world datasets demonstrate that PointmapDiffusion achieves high-quality, multi-view consistent results with significantly fewer trainable parameters compared to other baselines for single-image NVS tasks.

3DGS-Calib: 3D Gaussian Splatting for Multimodal SpatioTemporal Calibration

Mar 18, 2024

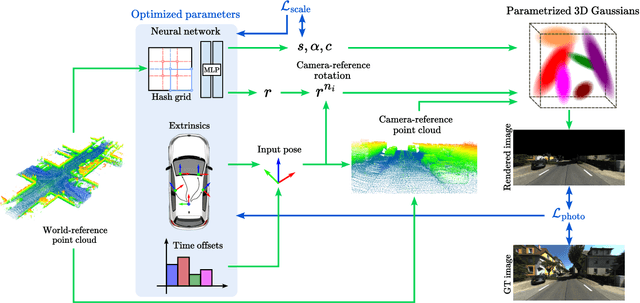

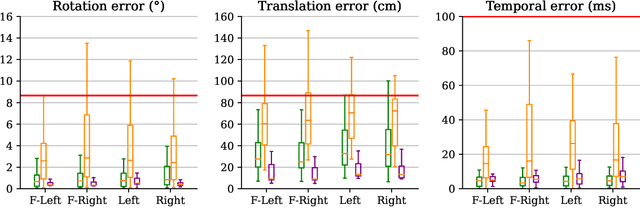

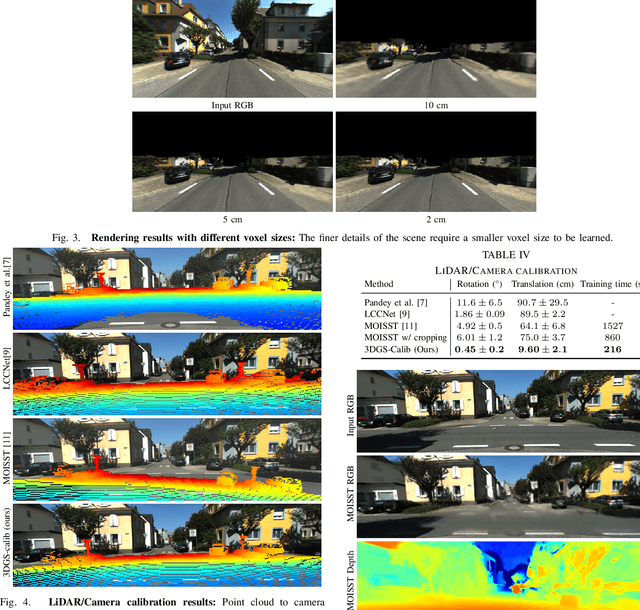

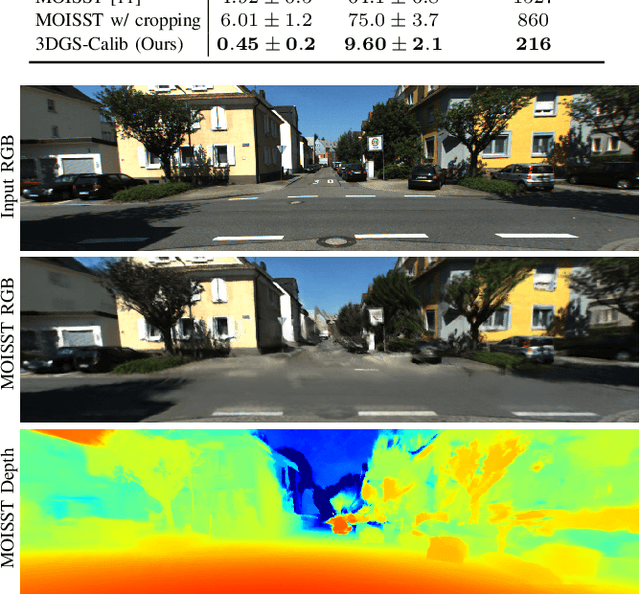

Abstract:Reliable multimodal sensor fusion algorithms require accurate spatiotemporal calibration. Recently, targetless calibration techniques based on implicit neural representations have proven to provide precise and robust results. Nevertheless, such methods are inherently slow to train given the high computational overhead caused by the large number of sampled points required for volume rendering. With the recent introduction of 3D Gaussian Splatting as a faster alternative to implicit representation methods, we propose to leverage this new rendering approach to achieve faster multi-sensor calibration. We introduce 3DGS-Calib, a new calibration method that relies on the speed and rendering accuracy of 3D Gaussian Splatting to achieve multimodal spatiotemporal calibration that is accurate, robust, and substantially faster than methods relying on implicit neural representations. We demonstrate the superiority of our proposal with experimental results on sequences from KITTI-360, a widely used driving dataset.

SCILLA: SurfaCe Implicit Learning for Large Urban Area, a volumetric hybrid solution

Mar 15, 2024

Abstract:Neural implicit surface representation methods have recently shown impressive 3D reconstruction results. However, existing solutions struggle to reconstruct urban outdoor scenes due to their large, unbounded, and highly detailed nature. Hence, to achieve accurate reconstructions, additional supervision data such as LiDAR, strong geometric priors, and long training times are required. To tackle such issues, we present SCILLA, a new hybrid implicit surface learning method to reconstruct large driving scenes from 2D images. SCILLA's hybrid architecture models two separate implicit fields: one for the volumetric density and another for the signed distance to the surface. To accurately represent urban outdoor scenarios, we introduce a novel volume-rendering strategy that relies on self-supervised probabilistic density estimation to sample points near the surface and transition progressively from volumetric to surface representation. Our solution permits a proper and fast initialization of the signed distance field without relying on any geometric prior on the scene, compared to concurrent methods. By conducting extensive experiments on four outdoor driving datasets, we show that SCILLA can learn an accurate and detailed 3D surface scene representation in various urban scenarios while being two times faster to train compared to previous state-of-the-art solutions.

SWAG: Splatting in the Wild images with Appearance-conditioned Gaussians

Mar 15, 2024

Abstract:Implicit neural representation methods have shown impressive advancements in learning 3D scenes from unstructured in-the-wild photo collections but are still limited by the large computational cost of volumetric rendering. More recently, 3D Gaussian Splatting emerged as a much faster alternative with superior rendering quality and training efficiency, especially for small-scale and object-centric scenarios. Nevertheless, this technique suffers from poor performance on unstructured in-the-wild data. To tackle this, we extend 3D Gaussian Splatting to handle unstructured image collections. We achieve this by modeling appearance to capture photometric variations in the rendered images. Additionally, we introduce a new mechanism to train transient Gaussians to handle the presence of scene occluders in an unsupervised manner. Experiments on diverse photo collection scenes and multi-pass acquisition of outdoor landmarks show the effectiveness of our method over prior works, achieving state-of-the-art results with improved efficiency.

RoDUS: Robust Decomposition of Static and Dynamic Elements in Urban Scenes

Mar 14, 2024

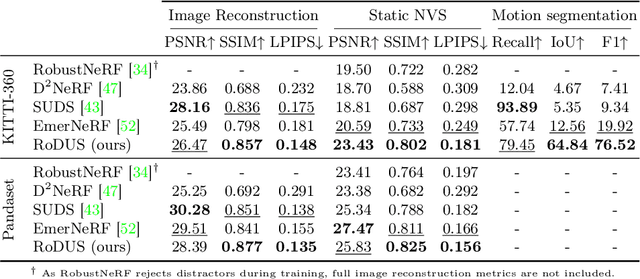

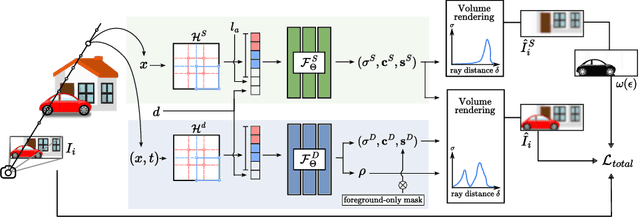

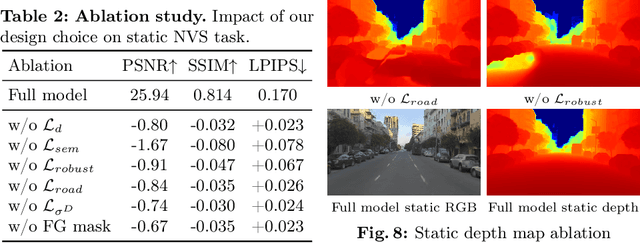

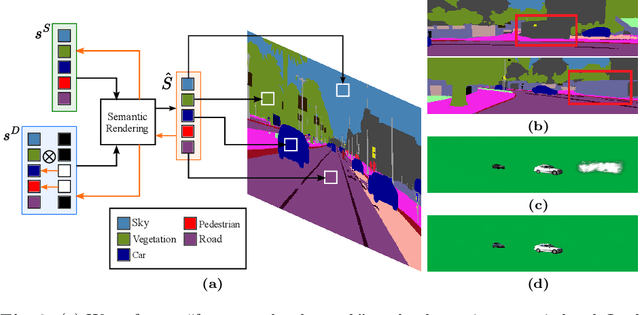

Abstract:The task of separating dynamic objects from static environments using NeRFs has been widely studied in recent years. However, capturing large-scale scenes still poses a challenge due to their complex geometric structures and unconstrained dynamics. Without the help of 3D motion cues, previous methods often require simplified setups with slow camera motion and only one or a few dynamic actors, leading to suboptimal solutions in most urban setups. To overcome such limitations, we present RoDUS, a pipeline for decomposing static and dynamic elements in urban scenes, with thoughtfully separated NeRF models for moving and non-moving components. Our approach utilizes a robust kernel-based initialization coupled with 4D semantic information to selectively guide the learning process. This strategy enables accurate capturing of the dynamics in the scene, resulting in reduced artifacts caused by NeRF on background reconstruction, all by using self-supervision. Notably, experimental evaluations on KITTI-360 and Pandaset datasets demonstrate the effectiveness of our method in decomposing challenging urban scenes into precise static and dynamic components.

SOAC: Spatio-Temporal Overlap-Aware Multi-Sensor Calibration using Neural Radiance Fields

Nov 27, 2023

Abstract:In rapidly evolving domains such as autonomous driving, the use of multiple sensors with different modalities is crucial to ensure high operational precision and stability. To correctly exploit the information provided by each sensor in a single common frame, it is essential for these sensors to be accurately calibrated. In this paper, we leverage the ability of Neural Radiance Fields (NeRF) to represent different sensor modalities in a common volumetric representation to achieve robust and accurate spatio-temporal sensor calibration. By designing a partitioning approach based on the visible part of the scene for each sensor, we formulate the calibration problem using only the overlapping areas. This strategy results in a more robust and accurate calibration that is less prone to failure. We demonstrate that our approach works on outdoor urban scenes by validating it on multiple established driving datasets. Results show that our method achieves better accuracy and robustness compared to existing methods.

PlaNeRF: SVD Unsupervised 3D Plane Regularization for NeRF Large-Scale Scene Reconstruction

Jun 06, 2023

Abstract:Neural Radiance Fields (NeRF) enable 3D scene reconstruction from 2D images and camera poses for Novel View Synthesis (NVS). Although NeRF can produce photorealistic results, it often suffers from overfitting to training views, leading to poor geometry reconstruction, especially in low-texture areas. This limitation restricts many important applications that require accurate geometry, such as extrapolated NVS, HD mapping, and scene editing. To address this limitation, we propose a new method to improve NeRF's 3D structure using only RGB images and semantic maps. Our approach introduces a novel plane regularization based on Singular Value Decomposition (SVD) that does not rely on any geometric prior. In addition, we leverage the Structural Similarity Index Measure (SSIM) in our loss design to properly initialize the volumetric representation of NeRF. Quantitative and qualitative results show that our method outperforms popular regularization approaches in accurate geometry reconstruction for large-scale outdoor scenes and achieves SoTA rendering quality on the KITTI-360 NVS benchmark.
