Mohamed Bouri

Adaptive Negative Damping Control for User-Dependent Multi-Terrain Walking Assistance with a Hip Exoskeleton

Mar 05, 2025

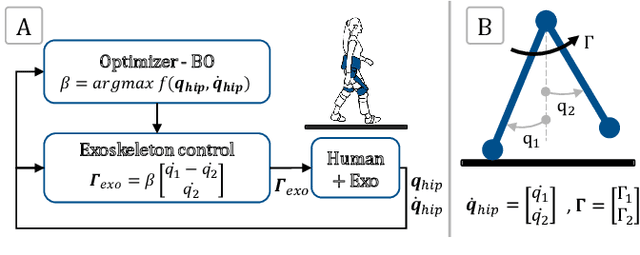

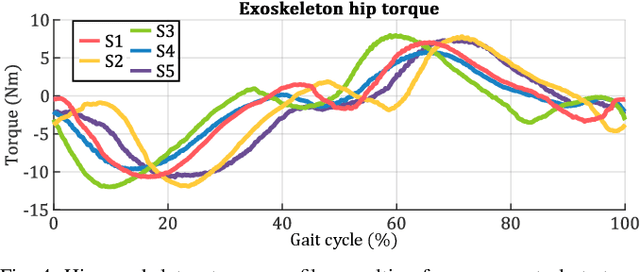

Abstract: Hip exoskeletons are known for their versatility in assisting users across varied scenarios. However, current assistive strategies often lack the flexibility to accommodate individual walking patterns and to adapt to diverse locomotion environments. In this work, we present a novel control strategy that adapts the mechanical impedance of the human-exoskeleton system. We design the hip assistive torques as an adaptive virtual negative damping, which injects energy into the system while allowing users to remain in control and contribute voluntarily to the movements. Experiments with five healthy subjects demonstrate that our controller reduces the metabolic cost of walking compared to free walking (average reduction of 7.2%) while preserving lower-limb kinematics. Additionally, our method achieves minimal power losses from the exoskeleton across the entire gait cycle (negative mechanical power below 2% of the total power), ensuring that the device acts in synchrony with the users' movements. Moreover, we use Bayesian Optimization to adapt the assistance strength, enabling seamless adaptation and transitions across multi-terrain environments. Our strategy achieves efficient power transmission under all tested conditions. Our approach demonstrates an individualized, adaptable, and straightforward controller for hip exoskeletons, advancing the development of viable, adaptive, and user-dependent control laws.

* Copyright 2025 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.
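The core idea of a virtual negative damping can be illustrated in a few lines. A passive damper applies a torque of -b times the joint velocity and therefore always absorbs energy; flipping the sign gives a torque along the joint velocity, so the mechanical power tau * qdot = b * qdot^2 is never negative. This is a minimal sketch assuming a single hip joint, a fixed non-negative gain, and a hypothetical torque limit; the paper adapts the gain with Bayesian Optimization, which is not reproduced here.

```python
def negative_damping_torque(hip_velocity: float, gain: float, max_torque: float) -> float:
    """Virtual negative damping: torque proportional to, and in the direction of, hip velocity.

    gain and max_torque are illustrative parameters, not values from the paper.
    """
    if gain < 0:
        raise ValueError("gain must be non-negative for negative damping")
    # A passive damper would output -gain * velocity; the positive sign injects energy.
    torque = gain * hip_velocity
    # Clip to a hypothetical actuator limit (clipping keeps the sign, so power stays >= 0).
    return max(-max_torque, min(max_torque, torque))


def mechanical_power(torque: float, hip_velocity: float) -> float:
    """Power delivered by the device to the joint (positive means energy injected)."""
    return torque * hip_velocity


# Usage: power stays non-negative for both flexion (+) and extension (-) velocities.
for qdot in (-3.0, -0.5, 0.0, 0.5, 3.0):  # rad/s, illustrative
    tau = negative_damping_torque(qdot, gain=2.0, max_torque=5.0)
    print(f"qdot={qdot:+.1f} rad/s  tau={tau:+.1f} Nm  P={mechanical_power(tau, qdot):.2f} W")
```

In this sketch the "adaptive" part would amount to changing `gain` between strides or terrains; here it is simply a function argument.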

Rapid Online Learning of Hip Exoskeleton Assistance Preferences

Feb 21, 2025

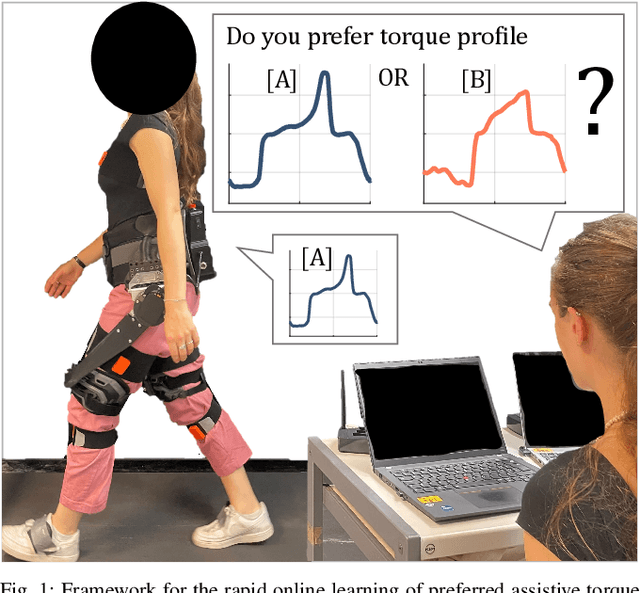

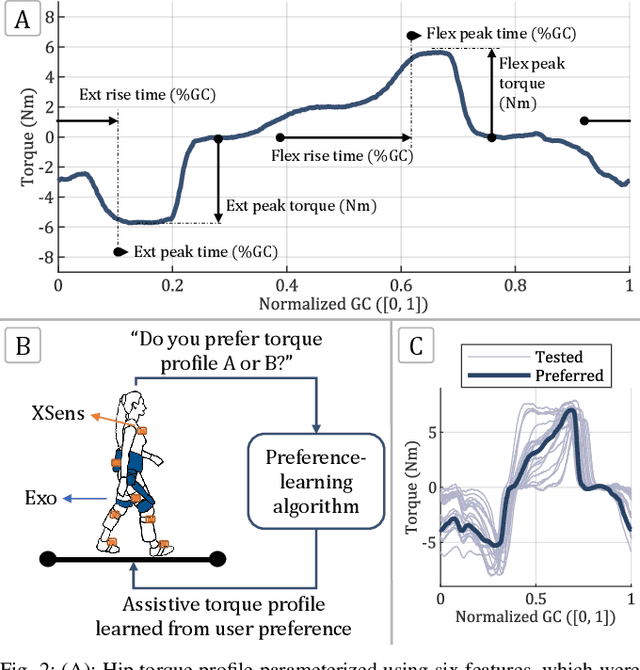

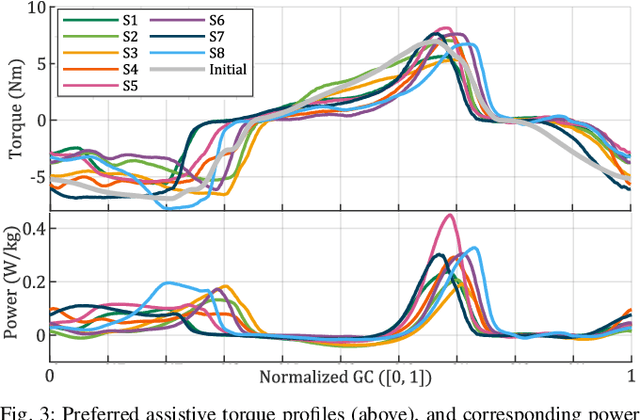

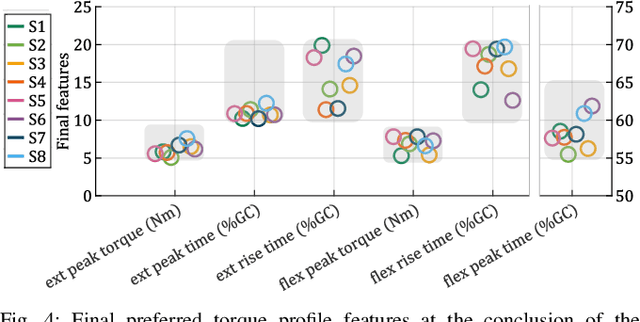

Abstract: Hip exoskeletons are increasing in popularity due to their effectiveness across various scenarios and their ability to adapt to different users. However, personalizing the assistance often requires lengthy tuning procedures and computationally intensive algorithms, and most existing methods do not incorporate user feedback. In this work, we propose a novel approach for rapidly learning users' preferences for hip exoskeleton assistance. We perform pairwise comparisons of distinct, randomly generated assistive profiles and collect participants' preferences through active querying. Users' feedback is integrated into a preference-learning algorithm that updates its belief, learns a user-dependent reward function, and changes the assistive torque profiles accordingly. Results from eight healthy subjects show distinct preferred torque profiles, and users' choices remain consistent when compared against a perturbed profile. A comprehensive evaluation of users' preferences reveals a close relationship with individual walking strategies. The tested torque profiles do not disrupt kinematic joint synergies, and participants favor assistive torques that are synchronized with their movements, resulting in lower negative power from the device. This straightforward approach enables rapid learning of users' preferences and rewards, laying the groundwork for future studies on reward-based human-exoskeleton interaction.

* Copyright 2025 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.
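A common way to turn pairwise choices into a reward function is a logistic (Bradley-Terry) preference model with a reward that is linear in profile features. The sketch below assumes exactly that and uses a plain gradient update in place of the Bayesian belief update and active query selection the abstract describes. The feature vectors (for example, peak torque and timing of a profile) and the simulated user are hypothetical.

```python
import math
import random
from typing import List, Sequence


def preference_probability(w: Sequence[float], feat_a: Sequence[float], feat_b: Sequence[float]) -> float:
    """P(user prefers profile A over B) under a logistic (Bradley-Terry) model.

    The reward of a profile is assumed linear in its features: r(x) = w . x.
    """
    reward_gap = sum(wi * (a - b) for wi, a, b in zip(w, feat_a, feat_b))
    return 1.0 / (1.0 + math.exp(-reward_gap))


def update_weights(w: Sequence[float], feat_a: Sequence[float], feat_b: Sequence[float],
                   a_preferred: bool, lr: float = 0.5) -> List[float]:
    """One gradient-ascent step on the log-likelihood of the observed choice."""
    p = preference_probability(w, feat_a, feat_b)
    y = 1.0 if a_preferred else 0.0
    return [wi + lr * (y - p) * (a - b) for wi, a, b in zip(w, feat_a, feat_b)]


# Usage: a simulated user who always picks the profile with the larger first feature.
rng = random.Random(0)
w = [0.0, 0.0]
for _ in range(50):
    a = [rng.uniform(0, 1), rng.uniform(0, 1)]
    b = [rng.uniform(0, 1), rng.uniform(0, 1)]
    w = update_weights(w, a, b, a_preferred=a[0] > b[0])
print("learned reward weights:", w)
```

Because every observed choice agrees with the sign of the first feature difference, each update can only increase the first weight, so the learned reward ranks profiles by that feature.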

Locomotion Mode Transitions: Tackling System- and User-Specific Variability in Lower-Limb Exoskeletons

Nov 20, 2024

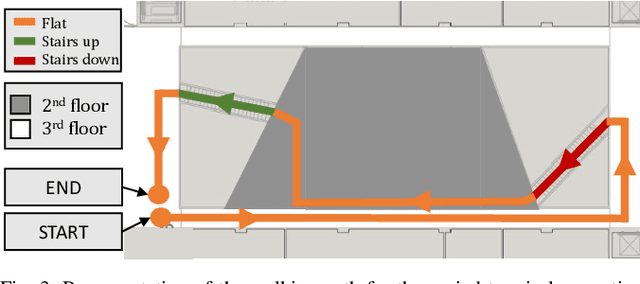

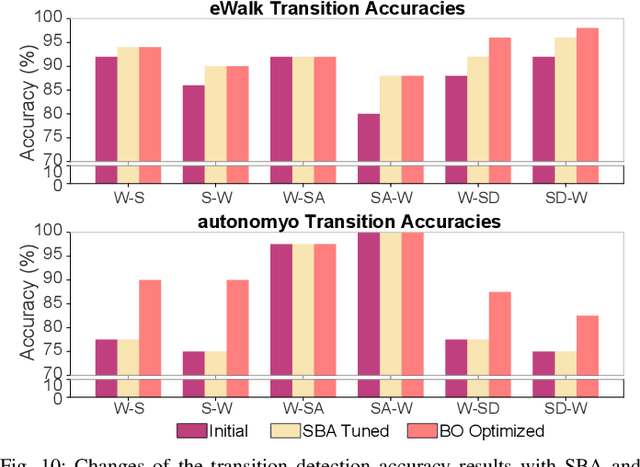

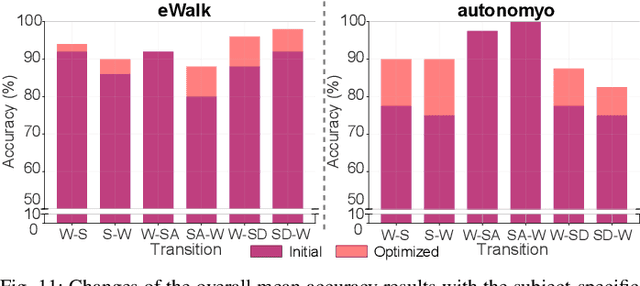

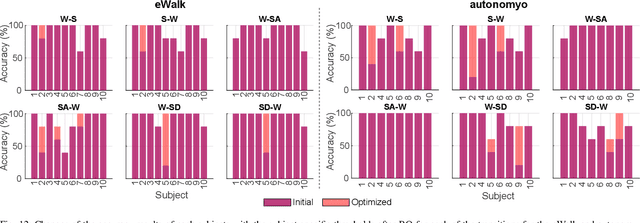

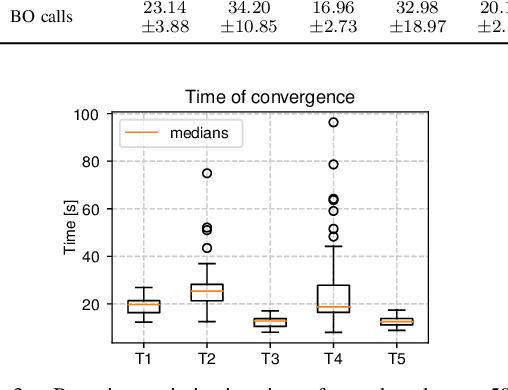

Abstract: Accurate detection of locomotion transitions, such as walk-to-sit, walk-to-stair-ascent, and walk-to-stair-descent, is crucial for effectively controlling robotic assistive devices such as lower-limb exoskeletons, since each locomotion mode requires specific assistance. Variability in the collected sensor data, introduced by user- or system-specific characteristics, makes it challenging for non-adaptive classification models to maintain high transition detection accuracy while avoiding latency. In this study, we identified key factors influencing transition detection performance, including variations in user behavior and differences in the mechanical designs of the exoskeletons. To boost transition detection accuracy, we introduced two methods for adapting a finite-state machine classifier to system- and user-specific variability: a Statistics-Based approach and Bayesian Optimization. Our experimental results demonstrate that both methods substantially improve transition detection accuracy across diverse users, achieving up to an 80% increase in certain scenarios compared to the non-personalized threshold method. These findings emphasize the importance of personalization in adaptive control systems and underscore its potential to enhance the user experience and effectiveness of assistive devices. By incorporating subject- and system-specific data into the model training process, our approach offers a precise and reliable solution for detecting locomotion transitions that caters to individual user needs and ultimately improves the performance of assistive devices.
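The abstract does not detail the Statistics-Based approach. One plausible reading, sketched here purely as an assumption, is to replace a fixed, non-personalized threshold with one derived from each user's own calibration data, for example the mean plus k standard deviations of a feature recorded during steady walking. The feature, the calibration values, and k are hypothetical.

```python
import statistics
from typing import Sequence


def personalized_threshold(calibration: Sequence[float], k: float = 3.0) -> float:
    """Threshold = mean + k * sample standard deviation of a user's steady-walking feature.

    Values above the threshold are unlikely under that user's level walking,
    so crossing it can flag a candidate transition in the state machine.
    """
    if len(calibration) < 2:
        raise ValueError("need at least two calibration samples")
    return statistics.mean(calibration) + k * statistics.stdev(calibration)


# Usage: two hypothetical users with different walking patterns receive different
# thresholds for the same feature (e.g. peak hip flexion in degrees).
user_a = [28.0, 30.0, 32.0, 29.0, 31.0]
user_b = [38.0, 41.0, 44.0, 40.0, 42.0]
print(personalized_threshold(user_a), personalized_threshold(user_b))
```

A single global threshold would either miss transitions for user A or fire during normal walking for user B; per-user statistics avoid that trade-off in this toy setting.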

Generalizing Segmentation Foundation Model Under Sim-to-real Domain-shift for Guidewire Segmentation in X-ray Fluoroscopy

Oct 09, 2024

Abstract: Guidewire segmentation during endovascular interventions has the potential to significantly enhance procedural accuracy by improving visualization and providing critical feedback that can support both physicians and robotic systems in navigating complex vascular pathways. Unlike supervised segmentation networks, which need many expensive expert-annotated labels, sim-to-real domain adaptation approaches use synthetic data from simulations, offering a cost-effective solution. The success of models like Segment Anything (SAM) has driven advances in image segmentation foundation models with strong zero-/few-shot generalization through prompt engineering. However, these models struggle with medical images such as X-ray fluoroscopy and with domain shifts in the data. Given the difficulty of acquiring annotations and the accessibility of labeled simulation data, we propose a sim-to-real domain adaptation framework with a coarse-to-fine strategy to adapt SAM to X-ray fluoroscopy guidewire segmentation without any annotation in the target domain. We first generate pseudo-labels using a simple source-image style transfer technique that preserves the guidewire structure. Then, we develop a weakly supervised self-training architecture to fine-tune an end-to-end student SAM with the coarse labels by imposing consistency regularization and supervision from the teacher SAM network. We validate the effectiveness of the proposed method on a publicly available cardiac dataset and an in-house neurovascular dataset, where our method surpasses both pre-trained SAM and many state-of-the-art domain adaptation techniques by a large margin. Our code will be made public on GitHub soon.

ExoRecovery: Push Recovery with a Lower-Limb Exoskeleton based on Stepping Strategy

Oct 31, 2023

Abstract: Balance loss is a significant challenge in lower-limb exoskeleton applications, as it can lead to potential falls and thereby affect user safety and confidence. We introduce a control framework for omnidirectional recovery step planning that optimizes step duration and position online in response to external forces. We map the step duration and position to a human-like foot trajectory, which is then translated into joint trajectories using inverse kinematics. These trajectories are executed via an impedance controller, promoting cooperation between the exoskeleton and the user. Moreover, our framework is based on the concept of the divergent component of motion, also known as the Extrapolated Center of Mass, which has been established as a consistent dynamic quantity for describing human movement. This real-time online optimization framework enhances the adaptability of exoskeleton users under unforeseen forces, thereby improving overall user stability and safety. To validate the effectiveness of our approach, simulations and experiments were conducted. Our push recovery experiments with the exoskeleton in zero-torque mode (without assistance) exhibit behavior aligned with the exoskeleton's recovery assistance mode, which shows that the control framework is consistent with human intention. To the best of our knowledge, this is the first cooperative push recovery framework for lower-limb exoskeletons that relies on the simultaneous adaptation of intra-stride parameters in both the frontal and sagittal directions. The proposed control scheme has been validated in experiments with human subjects.
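Under the linear inverted pendulum model commonly used with this concept, the Extrapolated Center of Mass is xi = x + v / omega0 with omega0 = sqrt(g / l), where x and v are the horizontal center-of-mass position and velocity and l is the pendulum (center-of-mass) height. The sketch below computes it independently along the sagittal and frontal axes. It covers only this quantity, not the paper's online optimization of step duration and position, and the numbers in the usage line are illustrative.

```python
import math
from typing import Sequence, Tuple


def extrapolated_com(com_pos: Sequence[float], com_vel: Sequence[float],
                     com_height: float, g: float = 9.81) -> Tuple[float, ...]:
    """Extrapolated Center of Mass (divergent component of motion) per horizontal axis.

    Assumes the linear inverted pendulum model: xi = x + v / omega0, omega0 = sqrt(g / l).
    """
    if com_height <= 0:
        raise ValueError("com_height must be positive")
    omega0 = math.sqrt(g / com_height)
    return tuple(p + v / omega0 for p, v in zip(com_pos, com_vel))


# Usage: a push gives the CoM a forward (sagittal) and lateral (frontal) velocity.
# Positions are relative to the stance foot, in metres; velocities in m/s.
xi = extrapolated_com(com_pos=(0.02, 0.05), com_vel=(0.6, 0.3), com_height=0.9)
# Under this model, placing the next foot at or slightly beyond xi arrests the divergence.
print(xi)
```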

Maximizing Performance with Minimal Resources for Real-Time Transition Detection

Oct 06, 2023

Abstract: Assistive devices, such as exoskeletons and prostheses, have revolutionized the field of rehabilitation and mobility assistance. Efficiently detecting transitions between activities, such as walking, stair ascent and descent, and sitting, is crucial for ensuring adaptive control and enhancing the user experience. Here we present an approach for real-time transition detection aimed at optimizing processing time. By establishing activity-specific threshold values through trained machine learning models, we effectively distinguish motion patterns and identify transition moments between locomotion modes. This threshold-based method speeds up real-time embedded processing by up to 11 times compared to machine learning approaches. The efficacy of the developed finite-state machine is validated using data collected from three different measurement systems. Moreover, experiments with healthy participants were conducted on an active pelvis orthosis to validate the robustness and reliability of our approach. The proposed algorithm achieved high accuracy in detecting transitions between activities. These promising results reinforce the method's potential for integration into practical applications.
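A threshold-based finite-state machine of the kind described can be sketched as a transition function over locomotion modes. Everything specific here is a hypothetical, deliberately simplified placeholder: the subset of modes, the single hip-flexion feature, and the threshold values, which in the paper are instead derived from trained machine learning models. The sketch does show why such a detector is cheap on embedded hardware: each update is a few comparisons rather than a model inference.

```python
from enum import Enum
from typing import Dict


class Mode(Enum):
    WALK = "walk"
    SIT = "sit"
    STAIR_ASCENT = "stair_ascent"


# Hypothetical per-activity thresholds on hip flexion angle (degrees).
THRESHOLDS: Dict[str, float] = {"sit": 70.0, "stair": 45.0, "back_to_walk": 35.0}


def next_mode(mode: Mode, hip_flexion_deg: float,
              thresholds: Dict[str, float] = THRESHOLDS) -> Mode:
    """Return the next locomotion mode given the current mode and one feature sample."""
    if mode is Mode.WALK:
        if hip_flexion_deg > thresholds["sit"]:
            return Mode.SIT
        if hip_flexion_deg > thresholds["stair"]:
            return Mode.STAIR_ASCENT
    elif hip_flexion_deg < thresholds["back_to_walk"]:
        # From SIT or STAIR_ASCENT, a low flexion angle signals a return to walking.
        return Mode.WALK
    return mode  # no threshold crossed: stay in the current mode


# Usage: run the state machine over a stream of samples.
mode = Mode.WALK
for sample in (25.0, 30.0, 55.0, 50.0, 20.0, 80.0):
    mode = next_mode(mode, sample)
    print(sample, mode.value)
```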

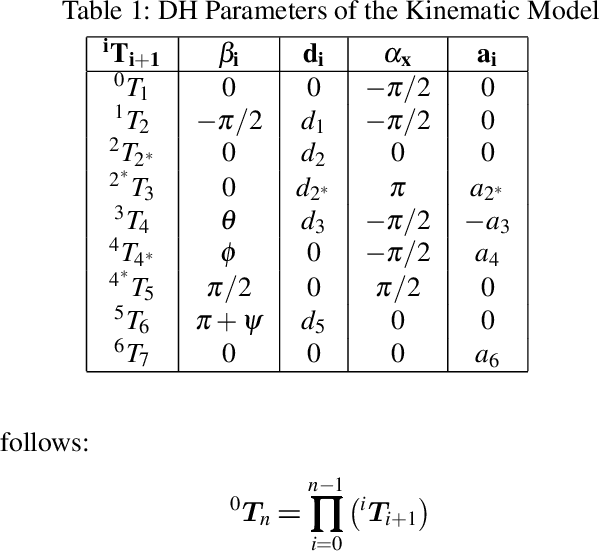

TEAM: a parameter-free algorithm to teach collaborative robots motions from user demonstrations

Sep 14, 2022

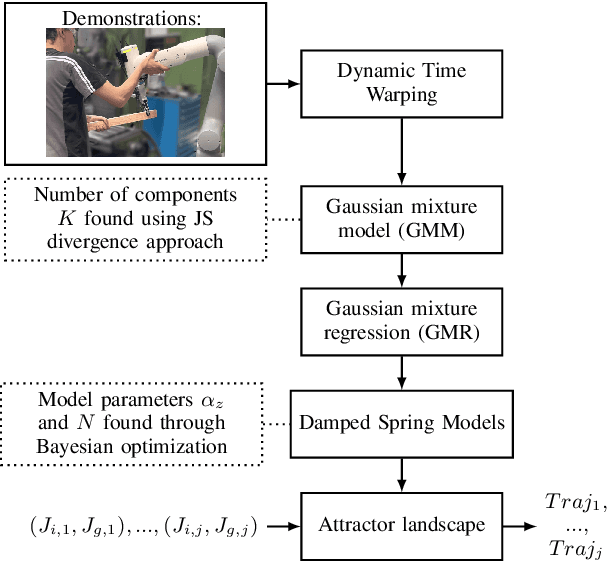

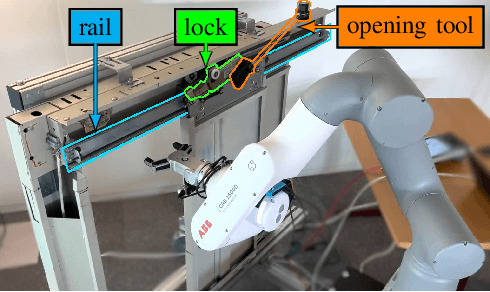

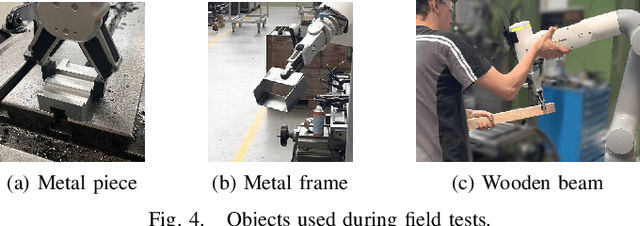

Abstract: Collaborative robots (cobots) built to work alongside humans must be able to quickly learn new skills and adapt to new task configurations. Learning from demonstration (LfD) enables cobots to learn and adapt motions to different use conditions. However, state-of-the-art LfD methods require manual tuning of intrinsic parameters and have rarely been used in industrial contexts without experts. In this paper, we present the development and implementation of an LfD framework for industrial applications with naive users. We propose a parameter-free method based on probabilistic movement primitives, where all parameters are pre-determined using Jensen-Shannon divergence and Bayesian optimization; thus, users do not have to perform manual parameter tuning. This method learns motions from a small dataset of user demonstrations and generalizes the motion to various scenarios and conditions. We evaluate the method extensively in two field tests: one where the cobot works on elevator door maintenance, and one where three Schindler workers teach the cobot tasks useful for their workflow. Errors between the cobot end-effector and target positions range from $0$ to $1.48 \pm 0.35$ mm. No task failures were reported in any test. Questionnaires completed by the Schindler workers highlighted the method's ease of use, the feeling of safety, and the accuracy of the reproduced motion. Our code and recorded trajectories are available online for reproduction.
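The abstract names Jensen-Shannon divergence as one ingredient for fixing the ProMP parameters without user tuning, but not how it enters that selection. As background only, here is a sketch of the divergence itself for discrete distributions. The histograms in the usage example are hypothetical, and nothing is assumed about which distributions the paper actually compares.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Kullback-Leibler divergence KL(p || q) for discrete distributions, in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence: symmetric, finite, and bounded above by ln 2 (in nats)."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    # m is positive wherever p or q is, so neither KL term divides by zero.
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Usage: compare two hypothetical normalized histograms, e.g. of demonstrated
# versus reproduced end-effector positions binned along one axis.
demo = [0.1, 0.4, 0.4, 0.1]
repro = [0.15, 0.35, 0.35, 0.15]
print(js_divergence(demo, repro))
```

Unlike plain KL divergence, this measure stays finite when one distribution has empty bins, which makes it convenient as an automatic comparison criterion.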

Experimental evaluation of complete safe coordination of astrobots for Sloan Digital Sky Survey V

Dec 19, 2020

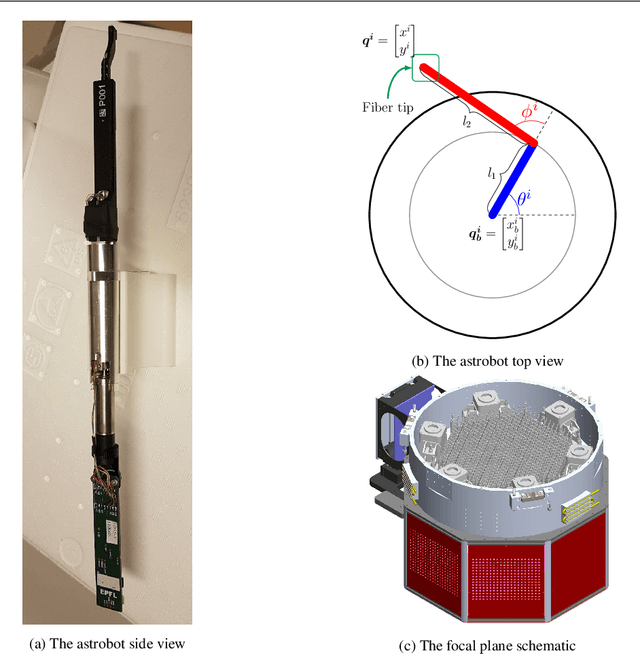

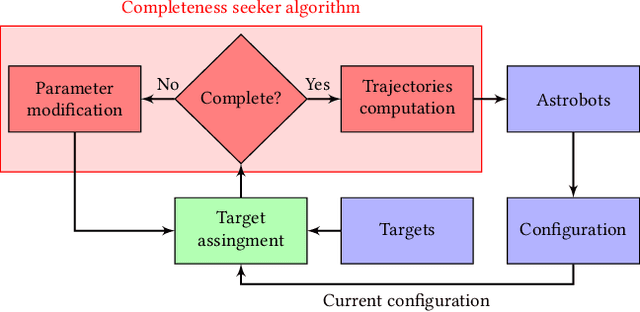

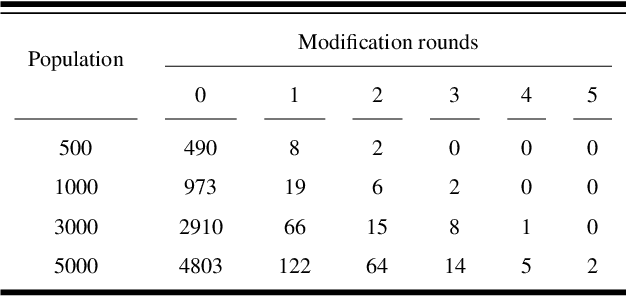

Abstract: The data throughput of massive spectroscopic surveys during each observation is directly related to the number of optical fibers that reach their targets. In this paper, we evaluate the safety and performance of astrobot coordination in SDSS-V through various experimental and simulated tests. We show that our strategy provides a complete coordination condition that depends on the operational characteristics of the astrobots, their configurations, and their targets. Specifically, a coordination method based on cooperative artificial potential fields is used to generate safe and complete trajectories for the astrobots. Optimal target assignment further improves the performance of the algorithm, yielding faster convergence and less oscillatory movement. Both random targets and galaxy catalog targets are used to assess the coordination success of the algorithm under various target distributions. The proposed method is capable of handling all potential collisions during coordination. Once the completeness condition is fulfilled for the initial configuration of the astrobots and their targets, the algorithm reaches full convergence of the astrobots. When targets are assigned to astrobots using efficient strategies, both the convergence time and the number of oscillations during coordination decrease. Rare incomplete scenarios are easily resolved by minor modifications of the astrobot swarm's parameters.

* https://link.springer.com/article/10.1007/s10686-020-09687-4
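To illustrate the artificial potential field idea in its textbook form (not the cooperative variant or the astrobot kinematics used in the paper), the sketch below moves a point agent along the negative gradient of a quadratic attractive potential toward its target plus the classic repulsive potential 0.5 * k_rep * (1/d - 1/rho)^2 around each neighbor closer than an influence radius rho. All gains, the radius, and the step size are hypothetical.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]


def apf_step(pos: Point, target: Point, neighbors: Iterable[Point],
             k_att: float = 1.0, k_rep: float = 0.05,
             influence: float = 1.0, step: float = 0.1) -> Point:
    """One gradient-descent step on an attractive + repulsive potential field."""
    # Attractive force: negative gradient of 0.5 * k_att * |pos - target|^2.
    fx = k_att * (target[0] - pos[0])
    fy = k_att * (target[1] - pos[1])
    for nx, ny in neighbors:
        dx, dy = pos[0] - nx, pos[1] - ny
        d = math.hypot(dx, dy)
        if 0.0 < d < influence:
            # Repulsive force: negative gradient of 0.5 * k_rep * (1/d - 1/influence)^2,
            # directed away from the neighbor and growing without bound as d -> 0.
            mag = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    return (pos[0] + step * fx, pos[1] + step * fy)


# Usage: a static neighbor beside the straight-line path deflects the agent slightly
# on its way to the target.
pos: Point = (0.0, 0.0)
for _ in range(200):
    pos = apf_step(pos, target=(2.0, 0.0), neighbors=[(1.0, 0.5)])
print(pos)
```

Plain potential fields like this one can stall in local minima where attraction and repulsion cancel, so on their own they do not guarantee the completeness property the abstract discusses.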

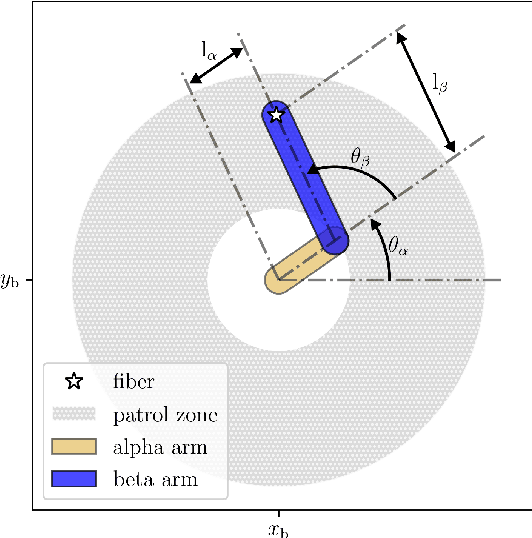

SDSS-V Algorithms: Fast, Collision-Free Trajectory Planning for Heavily Overlapping Robotic Fiber Positioners

Dec 08, 2020

Abstract: Robotic fiber positioner (RFP) arrays are becoming widely adopted in wide-field, massively multiplexed spectroscopic survey instruments. RFP arrays decrease nightly operational overheads through rapid reconfiguration between fields and exposures. Compared with similar instruments, SDSS-V has selected a very dense RFP packing scheme in which any point in a field is typically accessible to three or more robots. This design provides flexibility in target assignment but makes collision-free trajectory planning especially challenging. We present two multi-agent distributed control strategies that are highly efficient and computationally inexpensive for determining collision-free paths for RFPs in heavily overlapping workspaces. We demonstrate that a reconfiguration path between two arbitrary robot configurations can be found efficiently if a "folded" state, in which all robot arms are retracted and aligned in a lattice-like orientation, is inserted between the initial and final states. Although developed for SDSS-V, the approach we describe is generic and thus applicable to a wide range of RFP designs and layouts. Robotic fiber positioner technology continues to advance rapidly, and ultra-densely packed RFP designs may become feasible in the near future. Our algorithms are especially capable of routing paths in very crowded environments, producing efficient results even in regimes significantly more crowded than the SDSS-V RFP design.
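The folded-state idea reduces one hard planning problem (arbitrary initial to arbitrary final configuration) to two easier ones that share a common goal. A minimal sketch, assuming a hypothetical planner `plan_to_folded` that returns a collision-free path from any configuration to the folded state, and assuming that a geometric path traversed in reverse is also collision-free: plan from both ends toward the folded state, then join the first path with the reverse of the second. The toy planner in the usage example is a stand-in, not either of the paper's strategies.

```python
from typing import Callable, List, Tuple

Config = Tuple[float, ...]  # e.g. the arm angles of every positioner, flattened


def plan_via_folded(initial: Config, final: Config,
                    plan_to_folded: Callable[[Config], List[Config]]) -> List[Config]:
    """Path initial -> folded -> final, built from two paths that both end at the folded state."""
    forward = plan_to_folded(initial)  # initial -> folded
    backward = plan_to_folded(final)   # final -> folded
    if forward[-1] != backward[-1]:
        raise ValueError("both paths must end at the same folded configuration")
    # Reverse the second path, dropping its folded endpoint so it is not duplicated.
    return forward + backward[-2::-1]


# Usage with a toy planner that halves each coordinate on the way to the folded state (0, 0).
def toy_planner(c: Config) -> List[Config]:
    return [c, tuple(x / 2 for x in c), (0.0,) * len(c)]


path = plan_via_folded((4.0, 2.0), (2.0, 6.0), toy_planner)
print(path)
```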

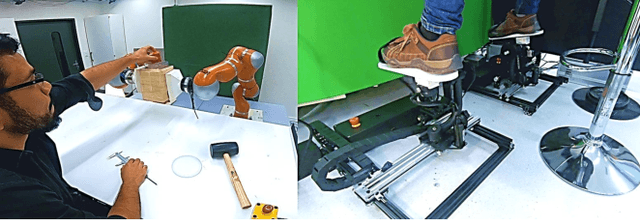

Four-Arm Manipulation via Feet Interfaces

Sep 11, 2019

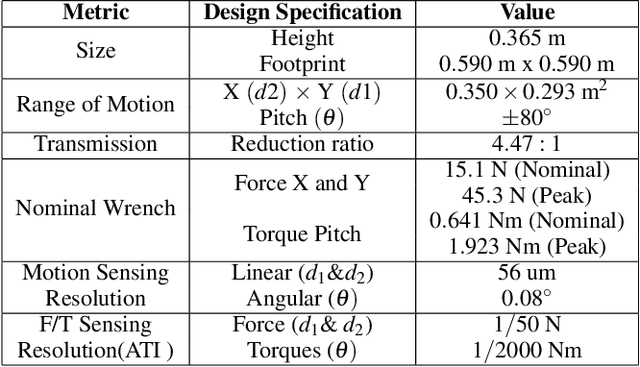

Abstract: We seek to augment human manipulation by enabling humans to control two robotic arms, in addition to their natural arms, using their feet. The hands are thereby free to perform tasks requiring high dexterity, while the foot-controlled arms perform tasks requiring lower dexterity, such as supporting a load. The robotic arms are teleoperated through two foot interfaces that transmit translation and rotation to the end effector of each manipulator. Haptic feedback is provided so that the human can perceive contact and changes in load and adapt the foot pressure accordingly. Existing foot interfaces have been used primarily for single-foot control and are limited in their range of motion and in the number of degrees of freedom they can control. This paper presents foot interfaces specifically designed for bipedal control, with a workspace suitable for two-foot operation and five degrees of freedom each. This paper also presents a position-force teleoperation controller based on Impedance Control modulated through Dynamical Systems for trajectory generation. Finally, an initial validation of the platform is presented, in which a user grasps an object with both feet and generates various disturbances while the object is supported by the feet.
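A common form of impedance control modulated by a dynamical system (DS) lets the DS generate a desired velocity f(x) at the current position and applies a damping force D (f(x) - x_dot) that pulls the actual velocity toward it. The sketch below assumes that form with a linear, globally stable DS f(x) = -a (x - x_target); the gains are hypothetical, and the paper's actual position-force teleoperation controller is not reproduced.

```python
from typing import List, Sequence


def ds_velocity(x: Sequence[float], x_target: Sequence[float], a: float = 1.0) -> List[float]:
    """Linear dynamical system: desired velocity pointing at the target, f(x) = -a (x - x_target)."""
    return [-a * (xi - ti) for xi, ti in zip(x, x_target)]


def ds_impedance_force(x: Sequence[float], x_dot: Sequence[float], x_target: Sequence[float],
                       damping: float = 10.0, a: float = 1.0) -> List[float]:
    """Damping-type impedance law tracking the DS velocity: F = D (f(x) - x_dot)."""
    desired = ds_velocity(x, x_target, a)
    return [damping * (vd - v) for vd, v in zip(desired, x_dot)]


# Usage: end effector at rest, 0.1 m short of the target along one axis (illustrative).
print(ds_impedance_force(x=[0.0, 0.0, 0.4], x_dot=[0.0, 0.0, 0.0], x_target=[0.0, 0.0, 0.5]))
```

Because the force depends on the velocity error rather than on a time-indexed reference, a disturbance (for example, the user pushing on the held object) simply changes the state from which the DS continues, instead of producing a growing tracking error.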
