Miska M. Hannuksela

NN-VVC: Versatile Video Coding boosted by self-supervisedly learned image coding for machines

Jan 19, 2024

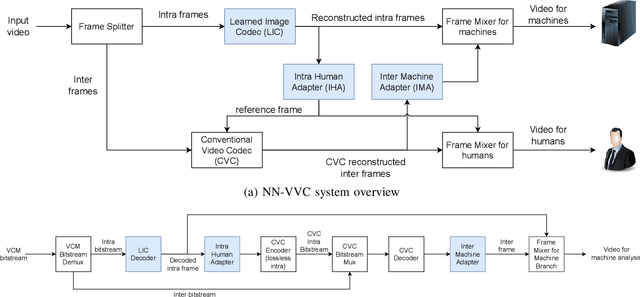

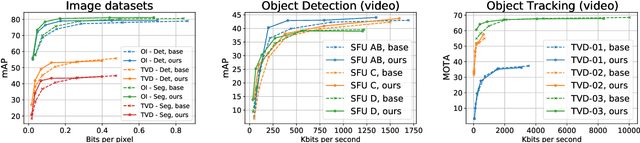

Abstract: The recent progress in artificial intelligence has led to an ever-increasing usage of images and videos by machine analysis algorithms, mainly neural networks. Nonetheless, compression, storage and transmission of media have traditionally been designed considering human beings as the viewers of the content. Recent research on image and video coding for machine analysis has progressed mainly in two almost orthogonal directions. The first is represented by end-to-end (E2E) learned codecs which, while offering high performance on image coding, are not yet on par with state-of-the-art conventional video codecs and lack interoperability. The second direction considers using the Versatile Video Coding (VVC) standard or any other conventional video codec (CVC) together with pre- and post-processing operations targeting machine analysis. While the CVC-based methods benefit from interoperability and broad hardware and software support, the machine task performance is often lower than the desired level, particularly at low bitrates. This paper proposes a hybrid codec for machines called NN-VVC, which combines the advantages of an E2E-learned image codec and a CVC to achieve high performance in both image and video coding for machines. Our experiments show that the proposed system achieved up to -43.20% and -26.8% Bjøntegaard Delta rate reduction over VVC for image and video data, respectively, when evaluated on multiple different datasets and machine vision tasks. To the best of our knowledge, this is the first research paper showing a hybrid video codec that outperforms VVC on multiple datasets and multiple machine vision tasks.
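The Bjøntegaard Delta rate quoted in the abstract above can be illustrated with a minimal sketch. It assumes the commonly used formulation (a cubic polynomial fit of log-rate as a function of the quality metric, integrated over the overlapping quality range) and is not taken from the paper itself. The function name `bd_rate` and the sample rate/quality points are hypothetical; for machine-vision evaluation the quality axis would typically be a task metric rather than PSNR.

```python
import numpy as np

def bd_rate(anchor_rate, anchor_quality, test_rate, test_quality):
    """Average bitrate difference (in percent) of test vs. anchor at equal quality.

    Negative values mean the test codec needs fewer bits than the anchor.
    """
    log_a = np.log(np.asarray(anchor_rate, dtype=float))
    log_t = np.log(np.asarray(test_rate, dtype=float))
    q_a = np.asarray(anchor_quality, dtype=float)
    q_t = np.asarray(test_quality, dtype=float)

    # Fit log-rate as a cubic polynomial of the quality metric.
    poly_a = np.polyfit(q_a, log_a, 3)
    poly_t = np.polyfit(q_t, log_t, 3)

    # Integrate both fits over the quality interval covered by both curves.
    lo = max(q_a.min(), q_t.min())
    hi = min(q_a.max(), q_t.max())
    int_a = np.polyval(np.polyint(poly_a), hi) - np.polyval(np.polyint(poly_a), lo)
    int_t = np.polyval(np.polyint(poly_t), hi) - np.polyval(np.polyint(poly_t), lo)

    # Mean log-rate difference, converted back to a percentage.
    avg_log_diff = (int_t - int_a) / (hi - lo)
    return (np.exp(avg_log_diff) - 1.0) * 100.0

# Hypothetical data: the test codec uses 20% fewer bits at every quality point.
anchor_rates = [100.0, 200.0, 400.0, 800.0]
qualities = [30.0, 33.0, 36.0, 39.0]
test_rates = [0.8 * r for r in anchor_rates]
result = bd_rate(anchor_rates, qualities, test_rates, qualities)
print(f"BD-rate: {result:.2f}%")  # approximately -20%
```

Because the metric averages in the log-rate domain, a uniform 20% bitrate saving at every quality point yields a BD-rate of about -20%, which is how a figure such as -26.8% should be read.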

Bridging the gap between image coding for machines and humans

Jan 19, 2024

Abstract: Image coding for machines (ICM) aims at reducing the bitrate required to represent an image while minimizing the drop in machine vision analysis accuracy. In many use cases, such as surveillance, it is also important that the visual quality is not drastically deteriorated by the compression process. Recent works on neural network (NN) based ICM codecs have shown significant coding gains against traditional methods; however, the decompressed images, especially at low bitrates, often contain checkerboard artifacts. We propose an effective decoder finetuning scheme based on adversarial training to significantly enhance the visual quality of ICM codecs, while preserving the machine analysis accuracy, without adding extra bitcost or parameters at the inference phase. The results show complete removal of the checkerboard artifacts at the negligible cost of a -1.6% relative change in task performance score. In cases where some amount of artifacts is tolerable, such as when machine consumption is the primary target, this technique can enhance both pixel-fidelity and feature-fidelity scores without losing task performance.

Leveraging progressive model and overfitting for efficient learned image compression

Oct 08, 2022

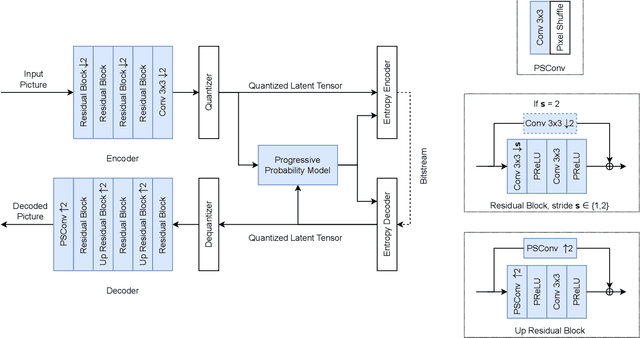

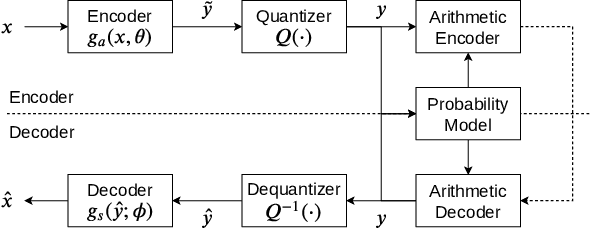

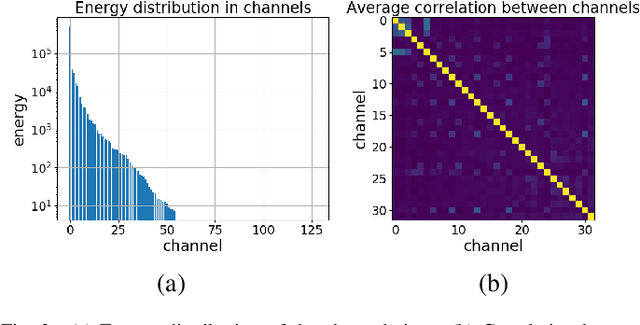

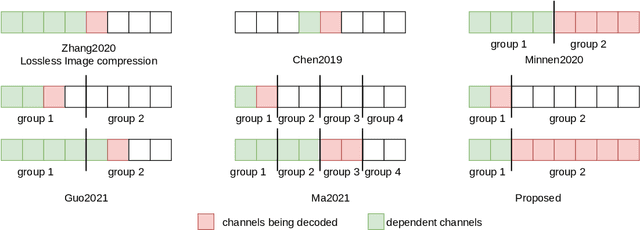

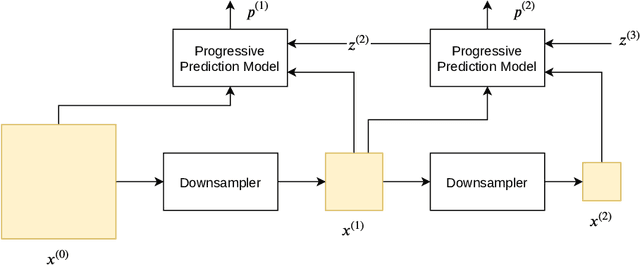

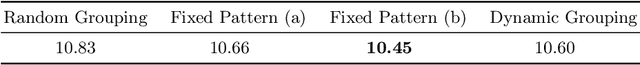

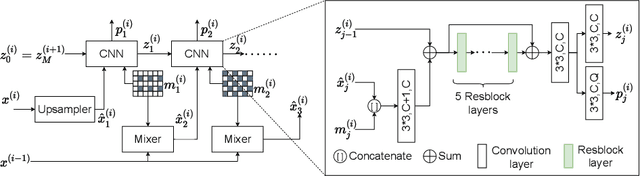

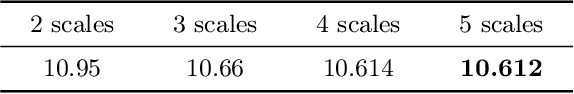

Abstract: Deep learning has been overwhelmingly dominant in the field of computer vision and image/video processing over the last decade. However, for image and video compression, it lags behind the traditional techniques based on the discrete cosine transform (DCT) and linear filters. Built on top of an autoencoder architecture, learned image compression (LIC) systems have drawn enormous attention in recent years. Nevertheless, the proposed LIC systems are still inferior to the state-of-the-art traditional techniques, for example, the Versatile Video Coding (VVC/H.266) standard, due to either their compression performance or decoding complexity. Although claimed to outperform VVC/H.266 on a limited bit rate range, some proposed LIC systems take over 40 seconds to decode a 2K image on a GPU system. In this paper, we introduce a powerful and flexible LIC framework with a multi-scale progressive (MSP) probability model and a latent representation overfitting (LOF) technique. With different predefined profiles, the proposed framework can achieve various balance points between compression efficiency and computational complexity. Experiments show that the proposed framework achieves 2.5%, 1.0%, and 1.3% Bjøntegaard delta bit rate (BD-rate) reduction over the VVC/H.266 standard on three benchmark datasets over a wide bit rate range. More importantly, the decoding complexity is reduced from O(n) to O(1) compared to many other LIC systems, resulting in over 20 times speedup when decoding 2K images.

Coding of volumetric content with MIV using VVC subpictures

Jun 06, 2022

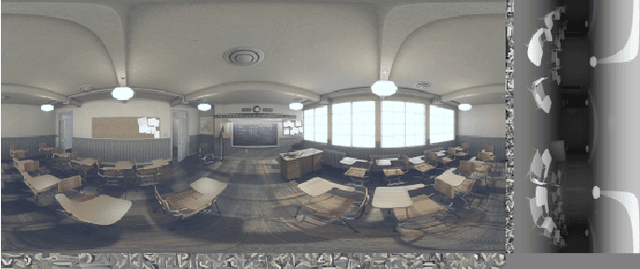

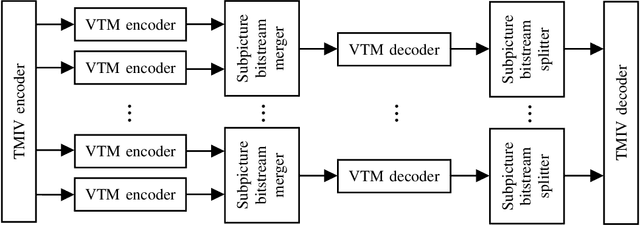

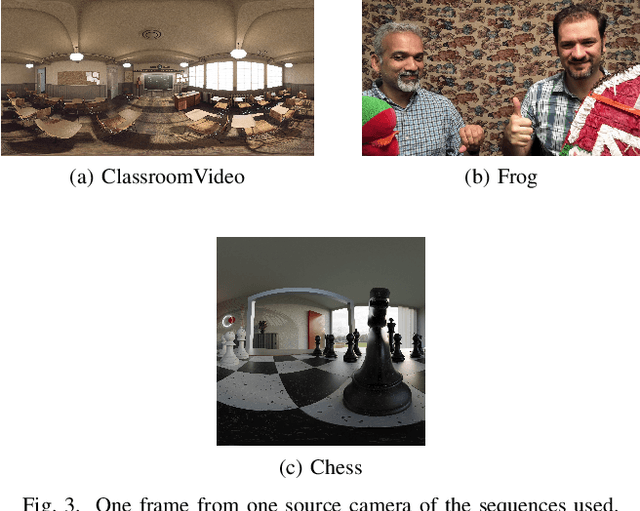

Abstract: Storage and transport of six degrees of freedom (6DoF) dynamic volumetric visual content for immersive applications requires efficient compression. ISO/IEC MPEG has recently been working on a standard that aims to efficiently code and deliver 6DoF immersive visual experiences. This standard is called MPEG Immersive Video (MIV). MIV uses regular 2D video codecs to code the visual data. MPEG, jointly with ITU-T VCEG, has also specified the VVC standard. VVC recently introduced the concept of subpictures. This tool was specifically designed to provide independent accessibility and decodability of sub-bitstreams for omnidirectional applications. This paper shows the benefit of using subpictures in the MIV use case. While different ways in which subpictures could be used in MIV are discussed, a particular case study is selected. Namely, subpictures are used for parallel encoding and to reduce the number of decoder instances. Experimental results show that the cost of using subpictures in terms of bitrate overhead is negligible (0.1% to 0.4%) when compared to the overall bitrate. The number of decoder instances, on the other hand, decreases by a factor of two.

* 6 pages, 3 figures

Omnidirectional MediA Format (OMAF): Toolbox for Virtual Reality Services

Mar 02, 2022

Abstract: This paper provides an overview of the Omnidirectional Media Format (OMAF) standard, second edition, which has been recently finalized. OMAF specifies the media format for coding, storage, delivery, and rendering of omnidirectional media, including video, audio, images, and timed text. Additionally, OMAF supports multiple viewpoints corresponding to omnidirectional cameras, as well as overlay images or video rendered over the omnidirectional background image or video. Many examples of usage scenarios for multiple viewpoints and overlays are described in the paper. OMAF provides a toolbox of features, which can be selectively used in virtual reality services. Consequently, the paper presents the interoperability points specified in the OMAF standard, which enable signaling of the OMAF features that are in use or required to be supported in implementations. Finally, the paper summarizes which OMAF interoperability points have been taken into use in virtual reality service specifications by the 3rd Generation Partnership Project (3GPP) and the Virtual Reality Industry Forum (VRIF).

* 7 pages, 1 figure. This document is the accepted version of the paper that has been published in 2021 IEEE Conference on Standards for Communications and Networking (CSCN)

Lossless Image Compression Using a Multi-Scale Progressive Statistical Model

Aug 24, 2021

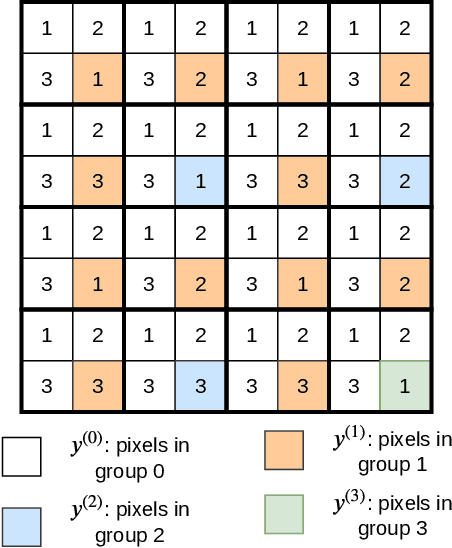

Abstract: Lossless image compression is an important technique for image storage and transmission when information loss is not allowed. With the fast development of deep learning techniques, deep neural networks have been used in this field to achieve a higher compression rate. Methods based on pixel-wise autoregressive statistical models have shown good performance. However, their sequential processing prevents these methods from being used in practice. Recently, multi-scale autoregressive models have been proposed to address this limitation. Multi-scale approaches can use parallel computing systems efficiently and build practical systems. Nevertheless, these approaches sacrifice compression performance in exchange for speed. In this paper, we propose a multi-scale progressive statistical model that takes advantage of both the pixel-wise approach and the multi-scale approach. We developed a flexible mechanism where the processing order of the pixels can be adjusted easily. Our proposed method outperforms the state-of-the-art lossless image compression methods on two large benchmark datasets by a significant margin without degrading the inference speed dramatically.
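The contrast between pixel-wise and multi-scale processing orders described above can be sketched as follows. This is a generic illustration under stated assumptions, not the model proposed in the paper: it assumes a simple dyadic grid ordering in which pixels on the coarsest grid are decoded first and each finer grid is filled in by one parallel pass. The function name `multiscale_steps` and its parameters are hypothetical.

```python
import numpy as np

def multiscale_steps(height, width, num_scales):
    """Assign each pixel the index of the parallel pass in which it is decoded.

    Assumed ordering: pass 0 covers the coarsest grid (stride 2**(num_scales - 1)),
    and each later pass covers the pixels newly exposed by halving the stride.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    # By default every pixel belongs to the finest scale, which is decoded last.
    steps = np.full((height, width), num_scales - 1, dtype=int)
    for level in range(1, num_scales):
        stride = 2 ** level
        on_grid = (ys % stride == 0) & (xs % stride == 0)
        # Coarser grids are subsets of finer ones, so later levels overwrite.
        steps[on_grid] = num_scales - 1 - level
    return steps

steps_small = multiscale_steps(8, 8, num_scales=3)
steps_large = multiscale_steps(64, 64, num_scales=3)

# A raster-scan autoregressive model needs one sequential step per pixel.
raster_steps_small = 8 * 8
raster_steps_large = 64 * 64

# Under this ordering the number of sequential passes depends only on num_scales.
passes_small = int(steps_small.max()) + 1
passes_large = int(steps_large.max()) + 1
print(raster_steps_small, passes_small)
print(raster_steps_large, passes_large)
```

In this sketch the number of sequential passes stays fixed as the image grows, while a raster-scan order grows with the pixel count. That is the trade-off the abstract refers to: fewer sequential steps, but each pixel is predicted from less context than in the fully pixel-wise case.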
