Ming Ye

Bridging Synthetic-to-Real Gaps: Frequency-Aware Perturbation and Selection for Single-shot Multi-Parametric Mapping Reconstruction

Mar 05, 2025

Abstract: Data-centric artificial intelligence (AI) has remarkably advanced medical imaging, with emerging methods using synthetic data to address data scarcity at the cost of introducing synthetic-to-real gaps. Unsupervised domain adaptation (UDA) shows promise in tasks where ground truth is scarce, but its application to reconstruction remains underexplored. Multiple overlapping-echo detachment (MOLED) achieves ultra-fast multi-parametric reconstruction, which extends its use to various clinical scenarios, but its quality suffers from an unmitigated domain gap, difficulty in maintaining structural integrity, and inadequate mapping accuracy. To resolve these issues, we propose frequency-aware perturbation and selection (FPS), comprising Wasserstein distance-modulated frequency-aware perturbation (WDFP) and a hierarchical frequency-aware selection network (HFSNet), which integrates frequency-aware adaptive selection (FAS), compact FAS (cFAS), and feature-aware architecture integration (FAI). Specifically, perturbation activates domain-invariant feature learning within uncertainty, while selection refines optimal solutions within perturbation, establishing a robust, closed-loop learning pathway. Extensive experiments on synthetic data, along with diverse real clinical cases from 5 healthy volunteers, 94 ischemic stroke patients, and 46 meningioma patients, demonstrate the superiority and clinical applicability of FPS. Furthermore, FPS is applied to diffusion tensor imaging (DTI), underscoring its versatility and potential for broader medical applications. The code is available at https://github.com/flyannie/FPS.

GAN Based Near-Field Channel Estimation for Extremely Large-Scale MIMO Systems

Feb 27, 2024

Abstract: Extremely large-scale multiple-input-multiple-output (XL-MIMO) is a promising technique for achieving ultra-high spectral efficiency in future 6G communications. A mixed line-of-sight (LoS) and non-line-of-sight (NLoS) near-field channel model is adopted to describe the XL-MIMO near-field channel accurately. In this paper, a channel estimation method based on a generative adversarial network (GAN) variant is proposed for XL-MIMO systems. Specifically, the GAN variant is developed to simultaneously estimate the LoS and NLoS path components of the XL-MIMO channel. The initially estimated channels, rather than the received signals, are fed into the GAN variant as the conditional input to generate the XL-MIMO channels more efficiently. The GAN variant learns not only the mapping from the initially estimated channels to the XL-MIMO channels but also an adversarial loss. Moreover, we combine the adversarial loss with a conventional loss function to keep the generator training in the correct direction. To further enhance the estimation performance, we investigate the impact of the loss function's hyper-parameter on the performance of our method. Simulation results show that the proposed method outperforms the existing channel estimation approaches in the adopted channel model. In addition, the proposed method surpasses the Cramér-Rao lower bound (CRLB) under low pilot overhead.
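As a rough illustration of combining an adversarial loss with a conventional loss through a single hyper-parameter, the sketch below may help. It is not the authors' implementation: the abstract does not name the conventional loss, so the mean squared error used here, the weight `lam`, and the function name `generator_loss` are all assumptions made for illustration.

```python
import math


def generator_loss(d_fake, h_est, h_true, lam=100.0):
    """Hypothetical combined generator loss: adversarial term + lam * MSE.

    d_fake : discriminator outputs in (0, 1] for the generated channels
    h_est  : generated channel coefficients (real or complex numbers)
    h_true : reference channel coefficients, same length as h_est
    lam    : assumed weighting hyper-parameter between the two terms
    """
    eps = 1e-12  # guards against log(0)
    # Non-saturating adversarial term: small when the discriminator is fooled.
    adversarial = -sum(math.log(p + eps) for p in d_fake) / len(d_fake)
    # Assumed "conventional" term: mean squared error on channel coefficients.
    mse = sum(abs(a - b) ** 2 for a, b in zip(h_est, h_true)) / len(h_true)
    return adversarial + lam * mse
```

A larger `lam` pulls the generator toward the reference channels, while a smaller one gives the adversarial term more influence; this is the kind of trade-off a hyper-parameter study would sweep.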

Cascade Feature Aggregation for Human Pose Estimation

Apr 08, 2019

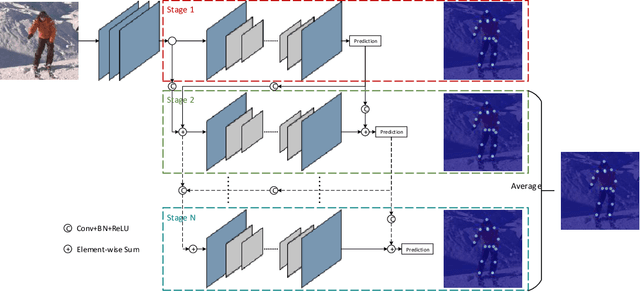

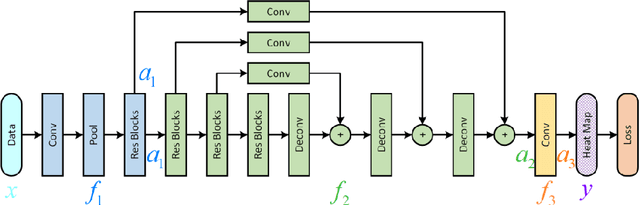

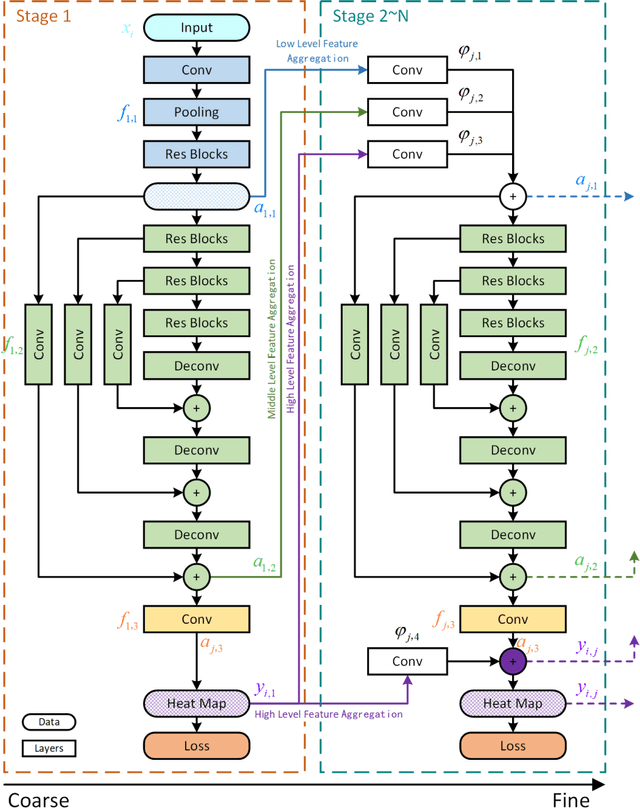

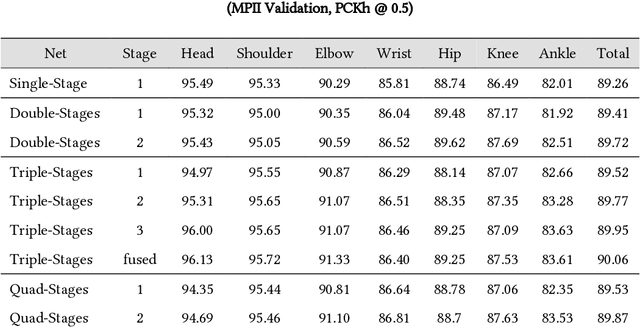

Abstract: Human pose estimation plays an important role in many computer vision tasks and has been studied for decades. However, due to complex appearance variations caused by pose, illumination, occlusion, and low resolution, it remains a challenging problem. Taking advantage of high-level semantic information from deep convolutional neural networks is an effective way to improve the accuracy of human pose estimation. In this paper, we propose a novel Cascade Feature Aggregation (CFA) method, which cascades several hourglass networks for robust human pose estimation. Features from different stages are aggregated to obtain abundant contextual information, leading to robustness to pose variation, partial occlusion, and low resolution. Moreover, results from different stages are fused to further improve the localization accuracy. Extensive experiments on the MPII and LIP datasets demonstrate that our proposed CFA outperforms the state of the art and achieves the best performance on the MPII benchmark.
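The idea of fusing results from different stages can be sketched as follows. The abstract does not say how the fusion is done, so the simple averaging of per-stage heatmaps here is an assumption, and the names `fuse_heatmaps` and `locate_keypoint` are hypothetical.

```python
def fuse_heatmaps(stage_heatmaps):
    """Average per-stage heatmaps (assumed fusion rule, not the paper's).

    stage_heatmaps : list of 2-D lists, one heatmap per cascade stage,
                     all with the same height and width.
    """
    n = len(stage_heatmaps)
    rows = len(stage_heatmaps[0])
    cols = len(stage_heatmaps[0][0])
    return [
        [sum(h[r][c] for h in stage_heatmaps) / n for c in range(cols)]
        for r in range(rows)
    ]


def locate_keypoint(heatmap):
    """Return the (row, col) of the highest response in a heatmap."""
    positions = [(r, c) for r in range(len(heatmap)) for c in range(len(heatmap[0]))]
    return max(positions, key=lambda rc: heatmap[rc[0]][rc[1]])


# Two stages disagree on the peak; the averaged map settles the location.
stage1 = [[0.1, 0.9], [0.0, 0.0]]
stage2 = [[0.2, 0.3], [0.0, 0.8]]
keypoint = locate_keypoint(fuse_heatmaps([stage1, stage2]))
```

Averaging is only one plausible choice; weighted sums or learned fusion layers would fit the same description.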
