Meiya Chen

All-in-One: Transferring Vision Foundation Models into Stereo Matching

Dec 13, 2024

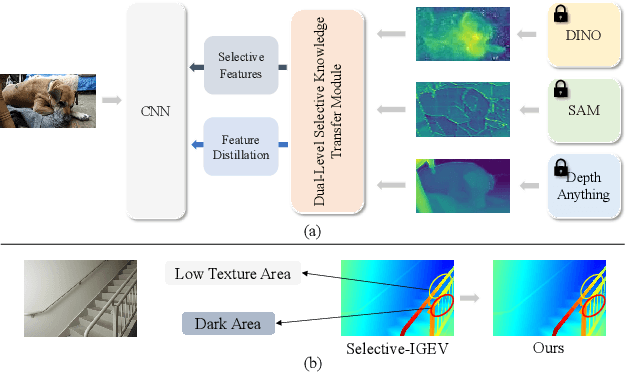

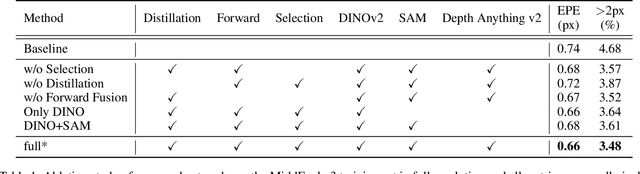

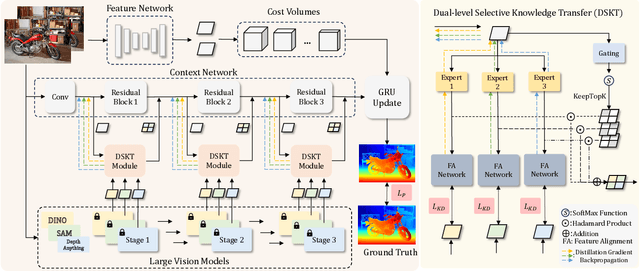

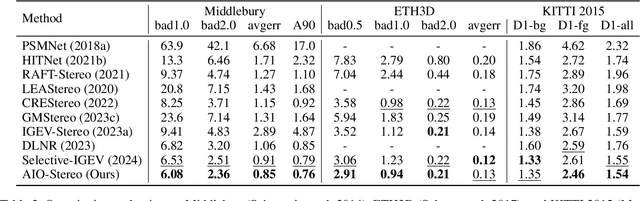

Abstract: As a fundamental vision task, stereo matching has made remarkable progress. While recent iterative optimization-based methods have achieved promising performance, their feature extraction capabilities still have room for improvement. Inspired by the ability of vision foundation models (VFMs) to extract general representations, we propose AIO-Stereo, which can flexibly select and transfer knowledge from multiple heterogeneous VFMs to a single stereo matching model. To better reconcile features between the heterogeneous VFMs and the stereo matching model, and to fully exploit prior knowledge from the VFMs, we propose a dual-level feature utilization mechanism that aligns heterogeneous features and transfers multi-level knowledge. Based on this mechanism, a dual-level selective knowledge transfer module is designed to selectively transfer knowledge and integrate the advantages of multiple VFMs. Experimental results show that AIO-Stereo achieves state-of-the-art performance on multiple datasets, ranks $1^{st}$ on the Middlebury dataset, and outperforms all published work on the ETH3D benchmark.

MIPI 2023 Challenge on RGB+ToF Depth Completion: Methods and Results

Apr 27, 2023

Abstract: Depth completion from RGB images and sparse Time-of-Flight (ToF) measurements is an important problem in computer vision and robotics. While traditional methods for depth completion have relied on stereo vision or structured light techniques, recent advances in deep learning have enabled more accurate and efficient completion of depth maps from RGB images and sparse ToF measurements. To evaluate the performance of different depth completion methods, we organized an RGB+sparse ToF depth completion competition. The competition aimed to encourage research in this area by providing a standardized dataset and evaluation metrics to compare the accuracy of different approaches. In this report, we present the results of the competition and analyze the strengths and weaknesses of the top-performing methods. We also discuss the implications of our findings for future research in RGB+sparse ToF depth completion. We hope that this competition and report will help to advance the state of the art in this important area of research. More details of this challenge and the link to the dataset can be found at https://mipi-challenge.org/MIPI2023.
