Meiqi Hu

MergeSAM: Unsupervised change detection of remote sensing images based on the Segment Anything Model

Jul 30, 2025

Abstract:Recently, large foundation models trained on vast datasets have demonstrated exceptional capabilities in feature extraction and general feature representation. The ongoing advancements in deep learning-driven large models have shown great promise in accelerating unsupervised change detection methods, thereby enhancing the practical applicability of change detection technologies. Building on this progress, this paper introduces MergeSAM, an innovative unsupervised change detection method for high-resolution remote sensing imagery, based on the Segment Anything Model (SAM). Two novel strategies, MaskMatching and MaskSplitting, are designed to address real-world complexities such as object splitting, merging, and other intricate changes. The proposed method fully leverages SAM's object segmentation capabilities to construct multitemporal masks that capture complex changes, embedding the spatial structure of land cover into the change detection process.

HSANET: A Hybrid Self-Cross Attention Network For Remote Sensing Change Detection

Apr 21, 2025

Abstract:The remote sensing image change detection task is an essential method for large-scale monitoring. We propose HSANet, a network that uses hierarchical convolution to extract multi-scale features. It incorporates hybrid self-attention and cross-attention mechanisms to learn and fuse global and cross-scale information. This enables HSANet to capture global context at different scales and integrate cross-scale features, refining edge details and improving detection performance. We will also open-source our model code: https://github.com/ChengxiHAN/HSANet.

HyperSIGMA: Hyperspectral Intelligence Comprehension Foundation Model

Jun 17, 2024

Abstract:Foundation models (FMs) are revolutionizing the analysis and understanding of remote sensing (RS) scenes, including aerial RGB, multispectral, and SAR images. However, hyperspectral images (HSIs), which are rich in spectral information, have not seen much application of FMs, with existing methods often restricted to specific tasks and lacking generality. To fill this gap, we introduce HyperSIGMA, a vision transformer-based foundation model for HSI interpretation, scalable to over a billion parameters. To tackle the spectral and spatial redundancy challenges in HSIs, we introduce a novel sparse sampling attention (SSA) mechanism, which effectively promotes the learning of diverse contextual features and serves as the basic block of HyperSIGMA. HyperSIGMA integrates spatial and spectral features using a specially designed spectral enhancement module. In addition, we construct a large-scale hyperspectral dataset, HyperGlobal-450K, for pre-training, which contains about 450K hyperspectral images, significantly surpassing existing datasets in scale. Extensive experiments on various high-level and low-level HSI tasks demonstrate HyperSIGMA's versatility and superior representational capability compared to current state-of-the-art methods. Moreover, HyperSIGMA shows significant advantages in scalability, robustness, cross-modal transferring capability, and real-world applicability.

C2F-SemiCD: A Coarse-to-Fine Semi-Supervised Change Detection Method Based on Consistency Regularization in High-Resolution Remote Sensing Images

Apr 22, 2024

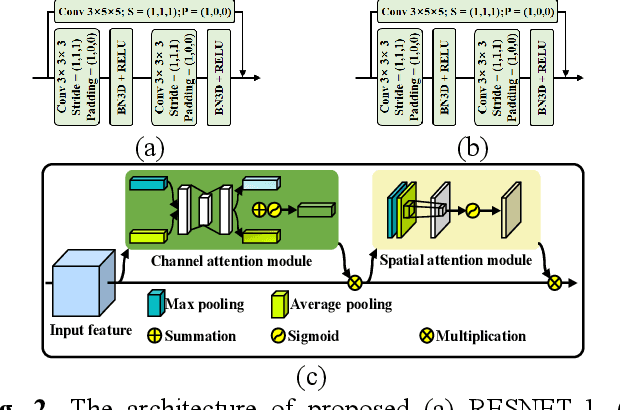

Abstract:A high-precision feature extraction model is crucial for change detection (CD). In the past, many deep learning-based supervised CD methods learned to recognize change feature patterns from a large number of labelled bi-temporal images, but labelling bi-temporal remote sensing images is expensive and time-consuming. We therefore propose a coarse-to-fine semi-supervised CD method based on consistency regularization (C2F-SemiCD), which includes a coarse-to-fine CD network with a multiscale attention mechanism (C2FNet) and a semi-supervised update method. The C2FNet network gradually completes the extraction of change features from coarse-grained to fine-grained through multiscale feature fusion, a channel attention mechanism, a spatial attention mechanism, a global context module, a feature refine module, an initial aggregation module, and a final aggregation module. The semi-supervised update method uses the mean teacher method: the parameters of the teacher model are updated from the parameters of the student model using the exponential moving average (EMA) method. Through extensive experiments on three datasets and meticulous ablation studies, including crossover experiments across datasets, we verify the significant effectiveness and efficiency of the proposed C2F-SemiCD method. The code will be open at: https://github.com/ChengxiHAN/C2F-SemiCDand-C2FNet.
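As a rough illustration of the mean teacher update mentioned in the abstract above, the sketch below applies an exponential moving average to a dictionary of scalar weights. It is not the authors' code: the function name `ema_update`, the `decay` value, and the toy weights are assumptions made for illustration, and a real model would apply the same rule to each parameter tensor.

```python
def ema_update(teacher, student, decay=0.99):
    """Return new teacher weights as an EMA of the old teacher and the student.

    teacher, student: dicts mapping parameter names to floats (toy stand-ins
    for model parameters). decay is a hypothetical smoothing factor in [0, 1].
    """
    return {
        name: decay * teacher[name] + (1.0 - decay) * student[name]
        for name in teacher
    }


# Toy weights: the student is trained by gradient descent elsewhere;
# the teacher only follows it through the moving average.
teacher = {"w": 1.0, "b": 0.0}
student = {"w": 0.0, "b": 1.0}

teacher = ema_update(teacher, student, decay=0.9)
print(teacher)
```

A decay close to 1 makes the teacher change slowly, which is the usual reason for using it as a stable target for consistency regularization on unlabelled images.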

HANet: A Hierarchical Attention Network for Change Detection With Bitemporal Very-High-Resolution Remote Sensing Images

Apr 14, 2024

Abstract:Benefiting from the developments in deep learning technology, deep-learning-based algorithms employing automatic feature extraction have achieved remarkable performance on the change detection (CD) task. However, the performance of existing deep-learning-based CD methods is hindered by the imbalance between changed and unchanged pixels. To tackle this problem, a progressive foreground-balanced sampling strategy on the basis of not adding change information is proposed in this article to help the model accurately learn the features of the changed pixels during the early training process and thereby improve detection performance. Furthermore, we design a discriminative Siamese network, hierarchical attention network (HANet), which can integrate multiscale features and refine detailed features. The main part of HANet is the HAN module, which is a lightweight and effective self-attention mechanism. Extensive experiments and ablation studies on two CD datasets with extremely unbalanced labels validate the effectiveness and efficiency of the proposed method.

Change Guiding Network: Incorporating Change Prior to Guide Change Detection in Remote Sensing Imagery

Apr 14, 2024

Abstract:The rapid advancement of automated artificial intelligence algorithms and remote sensing instruments has benefited change detection (CD) tasks. However, precise detection still leaves considerable room for improvement, especially regarding the edge integrity of change features and the internal holes that appear in them. To address these problems, we design the Change Guiding Network (CGNet), which tackles the insufficient expression of change features in the conventional U-Net structure adopted in previous methods, a weakness that causes inaccurate edge detection and internal holes. Change maps from deep features with rich semantic information are generated and used as prior information to guide multi-scale feature fusion, which can improve the expression ability of change features. Meanwhile, we propose a self-attention module named Change Guide Module (CGM), which can effectively capture the long-distance dependency among pixels and overcome the problem of the insufficient receptive field of traditional convolutional neural networks. On four major CD datasets, we verify the usefulness and efficiency of the CGNet, and a large number of experiments and ablation studies demonstrate the effectiveness of CGNet. We're going to open-source our code at https://github.com/ChengxiHAN/CGNet-CD.

GlobalMind: Global Multi-head Interactive Self-attention Network for Hyperspectral Change Detection

Apr 18, 2023

Abstract:High spectral resolution imagery of the Earth's surface enables users to monitor changes over time at a fine-grained scale, playing an increasingly important role in agriculture, defense, and emergency response. However, most current algorithms are still confined to describing local features and fail to incorporate a global perspective, which limits their ability to capture interactions between global features and usually results in incomplete change regions. In this paper, we propose a Global Multi-head INteractive self-attention change Detection network (GlobalMind) to explore the implicit correlation between different surface objects and variant land cover transformations, acquiring a comprehensive understanding of the data and accurate change detection results. Firstly, a simple but effective Global Axial Segmentation (GAS) strategy is designed to expand the self-attention computation along the row space or column space of hyperspectral images, allowing global connection with high efficiency. Secondly, with GAS, the global spatial multi-head interactive self-attention (Global-M) module is crafted to mine the abundant spatial-spectral features involving potential correlations between the ground objects from the entire rich and complex hyperspectral space. Moreover, to acquire accurate and complete cross-temporal changes, we devise a global temporal interactive multi-head self-attention (GlobalD) module which incorporates the relevance and variation of bi-temporal spatial-spectral features and, in combination with GAS, derives complete changes of the same kind across the local and global range. We perform extensive experiments on five commonly used hyperspectral datasets, and our method outperforms the state-of-the-art algorithms with high accuracy and efficiency.
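To give a sense of why restricting self-attention to rows or columns can be efficient, the sketch below counts query-key pairs for an image of a given size. It is a generic back-of-the-envelope comparison of full versus axial attention, not a description of how GAS is implemented; the function name `attention_pairs` and the example image size are assumptions.

```python
def attention_pairs(height, width):
    """Count query-key pairs for three attention layouts on a height x width grid.

    full:         every pixel attends to every pixel.
    row_axial:    every pixel attends only to pixels in its own row.
    column_axial: every pixel attends only to pixels in its own column.
    """
    num_pixels = height * width
    return {
        "full": num_pixels * num_pixels,
        "row_axial": height * width * width,
        "column_axial": width * height * height,
    }


# Hypothetical 100 x 100 image, chosen only to make the ratio easy to read.
pairs = attention_pairs(100, 100)
print(pairs)
```

For a square image with side N, full attention grows as N^4 while each axial pass grows as N^3, so a row pass followed by a column pass can connect any two pixels at much lower cost.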

EMS-Net: Efficient Multi-Temporal Self-Attention For Hyperspectral Change Detection

Mar 24, 2023

Abstract:Hyperspectral change detection plays an essential role in monitoring dynamic urban development and detecting the precise evolution and alteration of fine objects. In this paper, we propose an original Efficient Multi-temporal Self-attention Network (EMS-Net) for hyperspectral change detection. The designed EMS module cuts the redundancy of similar feature maps that contain no changes, computing efficient multi-temporal change information for a precise binary change map. Besides, to explore the clustering characteristics of change detection, a novel supervised contrastive loss is provided to enhance the compactness of the unchanged. Experiments on two hyperspectral change detection datasets demonstrate the outstanding performance and validity of the proposed method.

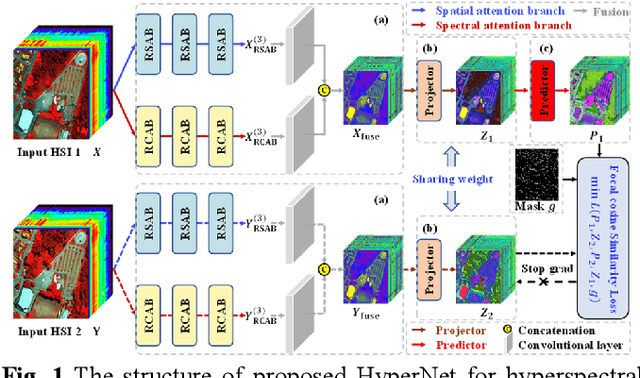

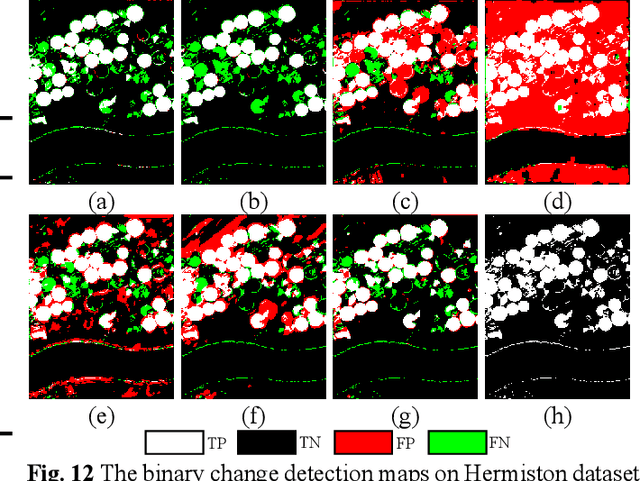

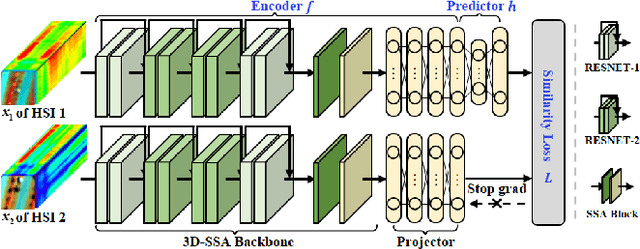

HyperNet: Self-Supervised Hyperspectral Spatial-Spectral Feature Understanding Network for Hyperspectral Change Detection

Jul 20, 2022

Abstract:The fast development of self-supervised learning lowers the bar for learning feature representations from massive unlabeled data and has triggered a series of studies on change detection of remote sensing images. Challenges in adapting self-supervised learning from natural image classification to remote sensing image change detection arise from the differences between the two tasks. The learned patch-level feature representations are not satisfactory for precise pixel-level change detection. In this paper, we propose a novel pixel-level self-supervised hyperspectral spatial-spectral understanding network (HyperNet) to accomplish pixel-wise feature representation for effective hyperspectral change detection. Concretely, not patches but the whole images are fed into the network and the multi-temporal spatial-spectral features are compared pixel by pixel. Instead of processing the two-dimensional imaging space and spectral response dimension in hybrid style, a powerful spatial-spectral attention module is put forward to explore the spatial correlation and discriminative spectral features of multi-temporal hyperspectral images (HSIs), separately. Only the positive samples at the same location of bi-temporal HSIs are created and forced to be aligned, aiming at learning the spectral difference-invariant features. Moreover, a new similarity loss function named focal cosine is proposed to solve the problem of imbalanced easy and hard positive samples comparison, where the weights of the hard samples are enlarged and highlighted to promote the network training. Six hyperspectral datasets have been adopted to test the validity and generalization of the proposed HyperNet. The extensive experiments demonstrate the superiority of HyperNet over the state-of-the-art algorithms on downstream hyperspectral change detection tasks.

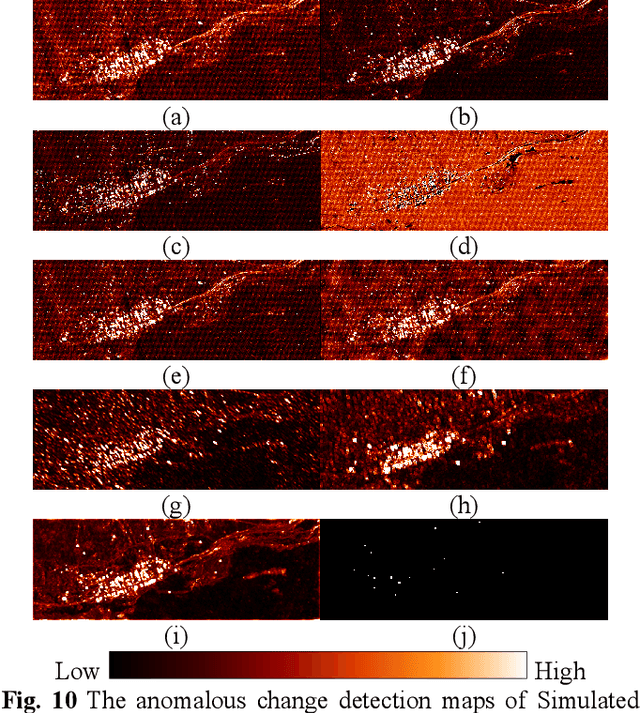

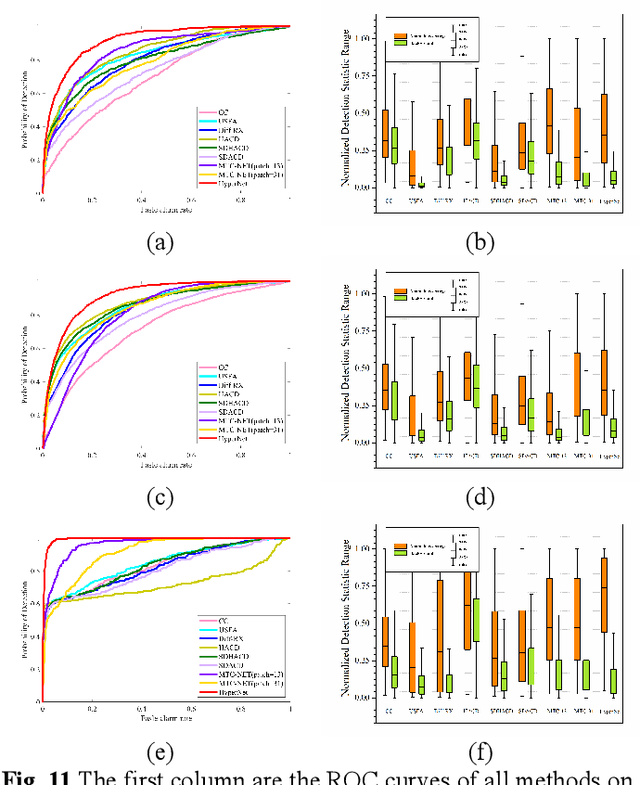

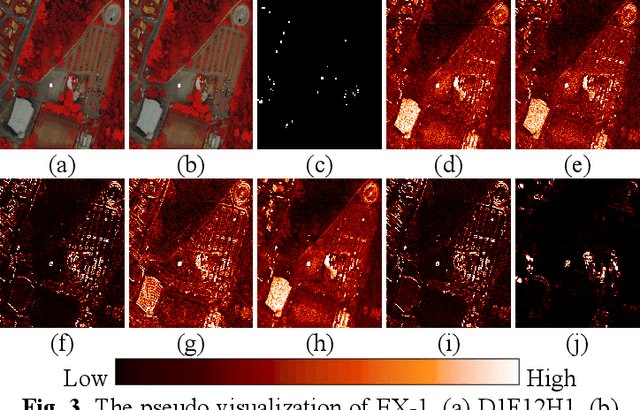

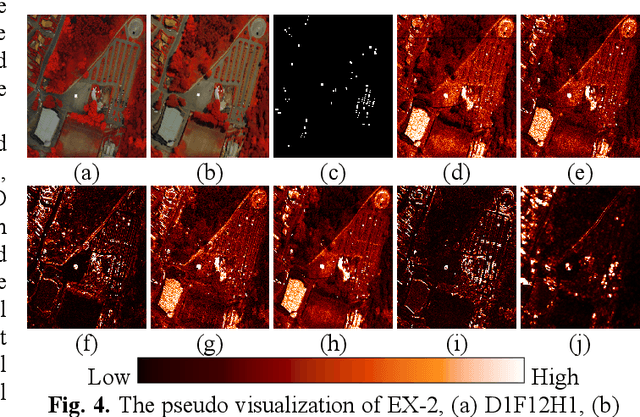

Multi-Temporal Spatial-Spectral Comparison Network for Hyperspectral Anomalous Change Detection

May 23, 2022

Abstract:Hyperspectral anomalous change detection has been a challenging task for its emphasis on the dynamics of small and rare objects against the prevalent changes. In this paper, we propose a Multi-Temporal spatial-spectral Comparison Network for hyperspectral anomalous change detection (MTC-NET). The whole model is a deep siamese network that uses contrastive learning to learn, from the hyperspectral images, the prevalent spectral difference resulting from the complex imaging conditions. A three-dimensional spatial spectral attention module is designed to effectively extract the spatial semantic information and the key spectral differences. Then the gaps between the multi-temporal features are minimized, boosting the alignment of the semantic and spectral features and the suppression of the multi-temporal background spectral difference. The experiments on the "Viareggio 2013" datasets demonstrate the effectiveness of the proposed MTC-NET.
