Matias Quintana

It is not always greener on the other side: Greenery perception across demographics and personalities in multiple cities

Dec 19, 2025

Abstract:Quantifying and assessing urban greenery is consequential for planning and development, reflecting the everlasting importance of green spaces for multiple climate and well-being dimensions of cities. Evaluation can be broadly grouped into objective (e.g., measuring the amount of greenery) and subjective (e.g., polling the perception of people) approaches, which may differ -- what people see and feel about how green a place is might not match the measurements of the actual amount of vegetation. In this work, we advance the state of the art by measuring such differences and explaining them through human, geographic, and spatial dimensions. The experiments rely on contextual information extracted from street view imagery and a comprehensive urban visual perception survey collected from 1,000 people across five countries with their extensive demographic and personality information. We analyze the discrepancies between objective measures (e.g., Green View Index (GVI)) and subjective scores (e.g., pairwise ratings), examining whether they can be explained by a variety of human and visual factors such as age group and spatial variation of greenery in the scene. The findings reveal that such discrepancies are comparable around the world and that demographics and personality do not play a significant role in perception. Further, while perceived and measured greenery correlate consistently across geographies (both where people and where imagery are from), where people live plays a significant role in explaining perceptual differences, with these two as the top among seven features that influence perceived greenery the most. This location influence suggests that cultural, environmental, and experiential factors substantially shape how individuals observe greenery in cities.
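The Green View Index named in the abstract above is commonly computed as the share of image pixels classified as vegetation. The sketch below illustrates that common definition on a toy segmentation mask. It is not the authors' pipeline: the function name `green_view_index`, the label convention (1 = vegetation), and the toy mask are all assumptions made for illustration.

```python
from typing import FrozenSet, Sequence


def green_view_index(
    mask: Sequence[Sequence[int]],
    vegetation_labels: FrozenSet[int] = frozenset({1}),  # assumed label id
) -> float:
    """Return the fraction of pixels whose class label counts as vegetation."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("mask is empty")
    green = sum(1 for row in mask for label in row if label in vegetation_labels)
    return green / total


# Hypothetical 3x4 segmentation mask: 1 marks vegetation, 0 everything else.
toy_mask = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 1],
]
gvi = green_view_index(toy_mask)  # 6 vegetation pixels out of 12
```

In practice the mask would come from a semantic segmentation model applied to a street view image; a subjective score from pairwise ratings would then be compared against this value.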

It's not you, it's me -- Global urban visual perception varies across demographics and personalities

May 19, 2025

Abstract:Understanding people's preferences and needs is crucial for urban planning decisions, yet current approaches often combine them from multi-cultural and multi-city populations, obscuring important demographic differences and risking amplifying biases. We conducted a large-scale urban visual perception survey of streetscapes worldwide using street view imagery, examining how demographics -- including gender, age, income, education, race and ethnicity, and, for the first time, personality traits -- shape perceptions among 1,000 participants, with balanced demographics, from five countries and 45 nationalities. This dataset, introduced as Street Perception Evaluation Considering Socioeconomics (SPECS), exhibits statistically significant differences in perception scores in six traditionally used indicators (safe, lively, wealthy, beautiful, boring, and depressing) and four new ones we propose (live nearby, walk, cycle, green) among demographics and personalities. We revealed that location-based sentiments are carried over in people's preferences when comparing urban streetscapes with other cities. Further, we compared the perception scores based on where participants and streetscapes are from. We found that an off-the-shelf machine learning model trained on an existing global perception dataset tends to overestimate positive indicators and underestimate negative ones compared to human responses, suggesting that targeted intervention should consider locals' perception. Our study aspires to rectify the myopic treatment of street perception, which rarely considers demographics or personality traits.

ZenSVI: An Open-Source Software for the Integrated Acquisition, Processing and Analysis of Street View Imagery Towards Scalable Urban Science

Dec 24, 2024

Abstract:Street view imagery (SVI) has been instrumental in many studies in the past decade to understand and characterize street features and the built environment. Researchers across a variety of domains, such as transportation, health, architecture, human perception, and infrastructure have employed different methods to analyze SVI. However, these applications and image-processing procedures have not been standardized, and solutions have been implemented in isolation, often making it difficult for others to reproduce existing work and carry out new research. Using SVI for research requires multiple technical steps: accessing APIs for scalable data collection, preprocessing images to standardize formats, implementing computer vision models for feature extraction, and conducting spatial analysis. These technical requirements create barriers for researchers in urban studies, particularly those without extensive programming experience. We develop ZenSVI, a free and open-source Python package that integrates and implements the entire process of SVI analysis, supporting a wide range of use cases. Its end-to-end pipeline includes downloading SVI from multiple platforms (e.g., Mapillary and KartaView) efficiently, analyzing metadata of SVI, applying computer vision models to extract target features, transforming SVI into different projections (e.g., fish-eye and perspective) and different formats (e.g., depth map and point cloud), visualizing analyses with maps and plots, and exporting outputs to other software tools. We demonstrate its use in Singapore through a case study of data quality assessment and clustering analysis in a streamlined manner. Our software improves the transparency, reproducibility, and scalability of research relying on SVI and supports researchers in conducting urban analyses efficiently. Its modular design facilitates extensions and unlocking new use cases.

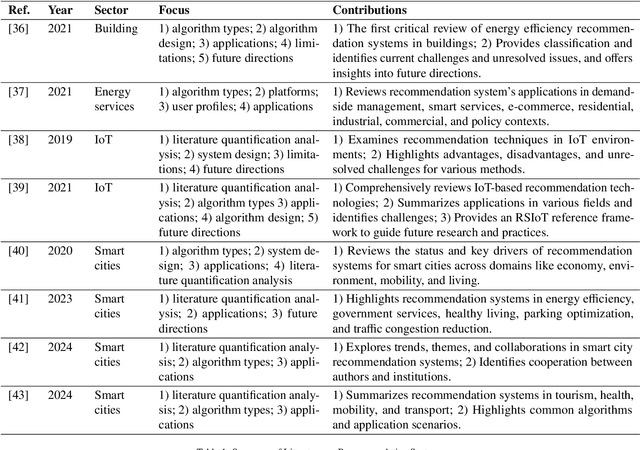

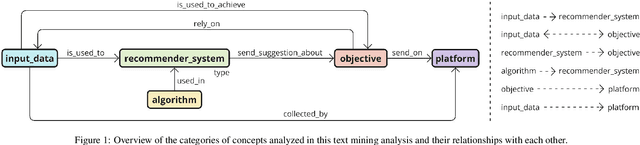

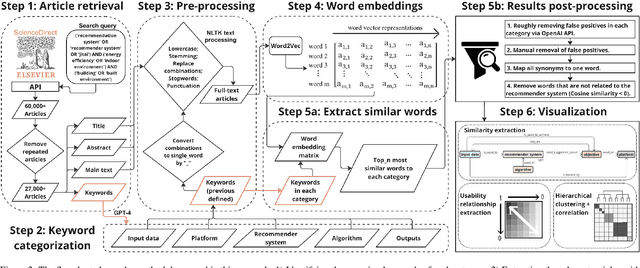

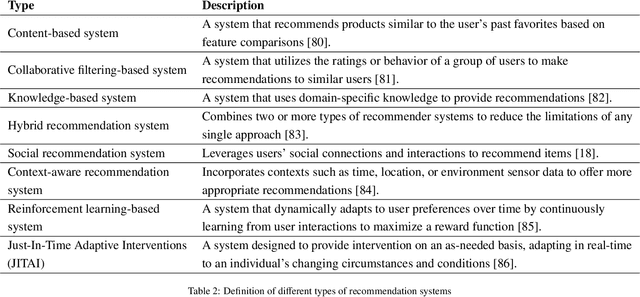

Recommender systems and reinforcement learning for building control and occupant interaction: A text-mining driven review of scientific literature

Nov 13, 2024

Abstract:The indoor environment greatly affects health and well-being; enhancing health and reducing energy use in these settings is a key research focus. With advancing Information and Communication Technology (ICT), recommendation systems and reinforcement learning have emerged as promising methods to induce behavioral changes that improve indoor environments and building energy efficiency. This study employs text-mining and Natural Language Processing (NLP) to examine these approaches in building control and occupant interaction. Analyzing approximately 27,000 articles from the ScienceDirect database, we found extensive use of recommendation systems and reinforcement learning for space optimization, location recommendations, and personalized control suggestions. Despite broad applications, their use in optimizing indoor environments and energy efficiency is limited. Traditional recommendation algorithms are commonly used, but optimizing indoor conditions and energy efficiency often requires advanced machine learning techniques like reinforcement and deep learning. This review highlights the potential for expanding recommender systems and reinforcement learning applications in buildings and indoor environments. Areas for innovation include predictive maintenance, building-related product recommendations, and optimizing environments for specific needs like sleep and productivity enhancements based on user feedback.

Creating synthetic energy meter data using conditional diffusion and building metadata

Mar 31, 2024

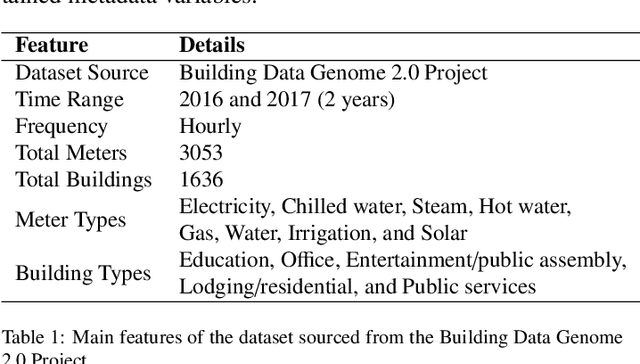

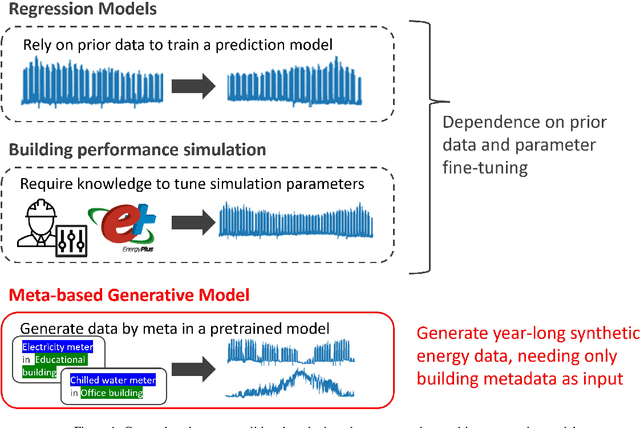

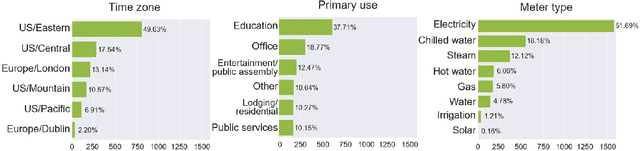

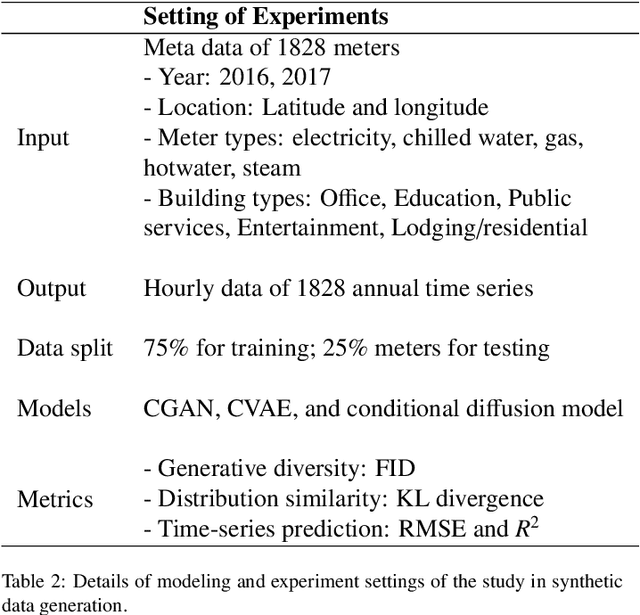

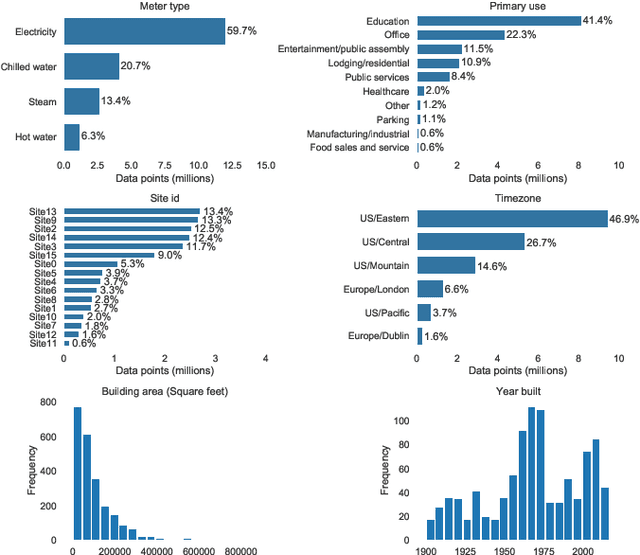

Abstract:Advances in machine learning and increased computational power have driven progress in energy-related research. However, limited access to private energy data from buildings hinders traditional regression models relying on historical data. While generative models offer a solution, previous studies have primarily focused on short-term generation periods (e.g., daily profiles) and a limited number of meters. Thus, the study proposes a conditional diffusion model for generating high-quality synthetic energy data using relevant metadata. Using a dataset comprising 1,828 power meters from various buildings and countries, this model is compared with traditional methods like Conditional Generative Adversarial Networks (CGAN) and Conditional Variational Auto-Encoders (CVAE). It explicitly handles long-term annual consumption profiles, harnessing metadata such as location, weather, building, and meter type to produce coherent synthetic data that closely resembles real-world energy consumption patterns. The results demonstrate the proposed diffusion model's superior performance, with a 36% reduction in Frechet Inception Distance (FID) score and a 13% decrease in Kullback-Leibler divergence (KL divergence) compared to the following best method. The proposed method successfully generates high-quality energy data through metadata, and its code will be open-sourced, establishing a foundation for a broader array of energy data generation models in the future.
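One of the two metrics reported in the abstract above is the Kullback-Leibler divergence. As a minimal sketch of what that metric measures, the code below computes the discrete KL divergence D(P || Q) = sum_i p_i * log(p_i / q_i) between two histograms. The abstract does not say how the authors bin or compare their load profiles, so the function name `kl_divergence`, the smoothing constant `eps`, and the example histograms are assumptions for illustration only.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """Discrete KL divergence D(P || Q) in nats, after normalising both inputs."""
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    if any(v < 0 for v in p) or any(v < 0 for v in q):
        raise ValueError("histogram counts must be non-negative")
    p_sum, q_sum = sum(p), sum(q)
    if p_sum <= 0 or q_sum <= 0:
        raise ValueError("histograms must have positive total mass")
    total = 0.0
    for p_count, q_count in zip(p, q):
        p_i = p_count / p_sum
        q_i = max(q_count / q_sum, eps)  # floor avoids division by zero
        if p_i > 0:  # bins with p_i == 0 contribute nothing
            total += p_i * math.log(p_i / q_i)
    return total


# Hypothetical binned consumption values for real and synthetic meter data.
real_hist = [10, 30, 40, 20]
synthetic_hist = [12, 28, 38, 22]
score = kl_divergence(real_hist, synthetic_hist)  # small value: similar shapes
```

A value of zero means the two normalised histograms are identical; larger values indicate that the synthetic distribution departs further from the real one.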

Opening the Black Box: Towards inherently interpretable energy data imputation models using building physics insight

Nov 28, 2023

Abstract:Missing data are frequently observed by practitioners and researchers in the building energy modeling community. In this regard, advanced data-driven solutions, such as Deep Learning methods, are typically required to reflect the non-linear behavior of these anomalies. As an ongoing research question related to Deep Learning, a model's applicability to limited data settings can be explored by introducing prior knowledge in the network. This same strategy can also lead to more interpretable predictions, hence facilitating the field application of the approach. For that purpose, the aim of this paper is to propose the use of Physics-informed Denoising Autoencoders (PI-DAE) for missing data imputation in commercial buildings. In particular, the presented method enforces physics-inspired soft constraints to the loss function of a Denoising Autoencoder (DAE). In order to quantify the benefits of the physical component, an ablation study between different DAE configurations is conducted. First, three univariate DAEs are optimized separately on indoor air temperature, heating, and cooling data. Then, two multivariate DAEs are derived from the previous configurations. Eventually, a building thermal balance equation is coupled to the last multivariate configuration to obtain PI-DAE. Additionally, two commonly used benchmarks are employed to support the findings. It is shown how introducing physical knowledge in a multivariate Denoising Autoencoder can enhance the inherent model interpretability through the optimized physics-based coefficients. While no significant improvement is observed in terms of reconstruction error with the proposed PI-DAE, its enhanced robustness to varying rates of missing data and the valuable insights derived from the physics-based coefficients create opportunities for wider applications within building systems and the built environment.

Filling time-series gaps using image techniques: Multidimensional context autoencoder approach for building energy data imputation

Jul 13, 2023

Abstract:Building energy prediction and management has become increasingly important in recent decades, driven by the growth of Internet of Things (IoT) devices and the availability of more energy data. However, energy data is often collected from multiple sources and can be incomplete or inconsistent, which can hinder accurate predictions and management of energy systems and limit the usefulness of the data for decision-making and research. To address this issue, past studies have focused on imputing missing gaps in energy data, including random and continuous gaps. One of the main challenges in this area is the lack of validation on a benchmark dataset with various building and meter types, making it difficult to accurately evaluate the performance of different imputation methods. Another challenge is the lack of application of state-of-the-art imputation methods for missing gaps in energy data. Contemporary image-inpainting methods, such as Partial Convolution (PConv), have been widely used in the computer vision domain and have demonstrated their effectiveness in dealing with complex missing patterns. To study whether energy data imputation can benefit from the image-based deep learning method, this study compared PConv, Convolutional Neural Networks (CNNs), and the weekly persistence method using one of the biggest publicly available whole building energy datasets, consisting of 1479 power meters worldwide, as the benchmark. The results show that, compared to the CNN with the raw time series (1D-CNN) and the weekly persistence method, neural network models using energy data reshaped into two dimensions reduced the Mean Squared Error (MSE) by 10% to 30%. The advanced deep learning method, Partial Convolution (PConv), further reduced the MSE by 20-30% relative to the 2D-CNN and stands out among all models.
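Two ideas from the abstract above can be sketched in a few lines: a weekly persistence baseline, read here as filling a gap with the reading from exactly one week earlier, and reshaping a 1D meter series into a 2D day-by-hour grid so that image-style models can be applied. The abstract does not give the exact formulation of either step, so the hourly sampling rate, the function names `weekly_persistence` and `reshape_to_days`, and the toy data are assumptions.

```python
from typing import List, Optional

HOURS_PER_WEEK = 7 * 24  # assumes hourly meter readings


def weekly_persistence(
    series: List[Optional[float]], period: int = HOURS_PER_WEEK
) -> List[Optional[float]]:
    """Fill each missing value (None) with the value one period earlier.

    Gaps in the first period stay None because no earlier week exists.
    Filling runs left to right, so a value filled in one week can be
    reused to fill the same hour of a later week.
    """
    filled = list(series)
    for i, value in enumerate(filled):
        if value is None and i >= period:
            filled[i] = filled[i - period]
    return filled


def reshape_to_days(
    series: List[Optional[float]], hours_per_day: int = 24
) -> List[List[Optional[float]]]:
    """Reshape a 1D hourly series into rows of one day each (days x hours)."""
    if len(series) % hours_per_day != 0:
        raise ValueError("series length must be a whole number of days")
    return [
        series[start:start + hours_per_day]
        for start in range(0, len(series), hours_per_day)
    ]


# Toy data: two weeks of hourly readings with a single gap in the second week.
readings: List[Optional[float]] = [float(h) for h in range(2 * HOURS_PER_WEEK)]
readings[200] = None
filled = weekly_persistence(readings)  # gap takes the value at index 200 - 168
grid = reshape_to_days(filled)         # 14 rows of 24 hourly values
```

The 2D grid places the same hour of consecutive days in one column, which is what lets an inpainting-style model use both neighbouring hours and neighbouring days as context.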

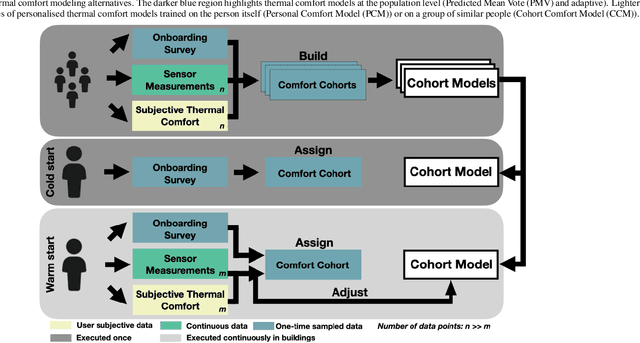

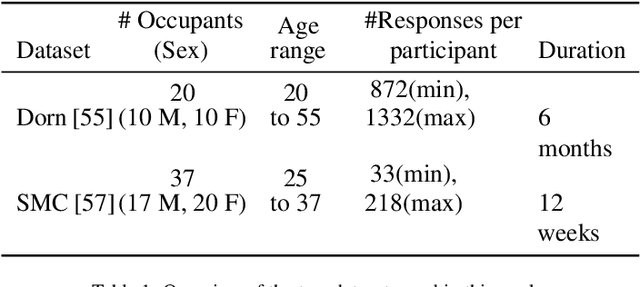

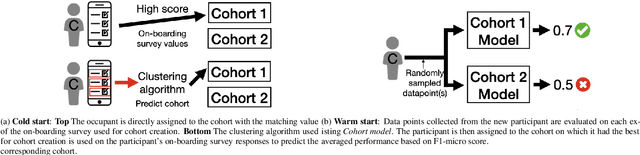

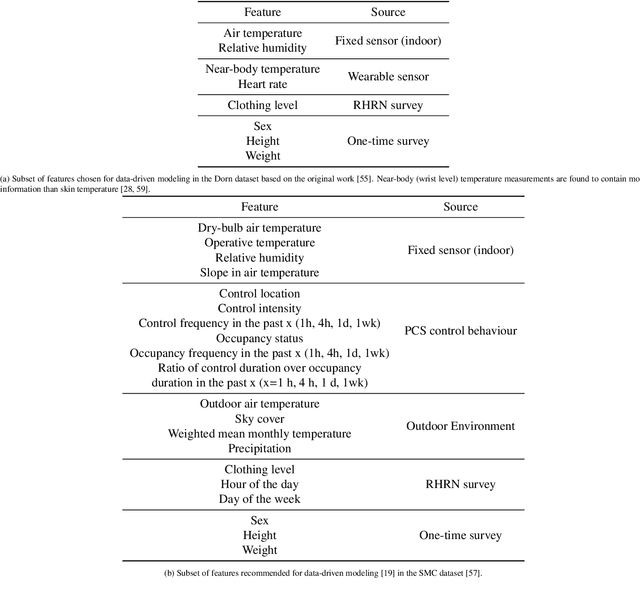

Cohort comfort models -- Using occupants' similarity to predict personal thermal preference with less data

Aug 05, 2022

Abstract:We introduce Cohort Comfort Models, a new framework for predicting how new occupants would perceive their thermal environment. Cohort Comfort Models leverage historical data collected from a sample population, who have some underlying preference similarity, to predict thermal preference responses of new occupants. Our framework is capable of exploiting available background information such as physical characteristics and one-time on-boarding surveys (satisfaction with life scale, highly sensitive person scale, the Big Five personality traits) from the new occupant as well as physiological and environmental sensor measurements paired with thermal preference responses. We implemented our framework in two publicly available datasets containing longitudinal data from 55 people, comprising more than 6,000 individual thermal comfort surveys. We observed that a Cohort Comfort Model that uses background information provided very little change in thermal preference prediction performance but uses no historical data. On the other hand, for half and one third of each dataset's occupant population, Cohort Comfort Models, with less historical data from target occupants, improved thermal preference prediction by 8% and 5% on average, and by up to 36% and 46% for some occupants, when compared to general-purpose models trained on the whole population of occupants. The framework is presented in a data and site agnostic manner, with its different components easily tailored to the data availability of the occupants and the buildings. Cohort Comfort Models can be an important step towards personalization without the need to develop a personalized model for each new occupant.

ALDI++: Automatic and parameter-less discord and outlier detection for building energy load profiles

Mar 13, 2022

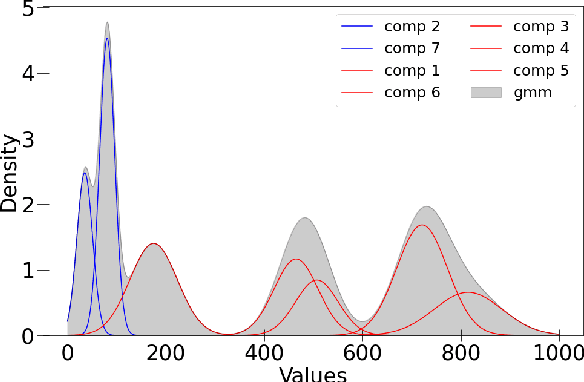

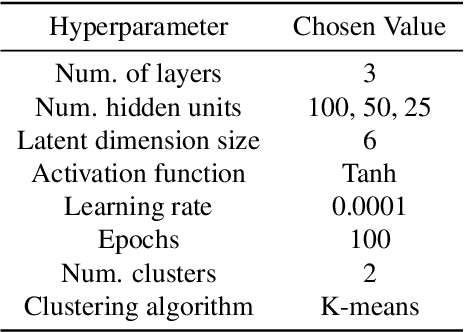

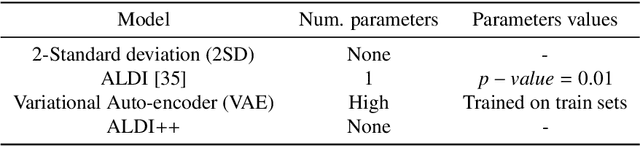

Abstract:Data-driven building energy prediction is an integral part of the process for measurement and verification, building benchmarking, and building-to-grid interaction. The ASHRAE Great Energy Predictor III (GEPIII) machine learning competition used an extensive meter data set to crowdsource the most accurate machine learning workflow for whole building energy prediction. A significant component of the winning solutions was the pre-processing phase to remove anomalous training data. Contemporary pre-processing methods focus on filtering statistical threshold values or deep learning methods requiring training data and multiple hyper-parameters. A recent method named ALDI (Automated Load profile Discord Identification) managed to identify these discords using matrix profile, but the technique still requires user-defined parameters. We develop ALDI++, a method based on the previous work that bypasses user-defined parameters and takes advantage of discord similarity. We evaluate ALDI++ against a statistical threshold, variational auto-encoder, and the original ALDI as baselines in classifying discords and energy forecasting scenarios. Our results demonstrate that while the classification performance improvement over the original method is marginal, ALDI++ helps achieve the best forecasting error, improving by 6% over the winning team's approach with six times less computation time.

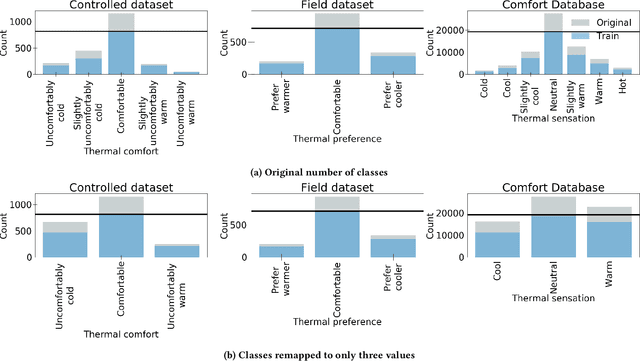

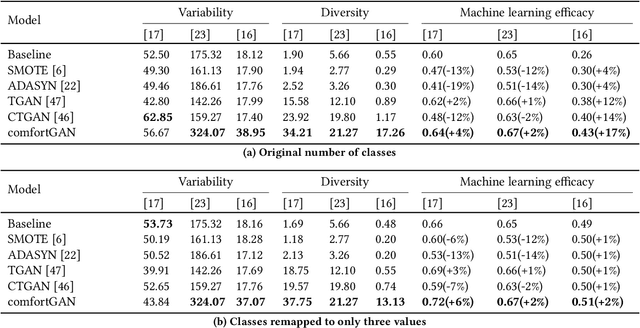

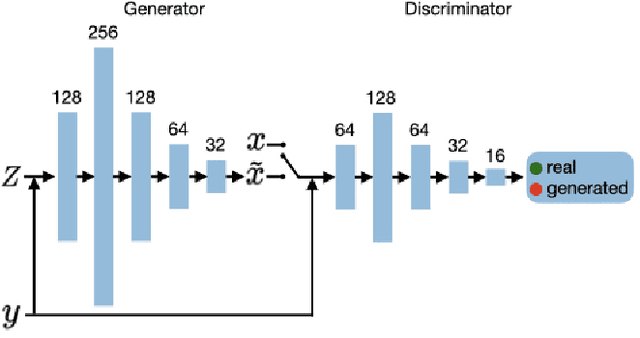

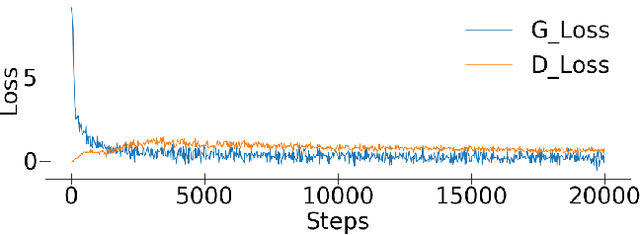

Balancing thermal comfort datasets: We GAN, but should we?

Sep 28, 2020

Abstract:Thermal comfort assessment for the built environment has become more available to analysts and researchers due to the proliferation of sensors and subjective feedback methods. These data can be used for modeling comfort behavior to support design and operations towards energy efficiency and well-being. By nature, occupant subjective feedback is imbalanced as indoor conditions are designed for comfort, and responses indicating otherwise are less common. This situation creates a scenario for the machine learning workflow where class balancing as a pre-processing step might be valuable for developing predictive thermal comfort classification models with high performance. This paper investigates the various thermal comfort dataset class balancing techniques from the literature and proposes a modified conditional Generative Adversarial Network (GAN), comfortGAN, to address this imbalance scenario. These approaches are applied to three publicly available datasets, ranging from 30 and 67 participants to a global collection of thermal comfort datasets, with 1,474; 2,067; and 66,397 data points, respectively. This work finds that a classification model trained on a balanced dataset, comprised of real and generated samples from comfortGAN, has higher performance (increase between 4% and 17% in classification accuracy) than other augmentation methods tested. However, when classes representing discomfort are merged and reduced to three, better imbalanced performance is expected, and the additional increase in performance by comfortGAN shrinks to 1-2%. These results illustrate that class balancing for thermal comfort modeling is beneficial using advanced techniques such as GANs, but its value is diminished in certain scenarios. A discussion is provided to assist potential users in determining in which scenarios this process is useful and which method works best.
