Masoumeh Zareapoor

Finding Structure in Continual Learning

Feb 04, 2026

Abstract:Learning from a stream of tasks usually pits plasticity against stability: acquiring new knowledge often causes catastrophic forgetting of past information. Most methods address this by summing competing loss terms, creating gradient conflicts that are managed with complex and often inefficient strategies such as external memory replay or parameter regularization. We propose a reformulation of the continual learning objective using Douglas-Rachford Splitting (DRS). This reframes the learning process not as a direct trade-off, but as a negotiation between two decoupled objectives: one promoting plasticity for new tasks and the other enforcing stability of old knowledge. By iteratively finding a consensus through their proximal operators, DRS provides a more principled and stable learning dynamic. Our approach achieves an efficient balance between stability and plasticity without the need for auxiliary modules or complex add-ons, providing a simpler yet more powerful paradigm for continual learning systems.
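As a rough illustration of the splitting idea, the sketch below runs the textbook Douglas-Rachford iteration on a toy problem. It is not the paper's method: the two quadratic objectives, the function names `prox_quadratic` and `douglas_rachford`, and the parameters `stability` and `gamma` are all assumptions chosen so that each proximal operator has a closed form.

```python
def prox_quadratic(v, center, weight, gamma):
    """Proximal operator of h(x) = 0.5 * weight * ||x - center||^2 with step gamma."""
    return [(vi + gamma * weight * ci) / (1.0 + gamma * weight)
            for vi, ci in zip(v, center)]


def douglas_rachford(new_opt, old_params, stability=1.0, gamma=1.0, iters=200):
    """Minimise f(x) + g(x) by Douglas-Rachford splitting.

    Toy assumptions (not taken from the paper):
      f(x) = 0.5 * ||x - new_opt||^2                  (plasticity: fit the new task)
      g(x) = 0.5 * stability * ||x - old_params||^2   (stability: stay near old weights)
    """
    z = list(old_params)
    x = list(old_params)
    for _ in range(iters):
        # Step 1: proximal step on the plasticity objective.
        x = prox_quadratic(z, new_opt, 1.0, gamma)
        # Step 2: proximal step on the stability objective at the reflected point.
        reflected = [2.0 * xi - zi for xi, zi in zip(x, z)]
        y = prox_quadratic(reflected, old_params, stability, gamma)
        # Step 3: move the auxiliary variable toward consensus (y == x at a fixed point).
        z = [zi + yi - xi for zi, yi, xi in zip(z, y, x)]
    return x


result = douglas_rachford([4.0, -2.0], [0.0, 0.0], stability=3.0)
```

For these quadratics the minimiser is available in closed form, `(new_opt + stability * old_params) / (1 + stability)`, which gives a simple check that the iteration reaches a consensus between the two objectives rather than summing their gradients.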

From Missing Pieces to Masterpieces: Image Completion with Context-Adaptive Diffusion

Apr 19, 2025

Abstract:Image completion is a challenging task, particularly when ensuring that generated content seamlessly integrates with existing parts of an image. While recent diffusion models have shown promise, they often struggle with maintaining coherence between known and unknown (missing) regions. This issue arises from the lack of explicit spatial and semantic alignment during the diffusion process, resulting in content that does not smoothly integrate with the original image. Additionally, diffusion models typically rely on global learned distributions rather than localized features, leading to inconsistencies between the generated and existing image parts. In this work, we propose ConFill, a novel framework that introduces a Context-Adaptive Discrepancy (CAD) model to ensure that intermediate distributions of known and unknown regions are closely aligned throughout the diffusion process. By incorporating CAD, our model progressively reduces discrepancies between generated and original images at each diffusion step, leading to contextually aligned completion. Moreover, ConFill uses a new Dynamic Sampling mechanism that adaptively increases the sampling rate in regions with high reconstruction complexity. This approach enables precise adjustments, enhancing detail and integration in restored areas. Extensive experiments demonstrate that ConFill outperforms current methods, setting a new benchmark in image completion.

Fractional Correspondence Framework in Detection Transformer

Mar 06, 2025

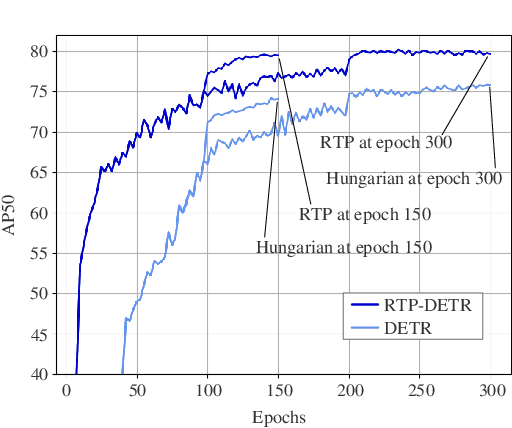

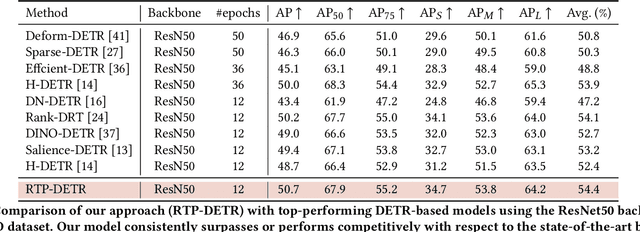

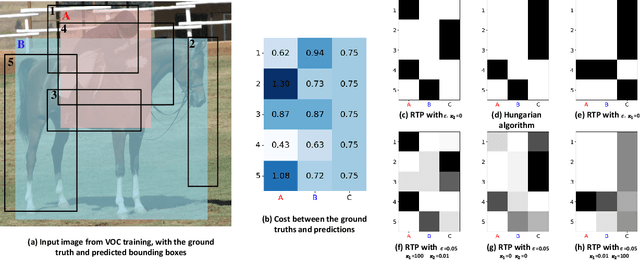

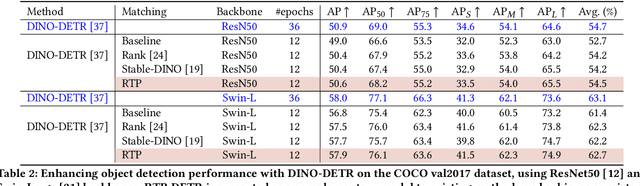

Abstract:The Detection Transformer (DETR), by incorporating the Hungarian algorithm, has significantly simplified the matching process in object detection tasks. This algorithm facilitates optimal one-to-one matching of predicted bounding boxes to ground-truth annotations during training. While effective, this strict matching process does not inherently account for the varying densities and distributions of objects, leading to suboptimal correspondences such as failing to handle multiple detections of the same object or missing small objects. To address this, we propose the Regularized Transport Plan (RTP). RTP introduces a flexible matching strategy that captures the cost of aligning predictions with ground truths to find the most accurate correspondences between these sets. By utilizing the differentiable Sinkhorn algorithm, RTP allows for soft, fractional matching rather than strict one-to-one assignments. This approach enhances the model's capability to manage varying object densities and distributions effectively. Our extensive evaluations on the MS-COCO and VOC benchmarks demonstrate the effectiveness of our approach. RTP-DETR surpasses the performance of Deform-DETR and the recently introduced DINO-DETR, achieving absolute gains in mAP of +3.8% and +1.7%, respectively.
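To make the idea of soft, fractional matching concrete, here is a minimal sketch of the standard Sinkhorn iteration for entropy-regularized optimal transport. It is not the RTP implementation: the cost values, the uniform marginals, the function name `sinkhorn`, and the `epsilon` setting are illustrative assumptions.

```python
import math


def sinkhorn(cost, epsilon=0.5, iters=200):
    """Entropy-regularised transport plan between n predictions and m ground truths.

    Assumes uniform marginals: each prediction carries mass 1/n and each
    ground truth receives mass 1/m (a hypothetical choice for this sketch).
    """
    n, m = len(cost), len(cost[0])
    # Gibbs kernel: low cost -> large affinity.
    K = [[math.exp(-c / epsilon) for c in row] for row in cost]
    a = [1.0 / n] * n
    b = [1.0 / m] * m
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


# Hypothetical matching costs: 3 predicted boxes (rows) vs. 2 ground-truth boxes (columns).
cost = [[0.1, 0.9],
        [0.8, 0.2],
        [0.5, 0.5]]
plan = sinkhorn(cost)
```

Each row of `plan` spreads a prediction's mass across all ground truths instead of committing to a single one, so the third prediction, which is equally close to both objects, contributes to both. A Hungarian assignment would force it onto one object or leave it unmatched.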

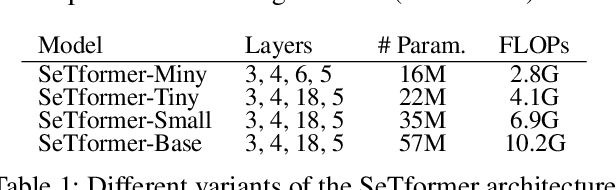

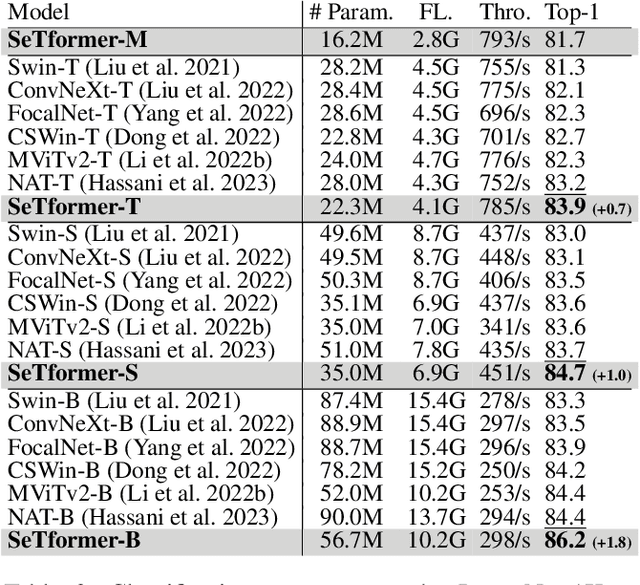

SeTformer is What You Need for Vision and Language

Jan 07, 2024

Abstract:The dot product self-attention (DPSA) is a fundamental component of transformers. However, scaling it to long sequences, such as documents or high-resolution images, becomes prohibitively expensive due to the quadratic time and memory complexity arising from the softmax operation. Kernel methods are employed to simplify computation by approximating softmax, but they often lead to performance drops compared to softmax attention. We propose SeTformer, a novel transformer in which DPSA is entirely replaced by Self-optimal Transport (SeT) to achieve better performance and computational efficiency. SeT is based on two essential softmax properties: maintaining a non-negative attention matrix and using a nonlinear reweighting mechanism to emphasize important tokens in input sequences. By introducing a kernel cost function for optimal transport, SeTformer effectively satisfies these properties. In particular, with small and base-sized models, SeTformer achieves impressive top-1 accuracies of 84.7% and 86.2% on ImageNet-1K. In object detection, SeTformer-base outperforms the FocalNet counterpart by +2.2 mAP, using 38% fewer parameters and 29% fewer FLOPs. In semantic segmentation, our base-size model surpasses NAT by +3.5 mIoU with 33% fewer parameters. SeTformer also achieves state-of-the-art results in language modeling on the GLUE benchmark. These findings highlight SeTformer's applicability in vision and language tasks.

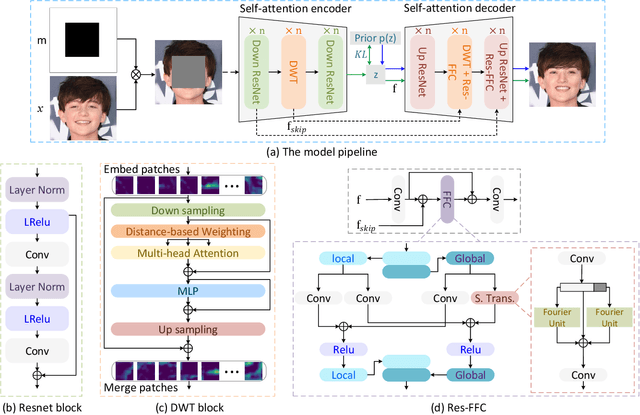

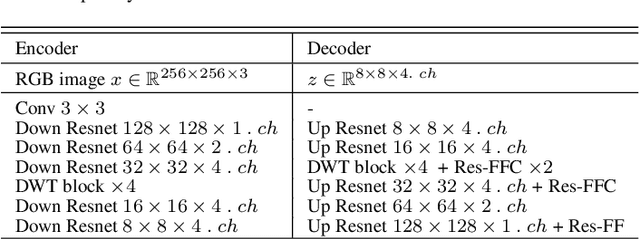

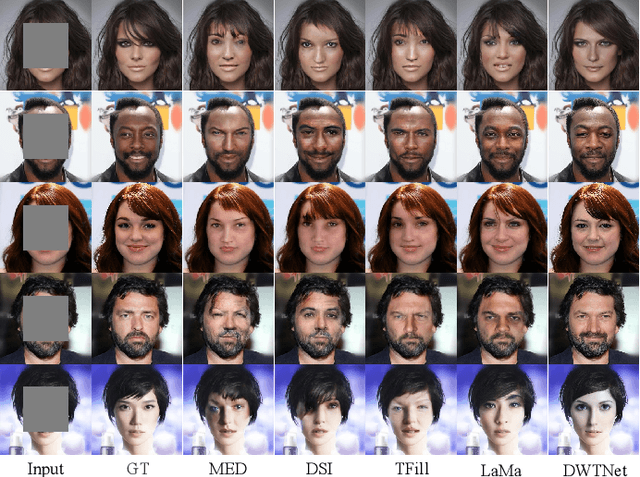

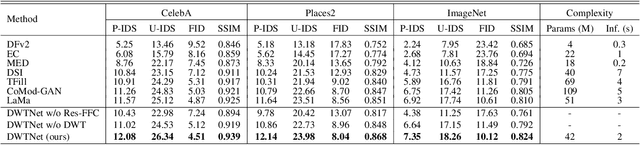

Distance Weighted Trans Network for Image Completion

Oct 25, 2023

Abstract:The challenge of image generation has been effectively modeled as a problem of structure priors or transformation. However, existing models have unsatisfactory performance in understanding the global input image structures because of particular inherent features (for example, local inductive prior). Recent studies have shown that self-attention is an efficient modeling technique for image completion problems. In this paper, we propose a new architecture that relies on Distance-based Weighted Transformer (DWT) to better understand the relationships between an image's components. In our model, we leverage the strengths of both Convolutional Neural Networks (CNNs) and DWT blocks to enhance the image completion process. Specifically, CNNs are used to augment the local texture information of coarse priors and DWT blocks are used to recover certain coarse textures and coherent visual structures. Unlike current approaches that generally use CNNs to create feature maps, we use the DWT to encode global dependencies and compute distance-based weighted feature maps, which substantially minimizes the problem of visual ambiguities. Meanwhile, to better produce repeated textures, we introduce Residual Fast Fourier Convolution (Res-FFC) blocks to combine the encoder's skip features with the coarse features provided by our generator. Furthermore, a simple yet effective technique is proposed to normalize the non-zero values of convolutions, and fine-tune the network layers for regularization of the gradient norms to provide an efficient training stabiliser. Extensive quantitative and qualitative experiments on three challenging datasets demonstrate the superiority of our proposed model compared to existing approaches.

Entropy Transformer Networks: A Learning Approach via Tangent Bundle Data Manifold

Jul 24, 2023

Abstract:This paper focuses on an accurate and fast interpolation approach for image transformation employed in the design of CNN architectures. Standard Spatial Transformer Networks (STNs) use bilinear or linear interpolation, which makes unrealistic assumptions about the underlying data distribution and leads to poor performance under scale variations. Moreover, STNs do not preserve the norm of gradients during propagation because they depend on sparse neighboring pixels. To address this problem, a novel Entropy STN (ESTN) is proposed that interpolates on the data manifold distributions. In particular, random samples are generated for each pixel in association with the tangent space of the data manifold, and a linear approximation of their intensity values is constructed with an entropy regularizer to compute the transformer parameters. A simple yet effective technique is also proposed to normalize the non-zero values of the convolution operation and to fine-tune the layers for gradient-norm regularization during training. Experiments on challenging benchmarks show that the proposed ESTN can improve predictive accuracy over a range of computer vision tasks, including image reconstruction and classification, while reducing the computational cost.
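For context on the baseline the abstract criticizes, the following sketch shows plain bilinear sampling of the kind a standard STN uses; it does not implement ESTN. The helper name `bilinear_sample`, the border clamping, and the tiny 2x2 image are assumptions made for illustration.

```python
import math


def bilinear_sample(image, x, y):
    """Sample a 2-D grid (list of rows) at a fractional (x, y) position.

    Only the four pixels surrounding (x, y) contribute, which is the
    "sparse neighboring pixels" dependency mentioned in the abstract.
    Coordinates outside the image are clamped to the border (an assumption).
    """
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    # Interpolate along x on the two bracketing rows, then along y.
    top = (1.0 - dx) * image[y0][x0] + dx * image[y0][x1]
    bottom = (1.0 - dx) * image[y1][x0] + dx * image[y1][x1]
    return (1.0 - dy) * top + dy * bottom


image = [[0.0, 10.0],
         [20.0, 30.0]]
center = bilinear_sample(image, 0.5, 0.5)  # average of the four pixels
```

Because the output depends on at most four pixel values, the gradient with respect to the sampling location is determined by those four values alone, however large the image is.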

Image Completion via Dual-path Cooperative Filtering

Apr 30, 2023

Abstract:Given the recent advances with image-generating algorithms, deep image completion methods have made significant progress. However, state-of-the-art methods typically provide poor cross-scene generalization, and generated masked areas often contain blurry artifacts. Predictive filtering is a method for restoring images, which predicts the most effective kernels based on the input scene. Motivated by this approach, we address image completion as a filtering problem. Deep feature-level semantic filtering is introduced to fill in missing information, while preserving local structure and generating visually realistic content. In particular, a Dual-path Cooperative Filtering (DCF) model is proposed, where one path predicts dynamic kernels, and the other path extracts multi-level features by using Fast Fourier Convolution to yield semantically coherent reconstructions. Experiments on three challenging image completion datasets show that our proposed DCF outperforms state-of-the-art methods.

VTAE: Variational Transformer Autoencoder with Manifolds Learning

Apr 03, 2023

Abstract:Deep generative models have demonstrated successful applications in learning non-linear data distributions through a number of latent variables. These models use a nonlinear function (generator) to map latent samples into the data space. On the other hand, the nonlinearity of the generator implies that the latent space shows an unsatisfactory projection of the data space, which results in poor representation learning. This weak projection, however, can be addressed by a Riemannian metric, and we show that geodesics computation and accurate interpolations between data samples on the Riemannian manifold can substantially improve the performance of deep generative models. In this paper, a Variational spatial-Transformer AutoEncoder (VTAE) is proposed to minimize geodesics on a Riemannian manifold and improve representation learning. In particular, we carefully design the variational autoencoder with an encoded spatial-Transformer to explicitly expand the latent variable model to data on a Riemannian manifold, and obtain global context modelling. Moreover, to have smooth and plausible interpolations while traversing between two different objects' latent representations, we propose a geodesic interpolation network, in contrast to existing models that use linear interpolation with inferior performance. Experiments on benchmarks show that our proposed model can improve predictive accuracy and versatility over a range of computer vision tasks, including image interpolation and reconstruction.

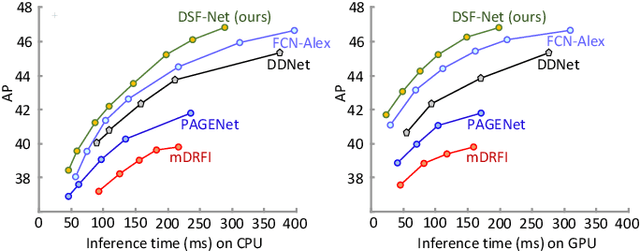

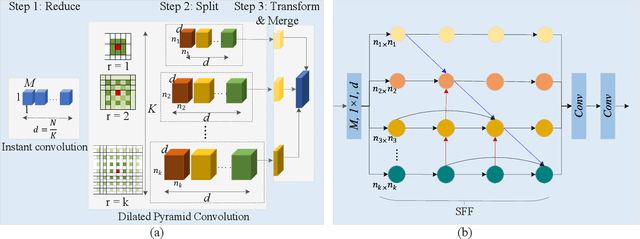

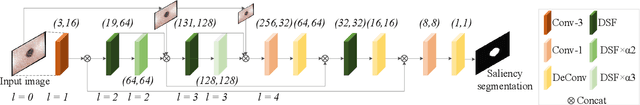

Salient Skin Lesion Segmentation via Dilated Scale-Wise Feature Fusion Network

May 20, 2022

Abstract:Skin lesion detection in dermoscopic images is essential in the accurate and early diagnosis of skin cancer by a computerized apparatus. Current skin lesion segmentation approaches show poor performance in challenging circumstances such as indistinct lesion boundaries, low contrast between the lesion and the surrounding area, or heterogeneous background that causes over/under segmentation of the skin lesion. To accurately recognize the lesion from the neighboring regions, we propose a dilated scale-wise feature fusion network based on convolution factorization. Our network is designed to simultaneously extract features at different scales which are systematically fused for better detection. The proposed model has satisfactory accuracy and efficiency. Various experiments for lesion segmentation are performed along with comparisons with the state-of-the-art models. Our proposed model consistently showcases state-of-the-art results.

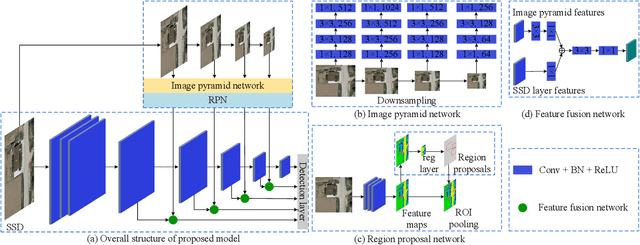

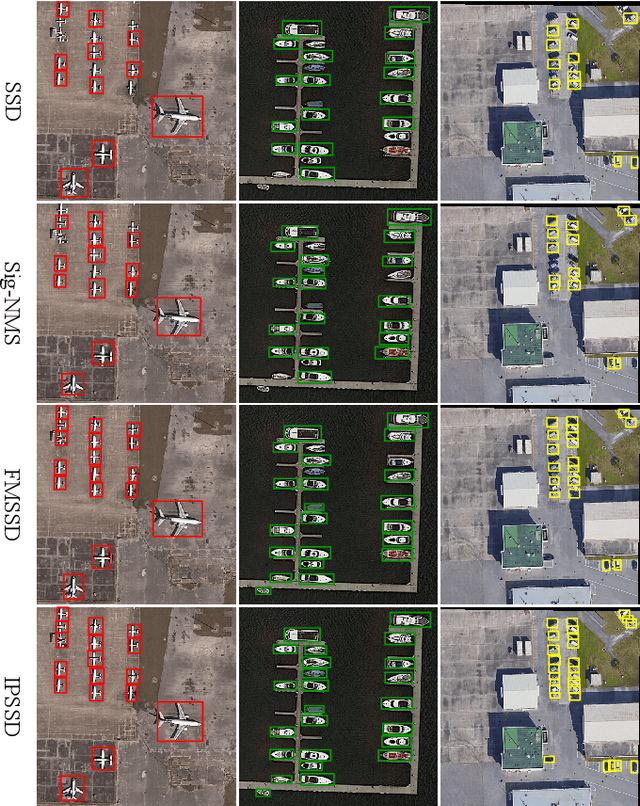

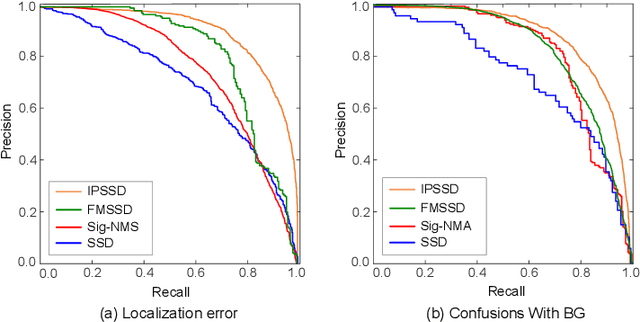

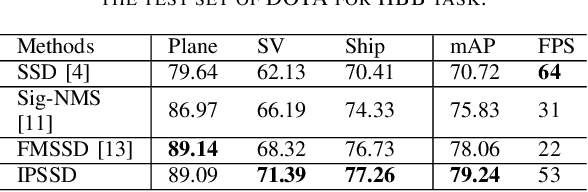

Enhanced Single-shot Detector for Small Object Detection in Remote Sensing Images

May 12, 2022

Abstract:Small-object detection is a challenging problem. In the last few years, convolutional neural network methods have achieved considerable progress. However, current detectors struggle with effective feature extraction for small-scale objects. To address this challenge, we propose the image pyramid single-shot detector (IPSSD). In IPSSD, a single-shot detector is combined with an image pyramid network to extract semantically strong features for generating candidate regions. The proposed network can enhance the small-scale features from a feature pyramid network. We evaluated the performance of the proposed model on two public datasets, and the results show the superior performance of our model compared to other state-of-the-art object detectors.
