Mary Czerwinski

MindScape Study: Integrating LLM and Behavioral Sensing for Personalized AI-Driven Journaling Experiences

Sep 15, 2024

Abstract: Mental health concerns are prevalent among college students, highlighting the need for effective interventions that promote self-awareness and holistic well-being. MindScape pioneers a novel approach to AI-powered journaling by integrating passively collected behavioral patterns such as conversational engagement, sleep, and location with Large Language Models (LLMs). This integration creates a highly personalized and context-aware journaling experience, enhancing self-awareness and well-being by embedding behavioral intelligence into AI. We present an 8-week exploratory study with 20 college students, demonstrating the MindScape app's efficacy in enhancing positive affect (7%), reducing negative affect (11%), loneliness (6%), and anxiety and depression, with a significant week-over-week decrease in PHQ-4 scores (-0.25 coefficient), alongside improvements in mindfulness (7%) and self-reflection (6%). The study highlights the advantages of contextual AI journaling, with participants particularly appreciating the tailored prompts and insights provided by the MindScape app. Our analysis also includes a comparison of responses to AI-driven contextual versus generic prompts, participant feedback insights, and proposed strategies for leveraging contextual AI journaling to improve well-being on college campuses. By showcasing the potential of contextual AI journaling to support mental health, we provide a foundation for further investigation into the effects of contextual AI journaling on mental health and well-being.

Exploration of LLMs, EEG, and behavioral data to measure and support attention and sleep

Aug 01, 2024

Abstract: We explore the application of large language models (LLMs), pre-trained models with massive textual data, for detecting and improving these altered states. We investigate the use of LLMs to estimate attention states, sleep stages, and sleep quality and generate sleep improvement suggestions and adaptive guided imagery scripts based on electroencephalogram (EEG) and physical activity data (e.g., waveforms, power spectrogram images, numerical features). Our results show that LLMs can estimate sleep quality based on human textual behavioral features and provide personalized sleep improvement suggestions and guided imagery scripts; however, detecting attention, sleep stages, and sleep quality based on EEG and activity data requires further training data and domain-specific knowledge.

Contextual AI Journaling: Integrating LLM and Time Series Behavioral Sensing Technology to Promote Self-Reflection and Well-being using the MindScape App

Mar 30, 2024

Abstract: MindScape aims to study the benefits of integrating time series behavioral patterns (e.g., conversational engagement, sleep, location) with Large Language Models (LLMs) to create a new form of contextual AI journaling, promoting self-reflection and well-being. We argue that integrating behavioral sensing in LLMs will likely lead to a new frontier in AI. In this Late-Breaking Work paper, we discuss the MindScape contextual journal App design that uses LLMs and behavioral sensing to generate contextual and personalized journaling prompts crafted to encourage self-reflection and emotional development. We also discuss the MindScape study of college students based on a preliminary user study and our upcoming study to assess the effectiveness of contextual AI journaling in promoting better well-being on college campuses. MindScape represents a new application class that embeds behavioral intelligence in AI.

From User Surveys to Telemetry-Driven Agents: Exploring the Potential of Personalized Productivity Solutions

Jan 17, 2024

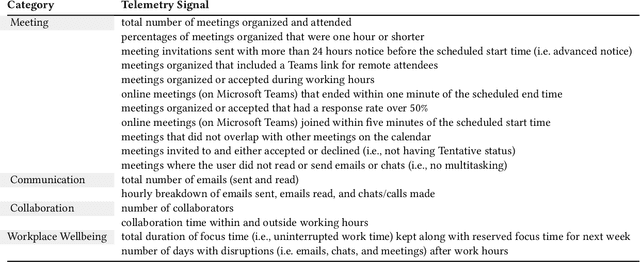

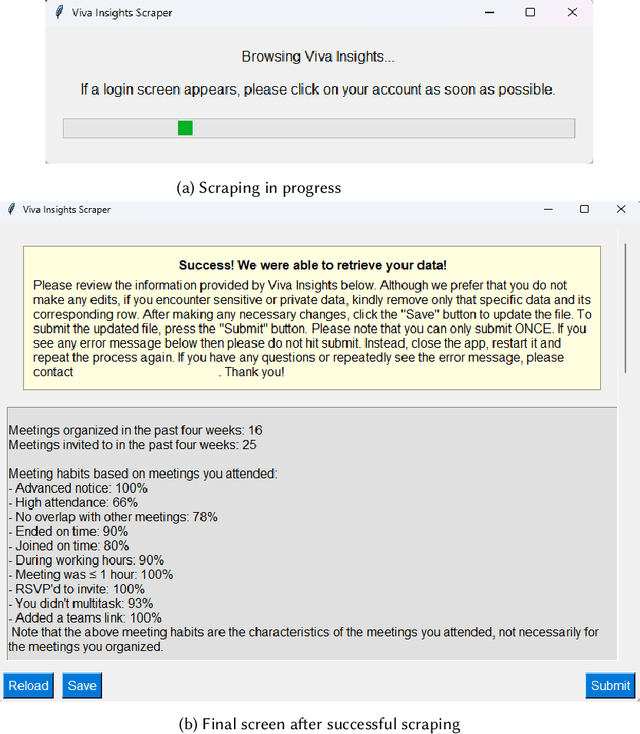

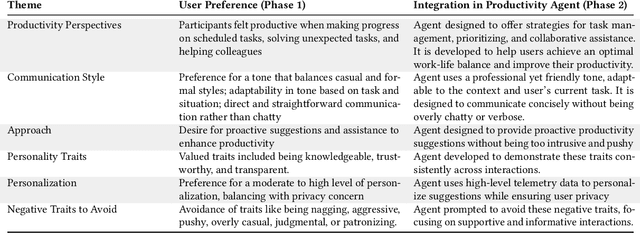

Abstract: We present a comprehensive, user-centric approach to understand preferences in AI-based productivity agents and develop personalized solutions tailored to users' needs. Utilizing a two-phase method, we first conducted a survey with 363 participants, exploring various aspects of productivity, communication style, agent approach, personality traits, personalization, and privacy. Drawing on the survey insights, we developed a GPT-4 powered personalized productivity agent that utilizes telemetry data gathered via Viva Insights from information workers to provide tailored assistance. We compared its performance with alternative productivity-assistive tools, such as dashboard and narrative, in a study involving 40 participants. Our findings highlight the importance of user-centric design, adaptability, and the balance between personalization and privacy in AI-assisted productivity tools. By building on the insights distilled from our study, we believe that our work can enable and guide future research to further enhance productivity solutions, ultimately leading to optimized efficiency and user experiences for information workers.

Affective Conversational Agents: Understanding Expectations and Personal Influences

Oct 19, 2023

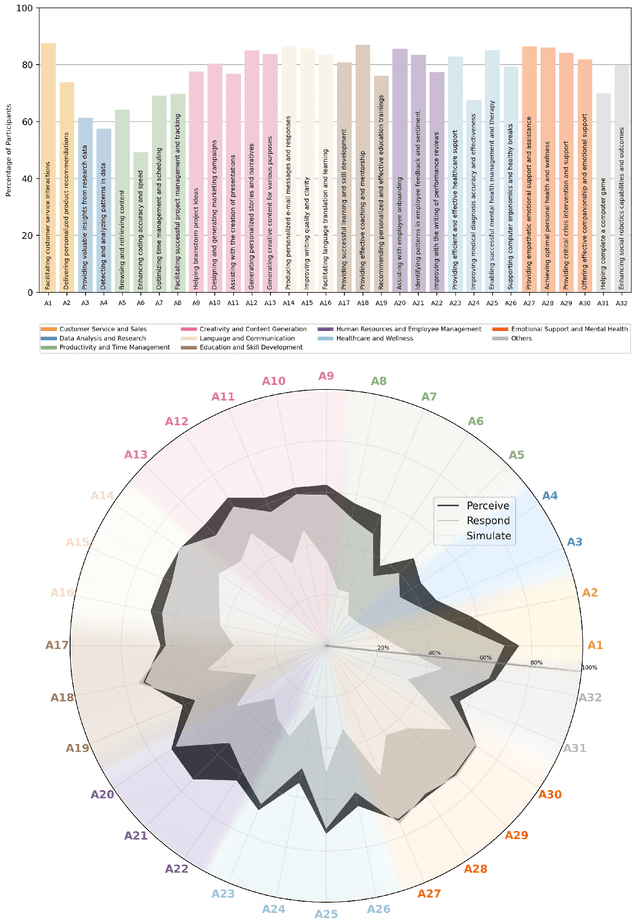

Abstract: The rise of AI conversational agents has broadened opportunities to enhance human capabilities across various domains. As these agents become more prevalent, it is crucial to investigate the impact of different affective abilities on their performance and user experience. In this study, we surveyed 745 respondents to understand the expectations and preferences regarding affective skills in various applications. Specifically, we assessed preferences concerning AI agents that can perceive, respond to, and simulate emotions across 32 distinct scenarios. Our results indicate a preference for scenarios that involve human interaction, emotional support, and creative tasks, with influences from factors such as emotional reappraisal and personality traits. Overall, the desired affective skills in AI agents depend largely on the application's context and nature, emphasizing the need for adaptability and context-awareness in the design of affective AI conversational agents.

Personal Productivity and Well-being -- Chapter 2 of the 2021 New Future of Work Report

Mar 03, 2021

Abstract: We now turn to understanding the impact that COVID-19 had on the personal productivity and well-being of information workers as their work practices were impacted by remote work. This chapter overviews people's productivity, satisfaction, and work patterns, and shows that the challenges and benefits of remote work are closely linked. Looking forward, the infrastructure surrounding work will need to evolve to help people adapt to the challenges of remote and hybrid work.

DeepFN: Towards Generalizable Facial Action Unit Recognition with Deep Face Normalization

Mar 03, 2021

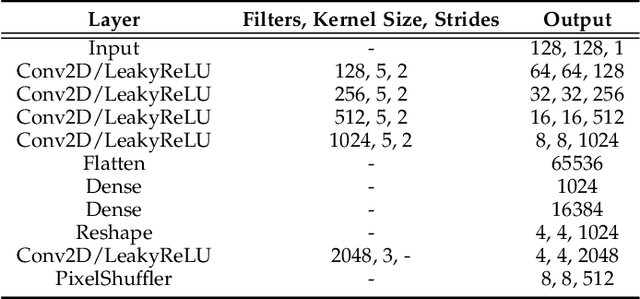

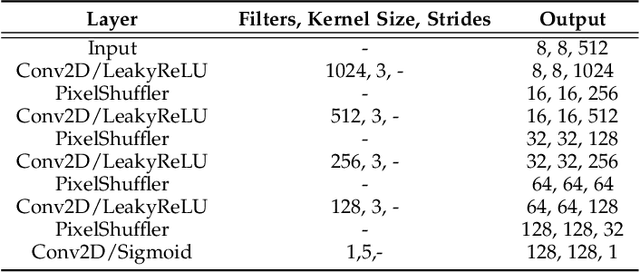

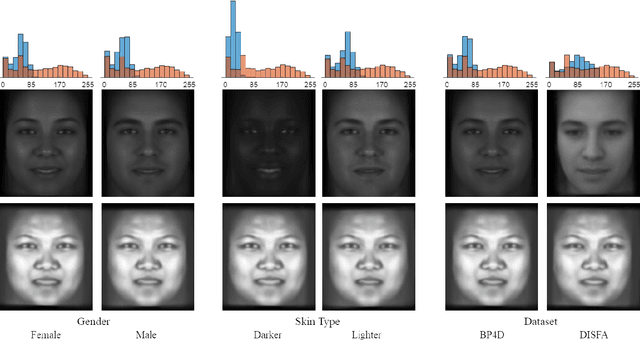

Abstract: Facial action unit recognition has many applications from market research to psychotherapy and from image captioning to entertainment. Despite its recent progress, deployment of these models has been impeded due to their limited generalization to unseen people and demographics. This work conducts an in-depth analysis of performance across several dimensions: individuals (40 subjects), genders (male and female), skin types (darker and lighter), and databases (BP4D and DISFA). To help suppress the variance in data, we use the notion of self-supervised denoising autoencoders to design a method for deep face normalization (DeepFN) that transfers facial expressions of different people onto a common facial template which is then used to train and evaluate facial action recognition models. We show that person-independent models yield significantly lower performance (55% average F1 and accuracy across 40 subjects) than person-dependent models (60.3%), leading to a generalization gap of 5.3%. However, normalizing the data with the newly introduced DeepFN significantly increased the performance of person-independent models (59.6%), effectively reducing the gap. Similarly, we observed generalization gaps when considering gender (2.4%), skin type (5.3%), and dataset (9.4%), which were significantly reduced with the use of DeepFN. These findings represent an important step towards the creation of more generalizable facial action unit recognition systems.

Designing Style Matching Conversational Agents

Oct 16, 2019

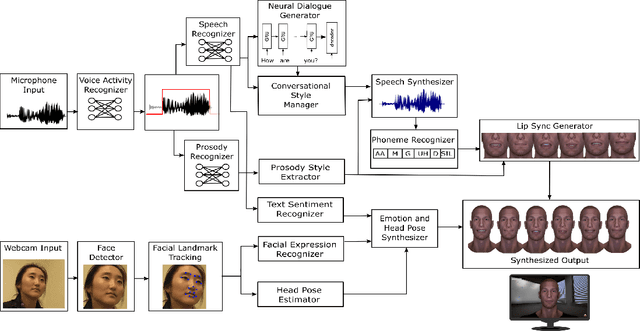

Abstract: Advances in machine intelligence have enabled conversational interfaces that have the potential to radically change the way humans interact with machines. However, even with the progress in the abilities of these agents, there remain critical gaps in their capacity for natural interactions. One limitation is that the agents are often monotonic in behavior and do not adapt to their partner. We built two end-to-end conversational agents: a voice-based agent that can engage in naturalistic, multi-turn dialogue and align with the interlocutor's conversational style, and a second, expressive, embodied conversational agent (ECA) that can recognize human behavior during open-ended conversations and automatically align its responses to the visual and conversational style of the other party. The embodied conversational agent leverages multimodal inputs to produce rich and perceptually valid vocal and facial responses (e.g., lip syncing and expressions) during the conversation. Based on empirical results from a set of user studies, we highlight several significant challenges in building such systems and provide design guidelines for multi-turn dialogue interactions using style adaptation for future research.
