Arvind Pillai

LENS: LLM-Enabled Narrative Synthesis for Mental Health by Aligning Multimodal Sensing with Language Models

Dec 28, 2025

Abstract: Multimodal health sensing offers rich behavioral signals for assessing mental health, yet translating these numerical time-series measurements into natural language remains challenging. Current LLMs cannot natively ingest long-duration sensor streams, and paired sensor-text datasets are scarce. To address these challenges, we introduce LENS, a framework that aligns multimodal sensing data with language models to generate clinically grounded mental-health narratives. LENS first constructs a large-scale dataset by transforming Ecological Momentary Assessment (EMA) responses related to depression and anxiety symptoms into natural-language descriptions, yielding over 100,000 sensor-text QA pairs from 258 participants. To enable native time-series integration, we train a patch-level encoder that projects raw sensor signals directly into an LLM's representation space. Our results show that LENS outperforms strong baselines on standard NLP metrics and task-specific measures of symptom-severity accuracy. A user study with 13 mental-health professionals further indicates that LENS-produced narratives are comprehensive and clinically meaningful. Ultimately, our approach advances LLMs as interfaces for health sensing, providing a scalable path toward models that can reason over raw behavioral signals and support downstream clinical decision-making.
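The patch-level encoder idea can be illustrated with a minimal sketch. Assuming a single sensor channel sampled once per minute, the stream is cut into fixed-length patches and each patch is linearly projected to a vector with the LLM's embedding width, so a day of data becomes a short sequence of "soft tokens". The names `patchify` and `PatchProjector`, the patch length, and the embedding width are hypothetical; the weights are random and untrained, and this is not LENS's actual encoder, which the abstract describes only as patch-level.

```python
import random
from typing import List


def patchify(signal: List[float], patch_len: int) -> List[List[float]]:
    """Split a 1-D sensor stream into non-overlapping patches, zero-padding the tail."""
    padded = list(signal) + [0.0] * (-len(signal) % patch_len)
    return [padded[i:i + patch_len] for i in range(0, len(padded), patch_len)]


class PatchProjector:
    """Hypothetical linear patch encoder: one d_model-dimensional vector per patch.

    Weights are randomly initialised (untrained); in a real system they would be
    learned so that the outputs are useful inputs to the LLM.
    """

    def __init__(self, patch_len: int, d_model: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        scale = 1.0 / patch_len ** 0.5
        self.patch_len = patch_len
        # weight has shape (d_model, patch_len); bias has shape (d_model,)
        self.weight = [[rng.gauss(0.0, scale) for _ in range(patch_len)]
                       for _ in range(d_model)]
        self.bias = [0.0] * d_model

    def __call__(self, signal: List[float]) -> List[List[float]]:
        # Each patch becomes one "soft token" in the LLM's embedding space.
        return [
            [sum(w * x for w, x in zip(row, patch)) + b
             for row, b in zip(self.weight, self.bias)]
            for patch in patchify(signal, self.patch_len)
        ]
```

With `patch_len=60`, a 1,440-minute day yields 24 embeddings, far fewer positions than a text serialization of every sample would need.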

MotionTeller: Multi-modal Integration of Wearable Time-Series with LLMs for Health and Behavioral Understanding

Dec 25, 2025

Abstract: As wearable sensing becomes increasingly pervasive, a key challenge remains: how can we generate natural-language summaries from raw physiological signals such as actigraphy (minute-level movement data collected via accelerometers)? In this work, we introduce MotionTeller, a generative framework that natively integrates minute-level wearable activity data with large language models (LLMs). MotionTeller combines a pretrained actigraphy encoder with a lightweight projection module that maps behavioral embeddings into the token space of a frozen decoder-only LLM, enabling free-text, autoregressive generation of daily behavioral summaries. We construct a novel dataset of 54,383 (actigraphy, text) pairs derived from real-world NHANES recordings and train the model using cross-entropy loss with supervision only on the language tokens. MotionTeller achieves high semantic fidelity (BERTScore-F1 = 0.924) and lexical accuracy (ROUGE-1 = 0.722), outperforming prompt-based baselines by 7 percent in ROUGE-1. The average training loss converges to 0.38 by epoch 15, indicating stable optimization. Qualitative analysis confirms that MotionTeller captures circadian structure and behavioral transitions, while PCA plots reveal enhanced cluster alignment in the embedding space after training. Together, these results position MotionTeller as a scalable, interpretable system for transforming wearable sensor data into fluent, human-centered descriptions, introducing new pathways for behavioral monitoring, clinical review, and personalized health interventions.
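Supervising "only on the language tokens" typically means that positions holding projected sensor embeddings contribute nothing to the loss. A minimal pure-Python sketch, assuming the common convention of marking ignored positions with the label `-100` and assuming labels are already shifted so that each position's logits predict its label; `masked_cross_entropy` and `build_labels` are hypothetical helpers, not MotionTeller's code.

```python
import math
from typing import List, Sequence

IGNORE = -100  # assumed label value for positions excluded from the loss


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary row."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def build_labels(num_sensor_tokens: int, text_token_ids: List[int]) -> List[int]:
    """Sensor-prefix positions are ignored; only text tokens are supervised."""
    return [IGNORE] * num_sensor_tokens + list(text_token_ids)


def masked_cross_entropy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-likelihood over positions whose label is not IGNORE.

    Assumes labels are already aligned (shifted) with the logits at each position.
    """
    if len(logits) != len(labels):
        raise ValueError("logits and labels must have the same length")
    total, count = 0.0, 0
    for row, label in zip(logits, labels):
        if label == IGNORE:
            continue  # sensor-embedding position: contributes no loss
        total -= log_softmax(row)[label]
        count += 1
    if count == 0:
        raise ValueError("no supervised positions")
    return total / count
```

Because the sensor prefix is masked, the loss is driven entirely by how well the model predicts the summary text conditioned on the projected actigraphy embeddings.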

Beyond Prompting: Time2Lang -- Bridging Time-Series Foundation Models and Large Language Models for Health Sensing

Feb 12, 2025

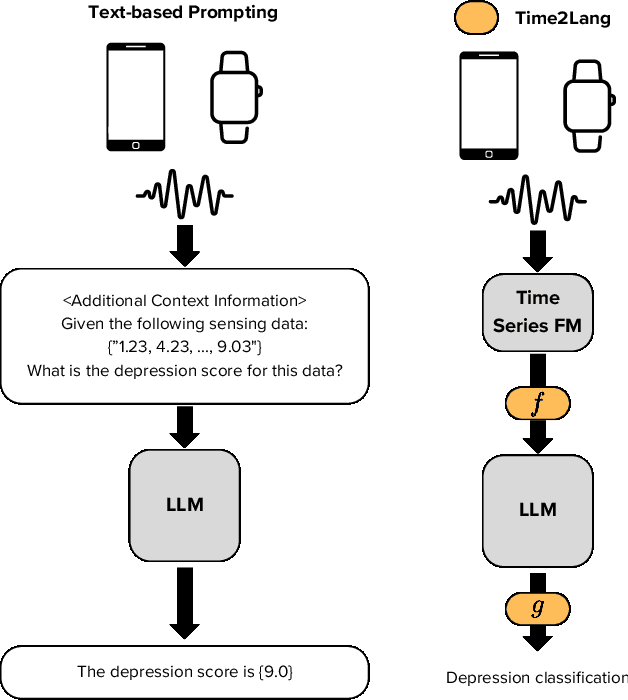

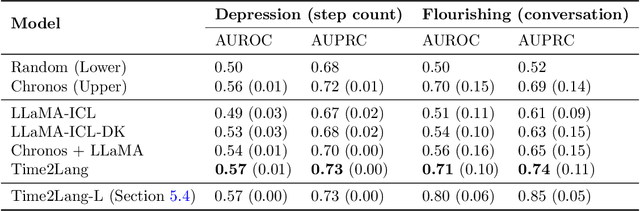

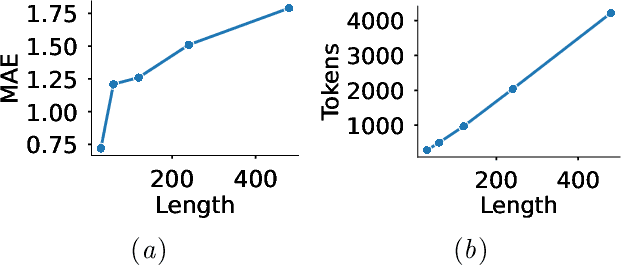

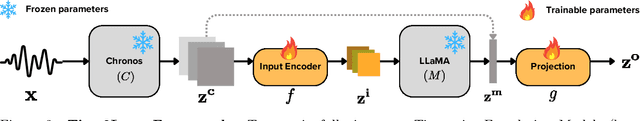

Abstract: Large language models (LLMs) show promise for health applications when combined with behavioral sensing data. Traditional approaches convert sensor data into text prompts, but this process is error-prone, computationally expensive, and requires domain expertise. These challenges are particularly acute for long time series. While time-series foundation models (TFMs) have recently emerged as powerful tools for learning representations from temporal data, bridging TFMs and LLMs remains challenging. Here, we present Time2Lang, a framework that directly maps TFM outputs to LLM representations without intermediate text conversion. Our approach first trains on synthetic data using periodicity prediction as a pretext task and is then evaluated on mental-health classification tasks. We validate Time2Lang on two longitudinal wearable and mobile sensing datasets: daily depression prediction using step-count data (17,251 days from 256 participants) and flourishing classification based on conversation duration (46 participants over 10 weeks). Unlike traditional prompting methods, Time2Lang maintains near-constant inference time regardless of input length. The generated embeddings preserve essential time-series characteristics such as autocorrelation. Our results demonstrate that TFMs and LLMs can be effectively integrated while minimizing information loss and enabling performance transfer across these distinct modeling paradigms. To our knowledge, we are the first to integrate a TFM and an LLM for health, establishing a foundation for future research that combines general-purpose large models for complex healthcare tasks.
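The pretext task and the autocorrelation property can both be illustrated with a small sketch. Assuming noiseless sinusoids as synthetic data, each series carries its period as a free label, and the sample autocorrelation function (ACF) recovers that period from the series itself. The helpers below are hypothetical and show neither Time2Lang's synthetic-data generator nor its evaluation protocol.

```python
import math
from typing import List


def synthetic_periodic(period: int, length: int, amplitude: float = 1.0) -> List[float]:
    """Noiseless sinusoid; its period can serve as a pretext-task label."""
    return [amplitude * math.sin(2 * math.pi * t / period) for t in range(length)]


def autocorrelation(x: List[float], lag: int) -> float:
    """Biased sample autocorrelation at the given lag (1.0 at lag 0)."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    if var == 0:
        return 0.0
    cov = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
    return cov / var


def estimate_period(x: List[float]) -> int:
    """Lag with the highest ACF after the ACF first turns negative."""
    max_lag = len(x) // 2
    acf = [autocorrelation(x, k) for k in range(max_lag + 1)]
    start = next((k for k in range(1, max_lag + 1) if acf[k] < 0), None)
    if start is None:
        raise ValueError("no periodic structure detected")
    return max(range(start, max_lag + 1), key=lambda k: acf[k])
```

For a period-24 series of length 240 (for example, 10 days of hourly data), `estimate_period` returns 24.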

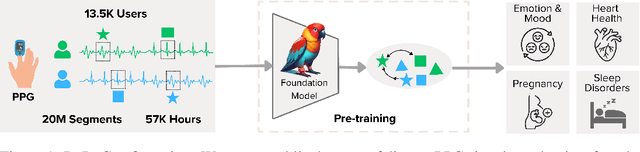

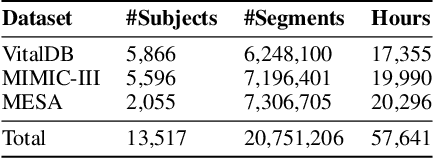

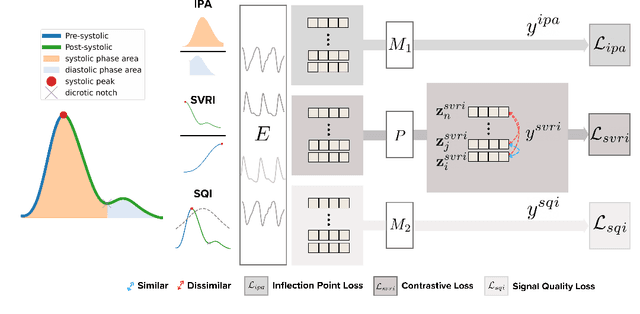

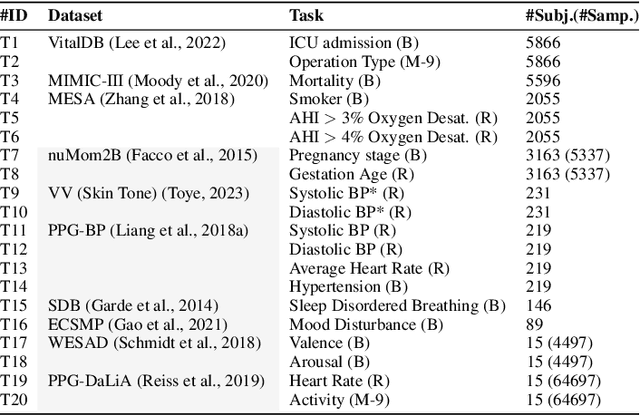

PaPaGei: Open Foundation Models for Optical Physiological Signals

Oct 27, 2024

Abstract: Photoplethysmography (PPG) is the most widely used non-invasive technique for monitoring biosignals and cardiovascular health, with applications in both clinical settings and consumer health through wearable devices. Current machine learning models trained on PPG signals are mostly task-specific and lack generalizability. Previous works often used single-device datasets, did not explore out-of-domain generalization, or did not release their models, hindering reproducibility and further research. We introduce PaPaGei, the first open foundation model for PPG signals. PaPaGei is pre-trained exclusively on publicly available datasets, comprising more than 57,000 hours of PPG signals (20 million unlabeled segments). We evaluate it against popular time-series foundation models and other benchmarks on 20 tasks drawn from 10 diverse datasets spanning cardiovascular health, sleep disorders, pregnancy monitoring, and wellbeing assessment. Our architecture incorporates novel representation learning approaches that leverage differences in PPG signal morphology across individuals, enabling it to capture richer representations than traditional contrastive learning methods. Compared with other competitive time-series foundation models, PaPaGei improves classification and regression performance by an average of 6.3% and 2.9%, respectively, in at least 14 of the 20 tasks. PaPaGei is also more data- and parameter-efficient than other foundation models and methods, outperforming models 70x larger. Beyond accuracy, we investigate robustness across skin tones, establishing a benchmark for bias evaluation of future models. Notably, PaPaGei can be used out of the box both as a feature extractor and as an encoder for other multimodal models, opening up new opportunities for multimodal health monitoring.

MindScape Study: Integrating LLM and Behavioral Sensing for Personalized AI-Driven Journaling Experiences

Sep 15, 2024

Abstract: Mental health concerns are prevalent among college students, highlighting the need for effective interventions that promote self-awareness and holistic well-being. MindScape pioneers a novel approach to AI-powered journaling by integrating passively collected behavioral patterns, such as conversational engagement, sleep, and location, with Large Language Models (LLMs). This integration creates a highly personalized and context-aware journaling experience, enhancing self-awareness and well-being by embedding behavioral intelligence into AI. We present an 8-week exploratory study with 20 college students demonstrating the MindScape app's efficacy in increasing positive affect (7%) and reducing negative affect (11%), loneliness (6%), and anxiety and depression, with a significant week-over-week decrease in PHQ-4 scores (coefficient of -0.25), alongside improvements in mindfulness (7%) and self-reflection (6%). The study highlights the advantages of contextual AI journaling, with participants particularly appreciating the tailored prompts and insights provided by the app. Our analysis also includes a comparison of responses to AI-driven contextual versus generic prompts, insights from participant feedback, and proposed strategies for leveraging contextual AI journaling to improve well-being on college campuses. By showcasing the potential of contextual AI journaling to support mental health, we provide a foundation for further investigation into its effects on mental health and well-being.

Contextual AI Journaling: Integrating LLM and Time Series Behavioral Sensing Technology to Promote Self-Reflection and Well-being using the MindScape App

Mar 30, 2024

Abstract: MindScape aims to study the benefits of integrating time-series behavioral patterns (e.g., conversational engagement, sleep, location) with Large Language Models (LLMs) to create a new form of contextual AI journaling that promotes self-reflection and well-being. We argue that integrating behavioral sensing into LLMs will likely open a new frontier in AI. In this Late-Breaking Work paper, we discuss the design of the MindScape contextual journaling app, which uses LLMs and behavioral sensing to generate contextual, personalized journaling prompts crafted to encourage self-reflection and emotional development. We also discuss a preliminary user study with college students and our upcoming study assessing the effectiveness of contextual AI journaling in promoting better well-being on college campuses. MindScape represents a new application class that embeds behavioral intelligence in AI.
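Contextual prompting can be sketched as turning a day's sensed behavior, compared with the person's own baseline, into an instruction for an LLM. Everything here is a hypothetical illustration: the `DailyContext` fields, the thresholds, and the prompt wording are assumptions rather than MindScape's design, and the resulting string would still need to be sent to an LLM.

```python
from dataclasses import dataclass


@dataclass
class DailyContext:
    """Hypothetical per-day behavioral summary; field names are illustrative."""
    sleep_hours: float
    conversation_minutes: int
    places_visited: int


def build_journaling_prompt(ctx: DailyContext, baseline: DailyContext) -> str:
    """Describe notable deviations from the user's baseline, then ask for a question."""
    observations = []
    # Thresholds below are arbitrary assumptions chosen for illustration.
    if ctx.sleep_hours < baseline.sleep_hours - 1:
        observations.append(f"slept about {ctx.sleep_hours:.1f} hours, less than usual")
    if ctx.conversation_minutes < baseline.conversation_minutes / 2:
        observations.append("had fewer conversations than usual")
    if ctx.places_visited > baseline.places_visited:
        observations.append(f"visited {ctx.places_visited} places, more than usual")
    summary = "; ".join(observations) or "had a fairly typical day"
    return (
        "You are a supportive journaling companion. "
        f"Today the user {summary}. "
        "Write one open-ended, non-judgmental journaling question that invites self-reflection."
    )
```

Comparing against a personal baseline rather than fixed population norms is one way to keep prompts personalized, which is the property the abstract emphasizes.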

MoodCapture: Depression Detection Using In-the-Wild Smartphone Images

Feb 25, 2024

Abstract: MoodCapture presents a novel approach that assesses depression based on images automatically captured from the front-facing camera of smartphones as people go about their daily lives. We collect over 125,000 photos in the wild over 90 days from N=177 participants diagnosed with major depressive disorder. Images are captured naturalistically while participants respond to the PHQ-8 depression survey question: "I have felt down, depressed, or hopeless." Our analysis explores important image attributes such as angle, dominant colors, location, objects, and lighting. We show that a random forest trained with face landmarks can effectively classify samples as depressed or non-depressed and predict raw PHQ-8 scores. Our post-hoc analysis provides several insights through an ablation study, feature importance analysis, and bias assessment. Importantly, we evaluate user concerns about using MoodCapture to detect depression based on sharing photos, providing critical insights into privacy concerns that inform the future design of in-the-wild, image-based mental health assessment tools.

Rare Life Event Detection via Mobile Sensing Using Multi-Task Learning

May 31, 2023

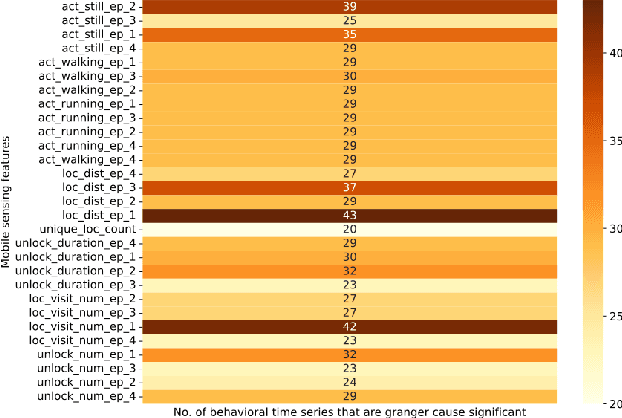

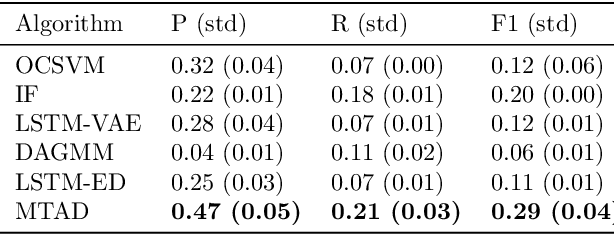

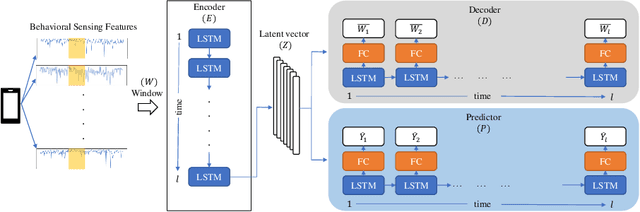

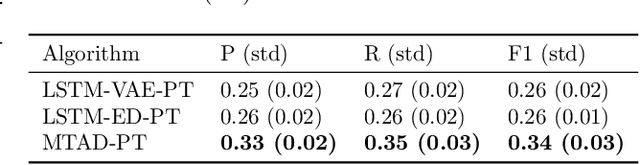

Abstract: Rare life events significantly impact mental health, and detecting them in behavioral studies is a crucial step toward health-based interventions. We envision that mobile sensing data can be used to detect these anomalies. However, the human-centered nature of the problem, combined with the infrequency and uniqueness of these events, makes it challenging for unsupervised machine learning methods. In this paper, we first investigate Granger causality between life events and human behavior using sensing data. Next, we propose a multi-task framework with an unsupervised autoencoder to capture irregular behavior and an auxiliary sequence predictor that identifies transitions in workplace performance to contextualize events. We perform experiments using data from a mobile sensing study comprising N=126 information workers from multiple industries, spanning 10,106 days with 198 rare events (<2% of days). Through personalized inference, we detect the exact day of a rare event with an F1 score of 0.34, outperforming several baselines. Finally, we discuss the implications of our work in the context of real-world deployment.
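Once a model such as the autoencoder produces a daily anomaly score (for example, a reconstruction error), flagging days per participant and scoring exact-day detections can be sketched as follows. The mean-plus-`k`-standard-deviations threshold and `k=2.0` are assumptions, not the paper's personalized inference procedure; the pooled score uses F1 = 2TP / (2TP + FP + FN).

```python
from statistics import mean, pstdev
from typing import Dict, List, Set


def flag_days(scores: List[float], k: float = 2.0) -> Set[int]:
    """Flag day indices whose score exceeds this participant's own mean + k * std."""
    mu, sigma = mean(scores), pstdev(scores)
    return {i for i, s in enumerate(scores) if s > mu + k * sigma}


def pooled_f1(scores_by_participant: Dict[str, List[float]],
              events_by_participant: Dict[str, Set[int]],
              k: float = 2.0) -> float:
    """Exact-day F1 pooled over participants: 2TP / (2TP + FP + FN)."""
    tp = fp = fn = 0
    for pid, scores in scores_by_participant.items():
        predicted = flag_days(scores, k)  # personalized threshold per participant
        actual = events_by_participant.get(pid, set())
        tp += len(predicted & actual)
        fp += len(predicted - actual)
        fn += len(actual - predicted)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0
```

Thresholding each participant against their own score distribution is one simple reading of "personalized"; with fewer than 2% positive days, precision is usually the harder side of the F1 trade-off.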

Personalized Step Counting Using Wearable Sensors: A Domain Adapted LSTM Network Approach

Dec 11, 2020

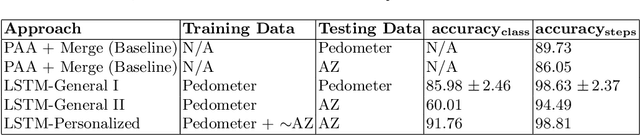

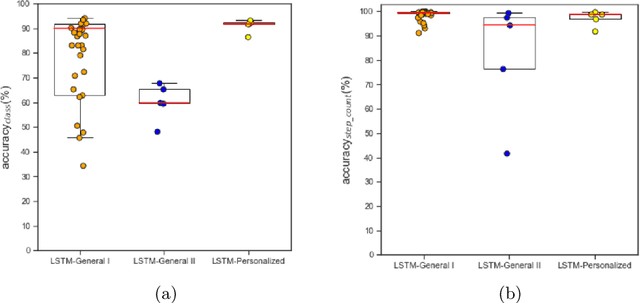

Abstract: Activity monitors are widely used to measure various physical activities (PA) as an indicator of mobility, fitness, and general health. Similarly, real-time monitoring of longitudinal trends in step count has significant clinical potential as a personalized measure of disease-related changes in daily activity. However, inconsistent step-count accuracy across vendors, body locations, and individual gait differences limits clinical utility. The tri-axial accelerometer inside PA monitors can be exploited to improve step-count accuracy across devices and individuals. In this study, we hypothesize that (1) raw tri-axial sensor data can be modeled to produce reliable and accurate step counts, and (2) a generalized step-count model can then be efficiently adapted to each unique gait pattern using very little new data. First, open-source raw sensor data were used to construct a long short-term memory (LSTM) deep neural network to model step count. Then, we generated a new, fully independent dataset using a different device and different subjects. Finally, the model was domain-adapted with a small amount of subject-specific data to produce personalized models with high individualized step-count accuracy. These results suggest that models trained on large, freely available datasets can be adapted to patient populations where large historical datasets are rare.
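The study adapts an LSTM with subject-specific data. A full LSTM is beyond a short example, so the sketch below swaps in a much simpler technique to convey the idea of personalizing with very little data: a least-squares linear recalibration of a generic model's per-window step estimates, fitted on a handful of labeled windows. The function names and the linear form are assumptions, and this is not the domain-adaptation method used in the study.

```python
from typing import List, Tuple


def fit_linear_correction(generic: List[float], truth: List[float]) -> Tuple[float, float]:
    """Least-squares fit of truth ~ a * generic + b from a few labeled windows."""
    n = len(generic)
    if n < 2 or n != len(truth):
        raise ValueError("need at least two paired windows")
    mx, my = sum(generic) / n, sum(truth) / n
    sxx = sum((x - mx) ** 2 for x in generic)
    if sxx == 0:
        return 1.0, my - mx  # no spread in estimates: fall back to a pure offset
    sxy = sum((x - mx) * (y - my) for x, y in zip(generic, truth))
    a = sxy / sxx
    return a, my - a * mx


def personalize(generic_counts: List[float], a: float, b: float) -> List[float]:
    """Apply the per-subject correction; step counts cannot be negative."""
    return [max(0.0, a * c + b) for c in generic_counts]
```

For a toy subject whose steps the generic model undercounts by 10% (generic estimates 80, 100, 120 against true counts 88, 110, 132), the fit gives a slope of about 1.1 and an intercept near 0, so a new estimate of 50 steps is corrected to about 55.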
