Faisal Khan

Development, Optimization, and Deployment of Thermal Forward Vision Systems for Advance Vehicular Applications on Edge Devices

Jan 18, 2023

Abstract: In this research work, we propose a thermal tiny-YOLO multi-class object detection (TTYMOD) system as a smart forward sensing system that is intended to remain effective in all weather and harsh environmental conditions, using an end-to-end YOLO deep learning framework. It provides enhanced safety and improved awareness features for driver assistance. The system is trained on large-scale public thermal datasets as well as a newly gathered, open-sourced dataset comprising more than 35,000 distinct thermal frames. For optimal training and convergence of the YOLO-v5 tiny network variant on thermal data, we employ different optimizers, which include stochastic gradient descent (SGD), Adam, and its variant AdamW, which has an improved implementation of weight decay. The performance of the thermally tuned tiny architecture is further evaluated on public as well as locally gathered test data in diverse and challenging weather and environmental conditions. The efficacy of the thermally tuned nano network is quantified using various quantitative metrics, which include mean average precision (mAP), frames per second, and average inference time. Experimental outcomes show that the network achieved a best mAP of 56.4% with an average inference time of 4 milliseconds per frame. The study further incorporates optimization of the tiny network variant using the TensorFlow Lite quantization tool, which is beneficial for deploying deep learning architectures on edge and mobile devices. For this study, we used a Raspberry Pi 4 computing board to evaluate the real-time feasibility of an optimized version of the thermal object detection network for the automotive sensor suite. The source code, trained and optimized models, and complete validation/testing results are publicly available at https://github.com/MAli-Farooq/Thermal-YOLO-And-Model-Optimization-Using-TensorFlowLite.
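To give a rough sense of what 8-bit quantization does to a model's numbers, the following is a minimal sketch of affine quantization, where a real value is approximated as `scale * (q - zero_point)` with `q` stored as an 8-bit integer. It is an illustration only, not the TensorFlow Lite tool's code; the abstract does not say which quantization mode was used, and the function names and sample weights here are hypothetical.

```python
def choose_qparams(values, qmin=-128, qmax=127):
    """Pick scale and zero point so that [min, max] (extended to include 0) fits int8."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # all values are zero; any positive scale works
    zero_point = int(round(qmin - lo / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped 8-bit integers."""
    out = []
    for v in values:
        q = int(round(v / scale)) + zero_point
        out.append(max(qmin, min(qmax, q)))
    return out


def dequantize(qvalues, scale, zero_point):
    """Recover approximate floats from the integers."""
    return [(q - zero_point) * scale for q in qvalues]


# Hypothetical weights, chosen only for illustration.
weights = [-1.0, 0.0, 0.5, 2.0]
scale, zero_point = choose_qparams(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

In this sketch the reconstruction error of each value is bounded by roughly half the scale, which is the kind of accuracy-for-size trade-off that makes quantized models attractive on small boards such as the Raspberry Pi 4.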

Artificial Intelligence enabled Smart Learning

Jan 08, 2021

Abstract: Artificial Intelligence (AI) is a discipline of computer science that deals with machine intelligence. It is essential to bring AI into the context of learning because it helps in analysing the enormous amounts of data that are collected from individual students, teachers and academic staff. The major priorities of implementing AI in education are making innovative use of existing digital technologies for learning, and adopting teaching practices that significantly improve on traditional educational methods. The main problem with traditional learning is that it cannot be tailored to every student in a class. Some students may grasp the concepts well, some may have difficulty understanding them, and some may be more auditory or visual learners. The World Bank report on education has indicated that the learning gap created by this problem causes many students to drop out (World Development Report, 2018). Personalised learning has been able to solve this grave problem.


Personalized Step Counting Using Wearable Sensors: A Domain Adapted LSTM Network Approach

Dec 11, 2020

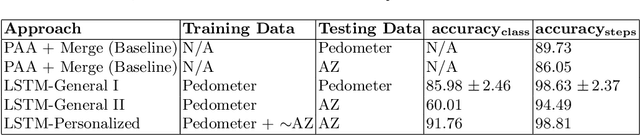

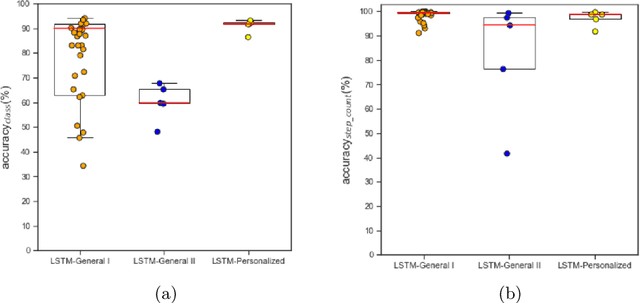

Abstract: Activity monitors are widely used to measure various physical activities (PA) as an indicator of mobility, fitness and general health. Similarly, real-time monitoring of longitudinal trends in step count has significant clinical potential as a personalized measure of disease-related changes in daily activity. However, inconsistent step count accuracy across vendors, body locations, and individual gait differences limits clinical utility. The tri-axial accelerometer inside PA monitors can be exploited to improve step count accuracy across devices and individuals. In this study, we hypothesize that (1) raw tri-axial sensor data can be modeled to produce a reliable and accurate step count, and (2) a generalized step count model can then be efficiently adapted to each unique gait pattern using very little new data. First, open-source raw sensor data was used to construct a long short-term memory (LSTM) deep neural network to model step count. Then we generated a new, fully independent data set using a different device and different subjects. Finally, a small amount of subject-specific data was used for domain adaptation to produce personalized models with high individualized step count accuracy. These results suggest that models trained using large, freely available datasets can be adapted to patient populations where large historical data sets are rare.
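As a rough illustration of how an LSTM consumes raw tri-axial samples, the sketch below runs a single standard LSTM cell over a window of (x, y, z) accelerometer readings and returns the final hidden state. It assumes nothing about the authors' actual architecture: the hidden size, the random weights, the synthetic signal, and all function names are hypothetical, and neither training nor the domain adaptation step is shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights for the input (i), forget (f), output (o) and candidate (g) gates."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    W = {g: mat(n_hidden, n_in) for g in "ifog"}      # input-to-hidden weights
    U = {g: mat(n_hidden, n_hidden) for g in "ifog"}  # hidden-to-hidden weights
    b = {g: [0.0] * n_hidden for g in "ifog"}         # biases
    return W, U, b


def lstm_step(x, h, c, W, U, b):
    """One LSTM time step: returns the new hidden state and cell state."""
    n = len(h)
    gates = {}
    for g in "ifog":
        pre = [
            sum(W[g][j][k] * x[k] for k in range(len(x)))
            + sum(U[g][j][k] * h[k] for k in range(n))
            + b[g][j]
            for j in range(n)
        ]
        act = math.tanh if g == "g" else sigmoid
        gates[g] = [act(p) for p in pre]
    c_new = [gates["f"][j] * c[j] + gates["i"][j] * gates["g"][j] for j in range(n)]
    h_new = [gates["o"][j] * math.tanh(c_new[j]) for j in range(n)]
    return h_new, c_new


def encode_window(window, W, U, b, n_hidden):
    """Feed a sequence of (x, y, z) samples through the cell, starting from zero state."""
    h = [0.0] * n_hidden
    c = [0.0] * n_hidden
    for sample in window:
        h, c = lstm_step(sample, h, c, W, U, b)
    return h


# Synthetic stand-in for a short window of accelerometer data (not real sensor data).
window = [(math.sin(0.3 * t), math.cos(0.3 * t), 1.0) for t in range(50)]
W, U, b = init_params(n_in=3, n_hidden=4)
features = encode_window(window, W, U, b, n_hidden=4)
```

In a full model, a readout layer on top of `features` would predict the step count, and personalization would amount to continuing training on a small amount of subject-specific data; both are left out of this sketch.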

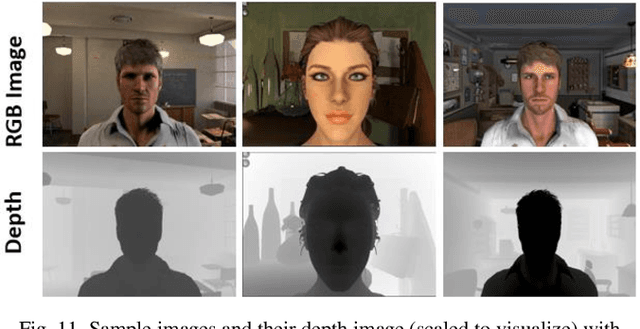

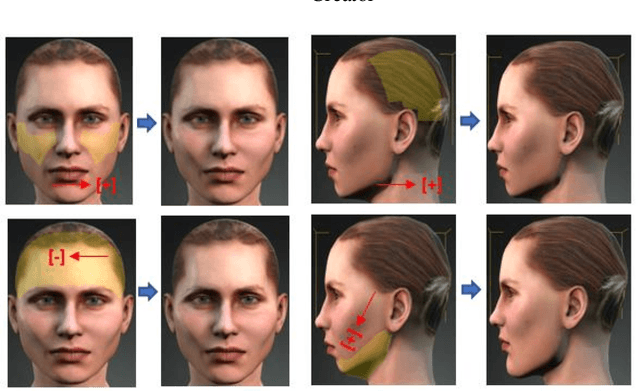

Methodology for Building Synthetic Datasets with Virtual Humans

Jun 21, 2020

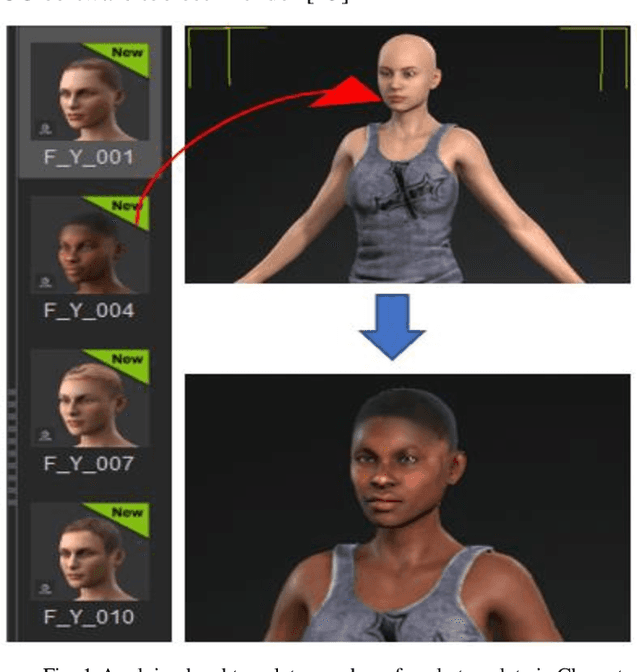

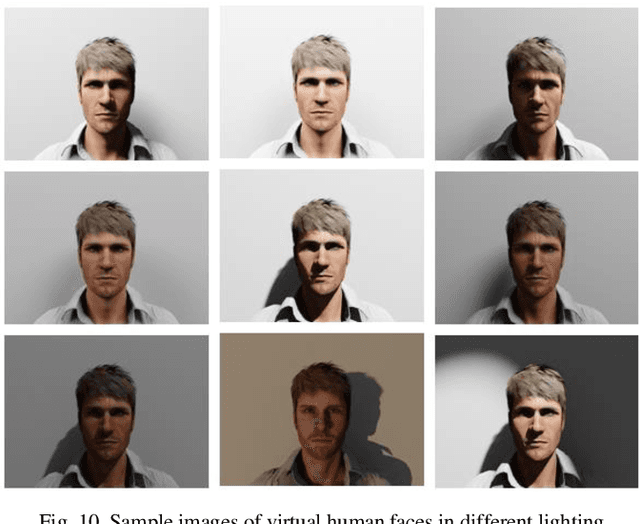

Abstract: Recent advances in deep learning methods have increased the performance of face detection and recognition systems. The accuracy of these models relies on the range of variation provided in the training data. Creating a dataset that represents all variations of real-world faces is not feasible, as control over the quality of the data decreases with the size of the dataset. Repeatability of data is another challenge, as it is not possible to exactly recreate 'real-world' acquisition conditions outside of the laboratory. In this work, we explore a framework to synthetically generate facial data to be used as part of a toolchain to generate very large facial datasets with a high degree of control over facial and environmental variations. Such large datasets can be used for improved, targeted training of deep neural networks. In particular, we make use of a 3D morphable face model for the rendering of multiple 2D images across a dataset of 100 synthetic identities, providing full control over image variations such as pose, illumination, and background.
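A linear 3D morphable model is commonly written as a mean shape plus a weighted sum of basis shapes, so a synthetic identity can be thought of as one coefficient vector. The sketch below shows that idea with toy numbers; it is an assumption about the general form of such models, not the specific model or toolchain used in the paper, and the mean shape, basis, and function names are hypothetical.

```python
import random


def morph_shape(mean_shape, basis, coeffs):
    """Return mean_shape + sum_i coeffs[i] * basis[i], with shapes as flat coordinate lists."""
    return [
        m + sum(a * component[k] for a, component in zip(coeffs, basis))
        for k, m in enumerate(mean_shape)
    ]


def sample_identities(n_identities, n_components, seed=0):
    """Draw one coefficient vector per synthetic identity from a standard normal."""
    rng = random.Random(seed)
    return [
        [rng.gauss(0.0, 1.0) for _ in range(n_components)]
        for _ in range(n_identities)
    ]


# Toy model: two vertices (x, y, z each) and two basis components.
mean_shape = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
basis = [
    [0.1, 0.0, 0.0, -0.1, 0.0, 0.0],  # hypothetical "width" component
    [0.0, 0.2, 0.0, 0.0, 0.2, 0.0],   # hypothetical "height" component
]

identities = sample_identities(n_identities=100, n_components=len(basis))
shapes = [morph_shape(mean_shape, basis, coeffs) for coeffs in identities]
```

Because each identity is just a fixed coefficient vector, the same face can be re-rendered under different pose, illumination, and background settings, which is where the repeatability described in the abstract comes from.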
