Judith Amores

Longitudinal Study on Social and Emotional Use of AI Conversational Agent

Apr 19, 2025

Abstract:Development in digital technologies has continuously reshaped how individuals seek and receive social and emotional support. While online platforms and communities have long served this need, the increased integration of general-purpose conversational AI into daily lives has introduced new dynamics in how support is provided and experienced. Existing research has highlighted both benefits (e.g., wider access to well-being resources) and potential risks (e.g., over-reliance) of using AI for support seeking. In this five-week, exploratory study, we recruited 149 participants divided into two usage groups: a baseline usage group (BU, n=60) that used the internet and AI as usual, and an active usage group (AU, n=89) encouraged to use one of four commercially available AI tools (Microsoft Copilot, Google Gemini, PI AI, ChatGPT) for social and emotional interactions. Our analysis revealed significant increases in perceived attachment towards AI (32.99 percentage points), perceived AI empathy (25.8 p.p.), and motivation to use AI for entertainment (22.90 p.p.) among the AU group. We also observed that individual differences (e.g., gender identity, prior AI usage) influenced perceptions of AI empathy and attachment. Lastly, the AU group expressed higher comfort in seeking personal help, managing stress, obtaining social support, and talking about health with AI, indicating potential for broader emotional support while highlighting the need for safeguards against problematic usage. Overall, our exploratory findings underscore the importance of developing consumer-facing AI tools that support emotional well-being responsibly, while empowering users to understand the limitations of these tools.

Super-intelligence or Superstition? Exploring Psychological Factors Underlying Unwarranted Belief in AI Predictions

Aug 13, 2024

Abstract:This study investigates psychological factors influencing belief in AI predictions about personal behavior, comparing it to belief in astrology and personality-based predictions. Through an experiment with 238 participants, we examined how cognitive style, paranormal beliefs, AI attitudes, personality traits, and other factors affect perceived validity, reliability, usefulness, and personalization of predictions from different sources. Our findings reveal that belief in AI predictions is positively correlated with belief in predictions based on astrology and personality psychology. Notably, paranormal beliefs and positive AI attitudes significantly increased perceived validity, reliability, usefulness, and personalization of AI predictions. Conscientiousness was negatively correlated with belief in predictions across all sources, and interest in the prediction topic increased believability across predictions. Surprisingly, cognitive style did not significantly influence belief in predictions. These results highlight the "rational superstition" phenomenon in AI, where belief is driven more by mental heuristics and intuition than critical evaluation. We discuss implications for designing AI systems and communication strategies that foster appropriate trust and skepticism. This research contributes to our understanding of the psychology of human-AI interaction and offers insights for the design and deployment of AI systems.

Exploration of LLMs, EEG, and behavioral data to measure and support attention and sleep

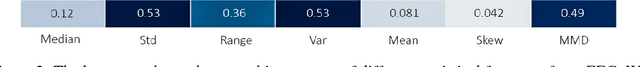

Aug 01, 2024

Abstract:We explore the application of large language models (LLMs), pre-trained models with massive textual data, for detecting and improving these altered states. We investigate the use of LLMs to estimate attention states, sleep stages, and sleep quality and generate sleep improvement suggestions and adaptive guided imagery scripts based on electroencephalogram (EEG) and physical activity data (e.g., waveforms, power spectrogram images, numerical features). Our results show that LLMs can estimate sleep quality based on human textual behavioral features and provide personalized sleep improvement suggestions and guided imagery scripts; however, detecting attention, sleep stages, and sleep quality based on EEG and activity data requires further training data and domain-specific knowledge.

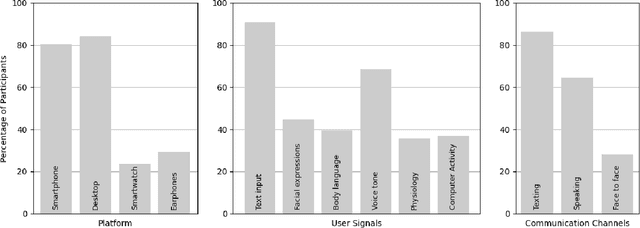

From User Surveys to Telemetry-Driven Agents: Exploring the Potential of Personalized Productivity Solutions

Jan 17, 2024

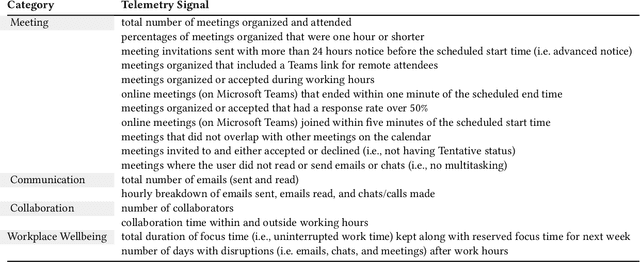

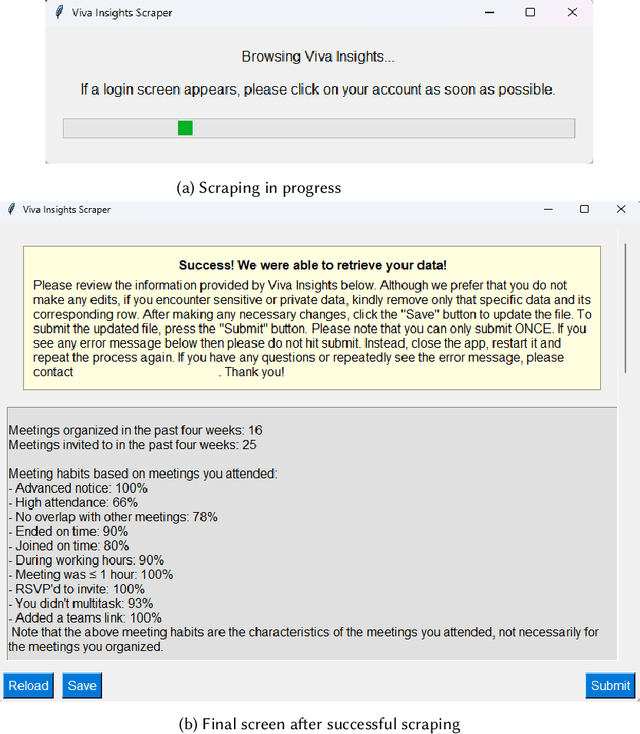

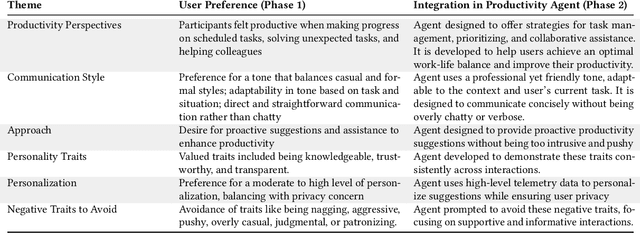

Abstract:We present a comprehensive, user-centric approach to understand preferences in AI-based productivity agents and develop personalized solutions tailored to users' needs. Utilizing a two-phase method, we first conducted a survey with 363 participants, exploring various aspects of productivity, communication style, agent approach, personality traits, personalization, and privacy. Drawing on the survey insights, we developed a GPT-4 powered personalized productivity agent that utilizes telemetry data gathered via Viva Insights from information workers to provide tailored assistance. We compared its performance with alternative productivity-assistive tools, such as dashboard and narrative, in a study involving 40 participants. Our findings highlight the importance of user-centric design, adaptability, and the balance between personalization and privacy in AI-assisted productivity tools. By building on the insights distilled from our study, we believe that our work can enable and guide future research to further enhance productivity solutions, ultimately leading to optimized efficiency and user experiences for information workers.

Real-time Animation Generation and Control on Rigged Models via Large Language Models

Oct 27, 2023

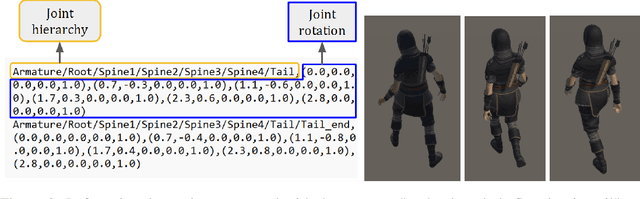

Abstract:We introduce a novel method for real-time animation control and generation on rigged models using natural language input. First, we embed a large language model (LLM) in Unity to output structured texts that can be parsed into diverse and realistic animations. Second, we illustrate LLM's potential to enable flexible state transition between existing animations. We showcase the robustness of our approach through qualitative results on various rigged models and motions.

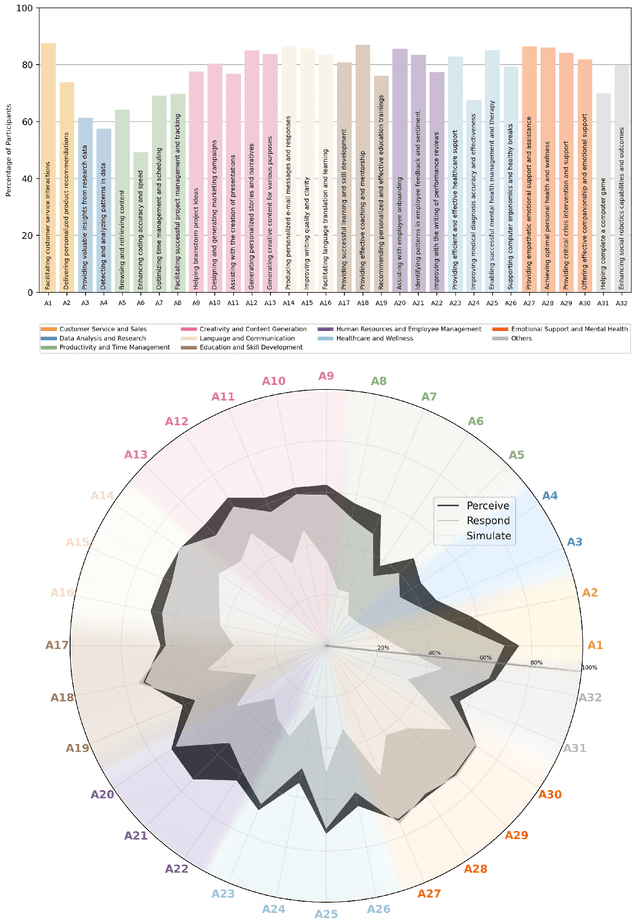

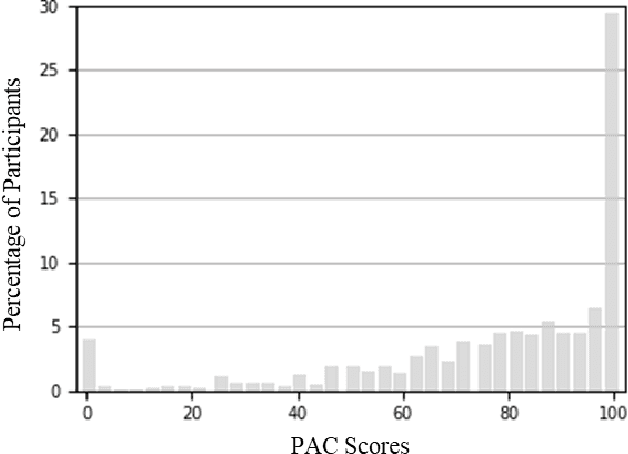

Affective Conversational Agents: Understanding Expectations and Personal Influences

Oct 19, 2023

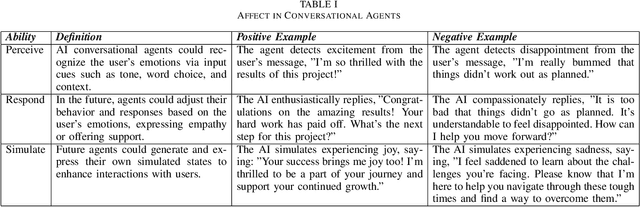

Abstract:The rise of AI conversational agents has broadened opportunities to enhance human capabilities across various domains. As these agents become more prevalent, it is crucial to investigate the impact of different affective abilities on their performance and user experience. In this study, we surveyed 745 respondents to understand the expectations and preferences regarding affective skills in various applications. Specifically, we assessed preferences concerning AI agents that can perceive, respond to, and simulate emotions across 32 distinct scenarios. Our results indicate a preference for scenarios that involve human interaction, emotional support, and creative tasks, with influences from factors such as emotional reappraisal and personality traits. Overall, the desired affective skills in AI agents depend largely on the application's context and nature, emphasizing the need for adaptability and context-awareness in the design of affective AI conversational agents.

Real-Time Sleep Staging using Deep Learning on a Smartphone for a Wearable EEG

Nov 28, 2018

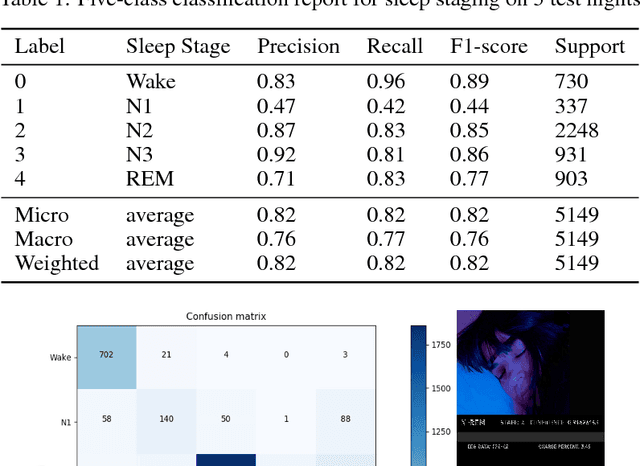

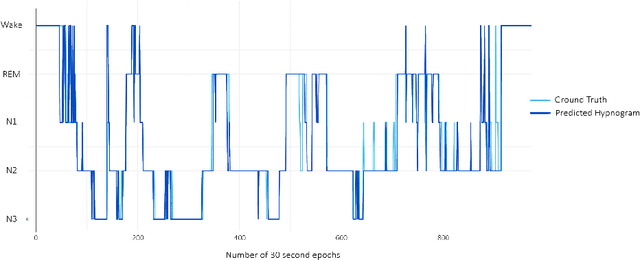

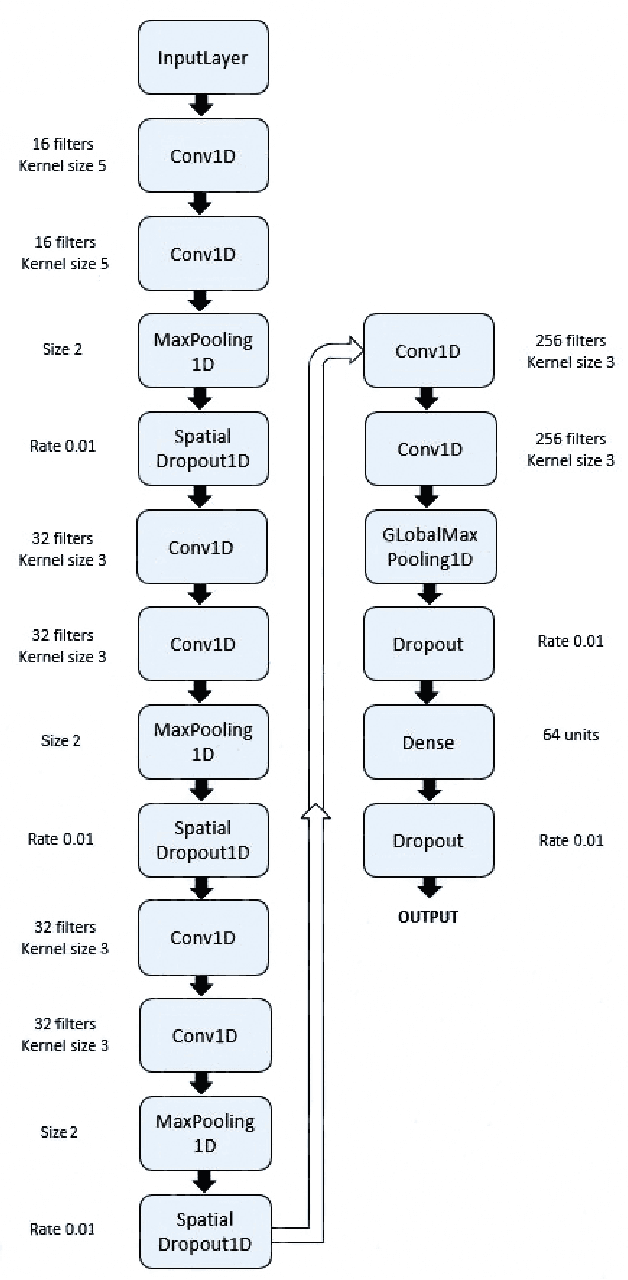

Abstract:We present the first real-time sleep staging system that uses deep learning without the need for servers in a smartphone application for a wearable EEG. We employ real-time adaptation of a single-channel electroencephalography (EEG) signal to infer from a Time-Distributed 1-D Deep Convolutional Neural Network. Polysomnography (PSG), the gold standard for sleep staging, requires a human scorer and is both complex and resource-intensive. Our work demonstrates an end-to-end on-smartphone pipeline that can infer sleep stages in just single 30-second epochs, with an overall accuracy of 83.5% on 20-fold cross validation for five-class classification of sleep stages using the open Sleep-EDF dataset.
