Martin Rudorfer

University of Birmingham, UK

A Framework for Joint Grasp and Motion Planning in Confined Spaces

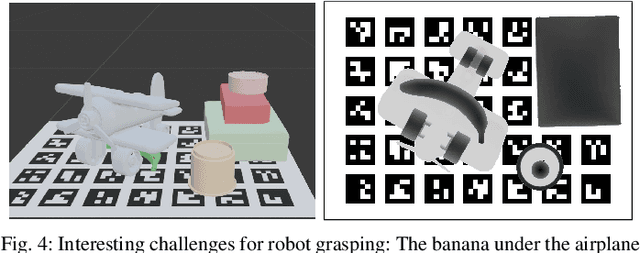

May 12, 2025

Abstract:Robotic grasping is a fundamental skill across all domains of robot applications. There is a large body of research for grasping objects in table-top scenarios, where finding suitable grasps is the main challenge. In this work, we are interested in scenarios where the objects are in confined spaces and hence particularly difficult to reach. Planning how the robot approaches the object becomes a major part of the challenge, giving rise to methods for joint grasp and motion planning. The framework proposed in this paper provides 20 benchmark scenarios with systematically increasing difficulty, realistic objects with precomputed grasp annotations, and tools to create and share more scenarios. We further provide two baseline planners and evaluate them on the scenarios, demonstrating that the proposed difficulty levels indeed offer a meaningful progression. We invite the research community to build upon this framework by making all components publicly available as open source.

* 2024 13th International Workshop on Robot Motion and Control (RoMoCo)

RM4D: A Combined Reachability and Inverse Reachability Map for Common 6-/7-axis Robot Arms by Dimensionality Reduction to 4D

Oct 09, 2024

Abstract:Knowledge of a manipulator's workspace is fundamental for a variety of tasks including robot design, grasp planning and robot base placement. Consequently, workspace representations are well studied in robotics. Two important representations are reachability maps and inverse reachability maps. The former predicts whether a given end-effector pose is reachable from where the robot currently is, and the latter suggests suitable base positions for a desired end-effector pose. Typically, the reachability map is built by discretizing the 6D space containing the robot's workspace and determining, for each cell, whether it is reachable or not. The reachability map is subsequently inverted to build the inverse map. This is a cumbersome process which restricts the applications of such maps. In this work, we exploit commonalities of existing six- and seven-axis robot arms to reduce the dimension of the discretization from 6D to 4D. We propose Reachability Map 4D (RM4D), a map that only requires a single 4D data structure for both forward and inverse queries. This gives a much more compact map that can be constructed an order of magnitude faster than existing maps, with no inversion overheads and no loss in accuracy. Our experiments showcase the usefulness of RM4D for grasp planning with a mobile manipulator.
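To make the idea of a discretized reachability map concrete, the following is a minimal sketch for a toy planar two-link arm. It is not the RM4D method and not the paper's code: the arm, the link lengths, the cell size, and all function names (`build_reachability_map`, `is_reachable`, `base_positions_for`) are assumptions chosen for illustration. The map here covers 2D positions only, whereas the maps discussed in the abstract cover 6D poses.

```python
import numpy as np


def build_reachability_map(l1=1.0, l2=0.7, cell=0.1, n_samples=200):
    """Mark the grid cells hit by the forward kinematics of a planar 2-link arm.

    Hypothetical toy setup: the base sits at the origin, and the grid spans
    [-reach, reach] in x and y.
    """
    reach = l1 + l2
    n_cells = int(np.ceil(2 * reach / cell))
    grid = np.zeros((n_cells, n_cells), dtype=bool)

    # Sample both joint angles on a regular grid and compute the tip position.
    q = np.linspace(-np.pi, np.pi, n_samples, endpoint=False)
    q1, q2 = np.meshgrid(q, q, indexing="ij")
    x = l1 * np.cos(q1) + l2 * np.cos(q1 + q2)
    y = l1 * np.sin(q1) + l2 * np.sin(q1 + q2)

    # Convert tip positions to cell indices and mark those cells as reachable.
    ix = np.clip(((x + reach) / cell).astype(int), 0, n_cells - 1)
    iy = np.clip(((y + reach) / cell).astype(int), 0, n_cells - 1)
    grid[ix, iy] = True
    return grid, reach, cell


def is_reachable(grid, reach, cell, point):
    """Forward query: is the point (relative to the base) in a reachable cell?"""
    idx = np.floor((np.asarray(point, dtype=float) + reach) / cell).astype(int)
    if np.any(idx < 0) or np.any(idx >= grid.shape[0]):
        return False
    return bool(grid[idx[0], idx[1]])


def base_positions_for(grid, reach, cell, target):
    """Inverse query: base positions from which the target can be reached.

    Each reachable cell center p (relative to the base) yields a candidate
    base position target - p, assuming a fixed base orientation.
    """
    ix, iy = np.nonzero(grid)
    centers = np.stack([ix, iy], axis=1) * cell - reach + cell / 2
    return np.asarray(target, dtype=float) - centers


if __name__ == "__main__":
    grid, reach, cell = build_reachability_map()
    print(is_reachable(grid, reach, cell, (1.05, 0.55)))
    print(base_positions_for(grid, reach, cell, (2.0, 0.0)).shape)
```

In this toy version the inverse query just reuses the forward grid with a sign flip, which is possible only because orientation is ignored. With full 6D poses the inversion is more involved, which is the overhead the abstract refers to.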

BURG-Toolkit: Robot Grasping Experiments in Simulation and the Real World

May 27, 2022

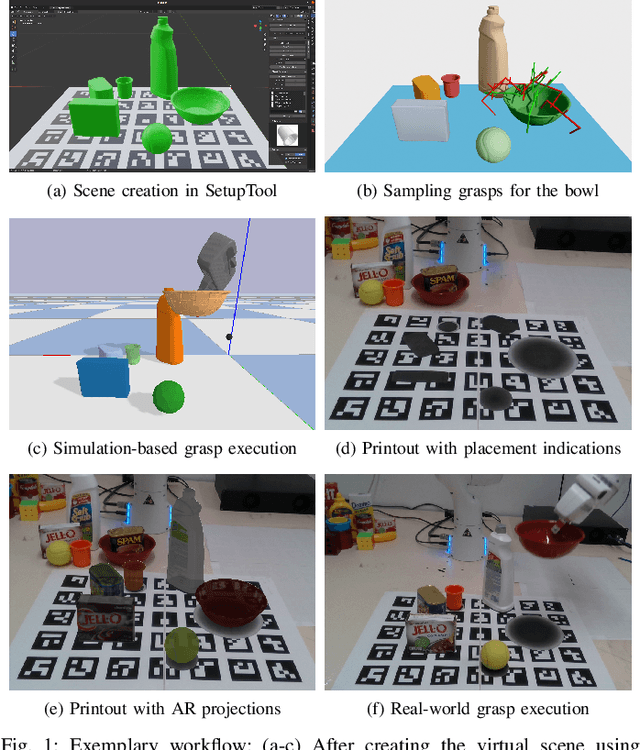

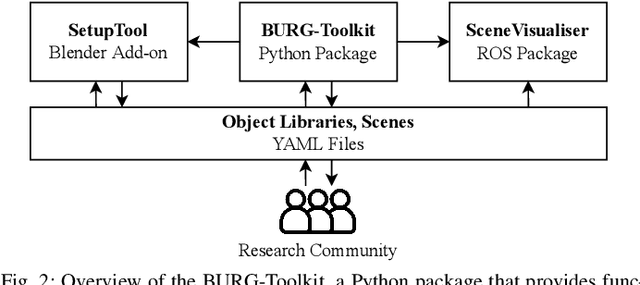

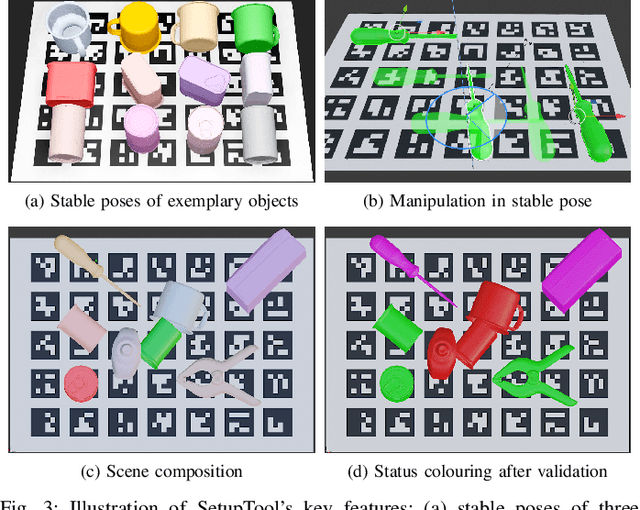

Abstract:This paper presents BURG-Toolkit, a set of open-source tools for Benchmarking and Understanding Robotic Grasping. Our tools allow researchers to: (1) create virtual scenes for generating training data and performing grasping in simulation; (2) recreate the scene by arranging the corresponding objects accurately in the physical world for real robot experiments, supporting an analysis of the sim-to-real gap; and (3) share the scenes with other researchers to foster comparability and reproducibility of experimental results. We explain how to use our tools by describing some potential use cases. We further provide proof-of-concept experimental results quantifying the sim-to-real gap for robot grasping in some example scenes. The tools are available at: https://mrudorfer.github.io/burg-toolkit/

End-to-End Learning to Grasp from Object Point Clouds

Mar 10, 2022

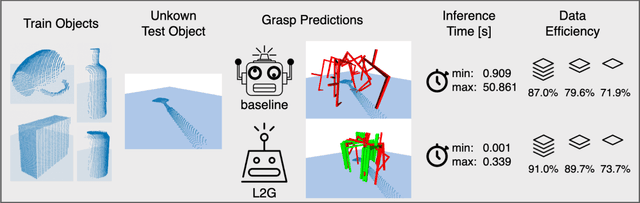

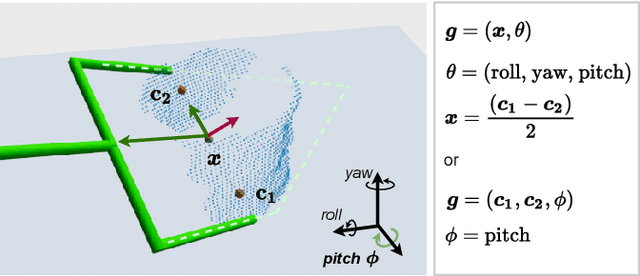

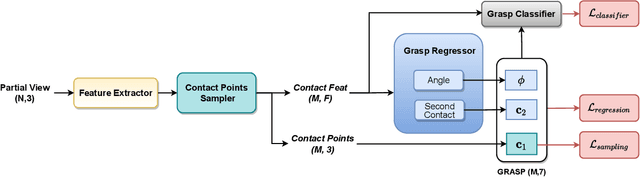

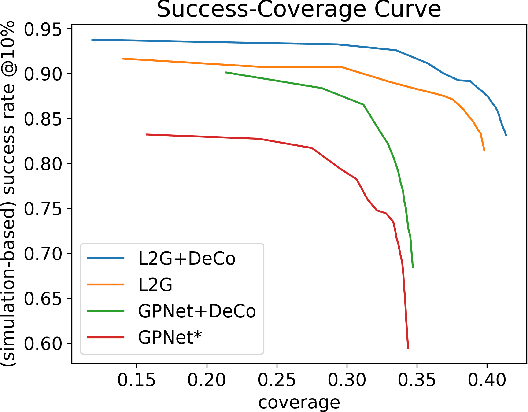

Abstract:The ability to grasp objects is an essential skill that enables many robotic manipulation tasks. Recent works have studied point cloud-based methods for object grasping by starting from simulated datasets and have shown promising performance in real-world scenarios. Nevertheless, many of them still strongly rely on ad-hoc geometric heuristics to generate grasp candidates, which fail to generalize to objects with significantly different shapes with respect to those observed during training. Moreover, these methods are generally inefficient with respect to the number of training samples and the time needed during deployment. In this paper, we propose an end-to-end learning solution to generate 6-DOF parallel-jaw grasps starting from the partial view of the object. Our Learning to Grasp (L2G) method takes as input object point clouds and is guided by a principled multi-task optimization objective that generates a diverse set of grasps combining contact point sampling, grasp regression, and grasp evaluation. With a thorough experimental analysis, we show the effectiveness of the proposed method as well as its robustness and generalization abilities.

Robots Assembling Machines: Learning from the World Robot Summit 2018 Assembly Challenge

Nov 14, 2019

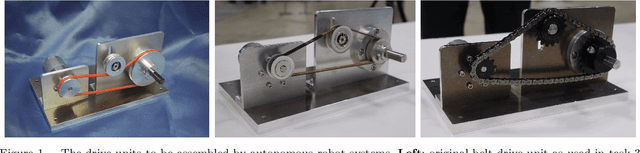

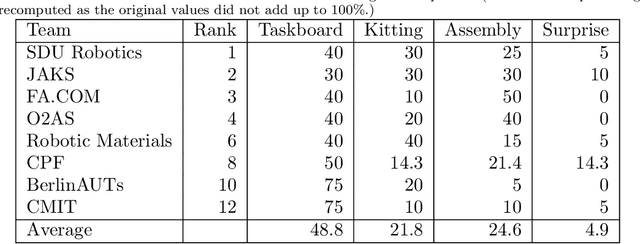

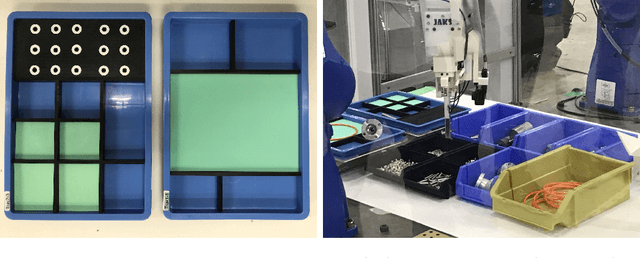

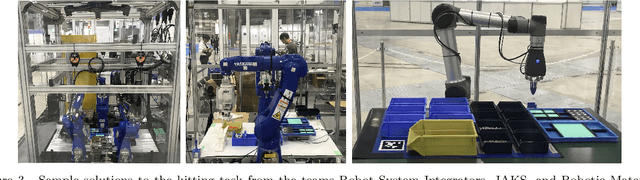

Abstract:The Industrial Assembly Challenge at the World Robot Summit was held in 2018 to showcase the state of the art in autonomous manufacturing systems. The challenge included various tasks, such as bin picking, kitting, and assembly of standard industrial parts into 2D and 3D assemblies. Some of the tasks were only revealed at the competition itself, representing the challenge of "level 5" automation, i.e., programming and setting up an autonomous assembly system in less than one day. We conducted a survey among the teams that participated in the challenge and investigated aspects such as team composition, development costs, system setups as well as the teams' strategies and approaches. An analysis of the survey results reveals that the competitors fell into two camps: those constructing conventional robotic work cells with off-the-shelf tools, and those who mostly relied on custom-made end effectors and novel software approaches in combination with collaborative robots. While both camps performed reasonably well, the winning team chose a middle ground, combining the efficiency of established play-back programming with the autonomy gained by CAD-based object detection and force control for assembly operations.
