Mark Vogelsberger

How DREAMS are made: Emulating Satellite Galaxy and Subhalo Populations with Diffusion Models and Point Clouds

Sep 04, 2024

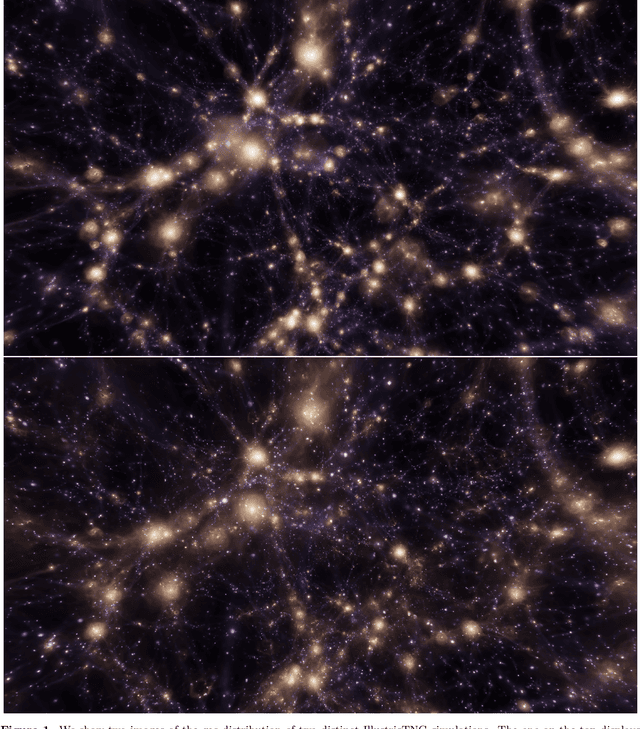

Abstract: The connection between galaxies and their host dark matter (DM) halos is critical to our understanding of cosmology, galaxy formation, and DM physics. To maximize the return of upcoming cosmological surveys, we need an accurate way to model this complex relationship. Many techniques have been developed to model this connection, from Halo Occupation Distribution (HOD) to empirical and semi-analytic models to hydrodynamic simulations. Hydrodynamic simulations can incorporate more detailed astrophysical processes but are computationally expensive; HODs, on the other hand, are computationally cheap but have limited accuracy. In this work, we present NeHOD, a generative framework based on a variational diffusion model and a Transformer, for painting galaxies/subhalos on top of DM with the accuracy of hydrodynamic simulations but at a computational cost similar to HOD. By modeling galaxies/subhalos as point clouds, instead of binning or voxelization, we can resolve small spatial scales down to the resolution of the simulations. For each halo, NeHOD predicts the positions, velocities, masses, and concentrations of its central and satellite galaxies. We train NeHOD on the TNG-Warm DM suite of the DREAMS project, which consists of 1024 high-resolution zoom-in hydrodynamic simulations of Milky Way-mass halos with varying warm DM mass and astrophysical parameters. We show that our model captures the complex relationships between subhalo properties as a function of the simulation parameters, including the mass functions, stellar-halo mass relations, concentration-mass relations, and spatial clustering. Our method can be used for a large variety of downstream applications, from galaxy clustering to strong lensing studies.

Field-level simulation-based inference with galaxy catalogs: the impact of systematic effects

Oct 23, 2023

Abstract: It has been recently shown that a powerful way to constrain cosmological parameters from galaxy redshift surveys is to train graph neural networks to perform field-level likelihood-free inference without imposing cuts on scale. In particular, de Santi et al. (2023) developed models, robust to uncertainties in astrophysics and subgrid models, that could accurately infer the value of $\Omega_{\rm m}$ from catalogs that only contain the positions and radial velocities of galaxies. However, observations are affected by many effects, including 1) masking, 2) uncertainties in peculiar velocities and radial distances, and 3) different galaxy selections. Moreover, observations only allow us to measure redshift, intertwining galaxies' radial positions and velocities. In this paper we train and test our models on galaxy catalogs, created from thousands of state-of-the-art hydrodynamic simulations run with different codes from the CAMELS project, that incorporate these observational effects. We find that, although the presence of these effects degrades the precision and accuracy of the models, and increases the fraction of catalogs where the model breaks down, the fraction of galaxy catalogs where the model performs well is over 90%, demonstrating the potential of these models to constrain cosmological parameters even when applied to real data.
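The point that a redshift measurement mixes a galaxy's radial position with its radial velocity can be illustrated with a short sketch. This is not taken from the paper: it assumes the low-redshift approximation $cz \approx H_0 d + v_r$, and the function name and example numbers are hypothetical.

```python
# Illustrative sketch only, not the paper's pipeline.
# Assumption: low-redshift limit, where the distance inferred from redshift
# is the true distance plus the radial peculiar velocity divided by H0.

# With distances in Mpc/h, the Hubble constant is 100 km/s per (Mpc/h).
H0 = 100.0


def redshift_space_distance(d_true, v_radial):
    """Apparent radial distance (Mpc/h) inferred from redshift alone.

    d_true   : true radial distance in Mpc/h
    v_radial : radial peculiar velocity in km/s (positive = receding)
    """
    return d_true + v_radial / H0


# Two hypothetical galaxies with different true distances and velocities
# that end up at the same apparent distance.
apparent_a = redshift_space_distance(20.0, 300.0)
apparent_b = redshift_space_distance(25.0, -200.0)
```

In this toy case the two galaxies cannot be told apart along the line of sight from redshift alone, which is the degeneracy the abstract refers to.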

Robust field-level likelihood-free inference with galaxies

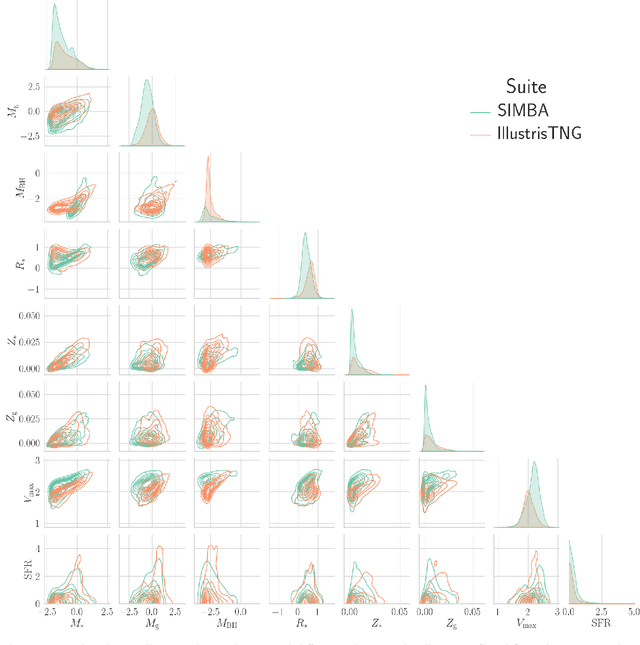

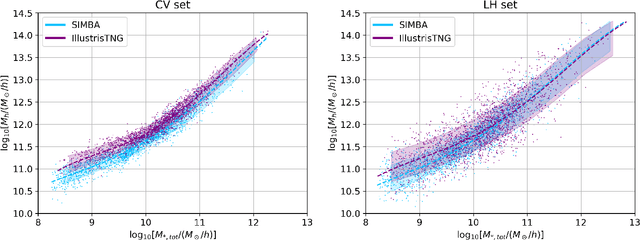

Feb 27, 2023

Abstract: We train graph neural networks to perform field-level likelihood-free inference using galaxy catalogs from state-of-the-art hydrodynamic simulations of the CAMELS project. Our models are rotationally, translationally, and permutation invariant and have no scale cutoff. By training on galaxy catalogs that only contain the 3D positions and radial velocities of approximately $1,000$ galaxies in tiny volumes of $(25~h^{-1}{\rm Mpc})^3$, our models achieve a precision of approximately $12$% when inferring the value of $\Omega_{\rm m}$. To test the robustness of our models, we evaluated their performance on galaxy catalogs from thousands of hydrodynamic simulations, each with different efficiencies of supernova and AGN feedback, run with five different codes and subgrid models, including IllustrisTNG, SIMBA, Astrid, Magneticum, and SWIFT-EAGLE. Our results demonstrate that our models are robust to astrophysics, subgrid physics, and subhalo/galaxy finder changes. Furthermore, we test our models on 1,024 simulations that cover a vast region in parameter space - variations in 5 cosmological and 23 astrophysical parameters - finding that the model extrapolates really well. Including both positions and velocities is key to building robust models, and our results indicate that our networks have likely learned an underlying physical relation that does not depend on galaxy formation and is valid on scales larger than, at least, $\sim10~h^{-1}{\rm kpc}$.
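One common way to obtain rotational, translational, and permutation invariance is to build graph features from relative quantities such as pairwise distances. The sketch below is a minimal illustration of that idea under stated assumptions, not the architecture used in the paper: the linking radius `r_link`, the helper names, and the toy coordinates are all hypothetical, and only positions are used.

```python
import math
from itertools import combinations


def pair_distances(positions, r_link):
    """Sorted distances between all pairs of points closer than r_link.

    Pairwise distances do not change under rotations or translations,
    and sorting removes any dependence on the order of the points.
    """
    dists = [math.dist(p, q) for p, q in combinations(positions, 2)]
    return sorted(d for d in dists if d < r_link)


def rotate_z(point, angle):
    """Rotate a 3D point about the z axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)


# Hypothetical toy catalog: three nearby galaxies and one distant one.
galaxies = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (5.0, 5.0, 5.0)]
original = pair_distances(galaxies, r_link=3.0)

# Rotate, translate, and reorder the catalog, then recompute the features.
moved = [tuple(c + 10.0 for c in rotate_z(p, 0.7)) for p in galaxies]
moved.reverse()
transformed = pair_distances(moved, r_link=3.0)
```

Comparing `original` and `transformed` shows the same set of edge lengths up to floating-point rounding, so a model fed only these features cannot depend on the catalog's orientation, origin, or ordering.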

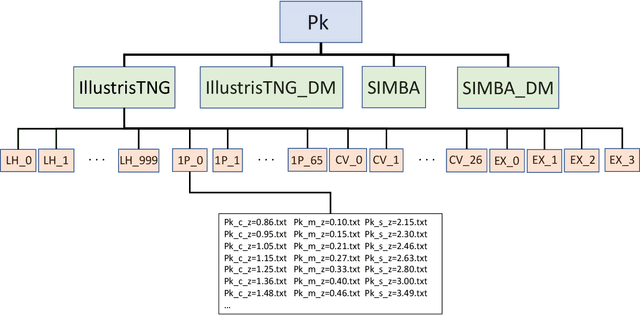

The CAMELS project: public data release

Jan 04, 2022

Abstract: The Cosmology and Astrophysics with MachinE Learning Simulations (CAMELS) project was developed to combine cosmology with astrophysics through thousands of cosmological hydrodynamic simulations and machine learning. CAMELS contains 4,233 cosmological simulations, 2,049 N-body and 2,184 state-of-the-art hydrodynamic simulations that sample a vast volume in parameter space. In this paper we present the CAMELS public data release, describing the characteristics of the CAMELS simulations and a variety of data products generated from them, including halo, subhalo, galaxy, and void catalogues, power spectra, bispectra, Lyman-$\alpha$ spectra, probability distribution functions, halo radial profiles, and X-ray photon lists. We also release over one thousand catalogues that contain billions of galaxies from CAMELS-SAM: a large collection of N-body simulations that have been combined with the Santa Cruz Semi-Analytic Model. We release all the data, comprising more than 350 terabytes and containing 143,922 snapshots, millions of halos, galaxies and summary statistics. We provide further technical details on how to access, download, read, and process the data at \url{https://camels.readthedocs.io}.

Weighing the Milky Way and Andromeda with Artificial Intelligence

Nov 29, 2021

Abstract: We present new constraints on the masses of the halos hosting the Milky Way and Andromeda galaxies derived using graph neural networks. Our models, trained on thousands of state-of-the-art hydrodynamic simulations of the CAMELS project, only make use of the positions, velocities and stellar masses of the galaxies belonging to the halos, and are able to perform likelihood-free inference on halo masses while accounting for both cosmological and astrophysical uncertainties. Our constraints are in agreement with estimates from other traditional methods.

Inferring halo masses with Graph Neural Networks

Nov 16, 2021

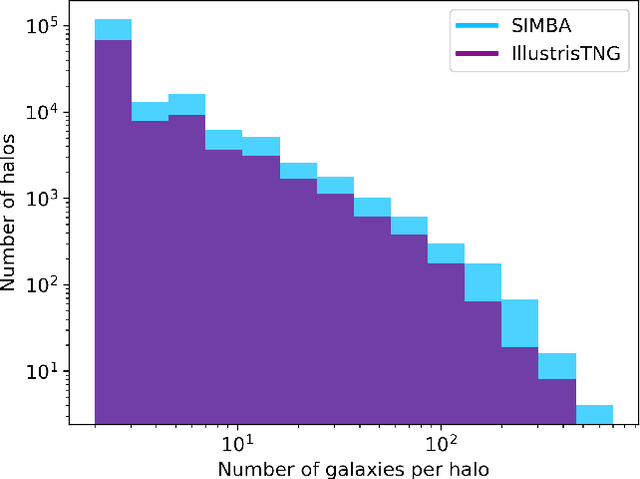

Abstract: Understanding the halo-galaxy connection is fundamental to improving our knowledge of the nature and properties of dark matter. In this work we build a model that infers the mass of a halo given the positions, velocities, stellar masses, and radii of the galaxies it hosts. In order to capture information from correlations among galaxy properties and their phase-space, we use Graph Neural Networks (GNNs), which are designed to work with irregular and sparse data. We train our models on galaxies from more than 2,000 state-of-the-art simulations from the Cosmology and Astrophysics with MachinE Learning Simulations (CAMELS) project. Our model, which accounts for cosmological and astrophysical uncertainties, is able to constrain the masses of the halos with a $\sim$0.2 dex accuracy. Furthermore, a GNN trained on a suite of simulations is able to preserve part of its accuracy when tested on simulations run with a different code that utilizes a distinct subgrid physics model, showing the robustness of our method. The PyTorch Geometric implementation of the GNN is publicly available on GitHub at https://github.com/PabloVD/HaloGraphNet
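For readers unfamiliar with the unit, an accuracy quoted in dex is an uncertainty in $\log_{10}$ of the quantity, so 0.2 dex corresponds to a multiplicative factor of $10^{0.2}$. The sketch below works this out; the example halo mass and the function name are hypothetical and are not values from the paper.

```python
def dex_to_factor(dex):
    """Convert an uncertainty in dex (log10 units) to a multiplicative factor."""
    return 10.0 ** dex


# 0.2 dex corresponds to a factor of roughly 1.58.
factor = dex_to_factor(0.2)

# Hypothetical halo mass in solar masses, used only for illustration.
mass = 1.0e12
low, high = mass / factor, mass * factor
```

Under this reading, a 0.2 dex uncertainty on the hypothetical mass above spans the range from `low` to `high`, that is, the mass divided and multiplied by about 1.58.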

The CAMELS Multifield Dataset: Learning the Universe's Fundamental Parameters with Artificial Intelligence

Sep 22, 2021

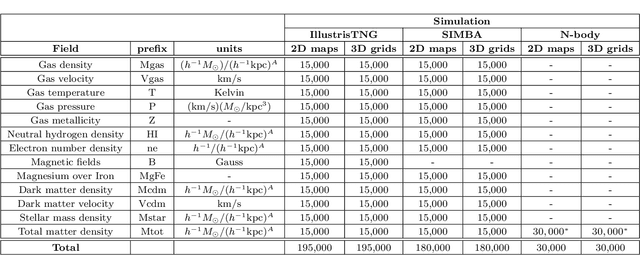

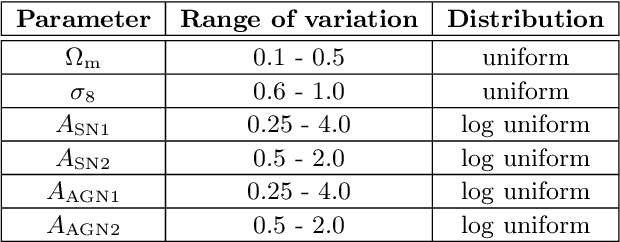

Abstract: We present the Cosmology and Astrophysics with MachinE Learning Simulations (CAMELS) Multifield Dataset, CMD, a collection of hundreds of thousands of 2D maps and 3D grids containing many different properties of cosmic gas, dark matter, and stars from 2,000 distinct simulated universes at several cosmic times. The 2D maps and 3D grids represent cosmic regions that span $\sim$100 million light years and have been generated from thousands of state-of-the-art hydrodynamic and gravity-only N-body simulations from the CAMELS project. Designed to train machine learning models, CMD is the largest dataset of its kind, containing more than 70 terabytes of data. In this paper we describe CMD in detail and outline a few of its applications. We focus our attention on one such task, parameter inference, formulating the problems we face as a challenge to the community. We release all data and provide further technical details at https://camels-multifield-dataset.readthedocs.io.

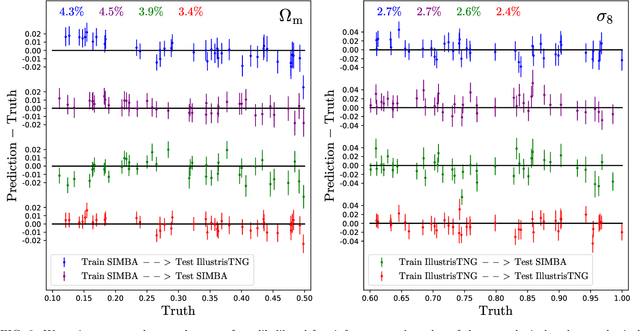

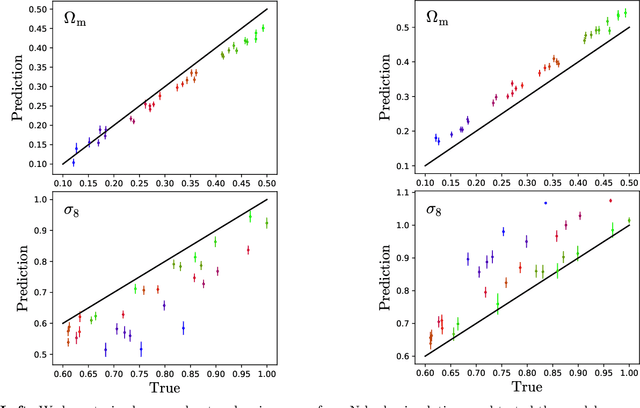

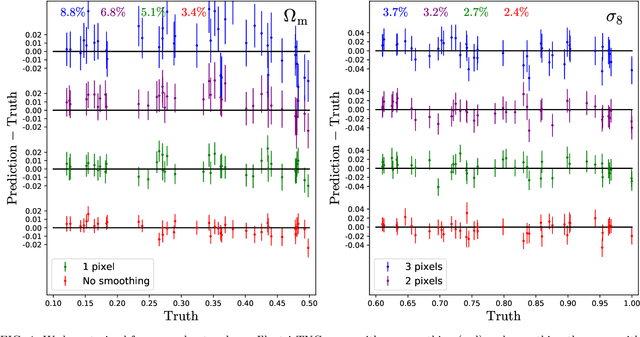

Robust marginalization of baryonic effects for cosmological inference at the field level

Sep 21, 2021

Abstract: We train neural networks to perform likelihood-free inference from $(25\,h^{-1}{\rm Mpc})^2$ 2D maps containing the total mass surface density from thousands of hydrodynamic simulations of the CAMELS project. We show that the networks can extract information beyond one-point functions and power spectra from all resolved scales ($\gtrsim 100\,h^{-1}{\rm kpc}$) while performing a robust marginalization over baryonic physics at the field level: the model can infer the value of $\Omega_{\rm m} (\pm 4\%)$ and $\sigma_8 (\pm 2.5\%)$ from simulations completely different to the ones used to train it.

Multifield Cosmology with Artificial Intelligence

Sep 20, 2021

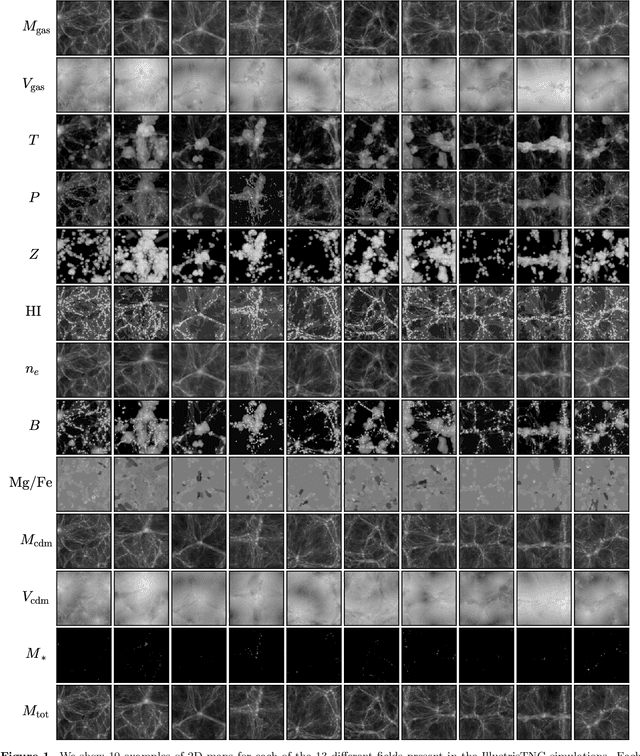

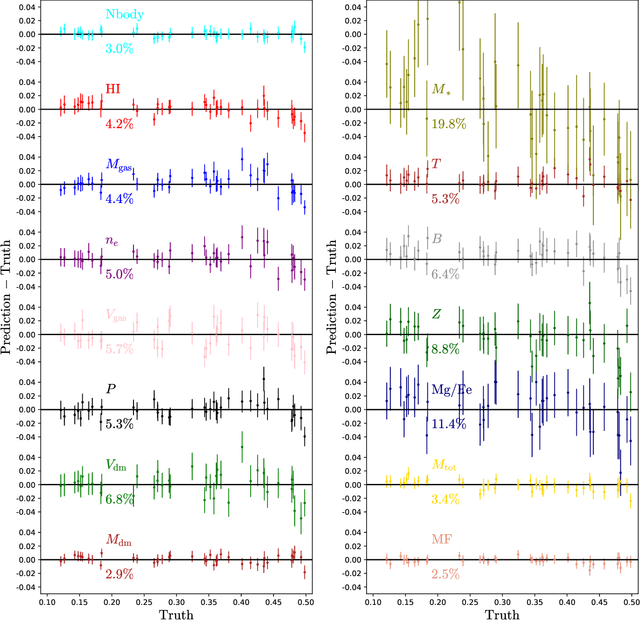

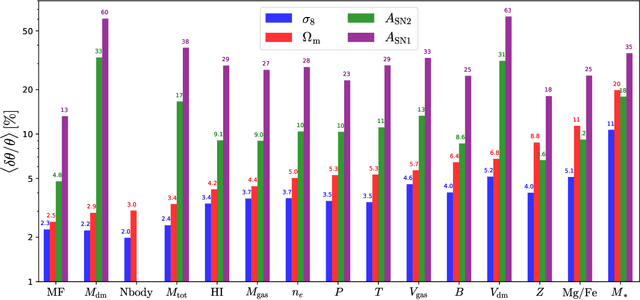

Abstract: Astrophysical processes such as feedback from supernovae and active galactic nuclei modify the properties and spatial distribution of dark matter, gas, and galaxies in a poorly understood way. This uncertainty is one of the main theoretical obstacles to extracting information from cosmological surveys. We use 2,000 state-of-the-art hydrodynamic simulations from the CAMELS project spanning a wide variety of cosmological and astrophysical models and generate hundreds of thousands of 2-dimensional maps for 13 different fields: from dark matter to gas and stellar properties. We use these maps to train convolutional neural networks to extract the maximum amount of cosmological information while marginalizing over astrophysical effects at the field level. Although our maps only cover a small area of $(25~h^{-1}{\rm Mpc})^2$, and the different fields are contaminated by astrophysical effects in very different ways, our networks can infer the values of $\Omega_{\rm m}$ and $\sigma_8$ with a few percent level precision for most of the fields. We find that the marginalization performed by the network retains a wealth of cosmological information compared to a model trained on maps from gravity-only N-body simulations that are not contaminated by astrophysical effects. Finally, we train our networks on multifields -- 2D maps that contain several fields as different colors or channels -- and find that not only can they infer the value of all parameters with higher accuracy than networks trained on individual fields, but they can also constrain the value of $\Omega_{\rm m}$ with higher accuracy than the maps from the N-body simulations.
