Marc Bosch

Accenture-NVS1: A Novel View Synthesis Dataset

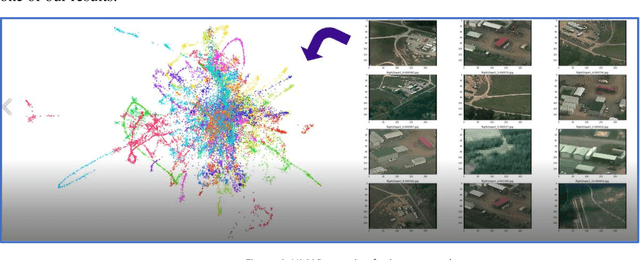

Mar 24, 2025

Abstract:This paper introduces ACC-NVS1, a specialized dataset designed for research on Novel View Synthesis specifically for airborne and ground imagery. Data for ACC-NVS1 was collected in Austin, TX and Pittsburgh, PA in 2023 and 2024. The collection encompasses six diverse real-world scenes captured from both airborne and ground cameras, resulting in a total of 148,000 images. ACC-NVS1 addresses challenges such as varying altitudes and transient objects. This dataset is intended to supplement existing datasets, providing additional resources for comprehensive research, rather than serving as a benchmark.

Improving Emergency Response during Hurricane Season using Computer Vision

Sep 08, 2020

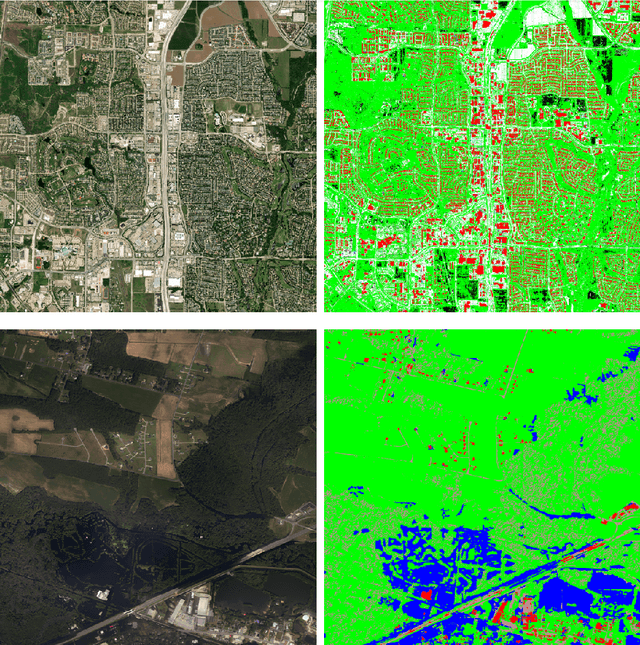

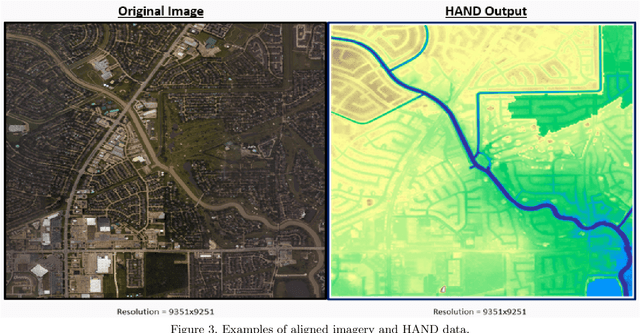

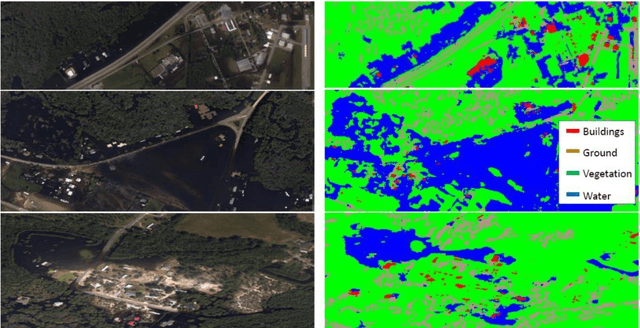

Abstract:We have developed a framework for crisis response and management that incorporates the latest technologies in computer vision (CV), inland flood prediction, damage assessment and data visualization. The framework uses data collected before, during, and after the crisis to enable rapid and informed decision making during all phases of disaster response. Our computer-vision model analyzes spaceborne and airborne imagery to detect relevant features during and after a natural disaster and creates metadata that is transformed into actionable information through web-accessible mapping tools. In particular, we have designed an ensemble of models to identify features including water, roads, buildings, and vegetation from the imagery. We have investigated techniques to bootstrap and reduce dependency on large data annotation efforts by using open source labels, including OpenStreetMap, and by adding complementary data sources, including Height Above Nearest Drainage (HAND), as a side channel to the network's input to encourage it to learn other features orthogonal to visual characteristics. Modeling efforts include modification of connected U-Nets for (1) semantic segmentation, (2) flood line detection, and (3) damage assessment. In particular, for the case of damage assessment, we added a second encoder to U-Net so that it could learn pre-event and post-event image features simultaneously. Through this method, the network is able to learn the difference between the pre- and post-disaster images, and therefore more effectively classify the level of damage. We have validated our approaches using publicly available data from the National Oceanic and Atmospheric Administration (NOAA)'s Remote Sensing Division, which displays city and street-level details as mosaic tile images, as well as data released as part of the xView2 challenge.

Improving Community Resiliency and Emergency Response With Artificial Intelligence

May 28, 2020

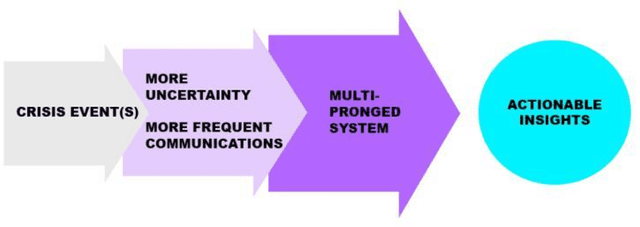

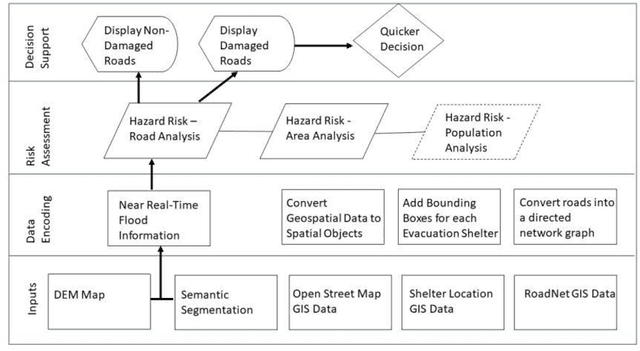

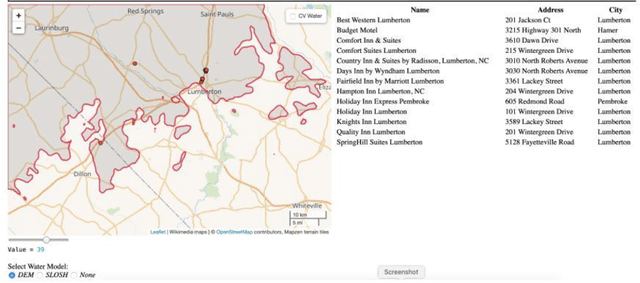

Abstract:New crisis response and management approaches that incorporate the latest information technologies are essential in all phases of emergency preparedness and response, including the planning, response, recovery, and assessment phases. Accurate and timely information is as crucial as rapid and coherent coordination among the responding organizations. We are working towards a multipronged emergency response tool that provides stakeholders timely access to comprehensive, relevant, and reliable information. The faster emergency personnel are able to analyze, disseminate and act on key information, the more effective and timelier their response will be and the greater the benefit to affected populations. Our tool encodes multiple layers of open source geospatial data, including flood risk location, road network strength, inundation maps that proxy inland flooding, and computer vision semantic segmentation for estimating flooded areas and damaged infrastructure. These data layers are combined and used as input data for machine learning algorithms that perform tasks such as finding the best evacuation routes before, during and after an emergency or providing a list of available lodging for first responders in an impacted area. Even though our system could be used in a number of use cases where people are forced from one location to another, we demonstrate the feasibility of our system for the use case of Hurricane Florence in Lumberton, North Carolina.

A Common Operating Picture Framework Leveraging Data Fusion and Deep Learning

Jan 16, 2020

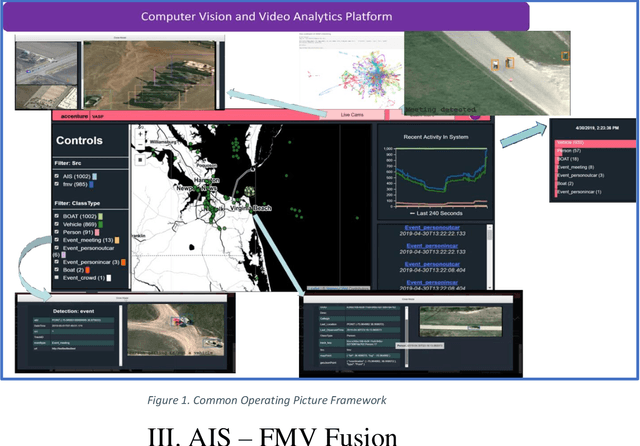

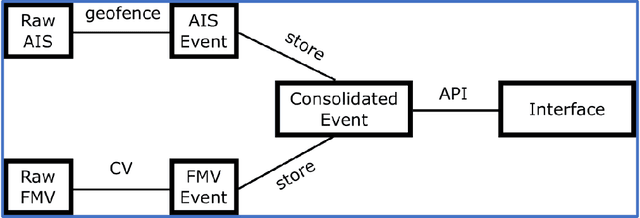

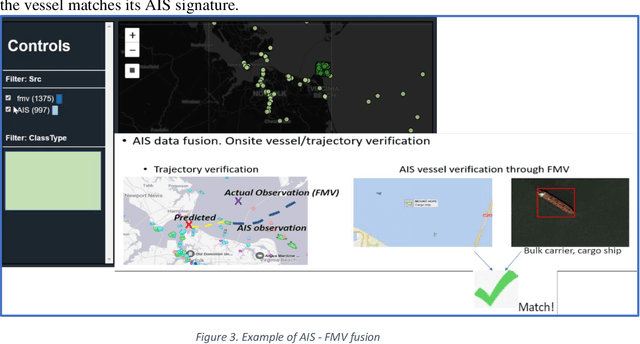

Abstract:Organizations are starting to realize the combined power of data and data-driven algorithmic models to gain insights, situational awareness, and advance their mission. A common challenge to gaining insights is connecting inherently different datasets. Taken separately, these datasets (e.g. geocoded features, video streams, raw text, social network data, etc.) provide very narrow answers; collectively, however, they can provide new capabilities. In this work, we present a data fusion framework for accelerating solutions for Processing, Exploitation, and Dissemination (PED). Our platform is a collection of services that extract information from several data sources separately by leveraging deep learning and other means of processing. This information is fused by a set of analytical engines that perform data correlations, searches, and other modeling operations to combine information from the disparate data sources. As a result, events of interest are detected, geolocated, logged, and presented in a common operating picture. This common operating picture allows the user to visualize in real time all the data sources, both separately and in combination. In addition, forensic activities have been implemented and made available through the framework. Users can review archived results and compare them to the most recent snapshot of the operational environment. In our first iteration we have focused on visual data (FMV, WAMI, CCTV/PTZ-Cameras, open source video, etc.) and AIS data streams (satellite and terrestrial sources). As a proof-of-concept, in our experiments we show how FMV detections can be combined with vessel tracking signals from AIS sources to confirm identity, tip-and-cue aerial reconnaissance, and monitor vessel activity in an area.
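The abstract does not say how FMV detections are associated with AIS signals. As a rough illustration only, one simple approach is nearest-neighbor matching in space and time. In the sketch below, the dictionary fields (`id`, `mmsi`, `lat`, `lon`, `t`), the function names, and the distance and time thresholds are all assumptions made for the example and are not taken from the paper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, used by the haversine formula


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def match_detections_to_ais(detections, ais_reports,
                            max_distance_m=200.0, max_time_gap_s=60.0):
    """Map each geolocated detection id to the MMSI of the closest AIS report.

    A detection maps to None when no AIS report lies within both the
    (hypothetical) distance and time thresholds.
    """
    matches = {}
    for det in detections:
        best_mmsi, best_dist = None, max_distance_m
        for rep in ais_reports:
            if abs(det["t"] - rep["t"]) > max_time_gap_s:
                continue  # report is too old or too new to compare
            dist = haversine_m(det["lat"], det["lon"], rep["lat"], rep["lon"])
            if dist <= best_dist:
                best_mmsi, best_dist = rep["mmsi"], dist
        matches[det["id"]] = best_mmsi
    return matches


detections = [
    {"id": "det-1", "lat": 29.0, "lon": -90.0, "t": 0.0},
    {"id": "det-2", "lat": 30.0, "lon": -90.0, "t": 0.0},
]
ais_reports = [
    {"mmsi": "111111111", "lat": 29.0005, "lon": -90.0, "t": 10.0},
    {"mmsi": "222222222", "lat": 29.01, "lon": -90.0, "t": 5.0},
]
matches = match_detections_to_ais(detections, ais_reports)
```

In this toy input, `det-1` lies roughly 56 m from the first AIS report and is matched to it, while `det-2` has no report nearby and stays unmatched. An unmatched vessel detection is the kind of result that could prompt a follow-up look.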

Contextual Sense Making by Fusing Scene Classification, Detections, and Events in Full Motion Video

Jan 16, 2020

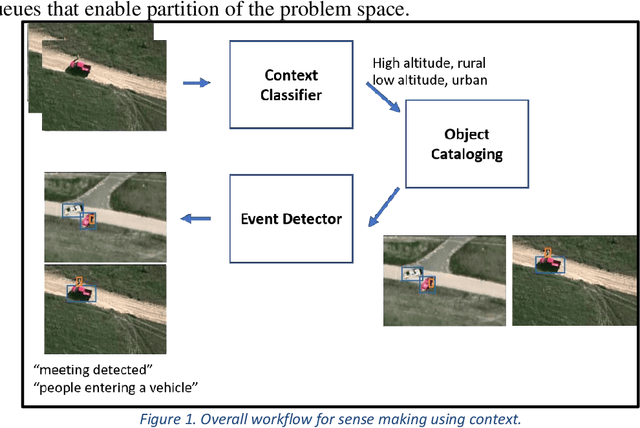

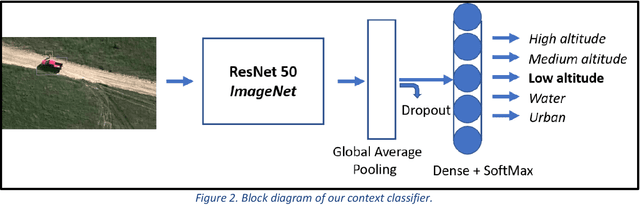

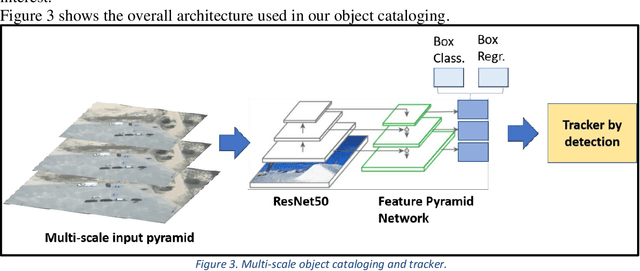

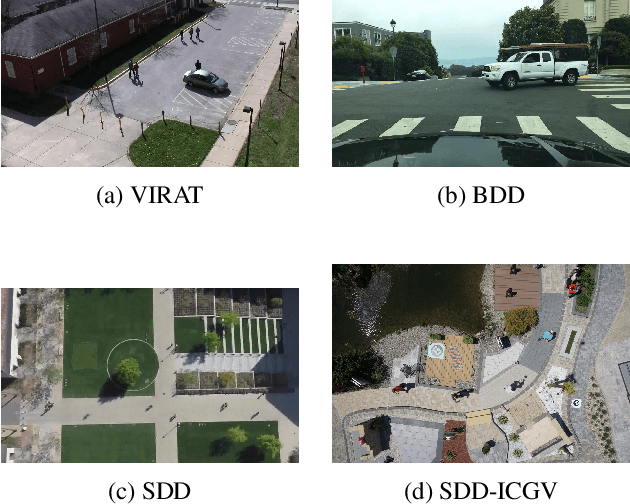

Abstract:With the proliferation of imaging sensors, the volume of multi-modal imagery far exceeds the ability of human analysts to adequately consume and exploit it. Full motion video (FMV) possesses the extra challenge of containing large amounts of redundant temporal data. We aim to address the needs of human analysts to consume and exploit data given aerial FMV. We have investigated and designed a system capable of detecting events and activities of interest that deviate from the baseline patterns of observation given FMV feeds. We have divided the problem into three tasks: (1) context awareness, (2) object cataloging, and (3) event detection. The goal of context awareness is to constrain the problem of visual search and detection in video data. A custom image classifier categorizes the scene with one or multiple labels to identify the operating context and environment. This step helps reduce the semantic search space of downstream tasks in order to increase their accuracy. The second step is object cataloging, where an ensemble of object detectors locates and labels any known objects found in the scene (people, vehicles, boats, planes, buildings, etc.). Finally, context information and detections are sent to the event detection engine to monitor for certain behaviors. A series of analytics monitor the scene by tracking object counts and object interactions. If these object interactions are not declared to be commonly observed in the current scene, the system will report, geolocate, and log the event. Events of interest include identifying a gathering of people as a meeting and/or a crowd, alerting when there are boats on a beach unloading cargo, increased count of people entering a building, people getting in and/or out of vehicles of interest, etc. We have applied our methods on data from different sensors at different resolutions in a variety of geographical areas.
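The final step described above, reporting interactions that are not declared as common for the current scene, can be sketched as a lookup against per-scene allow-lists. This is a minimal illustration under assumed inputs: the scene labels, the table of common interactions, and the function name `uncommon_interactions` are hypothetical and do not come from the paper.

```python
def uncommon_interactions(scene_labels, interactions, common_by_scene):
    """Return the interactions not declared as common for any of the scene labels.

    scene_labels:    labels produced by the (assumed) scene classifier
    interactions:    pairs of object classes seen interacting, e.g. ("boat", "cargo")
    common_by_scene: hypothetical table mapping a scene label to its common pairs
    """
    expected = set()
    for label in scene_labels:
        for pair in common_by_scene.get(label, ()):
            expected.add(tuple(sorted(pair)))  # make pairs order-independent

    reported = []
    for pair in interactions:
        key = tuple(sorted(pair))
        if key not in expected and key not in reported:
            reported.append(key)
    return reported


# Hypothetical allow-list: what is considered routine in each scene type.
common_by_scene = {
    "beach": [("person", "person"), ("boat", "person")],
    "parking_lot": [("person", "vehicle")],
}

events = uncommon_interactions(
    scene_labels=["beach"],
    interactions=[("person", "person"), ("cargo", "boat"), ("person", "boat")],
    common_by_scene=common_by_scene,
)
```

Here only the boat and cargo pair is returned, since the other two interactions are listed as routine for a beach scene. A real system would also attach a location and timestamp to each reported event before logging it.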

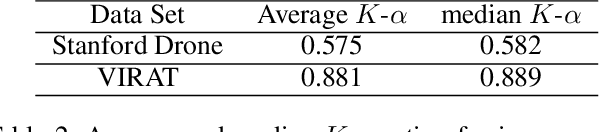

Assessing Data Quality of Annotations with Krippendorff Alpha For Applications in Computer Vision

Dec 20, 2019

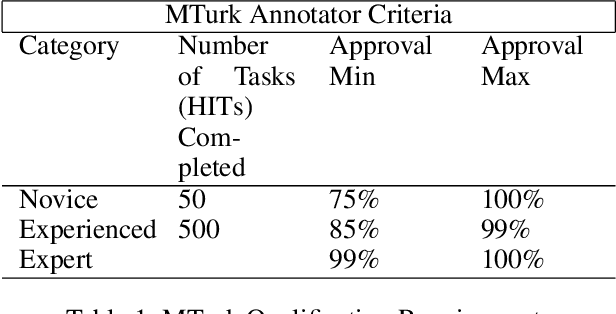

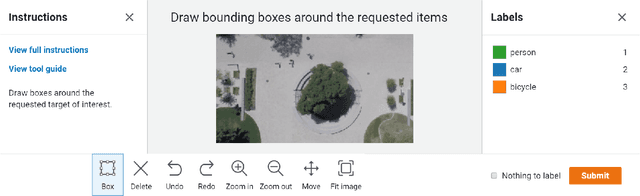

Abstract:Current supervised deep learning frameworks rely on annotated data for modeling the underlying data distribution of a given task. In particular for computer vision algorithms powered by deep learning, the quality of annotated data is the most critical factor in achieving the desired algorithm performance. Data annotation is, typically, a manual process where the annotator follows guidelines and operates in a best-guess manner. Differences in labeling criteria among annotators can produce discrepancies in labeling results. This may impact the algorithm inference performance. Given the popularity and widespread use of deep learning in computer vision, more and more custom datasets are needed to train neural networks to tackle different kinds of tasks. Unfortunately, there is no full understanding of the factors that affect annotated data quality, and how it translates into algorithm performance. In this paper we studied this problem for object detection and recognition. We conducted several data annotation experiments to measure inter-annotator agreement and consistency, as well as how the selection of ground truth impacts the perceived algorithm performance. We propose a methodology to monitor the quality of annotations during the labeling of images and how it can be used to measure performance. We also show that neglecting to monitor the annotation process can result in significant loss in algorithm precision. Through these experiments, we observe that knowledge of the labeling process, training data, and ground truth data used for algorithm evaluation are fundamental components to accurately assess trustworthiness of an AI system.
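For readers unfamiliar with the agreement measure named in the title, the sketch below computes Krippendorff's alpha for nominal labels using the standard coincidence-matrix formulation, alpha = 1 - D_o / D_e. It is a generic illustration and not the authors' pipeline: the function name and the input layout (one list of labels per annotated item, with `None` for a missing annotation) are assumptions made for this example.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    units: list of lists; each inner list holds the labels that different
           annotators assigned to one item (None marks a missing label).
    """
    # Coincidence matrix: each ordered pair of labels within a unit
    # contributes 1 / (m - 1), where m is the number of labels in that unit.
    coincidences = Counter()
    for labels in units:
        values = [v for v in labels if v is not None]
        m = len(values)
        if m < 2:
            continue  # a single label cannot be paired with anything
        for a, b in permutations(values, 2):
            coincidences[(a, b)] += 1.0 / (m - 1)

    # Marginal totals per label and the total number of pairable values.
    totals = Counter()
    for (a, _b), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    if n <= 1:
        raise ValueError("need at least one unit with two or more labels")

    observed = sum(w for (a, b), w in coincidences.items() if a != b) / n
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n * (n - 1))
    if expected == 0:
        return float("nan")  # only one label was ever used; alpha is undefined
    return 1.0 - observed / expected


# Two annotators labeling four image regions; they disagree on the second one.
alpha = krippendorff_alpha_nominal(
    [["car", "car"], ["car", "boat"], ["boat", "boat"], ["boat", "boat"]]
)
```

For this toy input, alpha works out to 8/15 (about 0.53). A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance. Object detection annotations would first need to be reduced to comparable units, for instance by matching boxes across annotators, a step this sketch leaves out.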

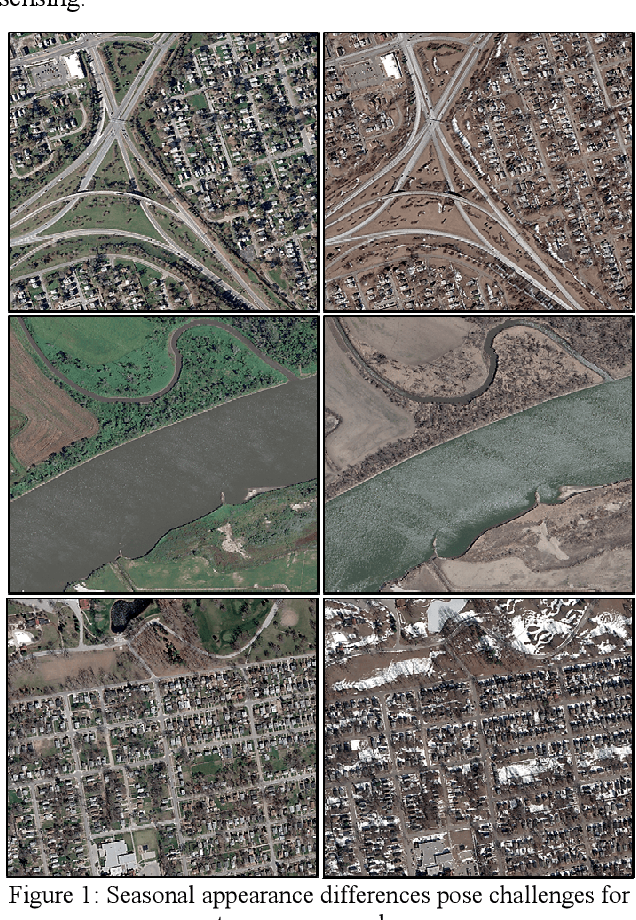

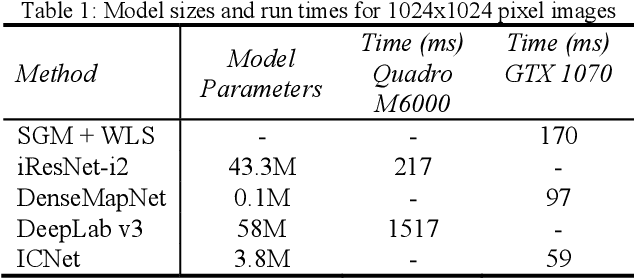

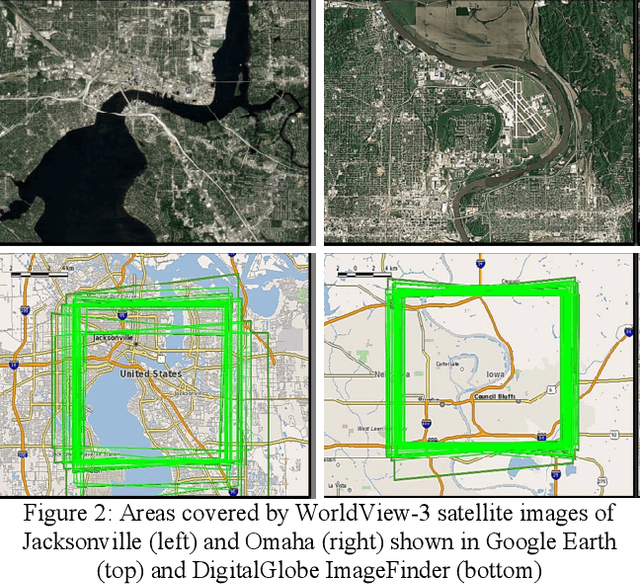

Semantic Stereo for Incidental Satellite Images

Nov 21, 2018

Abstract:The increasingly common use of incidental satellite images for stereo reconstruction versus rigidly tasked binocular or trinocular coincident collection is helping to enable timely global-scale 3D mapping; however, reliable stereo correspondence from multi-date image pairs remains very challenging due to seasonal appearance differences and scene change. Promising recent work suggests that semantic scene segmentation can provide a robust regularizing prior for resolving ambiguities in stereo correspondence and reconstruction problems. To enable research for pairwise semantic stereo and multi-view semantic 3D reconstruction with incidental satellite images, we have established a large-scale public dataset including multi-view, multi-band satellite images and ground truth geometric and semantic labels for two large cities. To demonstrate the complementary nature of the stereo and segmentation tasks, we present lightweight public baselines adapted from recent state of the art convolutional neural network models and assess their performance.

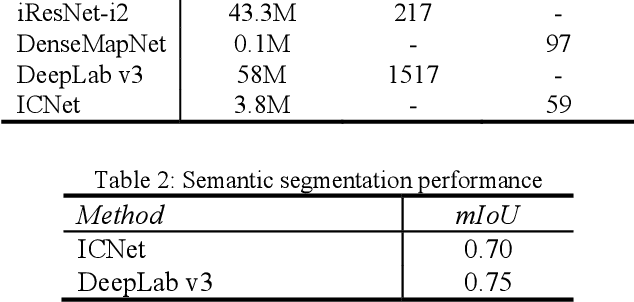

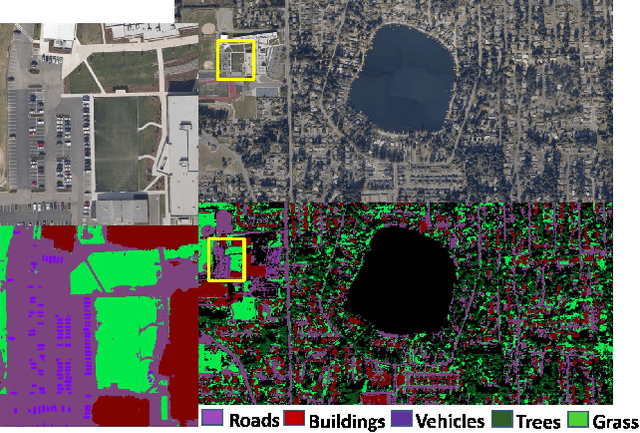

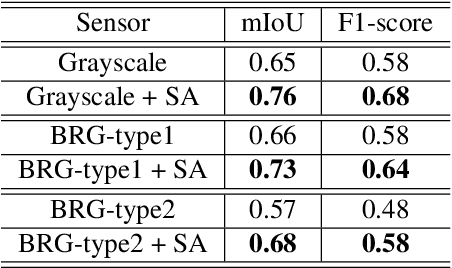

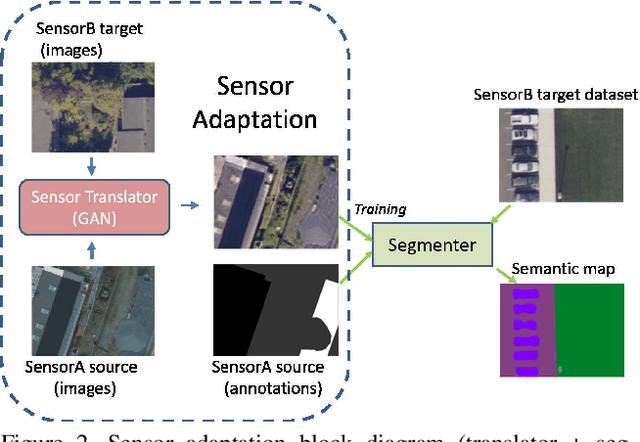

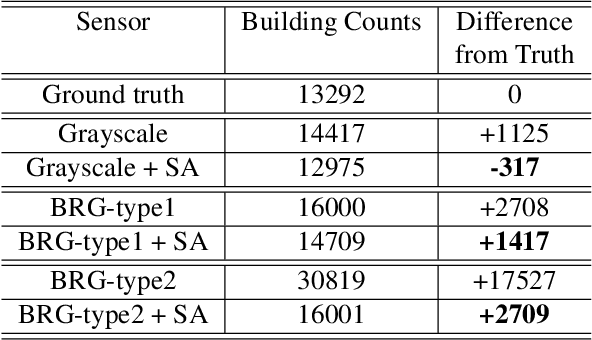

Sensor Adaptation for Improved Semantic Segmentation of Overhead Imagery

Nov 20, 2018

Abstract:Semantic segmentation is a powerful method to facilitate visual scene understanding. Each pixel is assigned a label according to a pre-defined list of object classes and semantic entities. This becomes very useful as a means to summarize large scale overhead imagery. In this paper we present our work on semantic segmentation with applications to overhead imagery. We propose an algorithm that builds and extends upon the DeepLab framework to be able to refine and resolve small objects (relative to the image size) such as vehicles. We have also investigated sensor adaptation as a means to augment available training data to be able to reduce some of the shortcomings of neural networks when deployed in new environments and to new sensors. We report results on several datasets and compare performance with other state-of-the-art architectures.

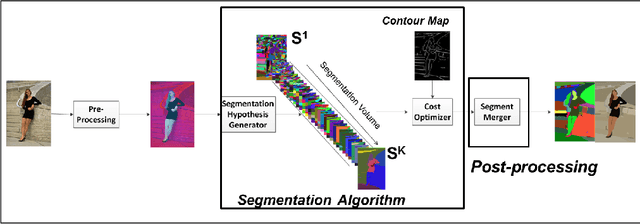

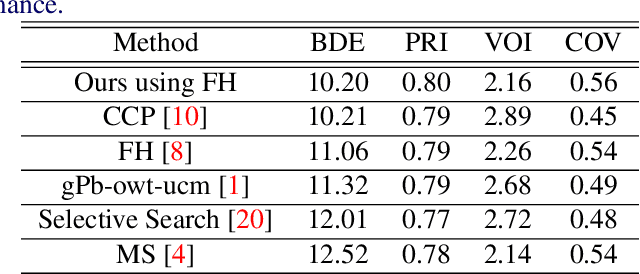

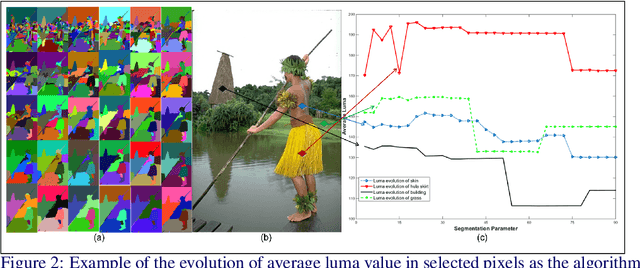

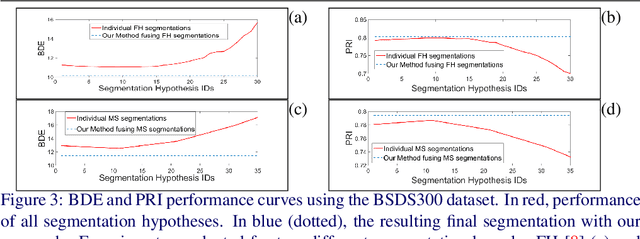

Improved Image Segmentation via Cost Minimization of Multiple Hypotheses

Jan 31, 2018

Abstract:Image segmentation is an important component of many image understanding systems. It aims to group pixels in a spatially and perceptually coherent manner. Typically, these algorithms have a collection of parameters that control the degree of over-segmentation produced. It still remains a challenge to properly select such parameters for human-like perceptual grouping. In this work, we exploit the diversity of segments produced by different choices of parameters. We scan the segmentation parameter space and generate a collection of image segmentation hypotheses (from highly over-segmented to under-segmented). These are fed into a cost minimization framework that produces the final segmentation by selecting segments that: (1) better describe the natural contours of the image, and (2) are more stable and persistent among all the segmentation hypotheses. We compare our algorithm's performance with state-of-the-art algorithms, showing that we can achieve improved results. We also show that our framework is robust to the choice of segmentation kernel that produces the initial set of hypotheses.

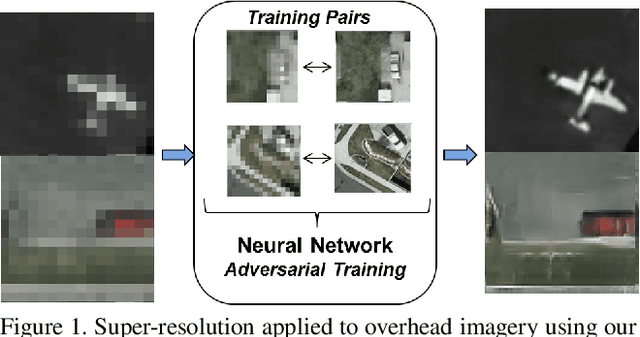

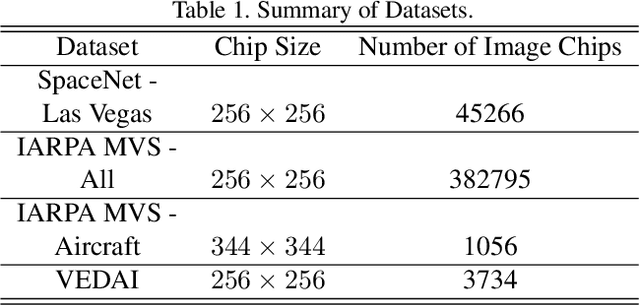

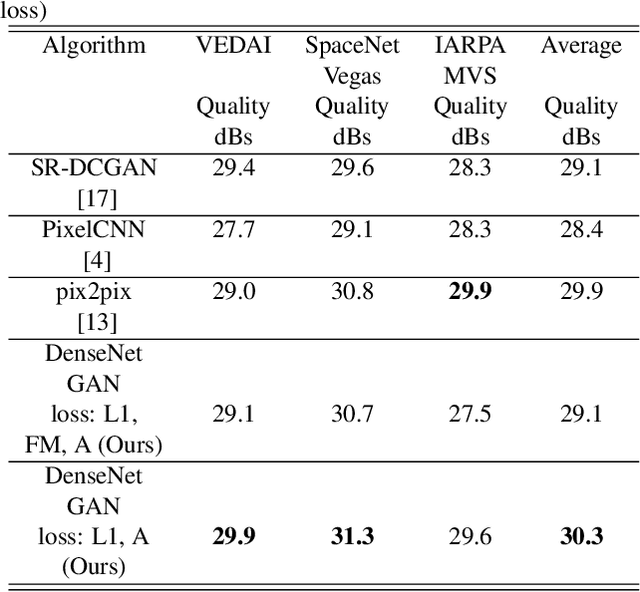

Super-Resolution for Overhead Imagery Using DenseNets and Adversarial Learning

Nov 28, 2017

Abstract:Recent advances in Generative Adversarial Learning allow for new modalities of image super-resolution by learning low to high resolution mappings. In this paper we present our work using Generative Adversarial Networks (GANs) with applications to overhead and satellite imagery. We have experimented with several state-of-the-art architectures. We propose a GAN-based architecture using densely connected convolutional neural networks (DenseNets) to be able to super-resolve overhead imagery with a factor of up to 8x. We have also investigated resolution limits of these networks. We report results on several publicly available datasets, including SpaceNet data and IARPA Multi-View Stereo Challenge, and compare performance with other state-of-the-art architectures.
