Margaret Smith

A Common Operating Picture Framework Leveraging Data Fusion and Deep Learning

Jan 16, 2020

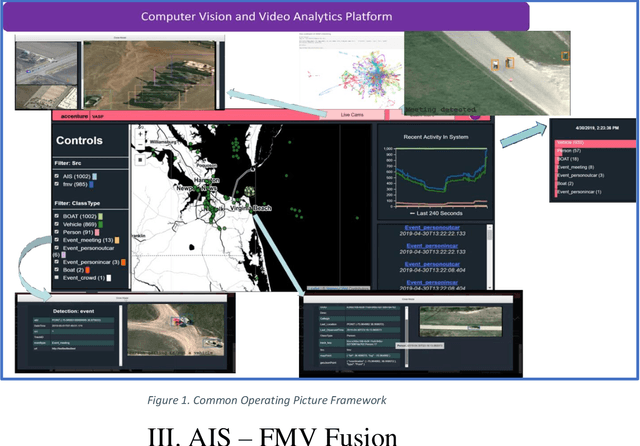

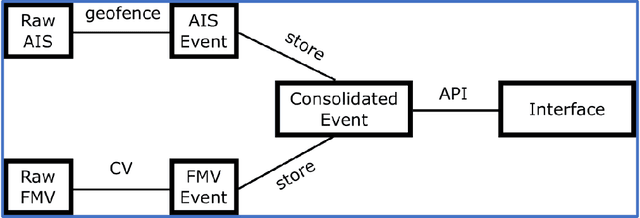

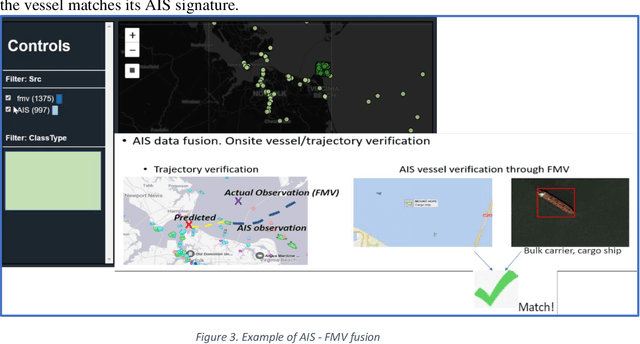

Abstract: Organizations are starting to realize the combined power of data and data-driven algorithmic models to gain insights, improve situational awareness, and advance their mission. A common challenge to gaining insights is connecting inherently different datasets. Taken separately, these datasets (e.g., geocoded features, video streams, raw text, social network data) provide very narrow answers; collectively, however, they can provide new capabilities. In this work, we present a data fusion framework for accelerating solutions for Processing, Exploitation, and Dissemination (PED). Our platform is a collection of services that extract information from several data sources separately by leveraging deep learning and other means of processing. This information is fused by a set of analytical engines that perform data correlations, searches, and other modeling operations to combine information from the disparate data sources. As a result, events of interest are detected, geolocated, logged, and presented in a common operating picture. This common operating picture allows the user to visualize all the data sources in real time, both separately and in combination. In addition, forensic activities have been implemented and made available through the framework. Users can review archived results and compare them to the most recent snapshot of the operational environment. In our first iteration we have focused on visual data (FMV, WAMI, CCTV/PTZ cameras, open source video, etc.) and AIS data streams (satellite and terrestrial sources). As a proof of concept, our experiments show how FMV detections can be combined with vessel tracking signals from AIS sources to confirm identity, tip-and-cue aerial reconnaissance, and monitor vessel activity in an area.
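The proof-of-concept fusion step described in the abstract, pairing a geolocated FMV detection with an AIS vessel report, might look roughly like the sketch below. This is not the authors' implementation: the `Detection` and `AisReport` types, the `match_detection` function, and the 200 m / 60 s gating thresholds are all hypothetical, and a simple nearest-neighbor rule with a great-circle (haversine) distance stands in for whatever correlation logic the framework actually uses.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, metres


@dataclass
class AisReport:
    """Hypothetical, simplified AIS position report."""
    mmsi: int          # vessel identifier carried in AIS messages
    lat: float         # degrees
    lon: float         # degrees
    timestamp: float   # seconds since epoch


@dataclass
class Detection:
    """Hypothetical geolocated vessel detection from a video frame."""
    lat: float
    lon: float
    timestamp: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def match_detection(
    det: Detection,
    reports: Sequence[AisReport],
    max_dist_m: float = 200.0,   # assumed spatial gate
    max_dt_s: float = 60.0,      # assumed temporal gate
) -> Optional[AisReport]:
    """Return the closest AIS report inside both gates, or None."""
    best: Optional[AisReport] = None
    best_dist = max_dist_m
    for report in reports:
        # Skip reports that are too old or too new relative to the frame.
        if abs(report.timestamp - det.timestamp) > max_dt_s:
            continue
        dist = haversine_m(det.lat, det.lon, report.lat, report.lon)
        if dist <= best_dist:
            best, best_dist = report, dist
    return best
```

A detection with no match inside the gates returns `None`, which in a setting like the one described could itself be worth surfacing, for example a vessel visible in video that is not broadcasting AIS.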

Contextual Sense Making by Fusing Scene Classification, Detections, and Events in Full Motion Video

Jan 16, 2020

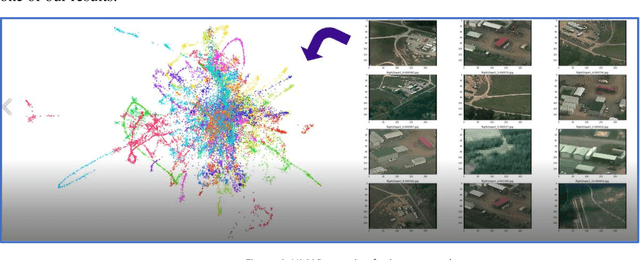

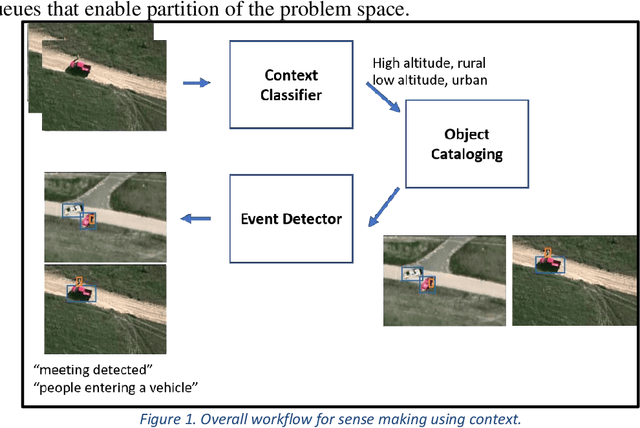

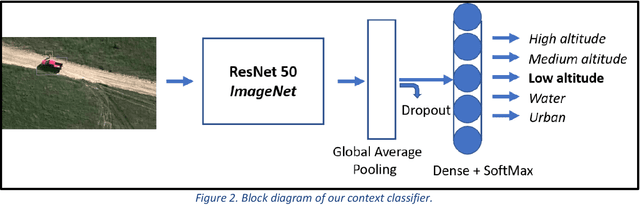

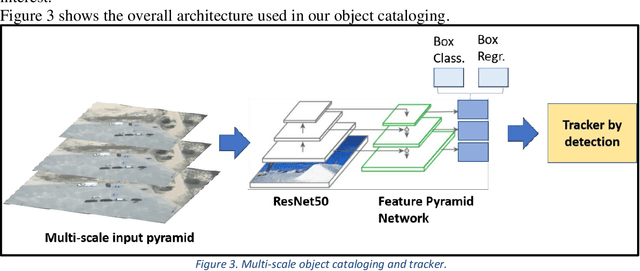

Abstract: With the proliferation of imaging sensors, the volume of multi-modal imagery far exceeds the ability of human analysts to adequately consume and exploit it. Full motion video (FMV) poses the extra challenge of containing large amounts of redundant temporal data. We aim to address the needs of human analysts to consume and exploit data given aerial FMV. We have investigated and designed a system capable of detecting events and activities of interest that deviate from the baseline patterns of observation given FMV feeds. We have divided the problem into three tasks: (1) context awareness, (2) object cataloging, and (3) event detection. The goal of context awareness is to constrain the problem of visual search and detection in video data. A custom image classifier categorizes the scene with one or multiple labels to identify the operating context and environment. This step helps reduce the semantic search space of downstream tasks in order to increase their accuracy. The second step is object cataloging, where an ensemble of object detectors locates and labels any known objects found in the scene (people, vehicles, boats, planes, buildings, etc.). Finally, context information and detections are sent to the event detection engine to monitor for certain behaviors. A series of analytics monitor the scene by tracking object counts and object interactions. If these object interactions are not declared to be commonly observed in the current scene, the system will report, geolocate, and log the event. Examples of events of interest include a gathering of people identified as a meeting and/or a crowd, boats on a beach unloading cargo, an increased count of people entering a building, and people getting in and/or out of vehicles of interest. We have applied our methods to data from different sensors at different resolutions in a variety of geographical areas.
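As a rough illustration of the third task, the sketch below compares per-frame object counts against a per-scene baseline and flags anything above it. It assumes the scene label and the detected object labels have already been produced by the first two tasks. The `BASELINE` table, its numbers, and the `flag_events` function are hypothetical; the abstract does not say how baselines are declared, and object interactions and geolocation are left out.

```python
from collections import Counter
from typing import Dict, Iterable, List

# Hypothetical baseline: for each scene label, the largest count of each
# object class that is treated as routine. Values are made up for illustration.
BASELINE: Dict[str, Dict[str, int]] = {
    "beach": {"person": 20, "boat": 0},
    "parking_lot": {"person": 5, "vehicle": 50},
}


def flag_events(
    scene_label: str,
    detected_labels: Iterable[str],
    baseline: Dict[str, Dict[str, int]] = BASELINE,
) -> List[str]:
    """Return one message per object class whose count exceeds the baseline.

    Classes missing from a scene's baseline are treated as having a limit of
    zero, so any occurrence is flagged. Unknown scenes flag every class.
    """
    counts = Counter(detected_labels)
    expected = baseline.get(scene_label, {})
    events = []
    for label, n in sorted(counts.items()):
        limit = expected.get(label, 0)
        if n > limit:
            events.append(f"{scene_label}: {n} x {label} exceeds baseline {limit}")
    return events
```

For example, `flag_events("beach", ["person", "person", "boat"])` flags the boat but not the two people, loosely mirroring the "boats on a beach" case mentioned in the abstract.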
