Lyutianyang Zhang

Revisiting Multi-User Downlink in IEEE 802.11ax: A Designers Guide to MU-MIMO

Jun 09, 2024

Abstract: Downlink (DL) Multi-User Multiple Input Multiple Output (MU-MIMO) is a key IEEE 802.11ax technology that allows an Access Point (AP) to transmit data concurrently to a selected subset of clients, increasing network efficiency. However, DL MU-MIMO is typically turned off by default in AP vendors' products, which suggests that turning it on may not increase network efficiency, a counter-intuitive outcome. In this article, we examine in depth the interplay between the underlying factors, namely CSI overhead and spatial correlation, that lead to negative results when DL MU-MIMO is turned on. Furthermore, we provide fundamental guidelines, as a function of the operational scenario, that address the basic question of when DL MU-MIMO should be turned on or off.
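
One way to see why spatial correlation can undermine DL MU-MIMO is the effective channel gain each client keeps after the AP nulls inter-user interference. The sketch below assumes a two-user zero-forcing (ZF) precoder and perfect CSI (the abstract does not name a precoder, and CSI sounding overhead is not modeled); all function names and channel values are hypothetical. For two users, the ZF gain of user k reduces to ||h_k||^2 (1 - rho^2), where rho is the normalized correlation between the two channel vectors.

```python
import math


def inner(a, b):
    """Hermitian inner product a^H b of two complex vectors stored as lists."""
    return sum(x.conjugate() * y for x, y in zip(a, b))


def spatial_correlation(h1, h2):
    """Normalized spatial correlation |h1^H h2| / (||h1|| ||h2||), in [0, 1]."""
    n1 = math.sqrt(inner(h1, h1).real)
    n2 = math.sqrt(inner(h2, h2).real)
    return abs(inner(h1, h2)) / (n1 * n2)


def zf_effective_gains(h1, h2):
    """Per-user effective channel gain under two-user zero-forcing (assumed precoder).

    Gain_k = 1 / [(H H^H)^{-1}]_{kk}, which for two users equals
    ||h_k||^2 * (1 - rho^2) with rho the normalized spatial correlation.
    """
    a = inner(h1, h1).real
    b = inner(h2, h2).real
    c = inner(h1, h2)
    det = a * b - abs(c) ** 2  # Gram determinant; zero when channels are collinear
    # (G^{-1})_{11} = b / det and (G^{-1})_{22} = a / det
    return det / b, det / a


# Hypothetical 4-antenna AP channels (illustrative numbers, not measured data)
h_user1 = [1 + 0j, 1 + 0j, 1 + 0j, 1 + 0j]
h_orth = [1 + 0j, -1 + 0j, 1 + 0j, -1 + 0j]  # orthogonal to h_user1
h_corr = [1 + 0j, 1 + 0j, 1 + 0j, 0.5 + 0j]  # strongly correlated with h_user1

print(zf_effective_gains(h_user1, h_orth))  # (4.0, 4.0): no loss vs. single-user gain of 4
print(zf_effective_gains(h_user1, h_corr))  # user 1 keeps only about 0.23 of a possible 4
```

With orthogonal channels each client retains its full single-user gain, while a correlation close to 1 leaves almost nothing, so the concurrency gain of MU-MIMO can be smaller than the CSI overhead it costs.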

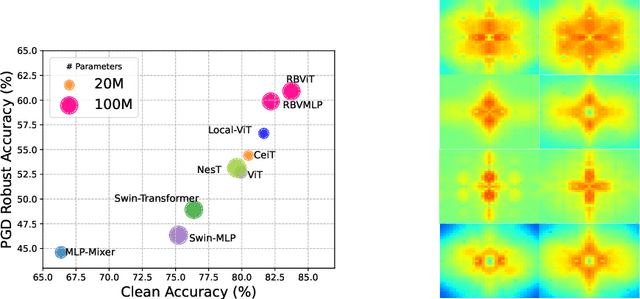

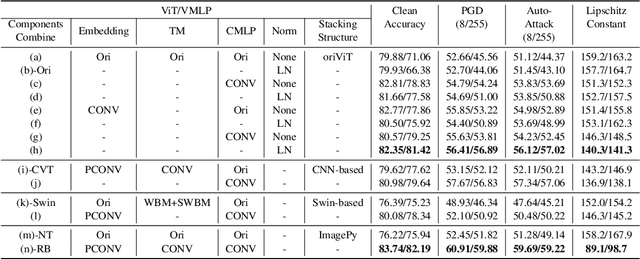

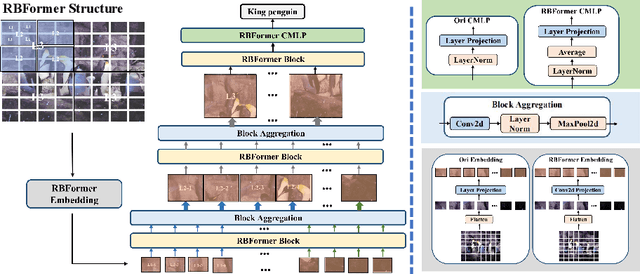

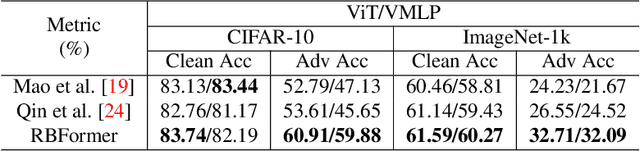

RBFormer: Improve Adversarial Robustness of Transformer by Robust Bias

Sep 23, 2023

Abstract: Recently, there has been a surge of interest in Transformer-based structures, such as Vision Transformer (ViT) and Vision Multilayer Perceptron (VMLP). Compared with earlier convolution-based structures, these Transformer-based structures achieve comparable or superior performance through their distinctive attention-based input token mixer strategy. Adversarial examples have had a profound and detrimental impact on the performance of well-established convolution-based structures, and this inherent vulnerability to adversarial attacks has also been demonstrated in Transformer-based structures. In this paper, we focus on the intrinsic robustness of the structure rather than on new defense measures against adversarial attacks. To reduce this susceptibility, we take a rational structure design approach. Specifically, we enhance the adversarial robustness of the structure by increasing the proportion of high-frequency structural robust biases. The result is a novel structure, the Robust Bias Transformer-based Structure (RBFormer), which is more robust than several existing baseline structures. In extensive experiments, RBFormer outperforms the original structures by a significant margin, with improvements of +16.12% and +5.04% across different evaluation criteria on CIFAR-10 and ImageNet-1k, respectively.

Static Background Removal in Vehicular Radar: Filtering in Azimuth-Elevation-Doppler Domain

Jul 04, 2023

Abstract: A significant challenge in autonomous driving systems lies in image understanding within complex environments, particularly dense traffic scenarios. An effective solution is to remove the background, or static objects, from the scene; this enhances the detection of moving targets, a key component of overall system performance. In this paper, we present an efficient algorithm for background removal in automotive radar applications, specifically using a frequency-modulated continuous wave (FMCW) radar. The proposed algorithm follows a three-step approach: radar signal preprocessing, three-dimensional (3D) ego-motion estimation, and notch filter-based background removal in the azimuth-elevation-Doppler domain. First, we model the received signal of the FMCW multiple-input multiple-output (MIMO) radar and develop a signal processing framework for extracting four-dimensional (4D) point clouds. Next, we introduce a robust 3D ego-motion estimation algorithm that processes the point clouds to accurately estimate the radar ego-motion speed while accounting for Doppler ambiguity. The algorithm then uses the relationship between Doppler velocity, azimuth angle, elevation angle, and radar ego-motion speed to identify the part of the spectrum that belongs to background clutter, and notch filters remove this clutter. We evaluate the algorithm on both simulated data and extensive real-world experiments. The results demonstrate that it efficiently removes background clutter and enhances perception within complex environments. As a fast and computationally efficient solution, the approach addresses the challenges posed by non-homogeneous environments and real-time processing requirements.
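
The Doppler/angle relationship above can be illustrated at the point-cloud level. For a stationary reflector in unit direction u = (cos(el)cos(az), cos(el)sin(az), sin(el)), a radar moving with velocity v observes radial velocity -u . v, so a least-squares fit over static points recovers v, and points that match the prediction can be flagged as clutter. This is a minimal sketch under stated assumptions: the sign convention (positive means receding), the tolerance, and all function names are hypothetical; Doppler ambiguity and outlier rejection, which the paper handles, are ignored; and a per-point threshold stands in for the paper's notch filtering in the azimuth-elevation-Doppler domain.

```python
import math


def unit_direction(azimuth, elevation):
    """Unit line-of-sight vector for azimuth/elevation in radians (x axis forward)."""
    ce = math.cos(elevation)
    return (ce * math.cos(azimuth), ce * math.sin(azimuth), math.sin(elevation))


def predicted_static_doppler(azimuth, elevation, ego_velocity):
    """Radial velocity of a stationary point seen from a radar moving at ego_velocity.

    Assumed sign convention: positive radial velocity means the target recedes.
    """
    u = unit_direction(azimuth, elevation)
    return -sum(ui * vi for ui, vi in zip(u, ego_velocity))


def solve3(m, rhs):
    """Solve a 3x3 linear system m x = rhs with Cramer's rule."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    d = det(m)
    out = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        out.append(det(mc) / d)
    return tuple(out)


def estimate_ego_velocity(points):
    """Least-squares 3D ego-velocity from (azimuth, elevation, doppler) points.

    Assumes the points are static and Doppler is unambiguous; model: doppler = -u . v.
    """
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for az, el, dop in points:
        u = unit_direction(az, el)
        for i in range(3):
            atb[i] += -u[i] * dop
            for j in range(3):
                ata[i][j] += u[i] * u[j]
    return solve3(ata, atb)


def static_mask(points, ego_velocity, tolerance=0.2):
    """True for points whose Doppler matches the static-clutter prediction (m/s)."""
    return [abs(dop - predicted_static_doppler(az, el, ego_velocity)) <= tolerance
            for az, el, dop in points]
```

In practice the fit would need a robust estimator, since moving targets inside the point cloud would otherwise bias the ego-velocity estimate.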

Optimal Beam Training for mmWave Massive MIMO using 802.11ay

Nov 29, 2022

Abstract: Beam training in 802.11ad is a technology that accelerates the selection of the analog weighting vector (AWV) from an existing AWV codebook. However, 5G millimeter-wave (mmWave) multiple-input multiple-output (MIMO) systems challenge this technology because of the higher complexity of their antenna arrays. As a result, the existing 802.11ad codebook is unlikely to include even a near-optimal AWV, and the data rate degrades severely. To address this, this paper proposes a new beam training protocol combined with state-of-the-art compressed sensing channel estimation to find the AWV that maximizes the data rate. Simulations show that the data rate achieved by the AWV selected through 802.11ad beam training is lower than that achieved by the proposed protocol.
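
The codebook limitation can be shown with a toy comparison. The sketch below sweeps a hypothetical constant-modulus codebook (a uniform grid in sin(theta), not the actual 802.11ad codebook) for a half-wavelength uniform linear array and compares the best entry with a phase-aligned AWV computed from a known channel, which is the kind of channel knowledge that compressed sensing estimation aims to supply. All names are illustrative assumptions; for a single-stream link, a larger beamforming gain means a higher SNR and data rate.

```python
import cmath
import math


def steering_vector(n, theta):
    """Half-wavelength ULA response toward angle theta (radians)."""
    return [cmath.exp(1j * math.pi * k * math.sin(theta)) for k in range(n)]


def dft_codebook(n, size):
    """Hypothetical unit-norm codebook: `size` beams on a uniform sin(theta) grid."""
    book = []
    for i in range(size):
        s = -1.0 + (2.0 * i + 1.0) / size  # beam centre in sin(theta)
        book.append([cmath.exp(1j * math.pi * k * s) / math.sqrt(n) for k in range(n)])
    return book


def beam_gain(h, w):
    """Received power |w^H h|^2 for analog weighting vector w."""
    return abs(sum(wi.conjugate() * hi for wi, hi in zip(w, h))) ** 2


def best_codebook_awv(h, book):
    """Exhaustive sweep: index of the codebook entry with the largest gain."""
    return max(range(len(book)), key=lambda i: beam_gain(h, book[i]))


def phase_aligned_awv(h):
    """Unit-norm, constant-modulus AWV co-phasing every antenna with h (needs CSI)."""
    n = len(h)
    return [cmath.exp(1j * cmath.phase(hi)) / math.sqrt(n) for hi in h]


h = steering_vector(16, 0.3)  # single-path channel at an off-grid angle
book = dft_codebook(16, 16)
g_book = beam_gain(h, book[best_codebook_awv(h, book)])
g_opt = beam_gain(h, phase_aligned_awv(h))
print(g_book, g_opt)  # the codebook beam cannot exceed the phase-aligned gain of 16
```

By the triangle inequality, the phase-aligned AWV achieves the largest gain among unit-norm constant-modulus weights, so any fixed codebook can only match it when the channel happens to fall on its grid.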

Architecture-Algorithmic Trade-offs in Multi-path Channel Estimation for mmWAVE Systems

Sep 07, 2022

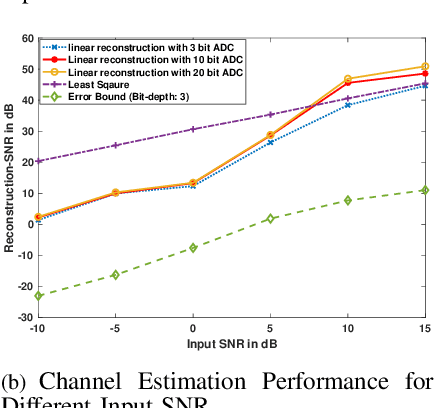

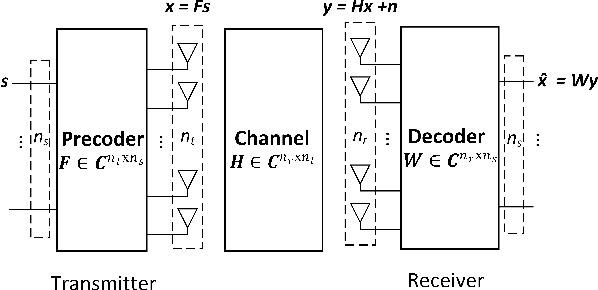

Abstract: 5G mmWave massive MIMO systems are likely to be deployed in dense urban scenarios, where increasing network capacity is the primary objective. A key component of mmWave transceiver design is channel estimation, which is challenging because very large signal bandwidths (on the order of GHz) imply significant resolvable spatial multipath, coupled with a large number of Tx/Rx antennas for large-scale MIMO. This significantly increases training overhead, which in turn leads to unacceptably high computational complexity and power cost. Our work therefore highlights the interplay between transceiver architecture and receiver signal processing algorithm choices that fundamentally address (mobile) handset power consumption with minimal degradation in performance. We investigate the trade-offs enabled by combining a hybrid beamforming mmWave receiver with channel estimation algorithms that exploit the sparsity available in such wideband scenarios. A compressive sensing (CS) framework for sparse channel estimation, Binary Iterative Hard Thresholding (BIHT) \cite{jacques2013robust} followed by a linear reconstruction method with varying quantization (ADC) levels, is explored to compare the trade-offs between bit depth and sampling rate for a given ADC power budget. The performance of the BIHT + linear reconstruction method is analyzed via simulation studies for 5G-specified multipath channel models and compared to oracle-assisted bounds for validation.
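
For readers unfamiliar with BIHT, a minimal sketch of its core iteration follows: given one-bit measurements y = sign(A x) of a K-sparse x, it repeats x <- H_K(x + (tau/2) A^T (y - sign(A x))), where H_K keeps the K largest-magnitude entries, and normalizes the result because one-bit measurements discard amplitude. Assumptions: a real-valued model (mmWave channels are complex), a Gaussian measurement matrix, the step size tau = 1/M, and sign(0) = +1 are illustrative choices; the linear reconstruction stage and the ADC bit-depth study are not shown.

```python
import math
import random


def sign(v):
    """One-bit quantizer; maps 0 to +1 (an assumed tie-breaking convention)."""
    return 1.0 if v >= 0 else -1.0


def hard_threshold(x, k):
    """Keep the k largest-magnitude entries of x and zero the rest."""
    keep = set(sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k])
    return [xi if i in keep else 0.0 for i, xi in enumerate(x)]


def matvec(a, x):
    """Compute A x for A stored as a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def rmatvec(a, r):
    """Compute A^T r for A stored as a list of rows."""
    out = [0.0] * len(a[0])
    for row, ri in zip(a, r):
        for j, aij in enumerate(row):
            out[j] += aij * ri
    return out


def biht(a, y, k, iters=100, tau=None):
    """Binary Iterative Hard Thresholding for y = sign(A x) with x k-sparse.

    Returns a unit-norm estimate, since one-bit data carry no amplitude.
    """
    m, n = len(a), len(a[0])
    tau = 1.0 / m if tau is None else tau  # step size: a tuning assumption
    x = [0.0] * n
    for _ in range(iters):
        residual = [yi - sign(v) for yi, v in zip(y, matvec(a, x))]
        grad = rmatvec(a, residual)
        x = hard_threshold([xi + 0.5 * tau * gi for xi, gi in zip(x, grad)], k)
    norm = math.sqrt(sum(v * v for v in x))
    return [v / norm for v in x] if norm > 0 else x


random.seed(0)
n, m, k = 40, 120, 3
A = [[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
x_true = [0.0] * n
x_true[3], x_true[17], x_true[30] = 1.0, -0.7, 0.4  # hypothetical sparse "channel"
y = [sign(v) for v in matvec(A, x_true)]
x_hat = biht(A, y, k)
```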

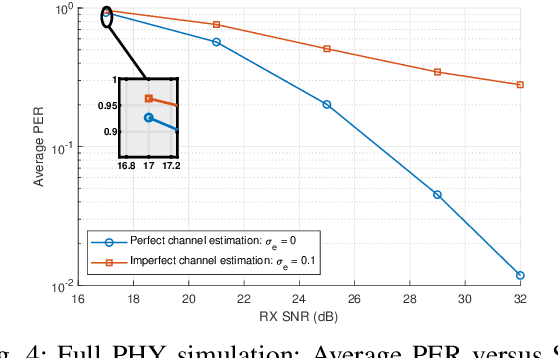

Efficient PHY Layer Abstraction under Imperfect Channel Estimation

May 24, 2022

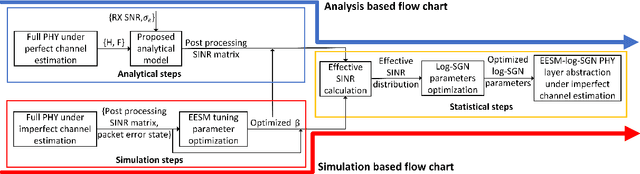

Abstract: Because network simulators suffer from the large computational complexity of the physical (PHY) layer, a PHY layer abstraction model that efficiently and accurately characterizes PHY layer performance in system-level simulations is needed. However, most existing work studies PHY layer abstraction under the assumption of perfect channel estimation; as a result, such a model may become unreliable when channel estimation error is present in a real communication system. This work improves an efficient PHY layer abstraction method, EESM-log-SGN, by accounting for channel estimation error. We develop two methods for implementing the EESM-log-SGN PHY abstraction under imperfect channel estimation. We also derive the effective Signal-to-Interference-plus-Noise Ratio (SINR) for minimum mean squared error (MMSE) receivers affected by channel estimation error. Via full PHY simulations, we verify that the effective SINR is not affected by channel estimation error in multiple-input single-output (MISO) and single-input single-output (SISO) configurations. Finally, the developed methods are validated in different orthogonal frequency division multiplexing (OFDM) scenarios.
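
The EESM step of such an abstraction compresses per-subcarrier SINRs into one effective SINR using the standard Exponential Effective SINR Mapping, SINR_eff = -beta * ln((1/N) * sum_n exp(-SINR_n / beta)). The sketch below shows only that mapping; the log-SGN modeling and the channel-estimation-error handling developed in the paper are not reproduced. The beta value and SINRs are hypothetical (beta is normally calibrated per modulation and coding scheme), and SINRs must be in linear scale.

```python
import math


def eesm(sinrs_linear, beta):
    """Exponential Effective SINR Mapping over per-subcarrier SINRs (linear scale).

    SINR_eff = -beta * ln(mean_n exp(-SINR_n / beta)), computed with the minimum
    SINR factored out so that large SINRs cannot underflow exp() to zero.
    """
    if not sinrs_linear:
        raise ValueError("need at least one subcarrier SINR")
    s_min = min(sinrs_linear)
    mean_exp = sum(math.exp(-(s - s_min) / beta) for s in sinrs_linear) / len(sinrs_linear)
    return s_min - beta * math.log(mean_exp)


def db_to_linear(db):
    return 10.0 ** (db / 10.0)


def linear_to_db(x):
    return 10.0 * math.log10(x)


# Hypothetical frequency-selective channel: per-subcarrier SINRs in dB
sinrs_db = [0.0, 10.0, 20.0]
beta = 3.0  # illustrative value, not a calibrated parameter
eff_db = linear_to_db(eesm([db_to_linear(s) for s in sinrs_db], beta))
print(round(eff_db, 2))  # lies between the worst subcarrier and the mean SINR
```

Because exp is convex, the mapped value never exceeds the arithmetic mean SINR, so the weakest subcarriers dominate, which is why the mapping tracks error-rate behavior better than a plain average.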