Lukáš Adam

WildFusion: Individual Animal Identification with Calibrated Similarity Fusion

Aug 23, 2024

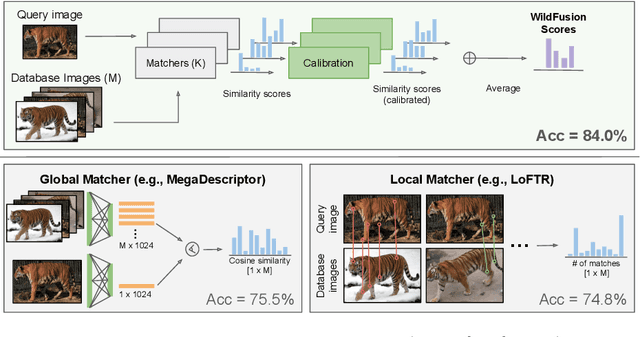

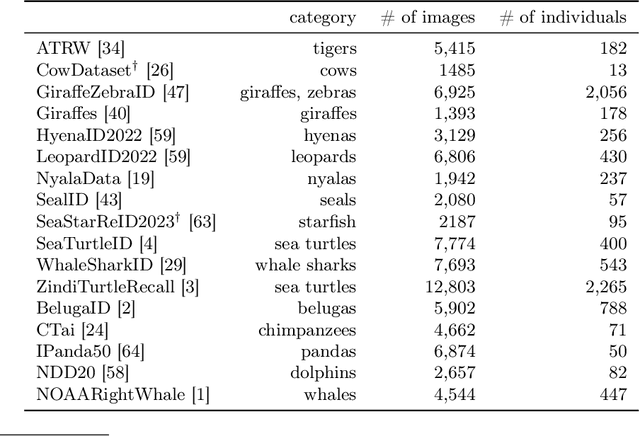

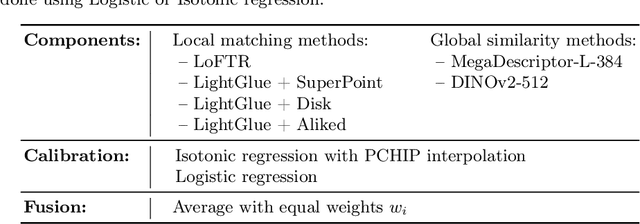

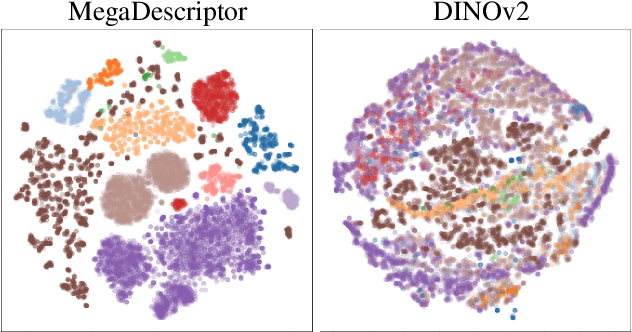

Abstract: We propose a new method - WildFusion - for individual identification of a broad range of animal species. The method fuses deep scores (e.g., MegaDescriptor or DINOv2) and local matching similarity (e.g., LoFTR and LightGlue) to identify individual animals. The global and local information fusion is facilitated by similarity score calibration. In a zero-shot setting, relying on local similarity score only, WildFusion achieved a mean accuracy of 76.2%, measured on 17 datasets. This is better than the state-of-the-art model, MegaDescriptor-L, whose training set included 15 of the 17 datasets. If a dataset-specific calibration is applied, mean accuracy increases by 2.3 percentage points. WildFusion, with both local and global similarity scores, significantly outperforms the state of the art: mean accuracy reached 84.0%, an increase of 8.5 percentage points, and the mean relative error drops by 35%. We make the code and pre-trained models publicly available, enabling immediate use in ecology and conservation.
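The idea of calibrating two similarity scores before fusing them can be illustrated with a minimal sketch. It is not the WildFusion implementation: the logistic (Platt-style) calibration, the averaging rule, the function name `fit_logistic_calibration`, and all toy numbers are assumptions chosen for illustration. The point is only that raw scores on different scales (a cosine similarity and a count of local matches) become comparable once each is mapped to a match probability.

```python
import numpy as np

def fit_logistic_calibration(scores, is_match, lr=0.1, steps=2000):
    """Fit p = sigmoid(a * z + b) on standardized scores z by gradient descent.

    Hypothetical helper: a Platt-style calibration, assumed here for illustration.
    """
    s = np.asarray(scores, dtype=float)
    y = np.asarray(is_match, dtype=float)
    mu, sd = s.mean(), s.std()
    z = (s - mu) / sd
    a, b = 0.0, 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(a * z + b)))
        a -= lr * np.mean((p - y) * z)  # gradient of the mean log loss w.r.t. a
        b -= lr * np.mean(p - y)        # gradient of the mean log loss w.r.t. b

    def calibrate(raw):
        zr = (np.asarray(raw, dtype=float) - mu) / sd
        return 1.0 / (1.0 + np.exp(-(a * zr + b)))

    return calibrate

# Toy calibration pairs (made up): raw score and whether the pair shows the same animal.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
calibrate_global = fit_logistic_calibration(
    [0.90, 0.85, 0.70, 0.60, 0.75, 0.40, 0.30, 0.50], labels)  # cosine similarities
calibrate_local = fit_logistic_calibration(
    [120, 80, 60, 10, 30, 5, 8, 70], labels)                   # local match counts

# One query compared against three database images.
p_global = calibrate_global([0.80, 0.78, 0.30])
p_local = calibrate_local([12, 95, 5])

# Assumed fusion rule: average the two calibrated probabilities.
fused = 0.5 * (p_global + p_local)
best = int(np.argmax(fused))
```

In this toy case the global score alone narrowly prefers the first database image, while the fused score prefers the second, because the local evidence for it is much stronger once both scores are on a common probability scale.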

WildlifeReID-10k: Wildlife re-identification dataset with 10k individual animals

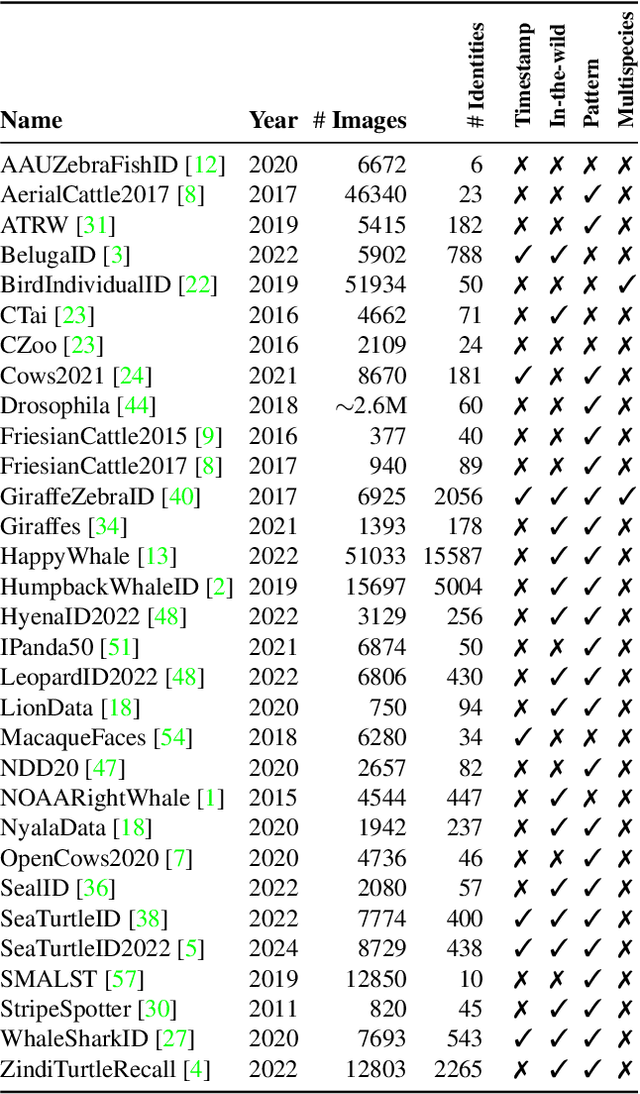

Jun 17, 2024

Abstract: We introduce WildlifeReID-10k, a new wildlife re-identification dataset with more than 214k images of 10k individual animals. It is a collection of 30 existing wildlife re-identification datasets with additional processing steps. WildlifeReID-10k contains animals as diverse as marine turtles, primates, birds, African herbivores, marine mammals and domestic animals. Due to the ubiquity of similar images in datasets, we argue that the standard (random) splits into training and testing sets are inadequate for wildlife re-identification and propose a new similarity-aware split based on the similarity of extracted features. To promote fair method comparison, we include similarity-aware splits for both closed-set and open-set settings, use MegaDescriptor - a foundational model for wildlife re-identification - for baseline performance, and host a leaderboard with the best results. We publicly release the dataset and the code used to create it in the wildlife-datasets library, making WildlifeReID-10k both highly curated and easy to use.
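The abstract does not spell out how the similarity-aware split is built, so the following is only one possible reading, sketched under stated assumptions: images whose feature vectors have cosine similarity above a threshold are grouped, and each group is assigned as a whole to either the training or the testing set. The function name `similarity_aware_split`, the threshold, the greedy assignment, and the toy features are all hypothetical and are not taken from the wildlife-datasets library.

```python
import numpy as np

def similarity_aware_split(features, threshold=0.9, test_fraction=0.35):
    """Keep near-duplicate images on the same side of a train/test split.

    Hypothetical sketch: groups are connected components of the graph that
    links two images when their cosine similarity is at least `threshold`.
    """
    x = np.asarray(features, dtype=float)
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    sim = x @ x.T
    n = len(x)

    parent = list(range(n))  # union-find forest over image indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if sim[i, j] >= threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)

    # Greedily move whole groups (largest first) into the test set
    # as long as the target test size is not exceeded.
    target = round(test_fraction * n)
    test = []
    for members in sorted(groups.values(), key=len, reverse=True):
        if len(test) + len(members) <= target:
            test.extend(members)
    test_set = set(test)
    train = [i for i in range(n) if i not in test_set]
    return train, sorted(test)

# Toy 2-D "features": images 0/1 and 2/3 are near-duplicates of each other.
features = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0], [0.1, 0.99], [-1.0, 0.0], [0.7, 0.7]]
train_idx, test_idx = similarity_aware_split(features)
```

With a random split, images 0 and 1 could land on opposite sides and make the test set artificially easy; here they always stay together.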

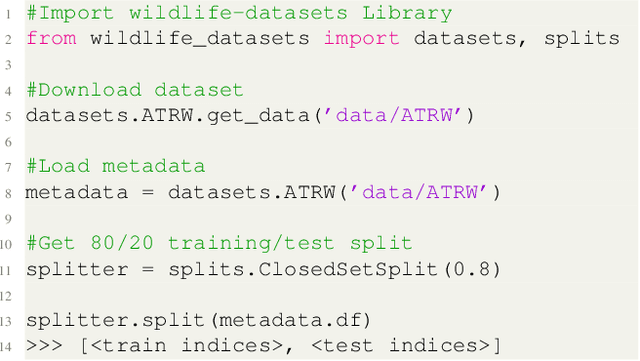

WildlifeDatasets: An open-source toolkit for animal re-identification

Nov 15, 2023

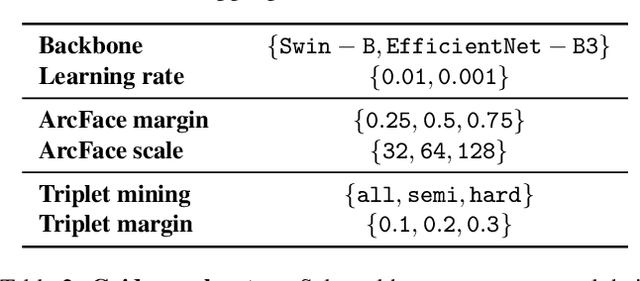

Abstract: In this paper, we present WildlifeDatasets (https://github.com/WildlifeDatasets/wildlife-datasets) - an open-source toolkit intended primarily for ecologists and computer-vision / machine-learning researchers. WildlifeDatasets is written in Python, allows straightforward access to publicly available wildlife datasets, and provides a wide variety of methods for dataset pre-processing, performance analysis, and model fine-tuning. We showcase the toolkit in various scenarios and baseline experiments, including, to the best of our knowledge, the most comprehensive experimental comparison of datasets and methods for wildlife re-identification, covering both local descriptors and deep learning approaches. Furthermore, we provide the first-ever foundation model for individual re-identification within a wide range of species - MegaDescriptor - that provides state-of-the-art performance on animal re-identification datasets and outperforms other pre-trained models such as CLIP and DINOv2 by a significant margin. To make the model available to the general public and to allow easy integration with any existing wildlife monitoring applications, we provide multiple MegaDescriptor flavors (i.e., Small, Medium, and Large) through the HuggingFace hub (https://huggingface.co/BVRA).

SeaTurtleID2022: A long-span dataset for reliable sea turtle re-identification

Nov 09, 2023

Abstract: This paper introduces the first public large-scale, long-span dataset with sea turtle photographs captured in the wild -- SeaTurtleID2022 (https://www.kaggle.com/datasets/wildlifedatasets/seaturtleid2022). The dataset contains 8729 photographs of 438 unique individuals collected over 13 years, making it the longest-spanning dataset for animal re-identification. All photographs include various annotations, e.g., identity, encounter timestamp, and body parts segmentation masks. Instead of standard "random" splits, the dataset allows for two realistic and ecologically motivated splits: (i) a time-aware closed-set with training, validation, and test data from different days/years, and (ii) a time-aware open-set with new unknown individuals in test and validation sets. We show that time-aware splits are essential for benchmarking re-identification methods, as random splits lead to performance overestimation. Furthermore, we provide baseline instance segmentation and re-identification performance over various body parts. Finally, an end-to-end system for sea turtle re-identification is proposed and evaluated. The proposed system, based on Hybrid Task Cascade for head instance segmentation and an ArcFace-trained feature extractor, achieved an accuracy of 86.8%.

SeaTurtleID: A novel long-span dataset highlighting the importance of timestamps in wildlife re-identification

Nov 18, 2022

Abstract: This paper introduces SeaTurtleID, the first public large-scale, long-span dataset with sea turtle photographs captured in the wild. The dataset is suitable for benchmarking re-identification methods and evaluating several other computer vision tasks. The dataset consists of 7774 high-resolution photographs of 400 unique individuals collected over 12 years in 1081 encounters. Each photograph is accompanied by rich metadata, e.g., identity label, head segmentation mask, and encounter timestamp. The 12-year span of the dataset makes it the longest-spanning public wild animal dataset with timestamps. By exploiting this unique property, we show that timestamps are necessary for an unbiased evaluation of animal re-identification methods because they allow time-aware splits of the dataset into reference and query sets. We show that time-unaware splits can lead to performance overestimation of more than 100% compared to the time-aware splits for both feature- and CNN-based re-identification methods. We also argue that time-aware splits correspond to more realistic re-identification pipelines than the time-unaware ones. We recommend that animal re-identification methods be tested only on datasets with timestamps using time-aware splits, and we encourage dataset curators to include such information in the associated metadata.
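A time-aware split into reference and query sets can be sketched in a few lines. This is an illustrative sketch only: the record layout `(image_id, identity, date)`, the single cutoff date, the function name `time_aware_split`, and the toy records are assumptions, not the procedure shipped with the dataset. Photographs taken before the cutoff form the reference set, and later photographs form the query set, optionally restricted to individuals already seen (closed-set).

```python
from datetime import date

def time_aware_split(records, cutoff):
    """Split (image_id, identity, date) records by encounter date.

    Hypothetical sketch: everything before `cutoff` is reference data,
    everything on or after it is query data.
    """
    reference = [r for r in records if r[2] < cutoff]
    later = [r for r in records if r[2] >= cutoff]
    known = {identity for _, identity, _ in reference}
    # Closed-set: only individuals that already appear in the reference set.
    query_closed = [r for r in later if r[1] in known]
    # Open-set: all later photographs, including previously unseen individuals.
    query_open = later
    return reference, query_closed, query_open

# Toy records with made-up identities and dates.
records = [
    ("img1", "turtle_A", date(2012, 6, 1)),
    ("img2", "turtle_A", date(2012, 6, 1)),
    ("img3", "turtle_B", date(2014, 7, 9)),
    ("img4", "turtle_A", date(2019, 8, 3)),
    ("img5", "turtle_C", date(2020, 5, 20)),
]
reference, query_closed, query_open = time_aware_split(records, date(2018, 1, 1))
```

Note that `img1` and `img2` come from the same day and would likely be near-identical; a random split could place one in the reference set and the other in the query set, which is the kind of leakage the time-aware split avoids.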

Adversarial examples by perturbing high-level features in intermediate decoder layers

Oct 14, 2021

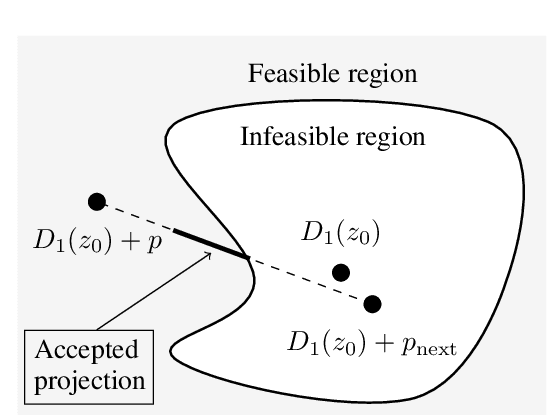

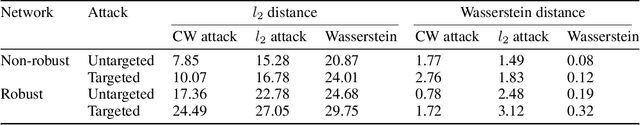

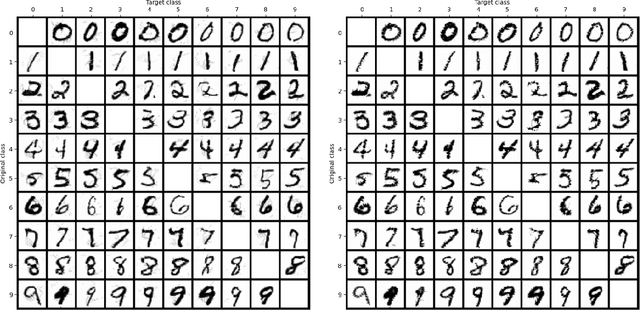

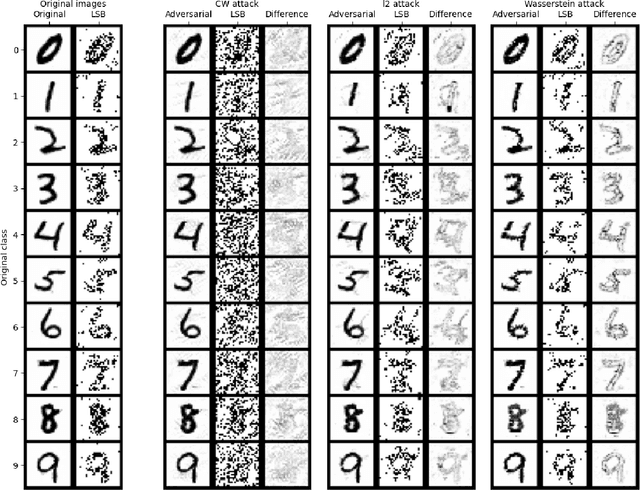

Abstract: We propose a novel method for creating adversarial examples. Instead of perturbing pixels, we use an encoder-decoder representation of the input image and perturb intermediate layers in the decoder. This changes the high-level features provided by the generative model. Therefore, our perturbation possesses semantic meaning, such as a longer beak or green tints. We formulate this task as an optimization problem by minimizing the Wasserstein distance between the adversarial and initial images under a misclassification constraint. We employ the projected gradient method with a simple inexact projection. Due to the projection, all iterations are feasible, and our method always generates adversarial images. We perform numerical experiments on the MNIST and ImageNet datasets in both targeted and untargeted settings. We demonstrate that our adversarial images are much less vulnerable to steganographic defence techniques than pixel-based attacks. Moreover, we show that our method modifies key features such as edges and that defence techniques based on adversarial training are vulnerable to our attacks.

DeepTopPush: Simple and Scalable Method for Accuracy at the Top

Jun 22, 2020

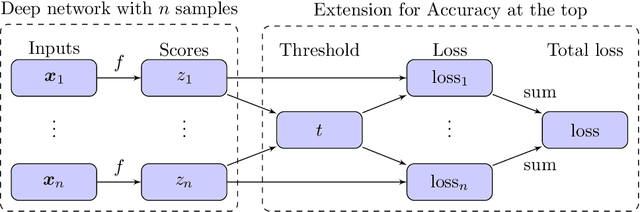

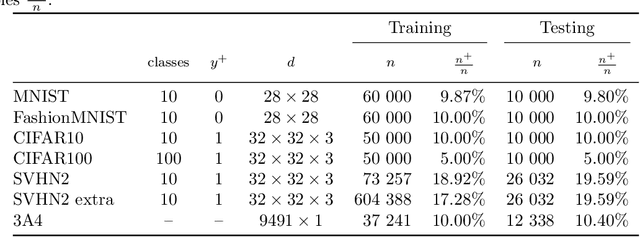

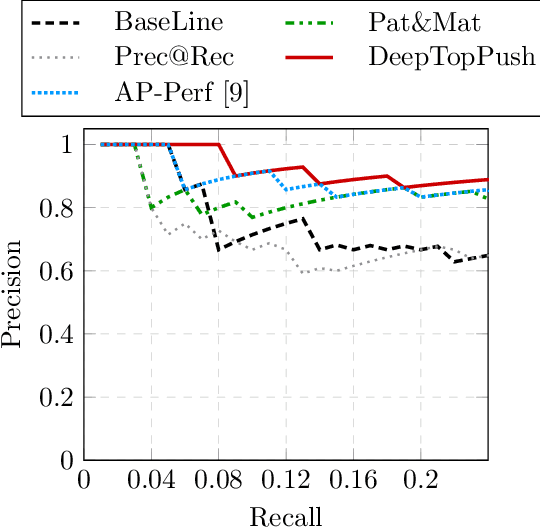

Abstract: Accuracy at the top is a special class of binary classification problems where the performance is evaluated only on a small number of relevant (top) samples. Applications include information retrieval systems or processes with manual (expensive) postprocessing. This leads to the minimization of irrelevant samples above a threshold. We consider classifiers in the form of an arbitrary (deep) network and propose a new method, DeepTopPush, for minimizing the top loss function. Since the threshold depends on all samples, the problem is non-decomposable. We modify stochastic gradient descent to handle the non-decomposability in an end-to-end training manner and propose a way to estimate the threshold only from values on the current minibatch. We demonstrate the good performance of DeepTopPush on visual recognition datasets and on a real-world application of selecting a small number of molecules for further drug testing.
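Estimating the threshold from the current minibatch can be illustrated with a small sketch. It assumes a TopPush-style formulation, which the abstract does not state explicitly: the threshold is the largest classifier score among the negative samples in the minibatch, and positives scoring below it (plus a margin) are penalized with a hinge surrogate. The function name `top_push_minibatch_loss`, the margin of 1, and the toy scores are assumptions. Only the loss value is computed here, not the modified stochastic gradient step.

```python
import numpy as np

def top_push_minibatch_loss(scores, labels):
    """TopPush-style loss with the threshold taken from the minibatch itself.

    Hypothetical sketch: assumes the minibatch contains at least one negative
    and one positive sample.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    negatives = scores[labels == 0]
    positives = scores[labels == 1]
    threshold = negatives.max()  # minibatch estimate of the global threshold
    # Hinge surrogate: positives should exceed the threshold by a margin of 1.
    loss = np.mean(np.maximum(0.0, 1.0 + threshold - positives))
    return float(loss), float(threshold)

# Toy minibatch: classifier scores and binary labels (1 = positive).
loss, threshold = top_push_minibatch_loss([2.0, 0.5, 1.0, -1.0], [1, 1, 0, 0])
```

On the full dataset the threshold would depend on every negative sample, which is what makes the problem non-decomposable; the minibatch maximum is a cheap estimate that can be recomputed at each step.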

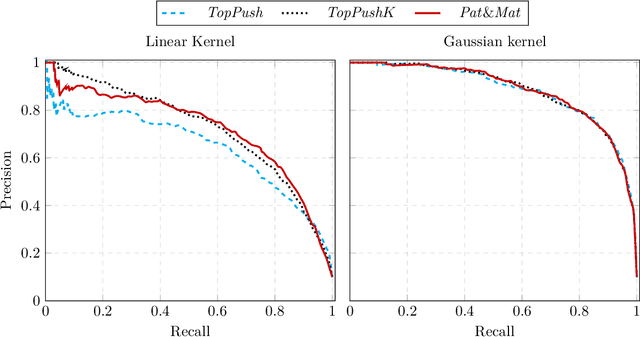

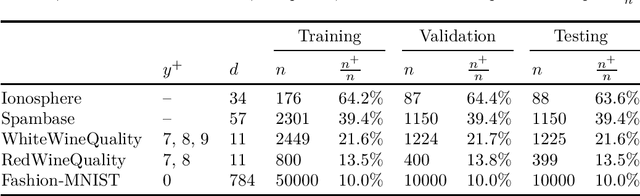

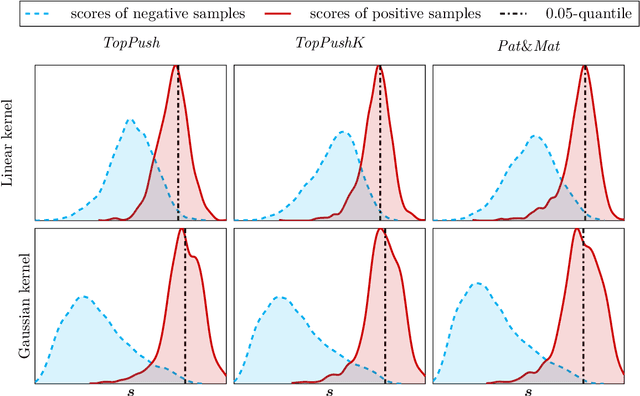

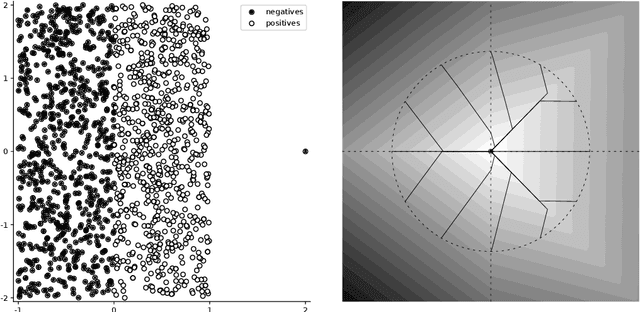

Nonlinear classifiers for ranking problems based on kernelized SVM

Feb 26, 2020

Abstract: Many classification problems focus on maximizing the performance only on the samples with the highest relevance instead of all samples. Examples include ranking problems, accuracy at the top, and search engines where only the top few queries matter. In our previous work, we derived a general framework including several classes of these linear classification problems. In this paper, we extend the framework to nonlinear classifiers. Utilizing a similarity to SVM, we dualize the problems, add kernels and propose a componentwise dual ascent method. This allows us to perform one iteration in less than 20 milliseconds on relatively large datasets such as FashionMNIST.

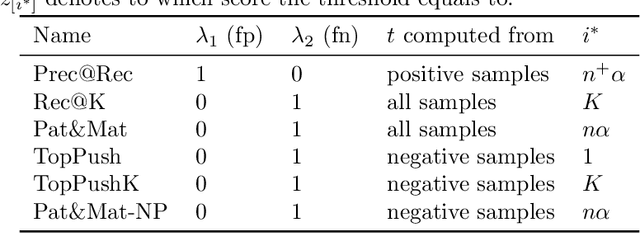

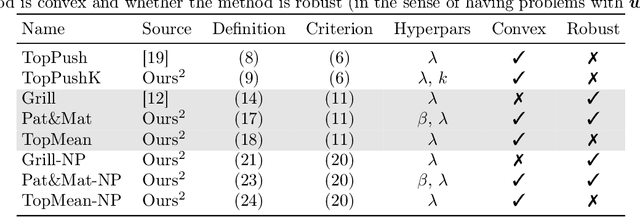

General Framework for Binary Classification on Top Samples

Feb 25, 2020

Abstract: Many binary classification problems minimize misclassification above (or below) a threshold. We show that instances of ranking problems, accuracy at the top or hypothesis testing may be written in this form. We propose a general framework to handle these classes of problems and show which methods (both known and newly proposed) fall into this framework. We provide a theoretical analysis of this framework and mention selected possible pitfalls the methods may encounter. We suggest several numerical improvements including the implicit derivative and stochastic gradient descent. We provide an extensive numerical study. Based both on the theoretical properties and numerical experiments, we conclude the paper by suggesting which method should be used in which situation.

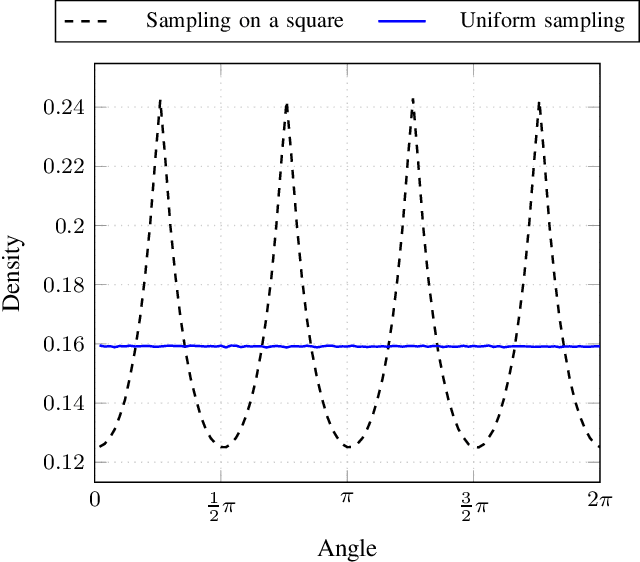

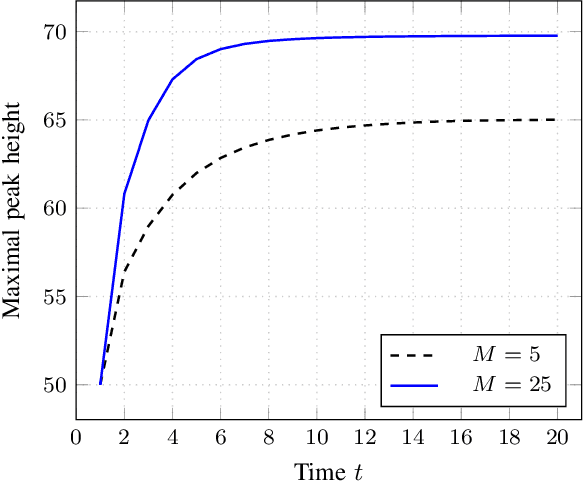

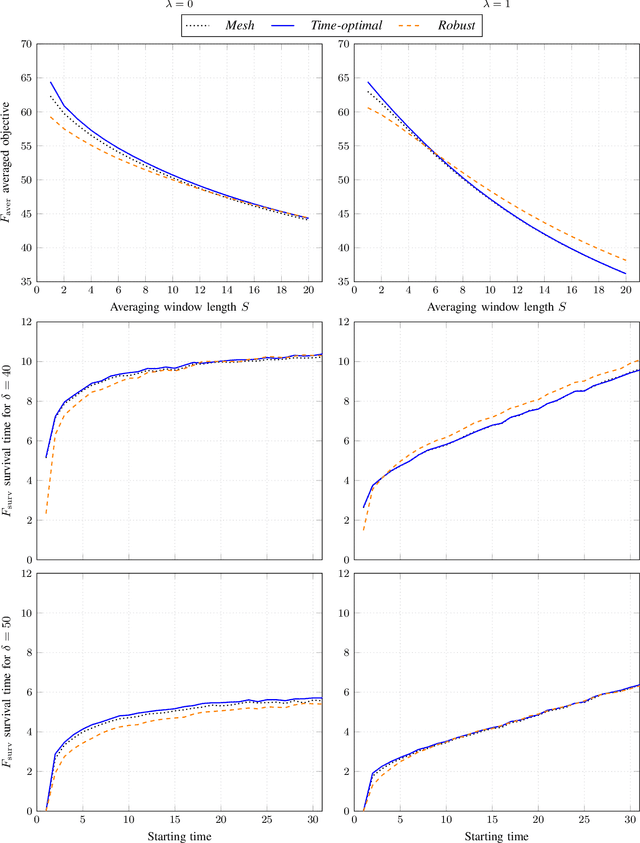

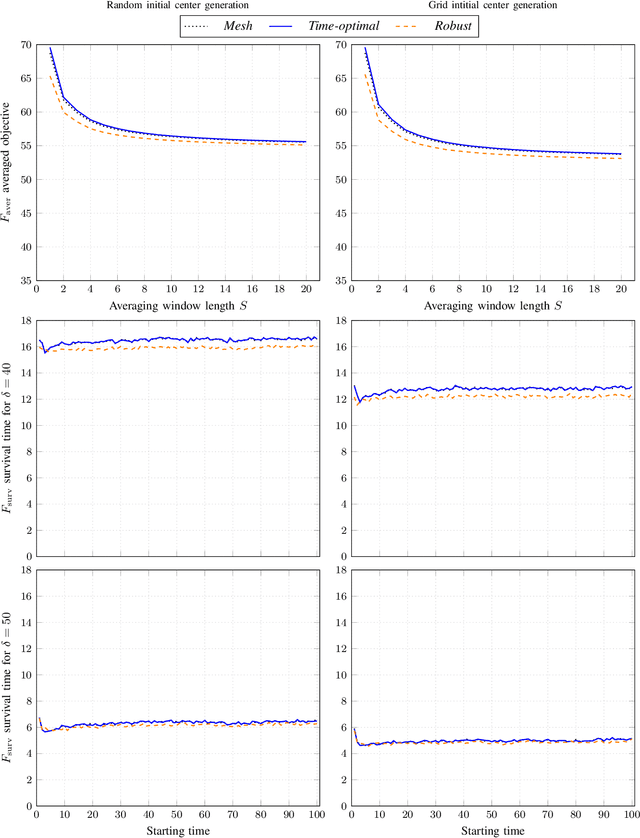

A Simple Yet Effective Approach to Robust Optimization Over Time

Sep 05, 2019

Abstract: Robust optimization over time (ROOT) refers to an optimization problem whose performance is evaluated over a future time period. Most of the existing algorithms use particle swarm optimization combined with another method which predicts future solutions to the optimization problem. We argue that this approach may underperform and suggest instead a method based on a random sampling of the search space. We prove its theoretical guarantees and show that it significantly outperforms the state-of-the-art methods for ROOT.
