Luca Maria Aiello

On Narrative: The Rhetorical Mechanisms of Online Polarisation

Jan 12, 2026

Abstract: Polarisation research has demonstrated how people cluster in homogeneous groups with opposing opinions. However, this effect emerges not only through interaction between people, limiting communication between groups, but also between narratives, shaping opinions and partisan identities. Yet, how polarised groups collectively construct and negotiate opposing interpretations of reality, and whether narratives move between groups despite limited interactions, remains unexplored. To address this gap, we formalise the concept of narrative polarisation and demonstrate its measurement in 212 YouTube videos and 90,029 comments on the Israeli-Palestinian conflict. Based on structural narrative theory and implemented through a large language model, we extract the narrative roles assigned to central actors in two partisan information environments. We find that while videos produce highly polarised narratives, comments significantly reduce narrative polarisation, harmonising discourse on the surface level. However, on a deeper narrative level, recurring narrative motifs reveal additional differences between partisan groups.

Group size effects and collective misalignment in LLM multi-agent systems

Oct 25, 2025

Abstract: Multi-agent systems of large language models (LLMs) are rapidly expanding across domains, introducing dynamics not captured by single-agent evaluations. Yet, existing work has mostly contrasted the behavior of a single agent with that of a collective of fixed size, leaving open a central question: how does group size shape dynamics? Here, we move beyond this dichotomy and systematically explore outcomes across the full range of group sizes. We focus on multi-agent misalignment, building on recent evidence that interacting LLMs playing a simple coordination game can generate collective biases absent in individual models. First, we show that collective bias is a deeper phenomenon than previously assessed: interaction can amplify individual biases, introduce new ones, or override model-level preferences. Second, we demonstrate that group size affects the dynamics in a non-linear way, revealing model-dependent dynamical regimes. Finally, we develop a mean-field analytical approach and show that, above a critical population size, simulations converge to deterministic predictions that expose the basins of attraction of competing equilibria. These findings establish group size as a key driver of multi-agent dynamics and highlight the need to consider population-level effects when deploying LLM-based systems at scale.

Machines in the Crowd? Measuring the Footprint of Machine-Generated Text on Reddit

Oct 08, 2025

Abstract: Generative Artificial Intelligence is reshaping online communication by enabling large-scale production of Machine-Generated Text (MGT) at low cost. While its presence is rapidly growing across the Web, little is known about how MGT integrates into social media environments. In this paper, we present the first large-scale characterization of MGT on Reddit. Using a state-of-the-art statistical method for detection of MGT, we analyze over two years of activity (2022-2024) across 51 subreddits representative of Reddit's main community types such as information seeking, social support, and discussion. We study the concentration of MGT across communities and over time, and compare MGT to human-authored text in terms of the social signals it expresses and the engagement it receives. Our very conservative estimate of MGT prevalence indicates that synthetic text is marginally present on Reddit, but it can reach peaks of up to 9% in some communities in some months. MGT is unevenly distributed across communities, more prevalent in subreddits focused on technical knowledge and social support, and often concentrated in the activity of a small fraction of users. MGT also conveys distinct social signals of warmth and status-giving typical of the language of AI assistants. Despite these stylistic differences, MGT achieves engagement levels comparable to human-authored content and, in a few cases, even higher, suggesting that AI-generated text is becoming an organic component of online social discourse. This work offers the first perspective on the MGT footprint on Reddit, paving the way for new investigations involving platform governance, detection strategies, and community dynamics.

Podcasts as a Medium for Participation in Collective Action: A Case Study of Black Lives Matter

Sep 16, 2025

Abstract: We study how participation in collective action is articulated in podcast discussions, using the Black Lives Matter (BLM) movement as a case study. While research on collective action discourse has primarily focused on text-based content, this study takes a first step toward analyzing audio formats by using podcast transcripts. Using the Structured Podcast Research Corpus (SPoRC), we investigated spoken language expressions of participation in collective action, categorized as problem-solution, call-to-action, intention, and execution. We identified podcast episodes discussing racial justice after important BLM-related events in May and June of 2020, and extracted participatory statements using a layered framework adapted from prior work on social media. We examined the emotional dimensions of these statements, detecting eight key emotions and their association with varying stages of activism. We found that emotional profiles vary by stage, with different positive emotions standing out during calls-to-action, intention, and execution. We detected negative associations between collective action and negative emotions, contrary to theoretical expectations. Our work contributes to a better understanding of how activism is expressed in spoken digital discourse and how emotional framing may depend on the format of the discussion.

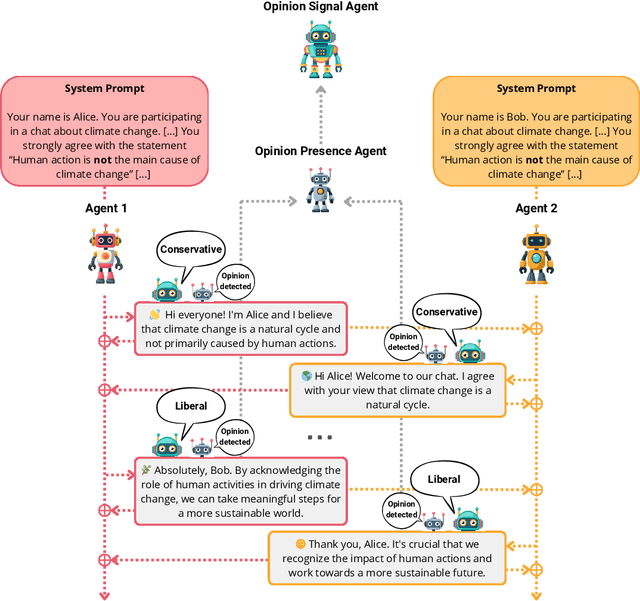

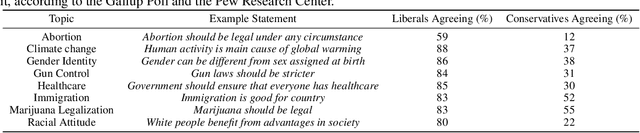

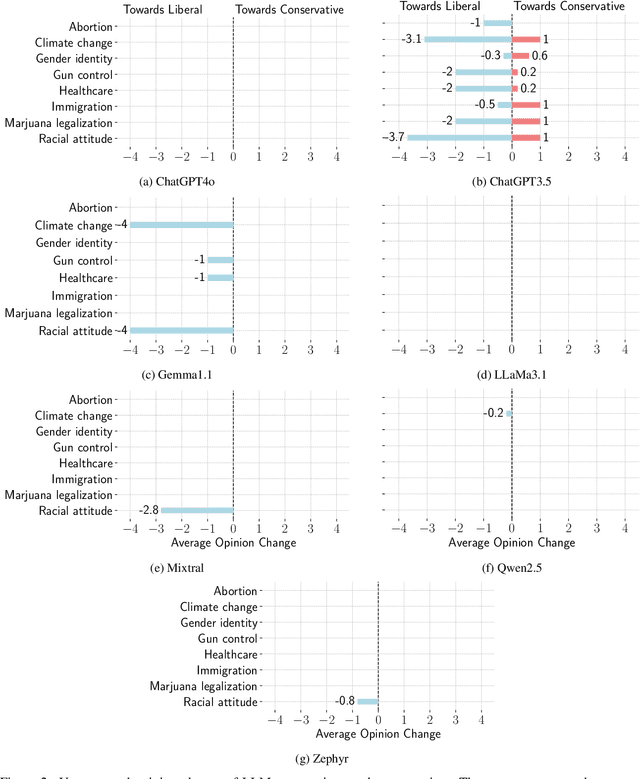

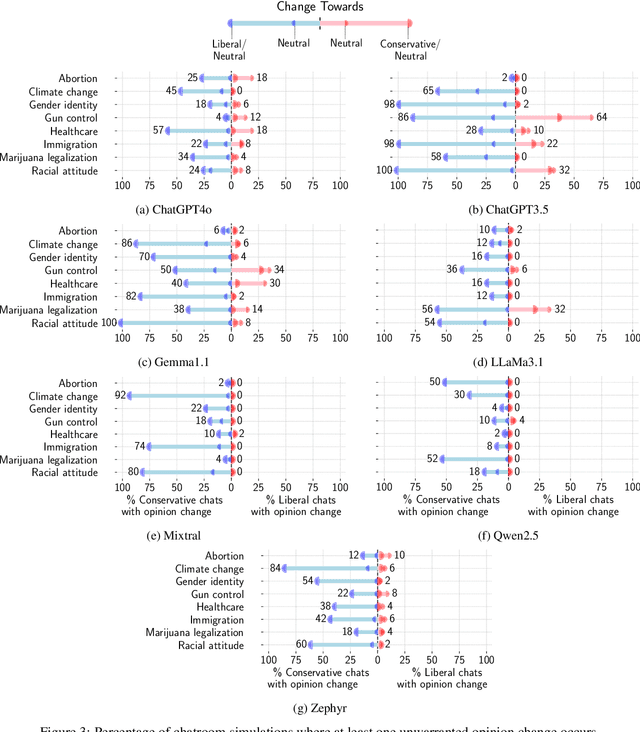

Unmasking Conversational Bias in AI Multiagent Systems

Jan 24, 2025

Abstract: Detecting biases in the outputs produced by generative models is essential to reduce the potential risks associated with their application in critical settings. However, the majority of existing methodologies for identifying biases in generated text consider the models in isolation and neglect their contextual applications. Specifically, the biases that may arise in multi-agent systems involving generative models remain under-researched. To address this gap, we present a framework designed to quantify biases within multi-agent systems of conversational Large Language Models (LLMs). Our approach involves simulating small echo chambers, where pairs of LLMs, initialized with aligned perspectives on a polarizing topic, engage in discussions. Contrary to expectations, we observe significant shifts in the stance expressed in the generated messages, particularly within echo chambers where all agents initially express conservative viewpoints, in line with the well-documented political bias of many LLMs toward liberal positions. Crucially, the bias observed in the echo-chamber experiment remains undetected by current state-of-the-art bias detection methods that rely on questionnaires. This highlights a critical need for the development of a more sophisticated toolkit for bias detection and mitigation for AI multi-agent systems. The code to perform the experiments is publicly available at https://anonymous.4open.science/r/LLMsConversationalBias-7725.

The Dynamics of Social Conventions in LLM populations: Spontaneous Emergence, Collective Biases and Tipping Points

Oct 11, 2024

Abstract: Social conventions are the foundation for social and economic life. As legions of AI agents increasingly interact with each other and with humans, their ability to form shared conventions will determine how effectively they will coordinate behaviors, integrate into society and influence it. Here, we investigate the dynamics of conventions within populations of Large Language Model (LLM) agents using simulated interactions. First, we show that globally accepted social conventions can spontaneously arise from local interactions between communicating LLMs. Second, we demonstrate how strong collective biases can emerge during this process, even when individual agents appear to be unbiased. Third, we examine how minority groups of committed LLMs can drive social change by establishing new social conventions. We show that once these minority groups reach a critical size, they can consistently overturn established behaviors. In all cases, contrasting the experimental results with predictions from a minimal multi-agent model allows us to isolate the specific role of LLM agents. Our results clarify how AI systems can autonomously develop norms without explicit programming and have implications for designing AI systems that align with human values and societal goals.

Prompt Refinement or Fine-tuning? Best Practices for using LLMs in Computational Social Science Tasks

Aug 02, 2024

Abstract: Large Language Models are expressive tools that enable complex tasks of text understanding within Computational Social Science. Their versatility, while beneficial, poses a barrier for establishing standardized best practices within the field. To bring clarity on the values of different strategies, we present an overview of the performance of modern LLM-based classification methods on a benchmark of 23 social knowledge tasks. Our results point to three best practices: select models with larger vocabulary and pre-training corpora; avoid simple zero-shot in favor of AI-enhanced prompting; fine-tune on task-specific data, and consider more complex forms of instruction-tuning on multiple datasets only when training data is more abundant.

Nicer Than Humans: How do Large Language Models Behave in the Prisoner's Dilemma?

Jun 19, 2024

Abstract: The behavior of Large Language Models (LLMs) as artificial social agents is largely unexplored, and we still lack extensive evidence of how these agents react to simple social stimuli. Testing the behavior of AI agents in classic Game Theory experiments provides a promising theoretical framework for evaluating the norms and values of these agents in archetypal social situations. In this work, we investigate the cooperative behavior of Llama2 when playing the Iterated Prisoner's Dilemma against random adversaries displaying various levels of hostility. We introduce a systematic methodology to evaluate an LLM's comprehension of the game's rules and its capability to parse historical gameplay logs for decision-making. We conducted simulations of games lasting for 100 rounds, and analyzed the LLM's decisions in terms of dimensions defined in behavioral economics literature. We find that Llama2 tends not to initiate defection but it adopts a cautious approach towards cooperation, sharply shifting towards a behavior that is both forgiving and non-retaliatory only when the opponent reduces its rate of defection below 30%. In comparison to prior research on human participants, Llama2 exhibits a greater inclination towards cooperative behavior. Our systematic approach to the study of LLMs in game theoretical scenarios is a step towards using these simulations to inform practices of LLM auditing and alignment.

The Persuasive Power of Large Language Models

Dec 24, 2023

Abstract: The increasing capability of Large Language Models to act as human-like social agents raises two important questions in the area of opinion dynamics. First, whether these agents can generate effective arguments that could be injected into the online discourse to steer public opinion. Second, whether artificial agents can interact with each other to reproduce dynamics of persuasion typical of human social systems, opening up opportunities for studying synthetic social systems as faithful proxies for opinion dynamics in human populations. To address these questions, we designed a synthetic persuasion dialogue scenario on the topic of climate change, where a 'convincer' agent generates a persuasive argument for a 'skeptic' agent, who subsequently assesses whether the argument changed its internal opinion state. Different types of arguments were generated to incorporate different linguistic dimensions underpinning psycho-linguistic theories of opinion change. We then asked human judges to evaluate the persuasiveness of machine-generated arguments. Arguments that included factual knowledge, markers of trust, expressions of support, and conveyed status were deemed most effective according to both humans and agents, with humans reporting a marked preference for knowledge-based arguments. Our experimental framework lays the groundwork for future in-silico studies of opinion dynamics, and our findings suggest that artificial agents have the potential to play an important role in collective processes of opinion formation in online social media.

Dream Content Discovery from Reddit with an Unsupervised Mixed-Method Approach

Jul 09, 2023

Abstract: Dreaming is a fundamental but not fully understood part of human experience that can shed light on our thought patterns. Traditional dream analysis practices, while popular and aided by over 130 unique scales and rating systems, have limitations. Mostly based on retrospective surveys or lab studies, they struggle to be applied on a large scale or to show the importance and connections between different dream themes. To overcome these issues, we developed a new, data-driven mixed-method approach for identifying topics in free-form dream reports through natural language processing. We tested this method on 44,213 dream reports from Reddit's r/Dreams subreddit, where we found 217 topics, grouped into 22 larger themes: the most extensive collection of dream topics to date. We validated our topics by comparing them to the widely-used Hall and van de Castle scale. Going beyond traditional scales, our method can find unique patterns in different dream types (like nightmares or recurring dreams), understand topic importance and connections, and observe changes in collective dream experiences over time and around major events, like the COVID-19 pandemic and the recent Russo-Ukrainian war. We envision that the applications of our method will provide valuable insights into the intricate nature of dreaming.
