Louis-Antoine Blais-Morin

Dynamic Template Selection Through Change Detection for Adaptive Siamese Tracking

Mar 07, 2022

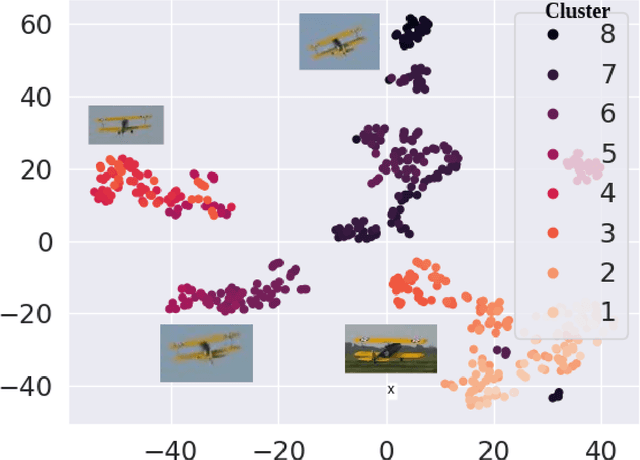

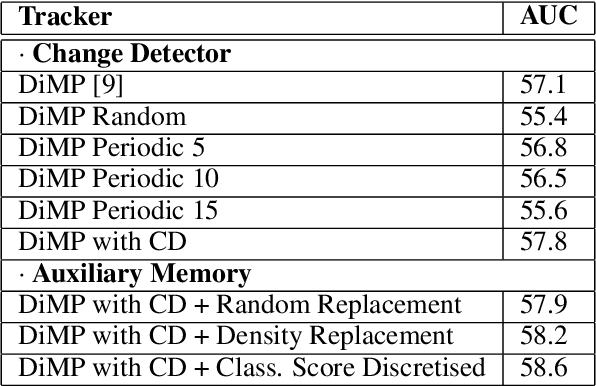

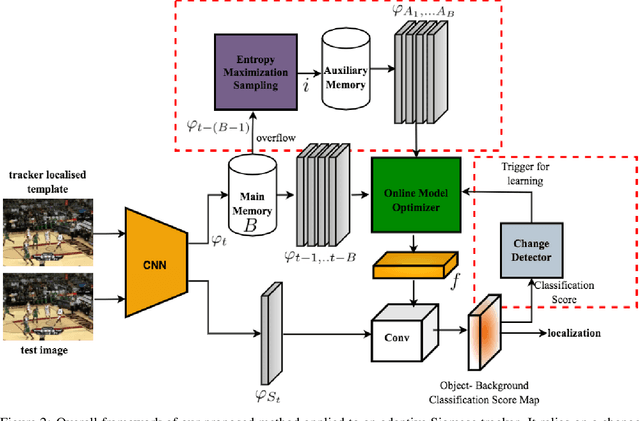

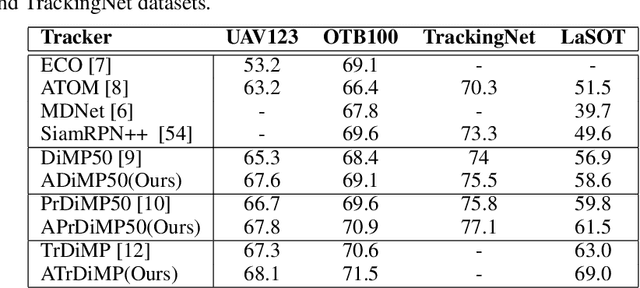

Abstract: Deep Siamese trackers have gained much attention in recent years since they can track visual objects at high speeds. Additionally, adaptive tracking methods, where target samples collected by the tracker are employed for online learning, have achieved state-of-the-art accuracy. However, single object tracking (SOT) remains a challenging task in real-world applications due to changes and deformations in a target object's appearance. Learning on all the collected samples may lead to catastrophic forgetting, and thereby corrupt the tracking model. In this paper, SOT is formulated as an online incremental learning problem. A new method is proposed for dynamic sample selection and memory replay, preventing template corruption. In particular, we propose a change detection mechanism to detect gradual changes in object appearance and select the corresponding samples for online adaptation. In addition, an entropy-based sample selection strategy is introduced to maintain a diversified auxiliary buffer for memory replay. Our proposed method can be integrated into any object tracking algorithm that leverages online learning for model adaptation. Extensive experiments conducted on the OTB-100, LaSOT, UAV123, and TrackingNet datasets highlight the cost-effectiveness of our method, along with the contribution of its key components. Results indicate that integrating our proposed method into state-of-the-art adaptive Siamese trackers can increase the potential benefits of a template update strategy, and significantly improve performance.
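As a rough illustration of what detecting a gradual change in a stream of tracker outputs can look like, the sketch below applies a one-sided CUSUM test to per-frame similarity scores. This is a generic, swapped-in detector and not necessarily the mechanism used in the paper; the function name, the `target_mean`, `slack`, and `threshold` parameters, and the use of a scalar similarity score are all assumptions made for illustration.

```python
def cusum_change_points(scores, target_mean, slack=0.05, threshold=0.5):
    """Return frame indices where a one-sided CUSUM statistic signals a drop.

    Hypothetical helper: `scores` is assumed to be a per-frame similarity
    between the current target crop and the template (higher is better).
    """
    stat = 0.0
    alarms = []
    for i, s in enumerate(scores):
        # Accumulate how far the score falls below the expected level,
        # ignoring deviations smaller than the slack.
        stat = max(0.0, stat + (target_mean - s - slack))
        if stat > threshold:
            alarms.append(i)
            stat = 0.0  # restart accumulation after signalling a change
    return alarms


# A slowly decaying similarity triggers an alarm only once the drift accumulates.
scores = [0.9] * 5 + [0.8, 0.7, 0.6, 0.5, 0.4]
alarms = cusum_change_points(scores, target_mean=0.9)
```

Because the statistic accumulates small deviations, a slow drift is flagged even when no single frame looks abnormal, which is the property a gradual-change detector needs.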

Generative Target Update for Adaptive Siamese Tracking

Feb 21, 2022

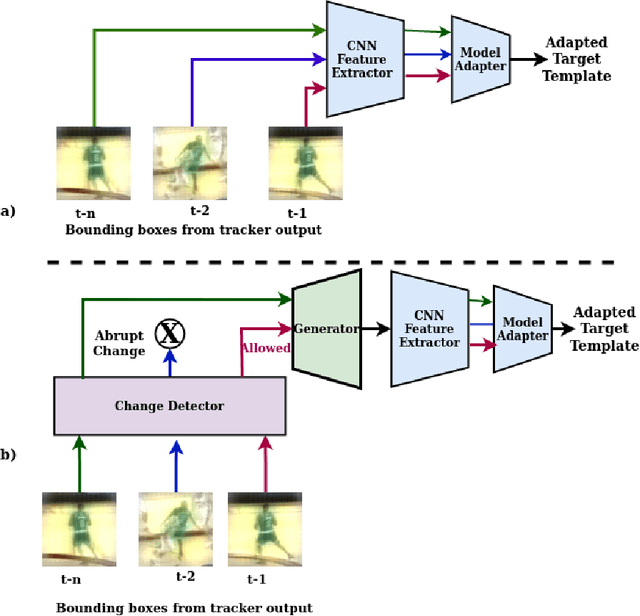

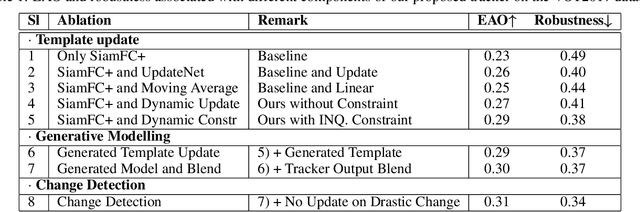

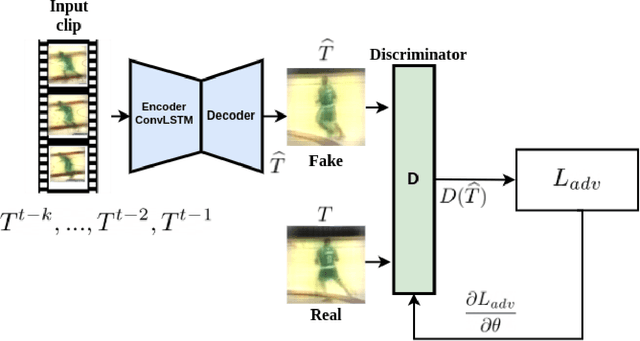

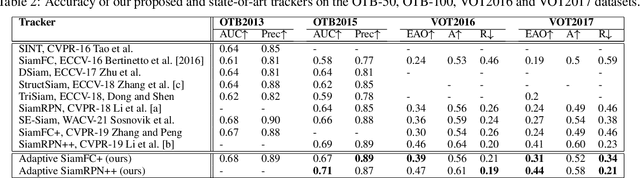

Abstract: Siamese trackers perform similarity matching with templates (i.e., target models) to recursively localize objects within a search region. Several strategies have been proposed in the literature to update a template based on the tracker output, typically extracted from the target search region in the current frame, and thereby mitigate the effects of target drift. However, this may lead to corrupted templates, limiting the potential benefits of a template update strategy. This paper proposes a model adaptation method for Siamese trackers that uses a generative model to produce a synthetic template from the object search regions of several previous frames, rather than directly using the tracker output. Since the search region encompasses the target, attention from the search region is used for robust model adaptation. In particular, our approach relies on an auto-encoder trained through adversarial learning to detect changes in a target object's appearance and predict a future target template, using a set of target templates localized from tracker outputs at previous frames. To prevent template corruption during the update, the proposed tracker also performs change detection using the generative model to suspend updates until the tracker stabilizes, and robust matching can resume through dynamic template fusion. Extensive experiments conducted on the VOT-16, VOT-17, OTB-50, and OTB-100 datasets highlight the effectiveness of our method, along with the impact of its key components. Results indicate that our proposed approach can outperform state-of-the-art trackers, and its overall robustness allows tracking for a longer time before failure.

Incremental Multi-Target Domain Adaptation for Object Detection with Efficient Domain Transfer

Apr 19, 2021

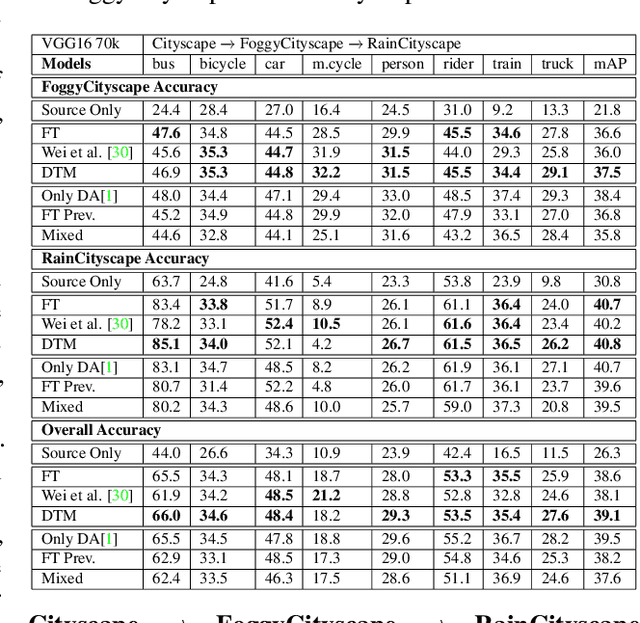

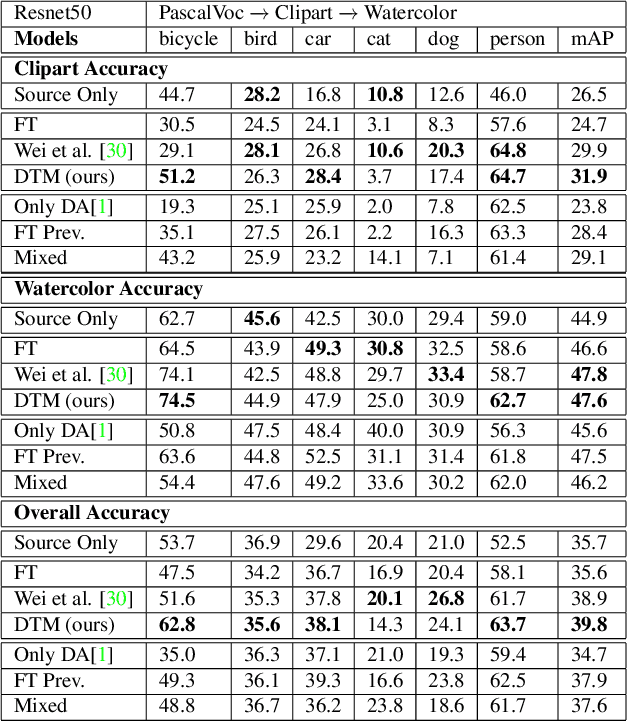

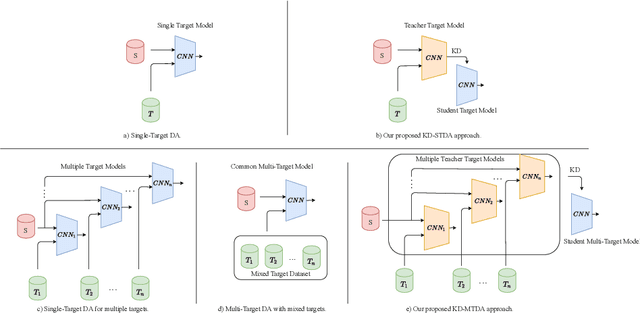

Abstract: Techniques for multi-target domain adaptation (MTDA) seek to adapt a recognition model such that it can generalize well across multiple target domains. While several successful techniques have been proposed for unsupervised single-target domain adaptation (STDA) in object detection, adapting a model to multiple target domains using unlabeled image data remains a challenging and largely unexplored problem. Key challenges include the lack of bounding box annotations for target data, knowledge corruption, and the growing resource requirements needed to train accurate deep detection models. The latter requirements are compounded by the need to retrain a model with previously learned target data when adapting to each new target domain. Currently, the only MTDA technique in the literature for object detection relies on distillation with a duplicated model to avoid knowledge corruption but does not leverage the source-target feature alignment after UDA. To address these challenges, we propose a new Incremental MTDA technique for object detection that can adapt a detector to multiple target domains, one at a time, without having to retain data of previously learned target domains. Instead of distillation, our technique efficiently transfers source images to a joint target domains' space, on the fly, thereby preserving knowledge during incremental MTDA. Using adversarial training, our Domain Transfer Module (DTM) is optimized to trick the domain classifiers into classifying source images as though transferred into the target domain, thus allowing the DTM to generate samples close to a joint distribution of target domains. Our proposed technique is validated on different MTDA detection benchmarks, and results show that it improves accuracy across multiple domains, despite the considerable reduction in complexity.

Holistic Guidance for Occluded Person Re-Identification

Apr 13, 2021

Abstract: In real-world video surveillance applications, person re-identification (ReID) suffers from the effects of occlusions and detection errors. Despite recent advances, occlusions continue to corrupt the features extracted by state-of-the-art CNN backbones, and thereby deteriorate the accuracy of ReID systems. To address this issue, methods in the literature use an additional costly process such as pose estimation, where pose maps provide supervision to exclude occluded regions. In contrast, we introduce a novel Holistic Guidance (HG) method that relies only on person identity labels, and on the distribution of pairwise matching distances of datasets to alleviate the problem of occlusion, without requiring additional supervision. Hence, our proposed student-teacher framework is trained to address the occlusion problem by matching the distributions of between- and within-class distances (DCDs) of occluded samples with those of holistic (non-occluded) samples, thereby using the latter as a soft-labeled reference to learn well-separated DCDs. This approach is supported by our empirical study, where the distributions of between- and within-class distances between images have more overlap in occluded than in holistic datasets. In particular, features extracted from both datasets are jointly learned using the student model to produce an attention map that allows separating visible regions from occluded ones. In addition, a joint generative-discriminative backbone is trained with a denoising autoencoder, allowing the system to self-recover from occlusions. Extensive experiments on several challenging public datasets indicate that the proposed approach can outperform state-of-the-art methods on both occluded and holistic datasets.

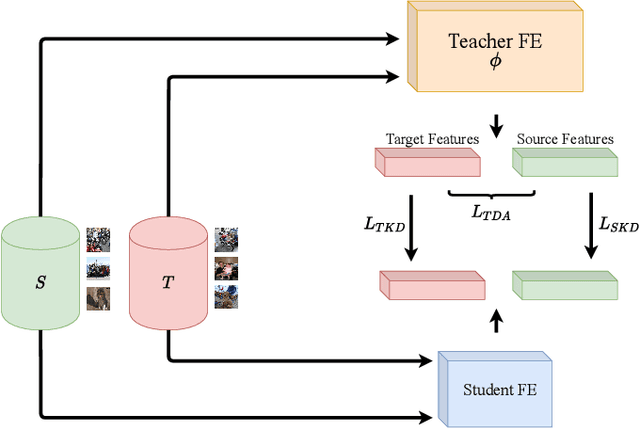

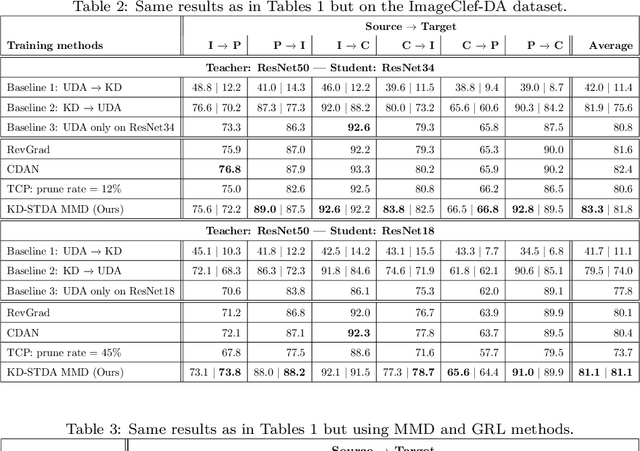

Knowledge Distillation Methods for Efficient Unsupervised Adaptation Across Multiple Domains

Jan 18, 2021

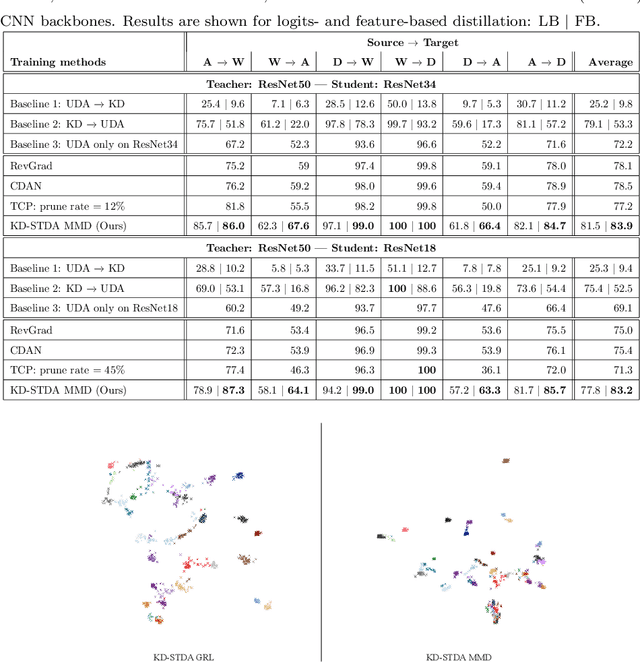

Abstract: Beyond the complexity of CNNs that require training on large annotated datasets, the domain shift between design and operational data has limited the adoption of CNNs in many real-world applications. For instance, in person re-identification, videos are captured over a distributed set of cameras with non-overlapping viewpoints. The shift between the source (e.g. lab setting) and target (e.g. cameras) domains may lead to a significant decline in recognition accuracy. Additionally, state-of-the-art CNNs may not be suitable for such real-time applications given their computational requirements. Although several techniques have recently been proposed to address domain shift problems through unsupervised domain adaptation (UDA), or to accelerate/compress CNNs through knowledge distillation (KD), we seek to simultaneously adapt and compress CNNs to generalize well across multiple target domains. In this paper, we propose a progressive KD approach for unsupervised single-target DA (STDA) and multi-target DA (MTDA) of CNNs. Our method for KD-STDA adapts a CNN to a single target domain by distilling from a larger teacher CNN, trained on both target and source domain data in order to maintain its consistency with a common representation. Our proposed approach is compared against state-of-the-art methods for compression and STDA of CNNs on the Office31 and ImageClef-DA image classification datasets. It is also compared against state-of-the-art methods for MTDA on Digits, Office31, and OfficeHome. In both settings -- KD-STDA and KD-MTDA -- results indicate that our approach can achieve the highest level of accuracy across target domains, while requiring a comparable or lower CNN complexity.

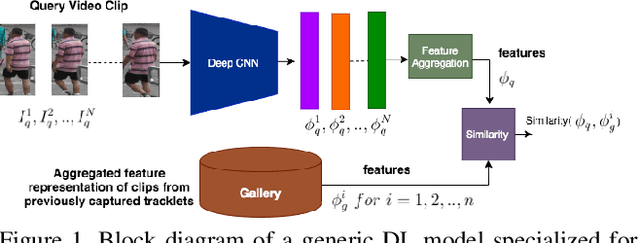

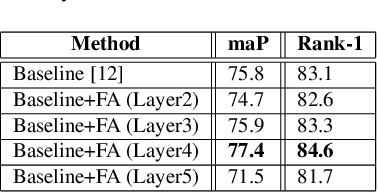

Flow-Guided Attention Networks for Video-Based Person Re-Identification

Aug 09, 2020

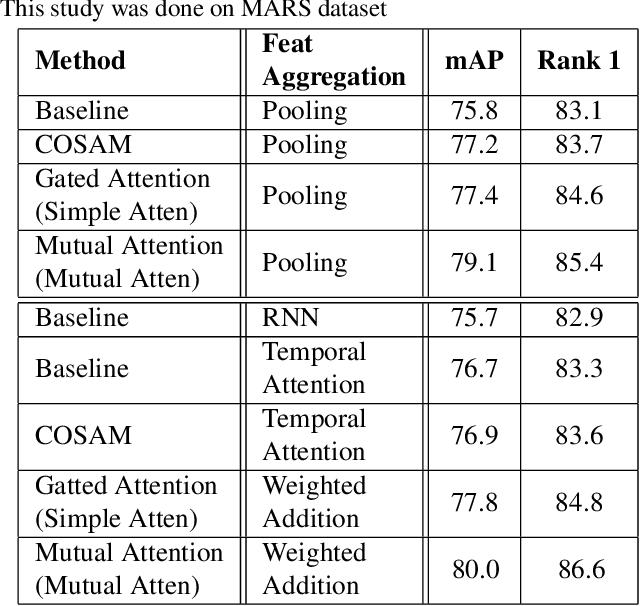

Abstract: Person Re-Identification (ReID) is an important problem in many video analytics and surveillance applications, where a person's identity must be associated across a distributed network of cameras. Video-based person ReID has recently gained much interest because it can capture discriminant spatio-temporal information that is unavailable for image-based ReID. Despite recent advances, deep learning models for video ReID often fail to leverage this information to improve the robustness of feature representations. In this paper, the motion pattern of a person is explored as an additional cue for ReID. In particular, two different flow-guided attention networks are proposed for fusion with any 2D-CNN backbone, making it possible to encode temporal information along with spatial appearance information. Our first proposed network, called Gated Attention, relies on optical flow to generate gated attention with video-based features that are embedded spatially. Hence, the proposed framework allows activating a common set of salient features across multiple frames. In contrast, our second network, called Mutual Attention, relies on the joint attention between image and optical flow features. This enables spatial attention between both sources of features, across motion and appearance cues. Both methods introduce a feature aggregation method that produces video features by identifying salient spatio-temporal information. Extensive experiments on two challenging video datasets indicate that using the proposed flow-guided spatio-temporal attention networks considerably improves recognition accuracy, outperforming state-of-the-art methods for video-based person ReID. Additionally, our Mutual Attention network is able to process longer frame sequences with a wider range of appearance variations for highly accurate recognition.

Unsupervised Multi-Target Domain Adaptation Through Knowledge Distillation

Jul 20, 2020

Abstract: Unsupervised domain adaptation (UDA) seeks to alleviate the problem of domain shift between the distribution of unlabeled data from the target domain with respect to labeled data from the source domain. While the single-target domain scenario is well studied in the UDA literature, the Multi-Target Domain Adaptation (MTDA) setting remains largely unexplored despite its importance. For instance, in video surveillance, each camera can correspond to a different viewpoint (target domain). The MTDA problem can be addressed by adapting one specialized model per target domain, although this solution is too costly in many applications. It has also been addressed by blending target data for multi-domain adaptation to train a common model, yet this may lead to a reduction in performance. In this paper, we propose a new unsupervised MTDA approach to train a common CNN that can generalize across multiple target domains. Our approach, the Multi-Teacher MTDA (MT-MTDA), relies on multi-teacher knowledge distillation (KD) in order to distill target domain knowledge from multiple teachers to a common student. Inspired by a common education scenario, a different target domain is assigned to each teacher model for UDA, and these teachers alternately distill their knowledge to one common student model. The KD process is performed in a progressive manner, where the student is trained by each teacher on how to perform UDA, instead of directly learning domain-adapted features. Finally, instead of directly combining the knowledge from each teacher, MT-MTDA alternates between teachers that distill knowledge in order to preserve the specificity of each target (teacher) when learning to adapt the student. MT-MTDA is compared against state-of-the-art methods on the OfficeHome, Office31, and Digits-5 datasets, and empirical results show that our proposed model can provide a considerably higher level of accuracy across multiple target domains.

Joint Progressive Knowledge Distillation and Unsupervised Domain Adaptation

May 16, 2020

Abstract: Currently, the divergence in distributions of design and operational data, and large computational complexity are limiting factors in the adoption of CNNs in real-world applications. For instance, person re-identification systems typically rely on a distributed set of cameras, where each camera has different capture conditions. This can translate to a considerable shift between source (e.g. lab setting) and target (e.g. operational camera) domains. Given the cost of annotating image data captured for fine-tuning in each target domain, unsupervised domain adaptation (UDA) has become a popular approach to adapt CNNs. Moreover, state-of-the-art deep learning models that provide a high level of accuracy often rely on architectures that are too complex for real-time applications. Although several compression and UDA approaches have recently been proposed to overcome these limitations, they do not allow optimizing a CNN to simultaneously address both. In this paper, we propose an unexplored direction -- the joint optimization of CNNs to provide a compressed model that is adapted to perform well for a given target domain. In particular, the proposed approach performs unsupervised knowledge distillation (KD) from a complex teacher model to a compact student model, by leveraging both source and target data. It also improves upon existing UDA techniques by progressively teaching the student about domain-invariant features, instead of directly adapting a compact model on target domain data. Our method is compared against state-of-the-art compression and UDA techniques, using two popular classification datasets for UDA -- Office31 and ImageClef-DA. In both datasets, results indicate that our method can achieve the highest level of accuracy while requiring a comparable or lower time complexity.
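For readers unfamiliar with knowledge distillation, the following minimal sketch shows the standard temperature-scaled distillation term: the KL divergence between softened teacher and student output distributions. It needs no labels, which is why it can be applied to unlabeled target data. This is the generic formulation only, not the paper's full progressive objective; the function names and the default temperature are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits, softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2.

    Hypothetical helper illustrating generic KD; no ground-truth label is used.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl


matched = distillation_loss([2.0, 0.5, -1.0], [2.0, 0.5, -1.0])
mismatched = distillation_loss([0.0, 0.0, 0.0], [2.0, 0.5, -1.0])
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pulls the compact student toward the teacher's behaviour on whatever data is fed through both models.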

An Improved Trade-off Between Accuracy and Complexity with Progressive Gradient Pruning

Aug 12, 2019

Abstract: Although deep neural networks (NNs) have achieved state-of-the-art accuracy in many visual recognition tasks, the growing computational complexity and energy consumption of networks remains an issue, especially for applications on platforms with limited resources and requiring real-time processing. Channel pruning techniques have recently shown promising results for the compression of convolutional NNs (CNNs). However, these techniques can result in low accuracy and complex optimizations because some only prune after training CNNs, while others prune from scratch during training by integrating sparsity constraints or modifying the loss function. The progressive soft filter pruning technique provides greater training efficiency, but its soft pruning strategy does not handle the backward pass, which is needed for better optimization. In this paper, a new Progressive Gradient Pruning (PGP) technique is proposed for iterative channel pruning during training. It relies on a criterion that measures the change in channel weights that improves existing progressive pruning, and on effective hard and soft pruning strategies to adapt momentum tensors during the backward propagation pass. Experimental results obtained after training various CNNs on the MNIST and CIFAR10 datasets indicate that the PGP technique can achieve a better tradeoff between classification accuracy and network (time and memory) complexity than state-of-the-art channel pruning techniques.
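A criterion based on the change in channel weights can be sketched as follows: each channel is scored by the L1 norm of the difference between its weights at two training steps, and the lowest-scoring channels are selected. The abstract does not specify the exact norm or whether low-change channels are the ones removed, so both choices, along with the function name and the flat-list weight layout, are assumptions made for illustration.

```python
def channels_to_prune(prev_weights, curr_weights, num_prune):
    """Return indices of the channels whose weights changed the least.

    Hypothetical helper: each argument is a list of channels, and each channel
    is a flat list of weights taken at two consecutive training steps.
    Assumption: channels with the smallest L1 change are pruning candidates.
    """
    changes = []
    for idx, (prev, curr) in enumerate(zip(prev_weights, curr_weights)):
        change = sum(abs(c - p) for p, c in zip(prev, curr))
        changes.append((change, idx))
    changes.sort()  # smallest change first; ties broken by channel index
    return sorted(idx for _, idx in changes[:num_prune])


prev = [[1.0, 1.0], [0.5, 0.5], [0.2, 0.2]]
curr = [[1.0, 1.1], [0.9, 0.1], [0.2, 0.2]]
selected = channels_to_prune(prev, curr, num_prune=2)
```

In a soft pruning scheme the selected channels would be zeroed but still allowed to receive updates, whereas a hard scheme would remove them permanently; the sketch covers only the selection step.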

A Comparison of CNN-based Face and Head Detectors for Real-Time Video Surveillance Applications

Sep 10, 2018

Abstract: Detecting faces and heads appearing in video feeds is a challenging task in real-world video surveillance applications due to variations in appearance, occlusions and complex backgrounds. Recently, several CNN architectures have been proposed to increase the accuracy of detectors, although their computational complexity can be an issue, especially for real-time applications, where faces and heads must be detected live using high-resolution cameras. This paper compares the accuracy and complexity of state-of-the-art CNN architectures that are suitable for face and head detection. Single-pass and region-based architectures are reviewed and compared empirically to baseline techniques according to accuracy and to time and memory complexity on images from several challenging datasets. The viability of these architectures is analyzed with real-time video surveillance applications in mind. Results suggest that, although CNN architectures can achieve a very high level of accuracy compared to traditional detectors, their computational cost can represent a limitation for many practical real-time applications.
