Long Lv

Interactive Spatial-Frequency Fusion Mamba for Multi-Modal Image Fusion

Feb 04, 2026

Abstract: Multi-Modal Image Fusion (MMIF) aims to combine images from different modalities into fused images that retain texture details and preserve significant information. Recently, some MMIF methods have incorporated frequency-domain information to enhance spatial features. However, these methods typically rely on simple serial or parallel spatial-frequency fusion without interaction. In this paper, we propose a novel Interactive Spatial-Frequency Fusion Mamba (ISFM) framework for MMIF. Specifically, we begin with a Modality-Specific Extractor (MSE) to extract features from different modalities. It models long-range dependencies across the image with linear computational complexity. To effectively leverage frequency information, we then propose a Multi-scale Frequency Fusion (MFF) module. It adaptively integrates low-frequency and high-frequency components across multiple scales, enabling robust representations of frequency features. More importantly, we further propose an Interactive Spatial-Frequency Fusion (ISF) module. It uses frequency features to guide spatial features across modalities, enhancing complementary representations. Extensive experiments are conducted on six MMIF datasets. The experimental results demonstrate that ISFM achieves better performance than other state-of-the-art methods. The source code is available at https://github.com/Namn23/ISFM.
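As a rough illustration of what "integrating low-frequency and high-frequency components" can mean, the sketch below splits two 1D signals into a smooth (low-frequency) part and a residual (high-frequency) part, then fuses them with a classical hand-crafted rule: average the low parts and keep the larger-magnitude high part. This is not the ISFM method; the abstract describes a learned, adaptive, multi-scale module, whereas the moving-average split, the fusion rule, and the function names `split_frequencies` and `fuse` are assumptions made purely for illustration.

```python
def split_frequencies(signal, window=3):
    """Split a 1D signal into low- and high-frequency parts.

    The low part is a moving average (a crude low-pass filter, an
    assumption for this sketch); the high part is the residual.
    """
    half = window // 2
    n = len(signal)
    low = []
    for i in range(n):
        start, stop = max(0, i - half), min(n, i + half + 1)
        low.append(sum(signal[start:stop]) / (stop - start))
    high = [s - l for s, l in zip(signal, low)]
    return low, high


def fuse(sig_a, sig_b, window=3):
    """Fuse two equally long signals with a classical hand-crafted rule.

    Low-frequency parts are averaged; for high-frequency parts the
    value with the larger magnitude is kept at each position.
    """
    if len(sig_a) != len(sig_b):
        raise ValueError("signals must have the same length")
    low_a, high_a = split_frequencies(sig_a, window)
    low_b, high_b = split_frequencies(sig_b, window)
    fused_low = [(a + b) / 2 for a, b in zip(low_a, low_b)]
    fused_high = [a if abs(a) >= abs(b) else b for a, b in zip(high_a, high_b)]
    return [l + h for l, h in zip(fused_low, fused_high)]
```

By construction the low and high parts always sum back to the original signal, so the split itself loses no information; only the fusion rule decides what is kept from each modality.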

Spatial-Frequency Enhanced Mamba for Multi-Modal Image Fusion

Nov 10, 2025

Abstract: Multi-Modal Image Fusion (MMIF) aims to integrate complementary image information from different modalities to produce informative images. Previous deep learning-based MMIF methods generally adopt Convolutional Neural Networks (CNNs) or Transformers for feature extraction. However, these methods deliver unsatisfactory performance due to the limited receptive field of CNNs and the high computational cost of Transformers. Recently, Mamba has demonstrated strong potential for modeling long-range dependencies with linear complexity, providing a promising solution to MMIF. Unfortunately, Mamba lacks full spatial and frequency perception, both of which are very important for MMIF. Moreover, employing Image Reconstruction (IR) as an auxiliary task has been proven beneficial for MMIF. However, a primary challenge is how to leverage IR efficiently and effectively. To address the above issues, we propose a novel framework named Spatial-Frequency Enhanced Mamba Fusion (SFMFusion) for MMIF. More specifically, we first propose a three-branch structure to couple MMIF and IR, which can retain complete contents from source images. Then, we propose the Spatial-Frequency Enhanced Mamba Block (SFMB), which can enhance Mamba in both spatial and frequency domains for comprehensive feature extraction. Finally, we propose the Dynamic Fusion Mamba Block (DFMB), which can be deployed across different branches for dynamic feature fusion. Extensive experiments show that our method achieves better results than most state-of-the-art methods on six MMIF datasets. The source code is available at https://github.com/SunHui1216/SFMFusion.

CNIMA: A Universal Evaluation Framework and Automated Approach for Assessing Second Language Dialogues

Aug 29, 2024

Abstract: We develop CNIMA (Chinese Non-Native Interactivity Measurement and Automation), a Chinese-as-a-second-language labelled dataset with 10K dialogues. We annotate CNIMA using an evaluation framework, originally introduced for English-as-a-second-language dialogues, that assesses micro-level features (e.g. backchannels) and macro-level interactivity labels (e.g. topic management), and we test the framework's transferability from English to Chinese. We found the framework robust across languages and revealed universal and language-specific relationships between micro-level and macro-level features. Next, we propose an approach to automate the evaluation and find strong performance, creating a new tool for automated second language assessment. Our system can be adapted to other languages easily because it uses large language models and therefore does not require large-scale annotated training data.

M$^{2}$SNet: Multi-scale in Multi-scale Subtraction Network for Medical Image Segmentation

Mar 20, 2023

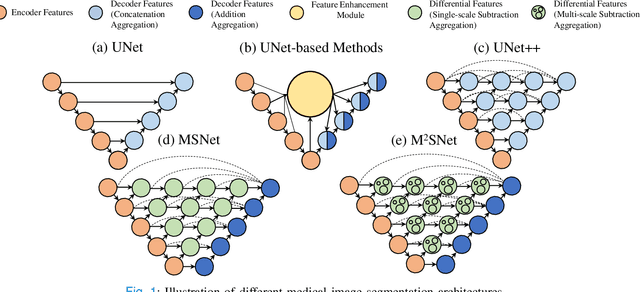

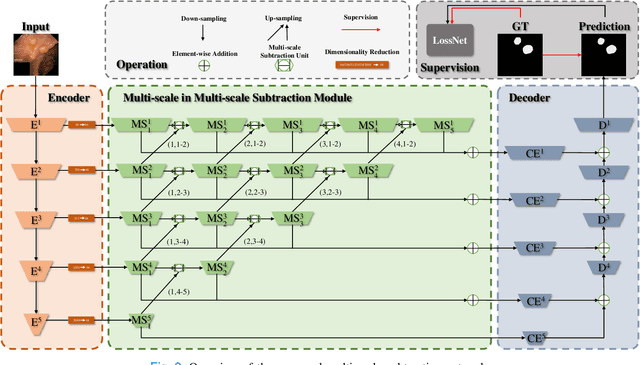

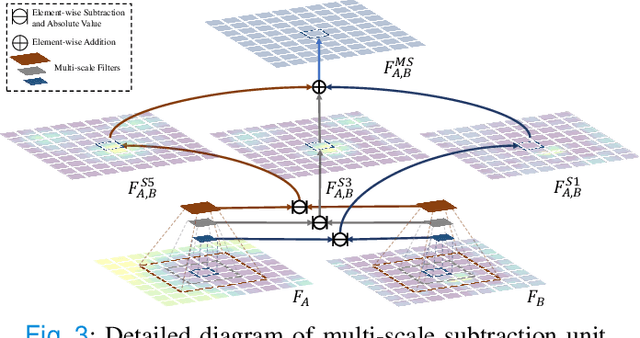

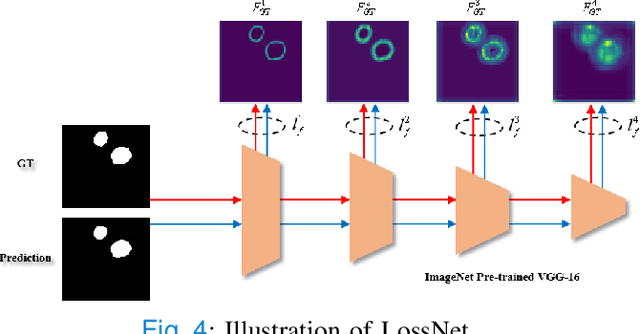

Abstract: Accurate medical image segmentation is critical for early medical diagnosis. Most existing methods are based on a U-shaped structure and use element-wise addition or concatenation to progressively fuse features from different levels in the decoder. However, both operations tend to generate a large amount of redundant information, which weakens the complementarity between features at different levels and results in inaccurate localization and blurred lesion edges. To address this challenge, we propose a general multi-scale in multi-scale subtraction network (M$^{2}$SNet) to handle diverse medical image segmentation tasks. Specifically, we first design a basic subtraction unit (SU) to produce the difference features between adjacent levels in the encoder. Next, we expand the single-scale SU to the intra-layer multi-scale SU, which can provide the decoder with both pixel-level and structure-level difference information. Then, we pyramidally equip the multi-scale SUs at different levels with varying receptive fields, thereby achieving inter-layer multi-scale feature aggregation and obtaining rich multi-scale difference information. In addition, we build a training-free network, "LossNet", to comprehensively supervise the task-aware features from the bottom layer to the top layer, which drives our multi-scale subtraction network to capture detailed and structural cues simultaneously. Without bells and whistles, our method performs favorably against most state-of-the-art methods under different evaluation metrics on eleven datasets covering four medical image segmentation tasks and diverse image modalities, including color colonoscopy imaging, ultrasound imaging, computed tomography (CT), and optical coherence tomography (OCT). The source code is available at https://github.com/Xiaoqi-Zhao-DLUT/MSNet.
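The idea of a subtraction unit can be sketched in a few lines. The example below assumes the difference feature is an element-wise absolute difference between two equally sized feature maps, and it stands in for "multi-scale" by average-pooling both maps before subtracting. Both choices, along with the names `subtraction_unit`, `avg_pool`, and `multi_scale_subtraction`, are assumptions for illustration; the abstract does not specify these details, and any learned convolution layers are omitted.

```python
def subtraction_unit(feat_a, feat_b):
    """Element-wise absolute difference of two equally sized 2D feature maps."""
    same_rows = len(feat_a) == len(feat_b)
    if not same_rows or any(len(ra) != len(rb) for ra, rb in zip(feat_a, feat_b)):
        raise ValueError("feature maps must have the same shape")
    return [[abs(a - b) for a, b in zip(ra, rb)] for ra, rb in zip(feat_a, feat_b)]


def avg_pool(feat, k):
    """Non-overlapping k x k average pooling.

    Assumes both dimensions of feat are divisible by k.
    """
    h, w = len(feat), len(feat[0])
    return [
        [
            sum(feat[i + di][j + dj] for di in range(k) for dj in range(k)) / (k * k)
            for j in range(0, w, k)
        ]
        for i in range(0, h, k)
    ]


def multi_scale_subtraction(feat_a, feat_b, scales=(1, 2)):
    """Difference features at several pooling scales (hypothetical stand-in
    for a multi-scale subtraction unit). Returns a dict keyed by scale."""
    return {
        k: subtraction_unit(avg_pool(feat_a, k), avg_pool(feat_b, k))
        for k in scales
    }
```

At scale 1 the result highlights pixel-level differences, while coarser scales respond to differences in local averages, which loosely mirrors the pixel-level versus structure-level distinction drawn in the abstract.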
