Liantao Wu

Sigil: Server-Enforced Watermarking in U-Shaped Split Federated Learning via Gradient Injection

Nov 18, 2025

Abstract: In decentralized machine learning paradigms such as Split Federated Learning (SFL) and its variant U-shaped SFL, the server's capabilities are severely restricted. Although this enhances client-side privacy, it also leaves the server highly vulnerable to model theft by malicious clients. Protecting the intellectual property of such capability-limited servers poses a dual challenge: watermarking schemes that depend on client cooperation are unreliable in adversarial settings, whereas traditional server-side watermarking schemes are technically infeasible because the server lacks access to critical elements such as model parameters or labels. To address this challenge, this paper proposes Sigil, a mandatory watermarking framework designed specifically for capability-limited servers. Sigil defines the watermark as a statistical constraint on the server-visible activation space and embeds it into the client model via gradient injection, without requiring any knowledge of the data. In addition, we design an adaptive gradient clipping mechanism that keeps the watermarking process both mandatory and stealthy, effectively countering existing gradient anomaly detection methods as well as a specifically designed adaptive subspace removal attack. Extensive experiments on multiple datasets and models demonstrate Sigil's fidelity, robustness, and stealthiness.
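
To make "gradient injection with adaptive clipping" concrete, here is a minimal sketch under assumptions of our own, not the paper's specification. In U-shaped SFL the server receives client activations and returns gradients with respect to them, so it can alter what it returns. We assume the watermark constraint is "the projection of each activation onto a secret unit direction `u` should reach a margin `tau`", that the server adds the gradient of a hinge penalty on that projection, and that adaptive clipping caps the injected part at a fraction `rho` of the honest gradient's norm. The names `inject_watermark_gradient`, `u`, `tau`, `lam`, and `rho` are hypothetical.

```python
import math


def l2_norm(v):
    """Euclidean norm of a plain Python vector."""
    return math.sqrt(sum(x * x for x in v))


def inject_watermark_gradient(acts, honest_grads, u, tau=1.0, lam=1.0, rho=0.1):
    """Add a clipped watermark gradient to the gradients the server returns.

    Hypothetical sketch, not Sigil's actual mechanism.
    acts:         client activations seen by the server, one vector per sample
    honest_grads: true gradients of the loss w.r.t. those activations
    u:            hypothetical secret unit direction defining the watermark
    tau:          hypothetical margin the projection <a, u> should reach
    lam:          weight of the watermark penalty
    rho:          adaptive clip ratio, relative to each honest gradient's norm
    """
    n = len(acts)
    returned = []
    for a, g in zip(acts, honest_grads):
        proj = sum(ai * ui for ai, ui in zip(a, u))
        # Penalty (lam / n) * max(0, tau - <a, u>): its gradient w.r.t. a is
        # -(lam / n) * u while the constraint is violated, and zero otherwise.
        if proj < tau:
            w = [-(lam / n) * ui for ui in u]
        else:
            w = [0.0] * len(u)
        # Adaptive clipping: the injected part never exceeds rho * ||g||, so the
        # returned gradient stays close to the honest one in norm.
        cap = rho * l2_norm(g)
        w_norm = l2_norm(w)
        if w_norm > cap:
            scale = cap / w_norm  # w_norm > cap >= 0, so this division is safe
            w = [wi * scale for wi in w]
        returned.append([gi + wi for gi, wi in zip(g, w)])
    return returned
```

A client that runs gradient descent on the returned values nudges its activations toward a larger projection on `u`; verification would then test that statistic on server-visible activations. Tying the clip to each honest gradient's norm is one simple way to keep the injected signal small relative to normal training updates.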

Robust Client-Server Watermarking for Split Federated Learning

Nov 17, 2025

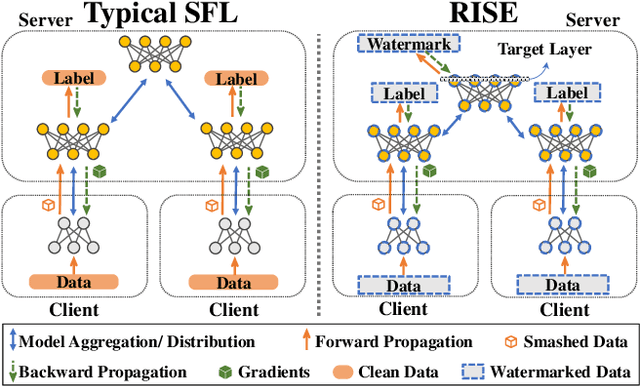

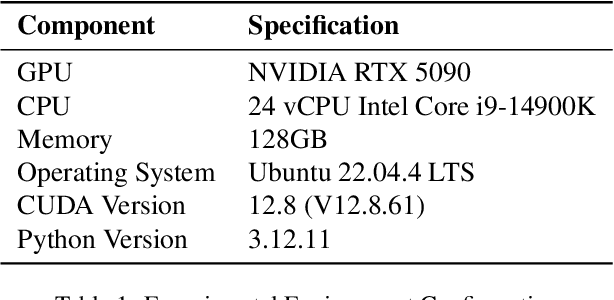

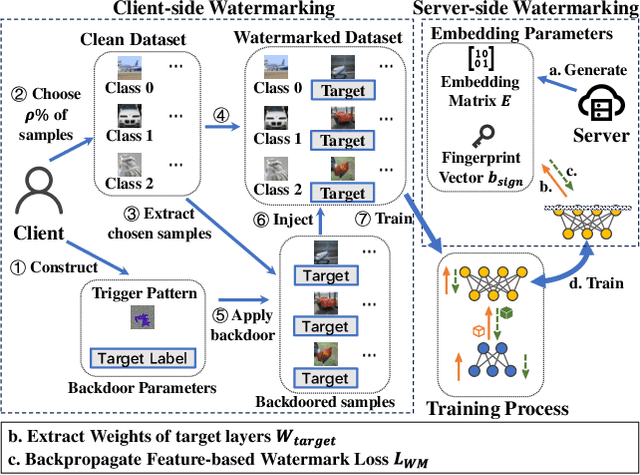

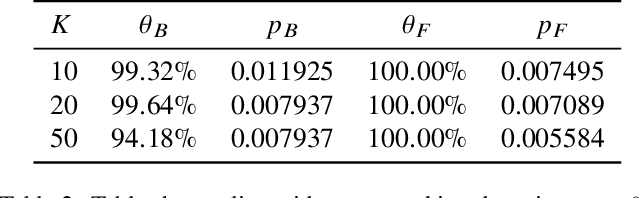

Abstract: Split Federated Learning (SFL) stands out among decentralized machine learning paradigms for its privacy-preserving nature and low computational overhead. In this framework, clients employ lightweight models to process private data locally and transmit intermediate outputs to a powerful server for further computation. However, SFL is a double-edged sword: while it enables edge computing and enhances privacy, it also introduces intellectual property ambiguity, as both the clients and the server jointly contribute to training. Existing watermarking techniques fail to protect both sides because no single participant possesses the complete model. To address this, we propose RISE, a Robust model Intellectual property protection scheme using client-Server watermark Embedding for SFL. Specifically, RISE adopts an asymmetric client-server watermarking design: the server embeds feature-based watermarks through a loss regularization term, while clients embed backdoor-based watermarks by injecting predefined trigger samples into their private datasets. This co-embedding strategy enables both the clients and the server to verify model ownership. Experimental results on standard datasets and multiple network architectures show that RISE achieves a watermark detection rate above $95\%$ ($p$-value $< 0.03$) in most settings. The client- and server-side watermarks do not interfere with each other, and RISE remains robust against common removal attacks.
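
The server-side half of the co-embedding can be pictured as a regularized objective. The sketch below assumes a generic feature-watermark regularizer, which is our choice and not necessarily RISE's exact term: a secret projection matrix `X` maps the batch-mean feature vector to logits, and binary cross-entropy pushes them toward a secret bit string. Ownership is then read back from the signs of those logits. The names `watermark_loss`, `server_loss`, `extract_bits`, and the weight `lam` are hypothetical; the client-side backdoor watermark is not shown.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mean_feature(features):
    """Average the server-side feature vectors of a batch."""
    dim = len(features[0])
    return [sum(f[j] for f in features) / len(features) for j in range(dim)]


def watermark_loss(features, X, bits):
    """Binary cross-entropy between sigmoid(X @ mean_feature) and the secret bits."""
    m = mean_feature(features)
    loss = 0.0
    for row, b in zip(X, bits):
        p = sigmoid(sum(r * mj for r, mj in zip(row, m)))
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # keep log() finite
        loss -= b * math.log(p) + (1 - b) * math.log(1.0 - p)
    return loss / len(bits)


def server_loss(task_loss, features, X, bits, lam=0.1):
    """Hypothetical total objective: task loss plus the weighted watermark term."""
    return task_loss + lam * watermark_loss(features, X, bits)


def extract_bits(features, X):
    """Read the watermark back as the sign of each projected logit."""
    m = mean_feature(features)
    return [1 if sum(r * mj for r, mj in zip(row, m)) > 0 else 0 for row in X]


def bit_accuracy(extracted, bits):
    """Fraction of watermark bits recovered correctly."""
    return sum(e == b for e, b in zip(extracted, bits)) / len(bits)
```

Because the term only touches server-side features, the server can embed it without access to the client models; in a real framework its gradient would come from autodiff rather than these plain-Python helpers.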

FFCBA: Feature-based Full-target Clean-label Backdoor Attacks

Apr 29, 2025

Abstract: Backdoor attacks pose a significant threat to deep neural networks: a backdoored model misclassifies poisoned samples carrying a specific trigger into target classes while maintaining normal performance on clean samples. Among these, multi-target backdoor attacks can target multiple classes simultaneously. However, existing multi-target backdoor attacks all follow the dirty-label paradigm, in which poisoned samples are mislabeled, and most of them require an extremely high poisoning rate. This makes them easy to detect by manual inspection. In contrast, clean-label attacks are stealthier because they do not modify the labels of poisoned samples. However, they generally struggle to achieve stable and satisfactory attack performance and often fail to scale to multi-target attacks. To address this issue, we propose Feature-based Full-target Clean-label Backdoor Attacks (FFCBA), which consists of two paradigms: Feature-Spanning Backdoor Attacks (FSBA) and Feature-Migrating Backdoor Attacks (FMBA). FSBA leverages class-conditional autoencoders to generate noise triggers that align perturbed in-class samples with the original category's features, ensuring the effectiveness, intra-class consistency, inter-class specificity, and natural-feature correlation of the triggers. While FSBA supports fast and efficient attacks, its cross-model attack capability is relatively weak. FMBA employs a two-stage class-conditional autoencoder training process that alternates between out-of-class and in-class samples. This allows FMBA to generate triggers with strong target-class features, making it highly effective for cross-model attacks. Experiments on multiple datasets and models show that FFCBA achieves outstanding attack performance and maintains desirable robustness against state-of-the-art backdoor defenses.

FlocOff: Data Heterogeneity Resilient Federated Learning with Communication-Efficient Edge Offloading

May 29, 2024

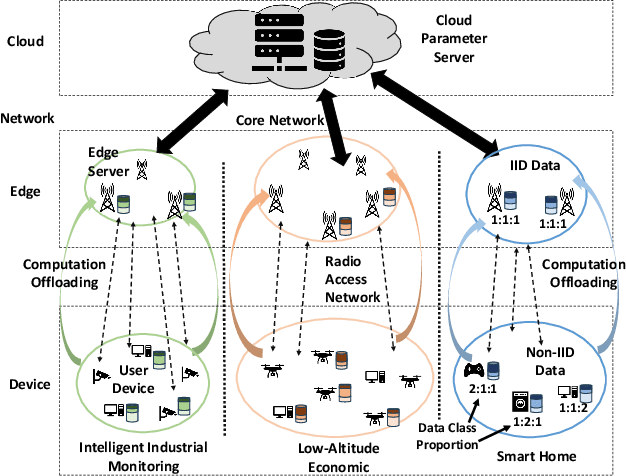

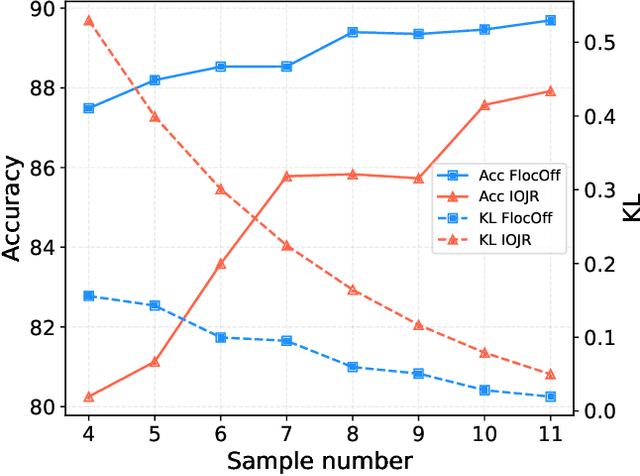

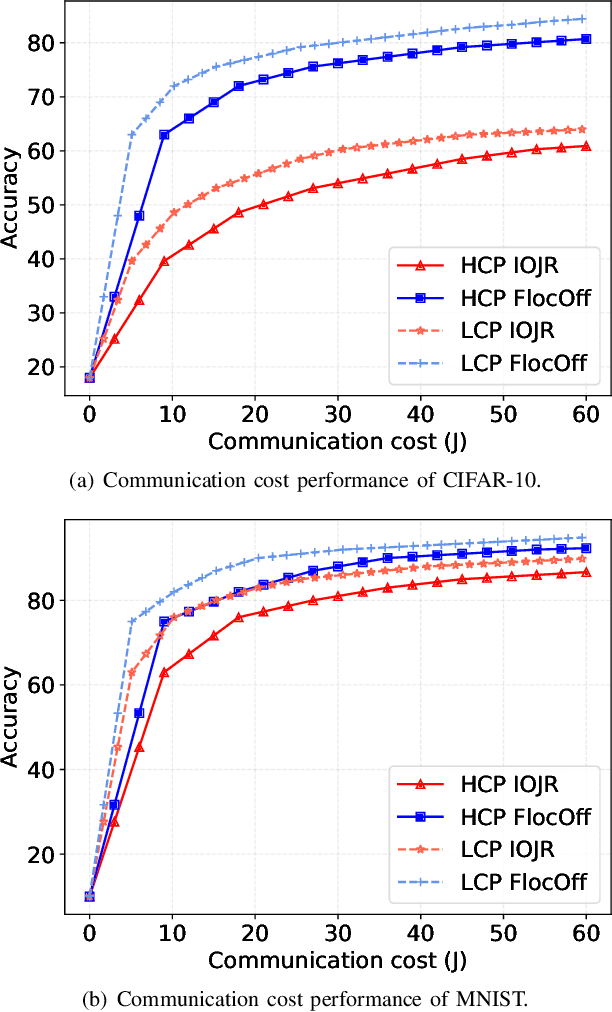

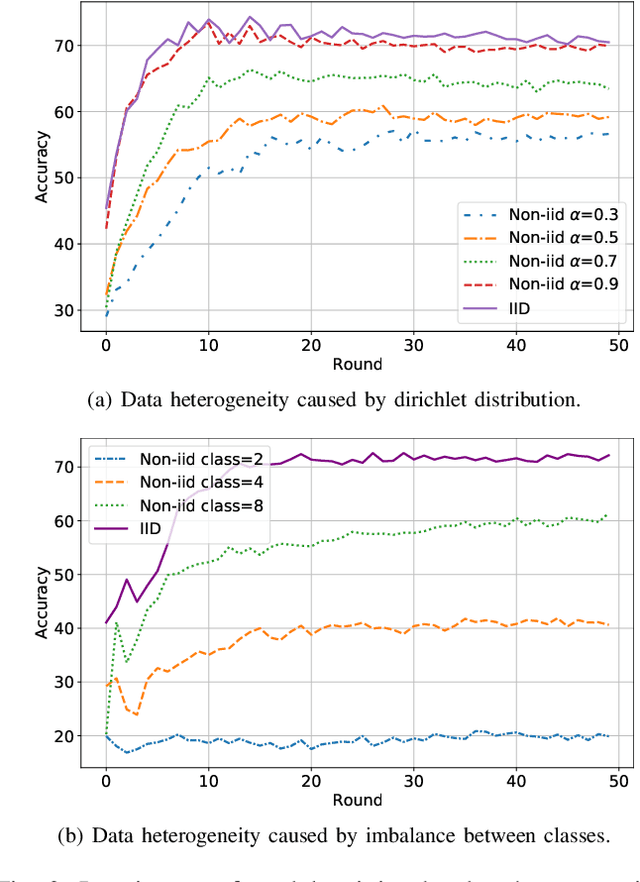

Abstract: Federated Learning (FL) has emerged as a fundamental learning paradigm for harnessing massive data scattered across geo-distributed edge devices in a privacy-preserving way. Given the heterogeneous deployment of edge devices, however, their data are usually Non-IID, which introduces significant challenges to FL, including degraded training accuracy, intensive communication costs, and high computing complexity. Traditional approaches typically rely on adaptive mechanisms, which may suffer from scalability issues, increased computational overhead, and limited adaptability to diverse edge environments. Instead, this paper leverages the observation that computation offloading involves inherent functionalities, such as node matching and service correlation, that can reshape the data distribution, and proposes the Federated learning based on computing Offloading (FlocOff) framework to address data heterogeneity and resource constraints. Specifically, FlocOff formulates the FL process with Non-IID data in edge scenarios and rigorously analyzes the impact of imbalanced data distributions. Based on this analysis, FlocOff decouples the optimization into two steps: (1) Minimizing the Kullback-Leibler (KL) divergence via Computation Offloading scheduling (MKL-CO); and (2) Minimizing the Communication Cost through Resource Allocation (MCC-RA). Extensive experimental results demonstrate that FlocOff improves model convergence and accuracy by 14.3% to 32.7% while reducing data heterogeneity under various data distributions.
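
The MKL-CO step scores how far each edge node's label distribution $P$ is from the global one $Q$, using $D_{KL}(P\|Q) = \sum_c P(c)\log\frac{P(c)}{Q(c)}$. The sketch below only illustrates that quantity and how moving samples between nodes changes it. It assumes (our assumption) that divergence is measured on per-node label histograms and that offloading simply relocates labeled samples; the helper names `total_kl` and `offload` are hypothetical, and this is not FlocOff's scheduler.

```python
import math
from collections import Counter


def distribution(counts, classes):
    """Normalize a label histogram; assumes the node holds at least one sample."""
    total = sum(counts.get(c, 0) for c in classes)
    return {c: counts.get(c, 0) / total for c in classes}


def kl_divergence(p, q, eps=1e-12):
    """D_KL(P || Q) = sum_c P(c) log(P(c) / Q(c)); terms with P(c) = 0 contribute 0."""
    return sum(pc * math.log(pc / max(q[c], eps)) for c, pc in p.items() if pc > 0)


def total_kl(nodes, classes):
    """Sum of each node's KL divergence from the global label distribution."""
    global_counts = Counter()
    for node in nodes:
        global_counts.update(node)
    q = distribution(global_counts, classes)
    return sum(kl_divergence(distribution(node, classes), q) for node in nodes)


def offload(nodes, src, dst, label, k):
    """Return a copy of nodes with up to k samples of `label` moved from src to dst."""
    new = [Counter(node) for node in nodes]
    moved = min(k, new[src][label])
    new[src][label] -= moved
    new[dst][label] += moved
    return new
```

For two nodes that each hold only one of two classes, the total divergence is $2\ln 2$; swapping half of each node's samples makes both histograms match the global one and drives it to zero. The global distribution is unchanged by offloading, so only the per-node terms move.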

Integrating Communication, Sensing and Computing in Satellite Internet of Things: Challenges and Opportunities

Dec 03, 2023

Abstract: Satellite Internet of Things (IoT) uses satellites as access points for IoT devices to achieve global coverage in future IoT systems, and is expected to support burgeoning IoT applications, including communication, sensing, and computing. However, complex and dynamic satellite environments and limited network resources raise new challenges in the design of satellite IoT systems. In this article, we focus on the joint design of communication, sensing, and computing to improve the performance of satellite IoT, which differs considerably from the case of terrestrial IoT systems. We describe how integrating the three functions can enhance system capabilities and summarize state-of-the-art solutions. Furthermore, we discuss the main open challenges in integrating communication, sensing, and computing in satellite IoT that urgently need to be addressed.

Towards Efficient Compressive Data Collection in the Internet of Things

May 29, 2021

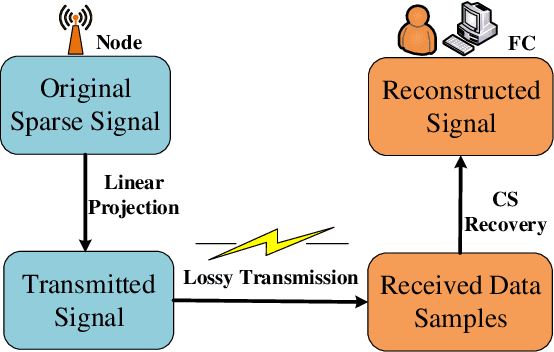

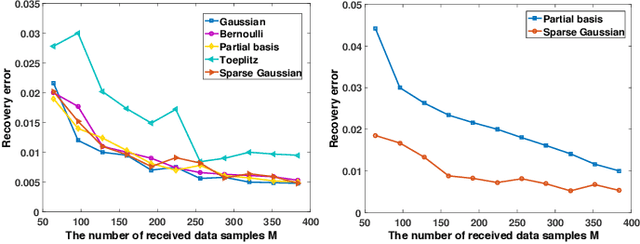

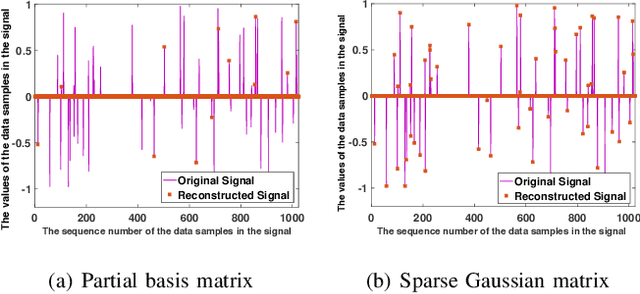

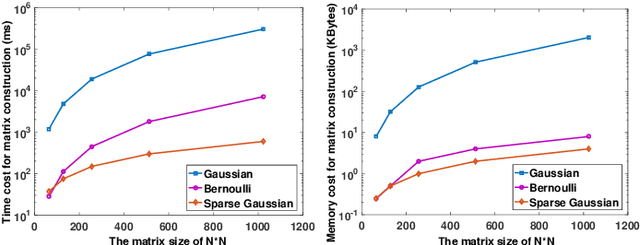

Abstract: Efficient data collection is of paramount importance in the Internet of Things (IoT). Because many signals of interest have inherent structural properties (e.g., sparsity), compressive sensing (CS) has been used extensively for data collection in IoT to improve both accuracy and energy efficiency. Beyond existing works that use CS as a channel coding scheme to deal with data loss during transmission, some recent results have started to employ CS as a source coding strategy. The projection matrices commonly used in these CS-based source coding schemes include dense random matrices (e.g., Gaussian or Bernoulli matrices) and structured matrices (e.g., Toeplitz matrices). However, these matrices are either difficult to implement on resource-constrained IoT sensor nodes or of limited applicability. To address these issues, in this paper we design a novel, simple, and efficient projection matrix, named the sparse Gaussian matrix, which is easy and resource-efficient to implement in practical IoT applications. We conduct both theoretical analysis and experimental evaluation of the designed sparse Gaussian matrix. The results demonstrate that using it for CS-based source coding can significantly reduce time and memory costs while ensuring satisfactory signal recovery performance.
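
The abstract does not spell out the construction, so the sketch below assumes one common reading of a "sparse Gaussian matrix": an $M \times N$ matrix whose entries are independently zero with probability $1 - s$ and otherwise drawn from $\mathcal{N}(0, 1/(sM))$. The variance scaling is our assumption, chosen so each entry has overall variance $1/M$ as in a dense Gaussian matrix. Only the sensor-side encoding $y = \Phi x$ is shown; recovery (e.g., OMP or basis pursuit) is out of scope. Storing just the nonzeros per row is what cuts memory and multiply cost. The function names are hypothetical.

```python
import math
import random


def sparse_gaussian_matrix(m, n, density, seed=0):
    """Build an m x n sparse Gaussian matrix, each row stored as (column, value) pairs.

    Hypothetical construction: each entry is nonzero with probability `density`,
    and nonzeros are N(0, 1 / (density * m)), giving overall entry variance 1 / m.
    """
    if not 0.0 < density <= 1.0:
        raise ValueError("density must be in (0, 1]")
    rng = random.Random(seed)
    std = 1.0 / math.sqrt(density * m)
    rows = []
    for _ in range(m):
        # The condition is evaluated first, so gauss() is drawn only for kept entries.
        row = [(j, rng.gauss(0.0, std)) for j in range(n) if rng.random() < density]
        rows.append(row)
    return rows


def project(rows, x):
    """Compute y = Phi x from the stored nonzeros: O(nnz) work instead of O(m * n)."""
    return [sum(v * x[j] for j, v in row) for row in rows]
```

With `density = 0.1`, a node stores and multiplies roughly a tenth of the entries a dense Gaussian matrix would need, and the fixed `seed` lets the receiver regenerate the same $\Phi$ instead of transmitting it.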
