Mingyang Yue

Integrating Communication, Sensing and Computing in Satellite Internet of Things: Challenges and Opportunities

Dec 03, 2023

Abstract: Satellite Internet of Things (IoT) uses satellites as access points for IoT devices to achieve global coverage for future IoT systems, and is expected to support burgeoning IoT applications spanning communication, sensing, and computing. However, complex and dynamic satellite environments and limited network resources raise new challenges in the design of satellite IoT systems. In this article, we focus on the joint design of communication, sensing, and computing to improve the performance of satellite IoT, which differs considerably from the case of terrestrial IoT systems. We describe how integrating the three functions can enhance system capabilities, and summarize the state-of-the-art solutions. Furthermore, we discuss the main challenges of integrating communication, sensing, and computing in satellite IoT that urgently need to be solved.

OFDM-Based Massive Connectivity for LEO Satellite Internet of Things

Oct 31, 2022

Abstract: Low Earth orbit (LEO) satellites have been considered as a potential supplement to the terrestrial Internet of Things (IoT). In this paper, we consider grant-free non-orthogonal random access (GF-NORA) in an orthogonal frequency division multiplexing (OFDM) system to increase access capacity and reduce access latency for LEO satellite-IoT. We focus on the joint device activity detection (DAD) and channel estimation (CE) problem at the satellite access point. The delay and the Doppler effect of the LEO satellite channel are assumed to be partially compensated. We propose an OFDM-symbol repetition technique to better distinguish the residual Doppler frequency shifts, and present a grid-based parametric probability model to characterize channel sparsity in the delay-Doppler-user domain, as well as the relationship between the channel states and the device activity. Based on that, we develop a robust Bayesian message passing algorithm named modified variance state propagation (MVSP) for joint DAD and CE. Moreover, to tackle the mismatch between the real channel and its on-grid representation, an expectation-maximization (EM) framework is proposed to learn the grid parameters. Simulation results demonstrate that our proposed algorithms significantly outperform the existing approaches in both activity detection probability and channel estimation accuracy.

RIS-Aided Multiuser MIMO-OFDM with Linear Precoding and Iterative Detection: Analysis and Optimization

Aug 30, 2022

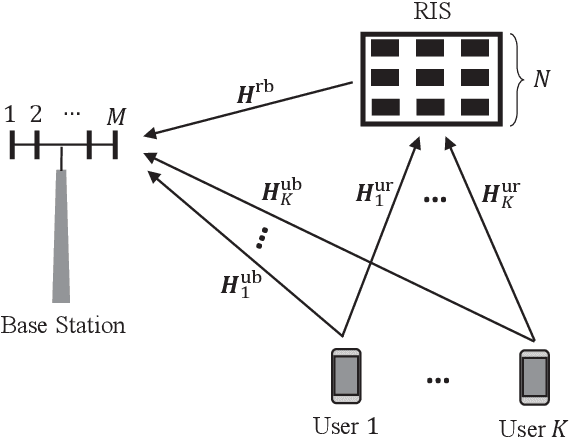

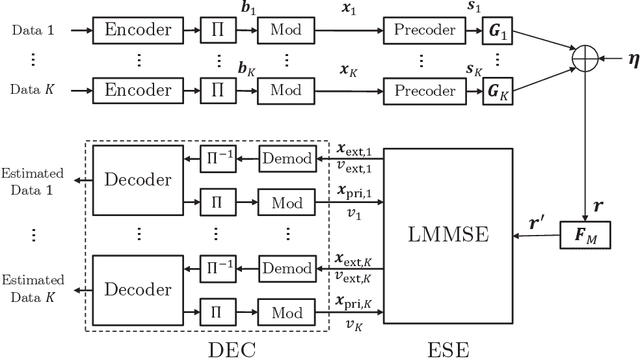

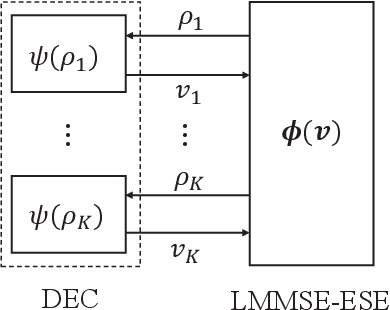

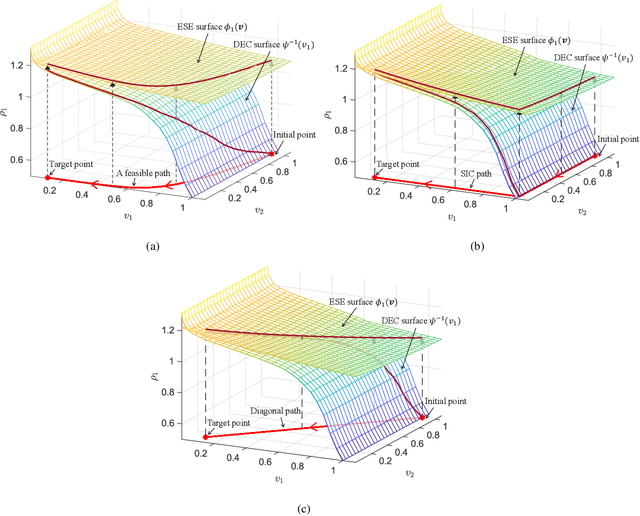

Abstract: In this paper, we consider a reconfigurable intelligent surface (RIS) aided uplink multiuser multiple-input multiple-output (MIMO) orthogonal frequency division multiplexing (OFDM) system, where the receiver is assumed to conduct low-complexity iterative detection. We aim to minimize the total transmit power by jointly designing the precoder of the transmitter and the passive beamforming of the RIS. This problem can be tackled from the perspective of information theory, but that approach may involve prohibitively high complexity, since the number of rate constraints that specify the capacity region of the uplink multiuser channel is exponential in the number of users. To avoid this difficulty, we formulate the design problem of the iterative receiver under the constraints of a maximal iteration number and target bit error rates of users. To tackle this challenging problem, we propose a groupwise successive interference cancellation (SIC) optimization approach, where the signals of users are decoded and cancelled in a group-by-group manner. We present a heuristic user grouping strategy and resort to alternating optimization to iteratively solve the precoding and passive beamforming sub-problems. Specifically, for the precoding sub-problem, we employ fractional programming to convert it to a convex problem; for the passive beamforming sub-problem, we adopt successive convex approximation to deal with the unit-modulus constraints of the RIS. We show that the proposed groupwise SIC approach has significant advantages in both performance and computational complexity compared with its counterparts.
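
The remark about exponentially many rate constraints can be made concrete with the standard description of a K-user multiple-access channel for a fixed input distribution. The sketch below uses generic textbook notation; the symbols $R_k$, $\mathcal{S}$, $X_k$, and $Y$ are illustrative assumptions and are not taken from the paper.

```latex
% Achievable rate region of a K-user multiple-access channel (generic notation, assumed):
% one sum-rate constraint for every nonempty subset S of the users.
\sum_{k \in \mathcal{S}} R_k \;\le\; I\!\left(X_{\mathcal{S}};\, Y \,\middle|\, X_{\mathcal{S}^{c}}\right),
\qquad \forall\, \mathcal{S} \subseteq \{1, \dots, K\},\ \mathcal{S} \neq \emptyset .

% Number of constraints = number of nonempty subsets of K users:
N_{\text{constraints}} = 2^{K} - 1,
\qquad \text{e.g. } K = 10 \Rightarrow 1023, \quad K = 20 \Rightarrow 1\,048\,575 .
```

Under this view, the constraint count roughly doubles with each added user, which is the scaling difficulty the abstract cites as motivation for the groupwise SIC formulation.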
