Laurent Mennillo

Robotic Arm Platform for Multi-View Image Acquisition and 3D Reconstruction in Minimally Invasive Surgery

Oct 15, 2024

Abstract: Minimally invasive surgery (MIS) offers significant benefits such as reduced recovery time and minimised patient trauma, but poses challenges in visibility and access, making accurate 3D reconstruction an important tool in surgical planning and navigation. This work introduces a robotic arm platform for efficient multi-view image acquisition and precise 3D reconstruction in MIS settings. We adapted a laparoscope to a robotic arm and captured ex-vivo images of several ovine organs across varying lighting conditions (operating room and laparoscopic) and trajectories (spherical and laparoscopic). We employed recently released learning-based feature matchers combined with COLMAP to produce our reconstructions. The reconstructions were evaluated quantitatively against high-precision laser scans. Our results show that whilst reconstructions suffer most under realistic MIS lighting and trajectory, many versions of our pipeline achieve close to sub-millimetre accuracy, with an average of 1.05 mm Root Mean Squared Error and 0.82 mm Chamfer distance. Our best reconstruction results occur with operating room lighting and spherical trajectories. Our robotic platform provides a tool for controlled, repeatable multi-view data acquisition for 3D generation in MIS environments, which we hope leads to new datasets for training learning-based models.
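The two error metrics quoted in the abstract can be illustrated with a minimal sketch. The abstract does not say which variant of each metric was used, so the definitions below are assumptions: RMSE is taken over nearest-neighbour distances from the reconstruction to the reference scan, and the Chamfer distance is the mean of the two directional average nearest-neighbour distances. The function names are hypothetical, and the brute-force search is only practical for small point sets.

```python
import math


def nearest_distances(source, target):
    """Distance from each source point to its nearest target point (brute force)."""
    return [min(math.dist(p, q) for q in target) for p in source]


def rmse(reconstruction, reference):
    """Root mean squared nearest-neighbour distance (assumed definition)."""
    d = nearest_distances(reconstruction, reference)
    return math.sqrt(sum(x * x for x in d) / len(d))


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean of both directional averages (assumed definition)."""
    d_ab = nearest_distances(a, b)
    d_ba = nearest_distances(b, a)
    return 0.5 * (sum(d_ab) / len(d_ab) + sum(d_ba) / len(d_ba))


# Toy point sets in millimetres; these are not data from the paper.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reconstruction = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
print(rmse(reconstruction, reference))
print(chamfer_distance(reconstruction, reference))
```

For real reconstructions with many points, a spatial index such as a k-d tree would replace the brute-force search, and the two clouds would need to be aligned in a common frame before any distance is computed.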

Automated Surgical Skill Assessment in Endoscopic Pituitary Surgery using Real-time Instrument Tracking on a High-fidelity Bench-top Phantom

Sep 25, 2024

Abstract: Improved surgical skill is generally associated with improved patient outcomes, although its assessment is subjective, labour-intensive, and requires domain-specific expertise. Automated data-driven metrics can alleviate these difficulties, as demonstrated by existing machine learning instrument tracking models in minimally invasive surgery. However, these models have been tested on limited datasets of laparoscopic surgery, with a focus on isolated tasks and robotic surgery. In this paper, a new public dataset is introduced, focusing on simulated surgery, using the nasal phase of endoscopic pituitary surgery as an exemplar. Simulated surgery allows for a realistic yet repeatable environment, meaning the insights gained from automated assessment can be used by novice surgeons to hone their skills on the simulator before moving to real surgery. PRINTNet (Pituitary Real-time INstrument Tracking Network) has been created as a baseline model for this automated assessment. Consisting of DeepLabV3 for classification and segmentation, StrongSORT for tracking, and the NVIDIA Holoscan SDK for real-time performance, PRINTNet achieved 71.9% Multiple Object Tracking Precision running at 22 Frames Per Second. Using this tracking output, a Multilayer Perceptron achieved 87% accuracy in predicting surgical skill level (novice or expert), with the "ratio of total procedure time to instrument visible time" correlated with higher surgical skill. This demonstrates the feasibility of automated surgical skill assessment in simulated endoscopic pituitary surgery. The new publicly available dataset can be found here: https://doi.org/10.5522/04/26511049.
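As a rough illustration of the "ratio of total procedure time to instrument visible time" feature, the sketch below derives it from per-frame tracking output. The input format (one boolean per video frame indicating whether any instrument was detected) and the function name are assumptions for illustration; the abstract does not describe how PRINTNet's output is stored. The default frame rate matches the 22 Frames Per Second quoted above.

```python
def procedure_to_visible_ratio(visible_flags, fps=22.0):
    """Ratio of total procedure time to time with an instrument in view.

    visible_flags: one boolean per frame (hypothetical tracking output).
    fps: frame rate used to convert frame counts to seconds.
    """
    total_time = len(visible_flags) / fps
    visible_time = sum(1 for flag in visible_flags if flag) / fps
    if visible_time == 0:
        raise ValueError("no frames with a visible instrument")
    return total_time / visible_time


# Toy example: the instrument is visible in 3 of 4 frames.
flags = [True, True, False, True]
print(procedure_to_visible_ratio(flags))
```

Because both times are computed from frame counts at the same frame rate, the ratio is independent of `fps`; the parameter is kept only so the intermediate values are in seconds. A feature like this would be one input among several to a classifier such as the Multilayer Perceptron mentioned in the abstract.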

Autonomous object harvesting using synchronized optoelectronic microrobots

Mar 08, 2021

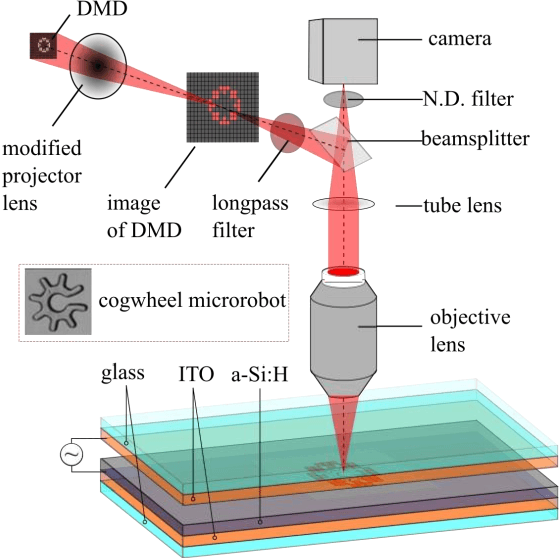

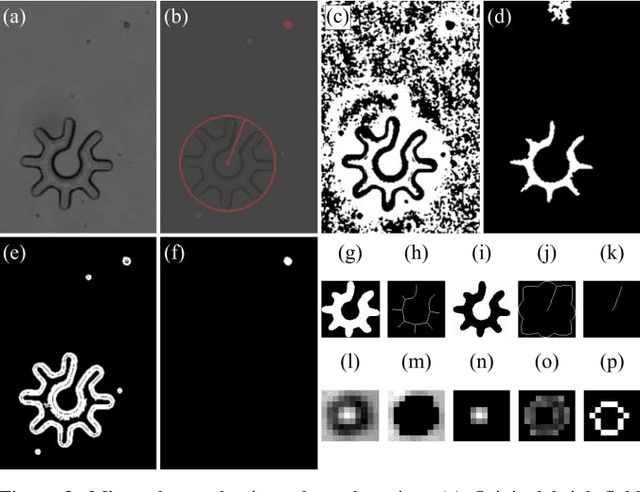

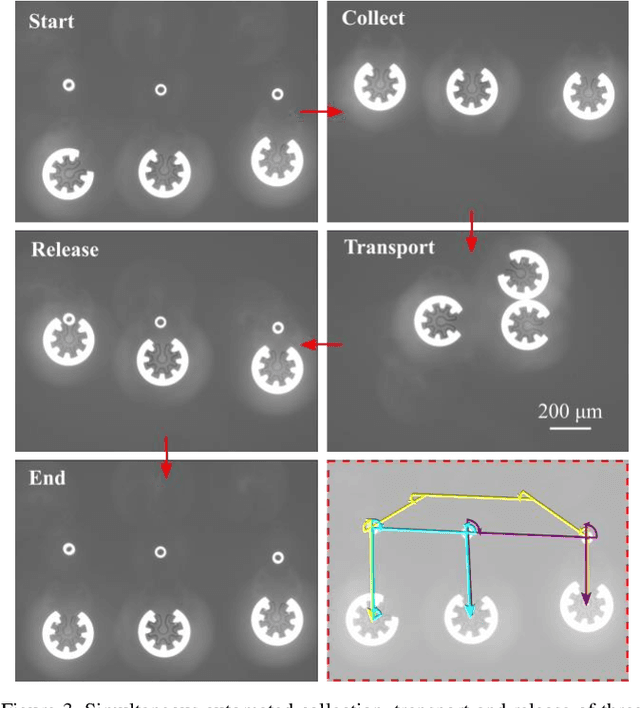

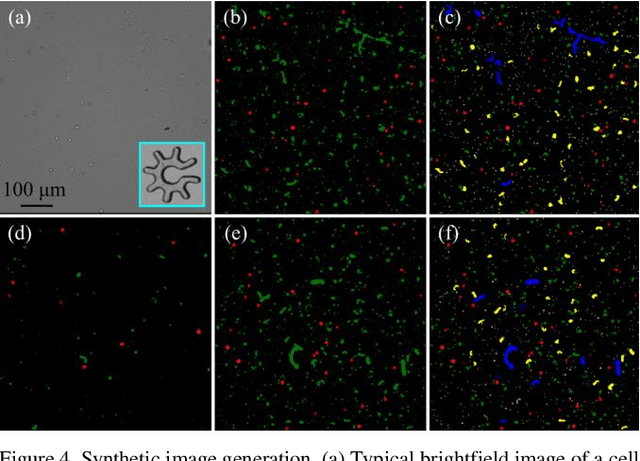

Abstract: Optoelectronic tweezer-driven microrobots (OETdMs) are a versatile micromanipulation technology based on the use of light-induced dielectrophoresis to move small dielectric structures (microrobots) across a photoconductive substrate. The microrobots in turn can be used to exert forces on secondary objects and carry out a wide range of micromanipulation operations, including collecting, transporting and depositing microscopic cargos. In contrast to alternative (direct) micromanipulation techniques, OETdMs are relatively gentle, making them particularly well suited to interacting with sensitive objects such as biological cells. However, at present such systems are used exclusively under manual control by a human operator. This limits the capacity for simultaneous control of multiple microrobots, reducing both experimental throughput and the possibility of cooperative multi-robot operations. In this article, we describe an approach to automated targeting and path planning to enable open-loop control of multiple microrobots. We demonstrate the performance of the method in practice, using microrobots to simultaneously collect, transport and deposit silica microspheres. Using computational simulations based on real microscopic image data, we investigate the capacity of microrobots to collect target cells from within a dissociated tissue culture. Our results indicate the feasibility of using OETdMs to autonomously carry out micromanipulation tasks within complex, unstructured environments.
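The abstract does not describe the targeting or path-planning algorithm, so the following is only a generic sketch of what open-loop multi-robot targeting could look like, not the authors' method. It assumes 2D positions, a greedy nearest-target assignment, and straight-line waypoints that ignore obstacles and robot-robot collisions. All names are hypothetical.

```python
import math


def assign_targets(robots, targets):
    """Greedy assignment: each robot in turn claims its nearest unclaimed target."""
    remaining = list(range(len(targets)))
    assignment = {}
    for robot_index, robot_pos in enumerate(robots):
        if not remaining:
            break  # more robots than targets
        best = min(remaining, key=lambda t: math.dist(robot_pos, targets[t]))
        assignment[robot_index] = best
        remaining.remove(best)
    return assignment


def straight_path(start, goal, steps):
    """Evenly spaced waypoints from start to goal, both endpoints included."""
    return [
        tuple(s + (g - s) * k / steps for s, g in zip(start, goal))
        for k in range(steps + 1)
    ]


# Toy positions in arbitrary units; these are not data from the paper.
robots = [(0, 0), (10, 0)]
targets = [(9, 0), (1, 0)]
plan = assign_targets(robots, targets)
paths = {r: straight_path(robots[r], targets[t], steps=2) for r, t in plan.items()}
print(plan)
print(paths)
```

Open-loop here means the waypoints are computed once and executed without position feedback. A practical planner would also need to route around obstacles and keep robots from interfering with one another, which this sketch omits.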
