Miguel Xochicale

Automated Surgical Skill Assessment in Endoscopic Pituitary Surgery using Real-time Instrument Tracking on a High-fidelity Bench-top Phantom

Sep 25, 2024

Abstract:Improved surgical skill is generally associated with improved patient outcomes, although its assessment is subjective, labour-intensive, and requires domain-specific expertise. Automated data-driven metrics can alleviate these difficulties, as demonstrated by existing machine learning instrument tracking models in minimally invasive surgery. However, these models have been tested on limited datasets of laparoscopic surgery, with a focus on isolated tasks and robotic surgery. In this paper, a new public dataset is introduced, focusing on simulated surgery, using the nasal phase of endoscopic pituitary surgery as an exemplar. Simulated surgery allows for a realistic yet repeatable environment, meaning the insights gained from automated assessment can be used by novice surgeons to hone their skills on the simulator before moving to real surgery. PRINTNet (Pituitary Real-time INstrument Tracking Network) has been created as a baseline model for this automated assessment. Consisting of DeepLabV3 for classification and segmentation, StrongSORT for tracking, and the NVIDIA Holoscan SDK for real-time performance, PRINTNet achieved 71.9% Multiple Object Tracking Precision running at 22 Frames Per Second. Using this tracking output, a Multilayer Perceptron achieved 87% accuracy in predicting surgical skill level (novice or expert), with the "ratio of total procedure time to instrument visible time" correlated with higher surgical skill. This demonstrates the feasibility of automated surgical skill assessment in simulated endoscopic pituitary surgery. The new publicly available dataset can be found here: https://doi.org/10.5522/04/26511049.
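As a minimal sketch of the tracking-derived metric named above, the "ratio of total procedure time to instrument visible time" could be computed from per-frame visibility flags. The abstract does not say how visibility is derived from the tracker output, so the boolean-per-frame input and the function name `procedure_to_visible_time_ratio` are assumptions made for illustration only.

```python
def procedure_to_visible_time_ratio(visible_flags):
    """Ratio of total procedure time to instrument-visible time.

    visible_flags: one boolean per video frame, True when the tracker
    reports at least one instrument in that frame (assumed input format).
    At a constant frame rate the ratio of times equals the ratio of
    frame counts, so the frame rate cancels out.
    """
    total_frames = len(visible_flags)
    visible_frames = sum(1 for flag in visible_flags if flag)
    if visible_frames == 0:
        raise ValueError("no frame contains a visible instrument")
    return total_frames / visible_frames


# Hypothetical 8-frame clip with an instrument visible in 5 frames.
flags = [True, True, False, True, False, False, True, True]
ratio = procedure_to_visible_time_ratio(flags)
print(ratio)
```

A value of 1.0 means an instrument was visible for the whole clip, and larger values mean more time without a visible instrument. A feature like this could be one input among several to a skill classifier such as the Multilayer Perceptron mentioned in the abstract.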

Towards a Simple Framework of Skill Transfer Learning for Robotic Ultrasound-guidance Procedures

May 06, 2023

Abstract:In this paper, we present a simple framework of skill transfer learning for robotic ultrasound-guidance procedures. We briefly review challenges in skill transfer learning for these procedures. We then identify the need for appropriate sampling techniques and computationally efficient neural network models, which leads to the proposal of a simple framework of skill transfer learning for real-time applications in robotic ultrasound-guidance procedures. We present pilot experiments from two participants (one experienced clinician and one non-clinician) looking for an optimal scanning plane of the four-chamber cardiac view from a fetal phantom. We analysed ultrasound image frames and time series of texture image features and quaternions, and found that the experienced clinician performed the procedure more quickly and smoothly, compared with the lengthy and non-constant movements of the non-clinician. For future work, we point out the need for pruned and quantised neural network models for real-time applications in robotic ultrasound-guidance procedures. The resources to reproduce this work are available at https://github.com/mxochicale/rami-icra2023.

Towards Realistic Ultrasound Fetal Brain Imaging Synthesis

Apr 08, 2023

Abstract:Prenatal ultrasound imaging is the first-choice modality to assess fetal health. Medical image datasets for AI and ML methods must be diverse (e.g. in diagnoses, diseases, pathologies, scanners and demographics); however, there are few public ultrasound fetal imaging datasets due to insufficient amounts of clinical data, patient privacy, the rare occurrence of abnormalities in general practice, and the limited number of experts for data collection and validation. To address such data scarcity, we proposed generative adversarial network (GAN)-based models, diffusion-super-resolution-GAN and transformer-based-GAN, to synthesise images of fetal ultrasound brain planes from one public dataset. We reported that GAN-based methods can generate 256x256-pixel images of the fetal ultrasound trans-cerebellum brain plane with stable training losses, resulting in lower FID values for diffusion-super-resolution-GAN (average 7.04 and lowest FID 5.09 at epoch 10) than for transformer-based-GAN (average 36.02 and lowest 28.93 at epoch 60). The results of this work illustrate the potential of GAN-based methods to synthesise realistic high-resolution ultrasound images, leading to future work with other fetal brain planes, anatomies and devices, and to the need for a pool of experts to evaluate synthesised images. Code, data and other resources to reproduce this work are available at https://github.com/budai4medtech/midl2023.
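For readers unfamiliar with the metric, the Fréchet inception distance (FID) compared above is usually defined as below. The symbols are not from the abstract and are introduced here for illustration: $\mu_r, \Sigma_r$ denote the mean and covariance of Inception-network features of real images, and $\mu_g, \Sigma_g$ those of generated images.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

A lower value means the generated feature distribution is closer to the real one, which is why the lower FID of diffusion-super-resolution-GAN is read as the better result.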

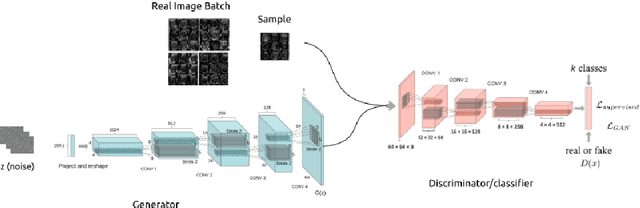

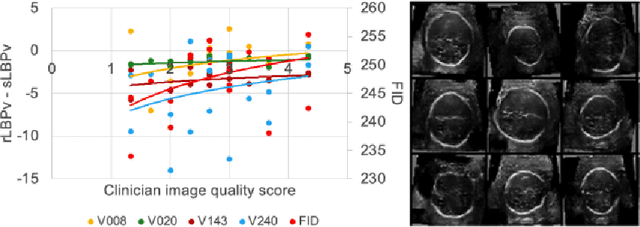

Empirical Study of Quality Image Assessment for Synthesis of Fetal Head Ultrasound Imaging with DCGANs

Jun 01, 2022

Abstract:In this work, we present an empirical study of DCGANs for synthetic generation of fetal head ultrasound, consisting of hyperparameter heuristics and image quality assessment. We present experiments to show the impact of different image sizes, epochs, input data sizes, and learning rates on image quality assessment using four metrics: mutual information (MI), Fréchet inception distance (FID), peak signal-to-noise ratio (PSNR), and local binary pattern vector (LBPv). The results show that FID and LBPv have a stronger relationship with clinical image quality scores. The resources to reproduce this work are available at https://github.com/xfetus/miua2022.
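Of the four metrics listed, PSNR has the simplest closed form: 10 * log10(MAX^2 / MSE). The sketch below applies that standard definition to two greyscale images held as nested lists. It is not taken from the authors' repository. The function name `psnr`, the list-of-rows image format and the 8-bit `max_value` default are assumptions for illustration.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images.

    Images are lists of rows of pixel intensities (assumed 8-bit range).
    """
    ref = [pixel for row in reference for pixel in row]
    gen = [pixel for row in generated for pixel in row]
    if not ref or len(ref) != len(gen):
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(ref, gen)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 2x2 images differing by 5 intensity levels in two pixels.
real = [[0, 255], [255, 0]]
fake = [[0, 250], [255, 5]]
print(psnr(real, fake))
```

Higher PSNR means the two images are closer pixel by pixel. This is a purely pixel-wise comparison, unlike FID, which compares feature distributions.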

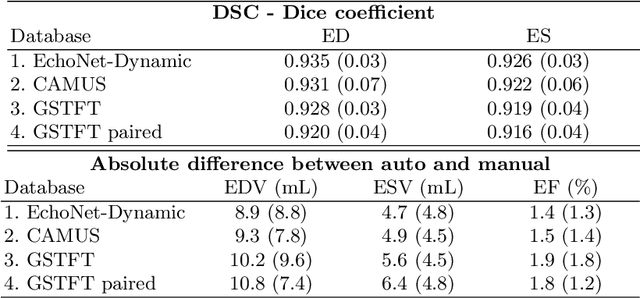

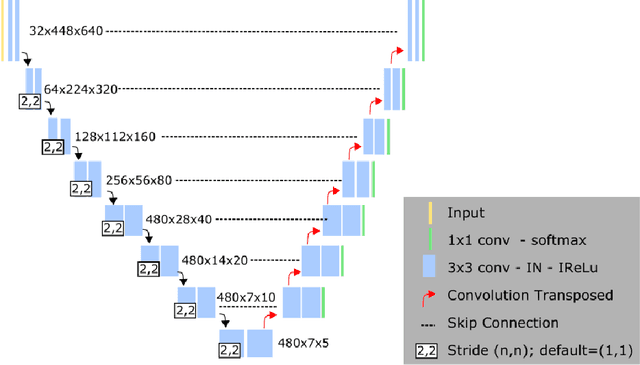

AI-enabled Assessment of Cardiac Systolic and Diastolic Function from Echocardiography

Mar 21, 2022

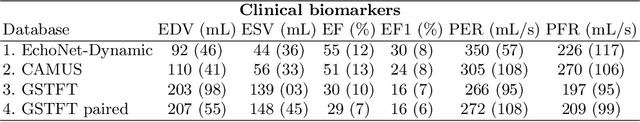

Abstract:Left ventricular (LV) function is an important factor in terms of patient management, outcome, and long-term survival of patients with heart disease. The most recently published clinical guidelines for heart failure recognise that over-reliance on only one measure of cardiac function (LV ejection fraction) as a diagnostic and treatment stratification biomarker is suboptimal. Recent advances in AI-based echocardiography analysis have shown excellent results on automated estimation of LV volumes and LV ejection fraction. However, from time-varying 2-D echocardiography acquisition, a richer description of cardiac function can be obtained by estimating functional biomarkers from the complete cardiac cycle. In this work we propose for the first time an AI approach for deriving advanced biomarkers of systolic and diastolic LV function from 2-D echocardiography based on segmentations of the full cardiac cycle. These biomarkers will allow clinicians to obtain a much richer picture of the heart in health and disease. The AI model is based on the 'nnU-Net' framework and was trained and tested using four different databases. Results show excellent agreement between manual and automated analysis and showcase the potential of the advanced systolic and diastolic biomarkers for patient stratification. Finally, for a subset of 50 cases, we perform a correlation analysis between clinical biomarkers derived from echocardiography and CMR, and we show excellent agreement between the two modalities.
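For context, the single measure the abstract refers to, LV ejection fraction, is conventionally defined as (EDV - ESV) / EDV x 100, where EDV and ESV are the end-diastolic and end-systolic volumes. The sketch below applies that definition to an LV volume curve over one cardiac cycle. It is not the authors' pipeline. Taking EDV and ESV as the maximum and minimum of the curve, the function name `ejection_fraction` and the sample volumes are assumptions for illustration.

```python
def ejection_fraction(volumes_ml):
    """LV ejection fraction (%) from a volume curve over one cardiac cycle.

    volumes_ml: LV volumes in millilitres, one per frame (assumed input).
    EDV is taken as the largest volume and ESV as the smallest.
    """
    if not volumes_ml:
        raise ValueError("volume curve is empty")
    edv = max(volumes_ml)  # end-diastolic volume
    esv = min(volumes_ml)  # end-systolic volume
    if edv <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv - esv) / edv


# Hypothetical volume curve sampled at seven frames of one cycle.
curve = [120, 100, 70, 48, 60, 95, 118]
print(ejection_fraction(curve))  # prints 60.0
```

The same volume curve also carries timing and rate information (for example how quickly the ventricle fills), which is the kind of full-cycle description the abstract argues a single ejection fraction value cannot provide.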

Piloting Diversity and Inclusion Workshops in Artificial Intelligence and Robotics for Children

Mar 10, 2022

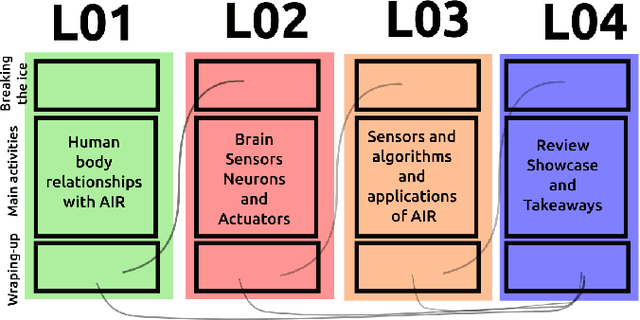

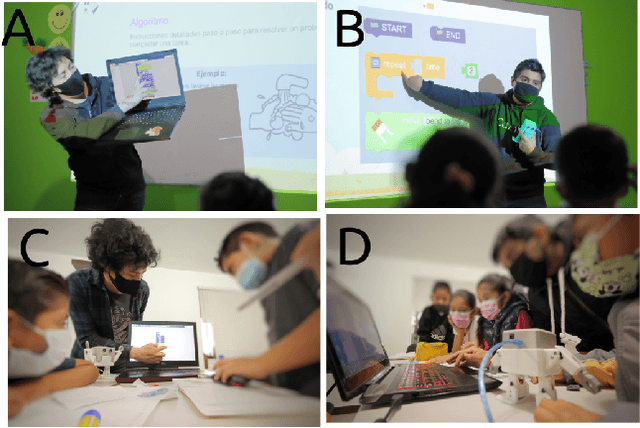

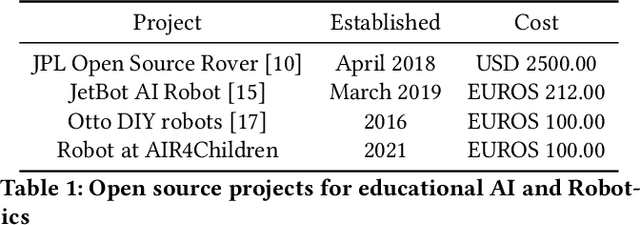

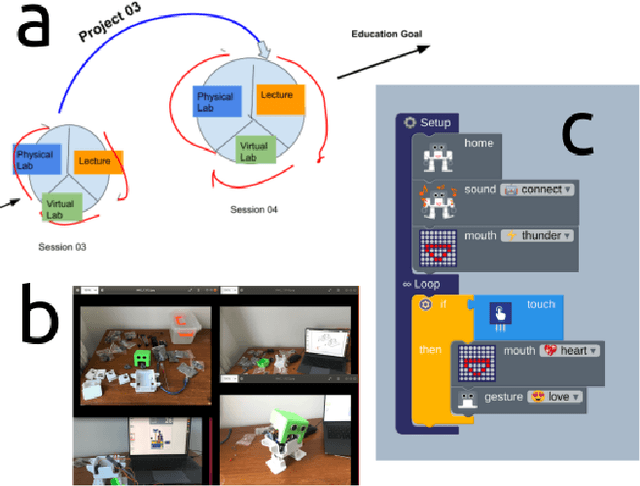

Abstract:In this paper, we present preliminary work from a pilot workshop that aimed to promote diversity and inclusion in teaching the fundamentals of Artificial Intelligence and Robotics for Children (air4children) in the context of developing countries. Considering the scarcity of funding and the little to no availability of specialised professionals to teach AI and robotics in developing countries, we present resources based on free open-source hardware and software, open educational resources, and alternative education programs. The contribution of this work is a pilot workshop of four lessons that promote diversity and inclusion in teaching AI and Robotics to children, delivered to a small gender-balanced sample of 14 children with an average age of 7.64 years. We conclude that participants, instructors, coordinators and parents engaged well in the pilot workshop, and we note the various challenges of having the right resources for workshops in developing countries and outline future work. The resources to reproduce this work are available at https://github.com/air4children/hri2022.

AIR4Children: Artificial Intelligence and Robotics for Children

Mar 13, 2021

Abstract:We introduce AIR4Children, Artificial Intelligence and Robotics for Children, as a way to (a) tackle aspects of inclusion, accessibility, transparency, equity, fairness and participation and (b) create affordable child-centred materials in AI and Robotics (AIR). We present current challenges and opportunities for child-centred approaches to AIR. We also touch on open-source software and hardware technologies that enable more inclusive, affordable and fair participation of children in areas of AIR. Then, we describe the avenues that AIR4Children can take with the development of open-source software and hardware based on our initial pilots and experiences. In addition, we propose to follow the philosophy of Montessori education to help children not only develop computational thinking but also internalise new concepts and learning skills through activities of movement and repetition. Finally, we conclude with the opportunities of our work and pose, as the main future work, putting into practice what is proposed here to evaluate the potential impact of AIR on children, instructors, parents and their community.
