Laurent Kloeker

Framework for Quality Evaluation of Smart Roadside Infrastructure Sensors for Automated Driving Applications

Apr 16, 2023

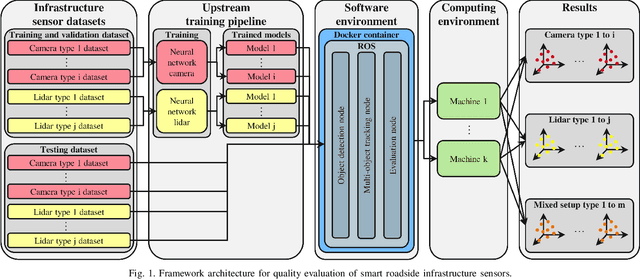

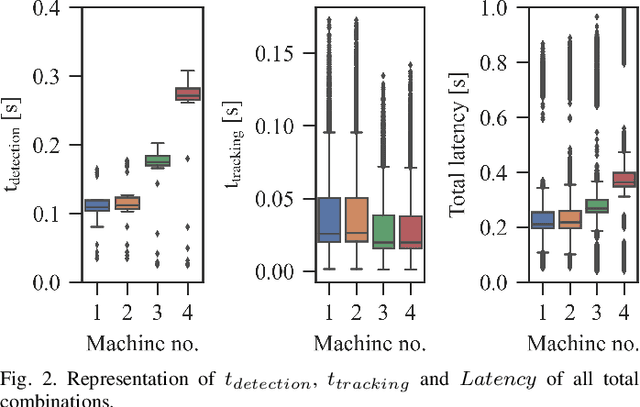

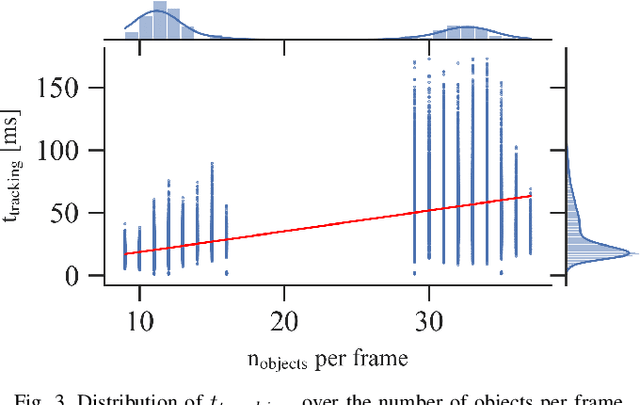

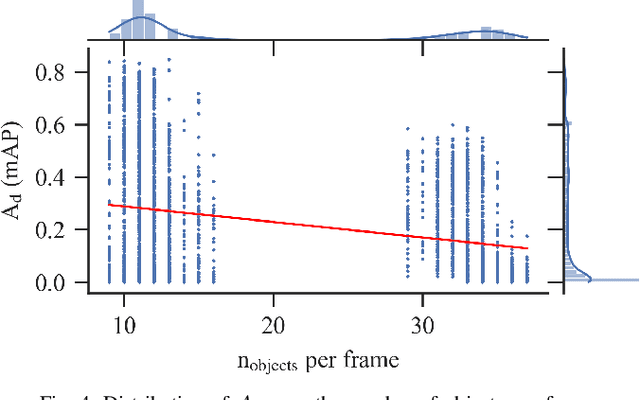

Abstract: The use of smart roadside infrastructure sensors is highly relevant for future applications of connected and automated vehicles. External sensor technology in the form of intelligent transportation system stations (ITS-Ss) can provide safety-critical real-time information about road users in the form of a digital twin. The choice of sensor setups has a major influence on the downstream function as well as the data quality. To date, there is insufficient research on which sensor setups result in which levels of ITS-S data quality. We present a novel approach to perform detailed quality assessment for smart roadside infrastructure sensors. Our framework is multimodal across different sensor types and is evaluated on the DAIR-V2X dataset. We analyze the composition of different lidar and camera sensors and assess them in terms of accuracy, latency, and reliability. The evaluations show that the framework can be used reliably for several future ITS-S applications.

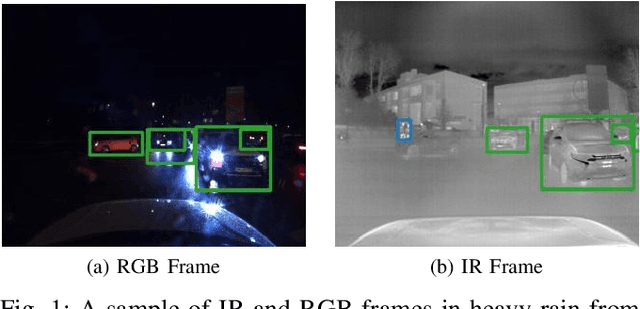

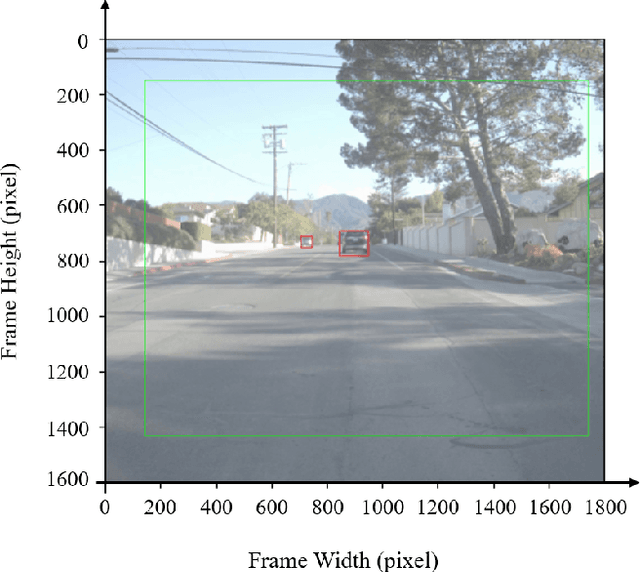

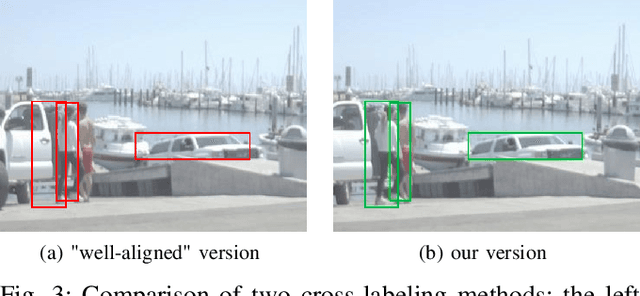

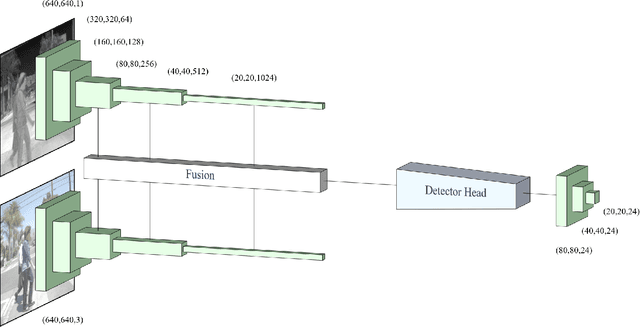

Robust Environment Perception for Automated Driving: A Unified Learning Pipeline for Visual-Infrared Object Detection

Jun 08, 2022

Abstract: The RGB complementary metal-oxide-semiconductor (CMOS) sensor works within the visible light spectrum. Therefore, it is very sensitive to environmental light conditions. In contrast, a long-wave infrared (LWIR) sensor, operating in the 8-14 micrometer spectral band, functions independently of visible light. In this paper, we exploit both visual and thermal perception units for robust object detection purposes. After delicate synchronization and (cross-)labeling of the FLIR [1] dataset, this multi-modal perception data passes through a convolutional neural network (CNN) to detect three critical objects on the road, namely pedestrians, bicycles, and cars. After evaluation of RGB and infrared (thermal and infrared are often used interchangeably) sensors separately, various network structures are compared to fuse the data at the feature level effectively. Our RGB-thermal (RGBT) fusion network, which takes advantage of a novel entropy-block attention module (EBAM), outperforms the state-of-the-art network [2] by 10% with 82.9% mAP.

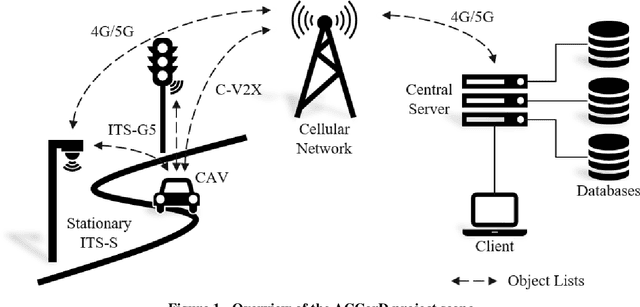

Corridor for new mobility Aachen-Düsseldorf: Methods and concepts of the research project ACCorD

Jul 13, 2021

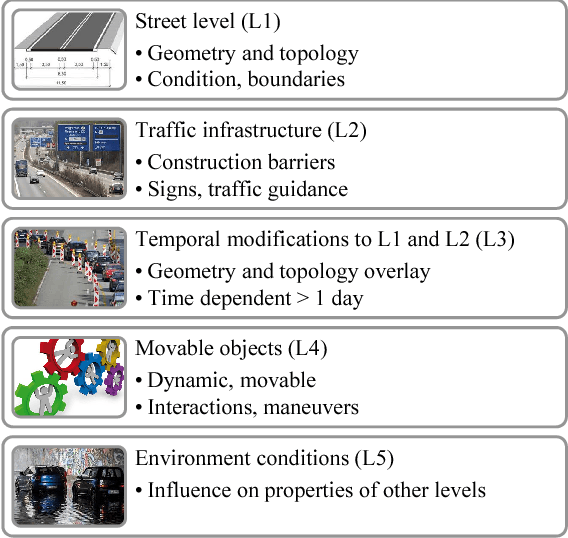

Abstract: With the Corridor for New Mobility Aachen-Düsseldorf, an integrated development environment is created, incorporating existing test capabilities, to systematically test and validate automated vehicles in interaction with connected Intelligent Transport Systems Stations (ITS-Ss). This is achieved through a time- and cost-efficient toolchain and methodology, in which simulation, closed test sites as well as test fields in public transport are linked in the best possible way. By implementing a digital twin, the recorded traffic events can be visualized in real-time and driving functions can be tested in the simulation based on real data. In order to represent diverse traffic scenarios, the corridor contains a highway section, a rural area, and urban areas. First, this paper outlines the project goals before describing the individual project contents in more detail. These include the concepts of traffic detection, driving function development, digital twin development, and public involvement.

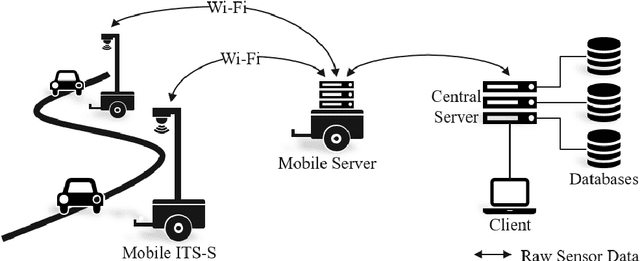

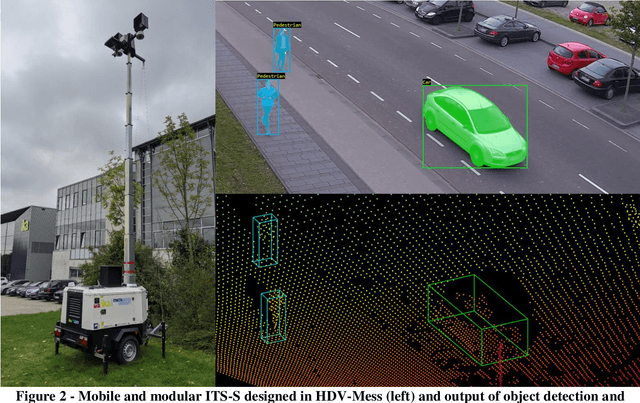

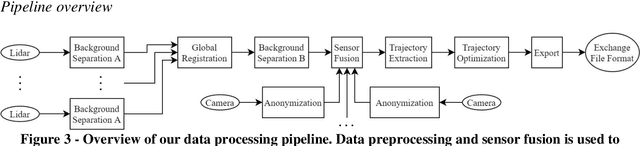

Highly accurate digital traffic recording as a basis for future mobility research: Methods and concepts of the research project HDV-Mess

Jun 08, 2021

Abstract: The research project HDV-Mess aims to provide a currently missing but crucial component for addressing important challenges in the field of connected and automated driving on public roads. The goal is to record traffic events at various relevant locations with high accuracy and to collect real traffic data as a basis for the development and validation of current and future sensor technologies as well as automated driving functions. For this purpose, it is necessary to develop a concept for a mobile modular system of measuring stations for highly accurate traffic data acquisition, which enables a temporary installation of a sensor and communication infrastructure at different locations. Within this paper, we first discuss the project goals before we present our traffic detection concept using mobile modular intelligent transport systems stations (ITS-Ss). We then explain the approaches for data processing of sensor raw data to refined trajectories, data communication, and data validation.

Real-Time Point Cloud Fusion of Multi-LiDAR Infrastructure Sensor Setups with Unknown Spatial Location and Orientation

Jul 28, 2020

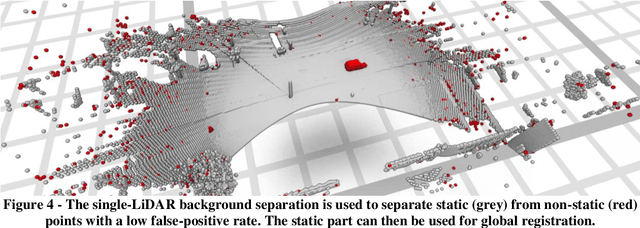

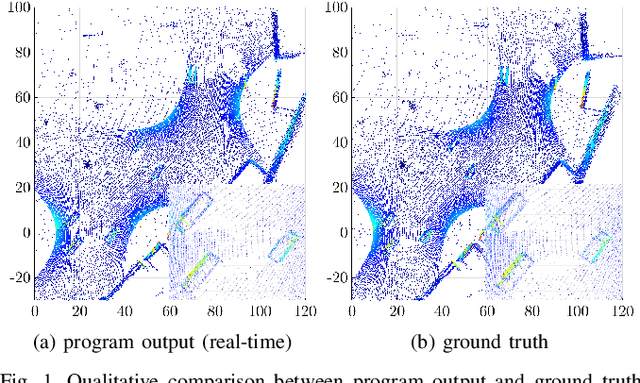

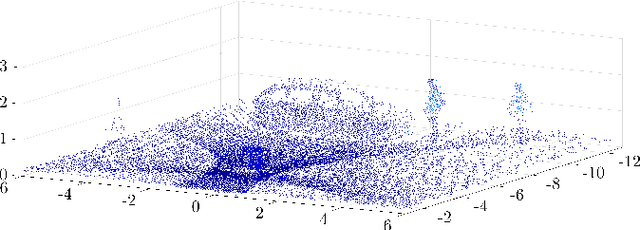

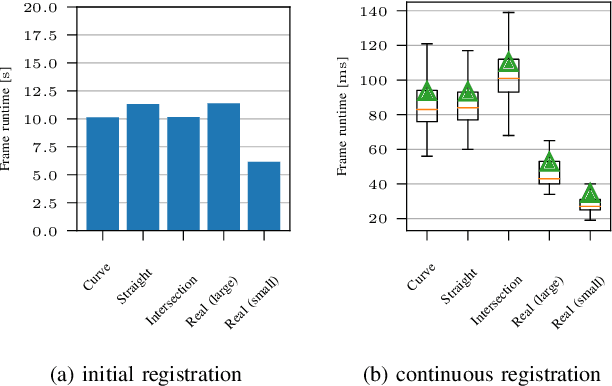

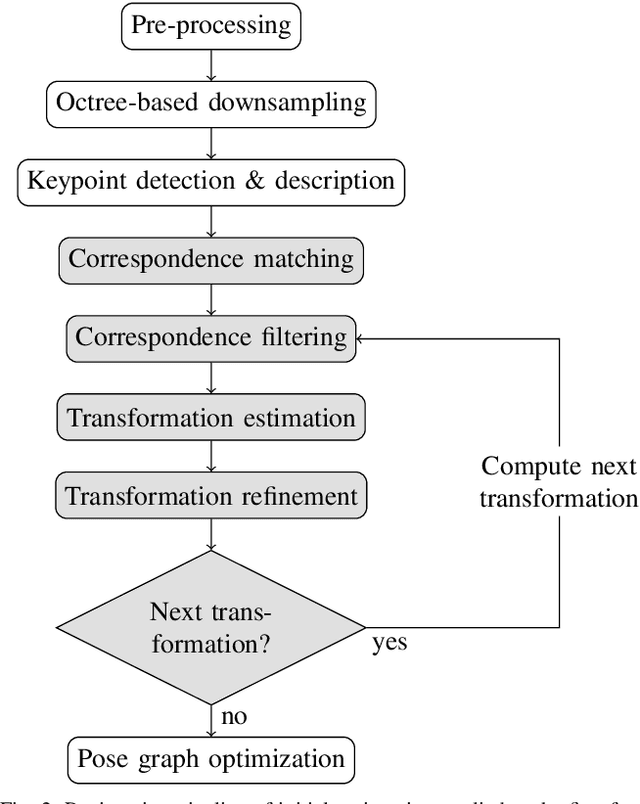

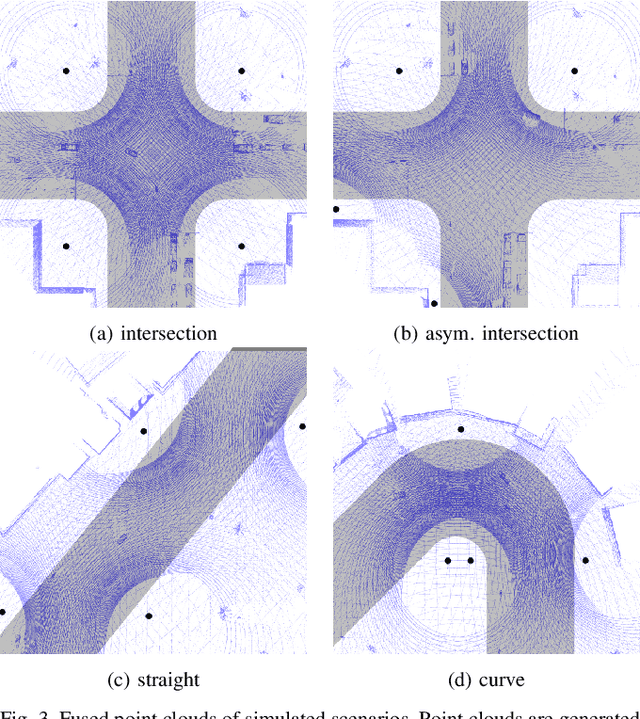

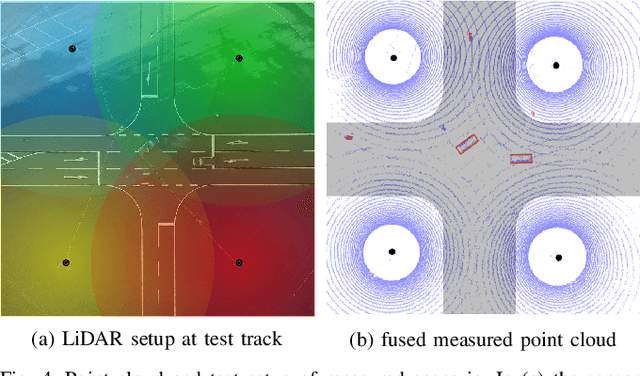

Abstract: The use of infrastructure sensor technology for traffic detection has already been proven several times. However, extrinsic sensor calibration is still a challenge for the operator. While previous approaches are unable to calibrate the sensors without the use of reference objects in the sensor field of view (FOV), we present an algorithm that is completely detached from external assistance and runs fully automatically. Our method focuses on the high-precision fusion of LiDAR point clouds and is evaluated in simulation as well as on real measurements. We set the LiDARs in a continuous pendulum motion in order to simulate real-world operation as closely as possible and to increase the demands on the algorithm. Throughout the entire measurement period, the algorithm receives no information about the initial spatial location and orientation of the LiDARs. Experiments in simulation as well as with real measurements have shown that our algorithm performs a continuous point cloud registration of up to four 64-layer LiDARs in real-time. The averaged resulting translational error is within a few centimeters and the averaged error in rotation is below 0.15 degrees.
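To make the two reported error measures concrete, here is a minimal sketch of how a translational and a rotational registration error could be computed from an estimated and a reference sensor pose. The abstract does not specify the authors' evaluation procedure; the function names and example poses below are illustrative assumptions only.

```python
import math

def rotation_z(deg):
    """Hypothetical helper: 3x3 rotation matrix for `deg` degrees about the z-axis."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotation_error_deg(r_est, r_ref):
    """Angle in degrees of the relative rotation between two rotation matrices."""
    # trace(r_est^T * r_ref) equals the sum of elementwise products.
    trace = sum(r_est[i][j] * r_ref[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against rounding before calling acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))

def translation_error(t_est, t_ref):
    """Euclidean distance between two sensor positions (same unit as the input)."""
    return math.dist(t_est, t_ref)

# Assumed example poses: estimate is off by 0.1 degrees and 5 cm.
rot_err = rotation_error_deg(rotation_z(10.1), rotation_z(10.0))
trans_err = translation_error([1.03, 2.04, 0.50], [1.00, 2.00, 0.50])
```

With these assumed poses, the rotational error stays below the 0.15 degree figure quoted in the abstract and the translational error is 5 cm.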

High-Precision Digital Traffic Recording with Multi-LiDAR Infrastructure Sensor Setups

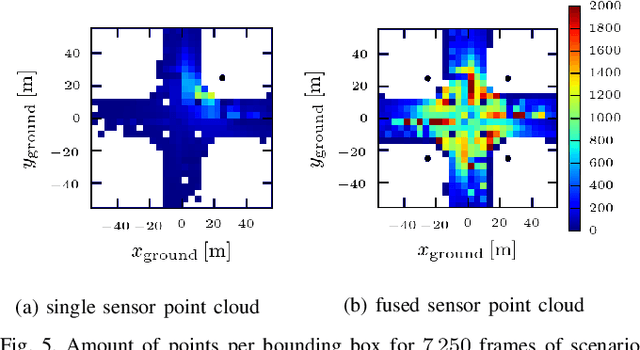

Jun 22, 2020

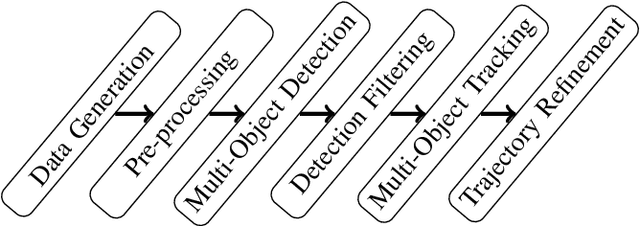

Abstract: Large driving datasets are a key component in the current development and safeguarding of automated driving functions. Various methods can be used to collect such driving data records. In addition to the use of sensor-equipped research vehicles or unmanned aerial vehicles (UAVs), the use of infrastructure sensor technology offers another alternative. To minimize object occlusion during data collection, it is crucial to record the traffic situation from several perspectives in parallel. A fusion of all raw sensor data might create better conditions for multi-object detection and tracking (MODT) compared to the use of individual raw sensor data. So far, too few studies have been conducted to confirm this approach. In our work, we investigate the impact of fused LiDAR point clouds compared to single LiDAR point clouds. We model different urban traffic scenarios with up to eight 64-layer LiDARs in simulation and in reality. We then analyze the properties of the resulting point clouds and perform MODT for all emerging traffic participants. The evaluation of the extracted trajectories shows that a fused infrastructure approach significantly improves the tracking results and reaches accuracies within a few centimeters.
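As a rough illustration of what fusing raw point clouds from several perspectives involves, the sketch below transforms each sensor's points into a shared world frame and concatenates them. It assumes the sensor poses are already known and restricts rotation to yaw for brevity. All names and values are hypothetical and do not come from the paper.

```python
import math

def transform_points(points, yaw_deg, translation):
    """Rotate points about the z-axis by yaw_deg, then translate them."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]

def fuse_point_clouds(clouds_with_poses):
    """Merge per-sensor clouds into one list of points in the world frame."""
    fused = []
    for points, yaw_deg, translation in clouds_with_poses:
        fused.extend(transform_points(points, yaw_deg, translation))
    return fused

# Two assumed sensors facing each other, 10 m apart, observing the same object.
sensor_a = ([(1.0, 0.0, 0.0)], 0.0, (0.0, 0.0, 0.0))
sensor_b = ([(9.0, 0.0, 0.0)], 180.0, (10.0, 0.0, 0.0))
fused = fuse_point_clouds([sensor_a, sensor_b])
```

In this toy setup both measurements land at approximately the same world position, so the fused cloud holds two points on one object where each single cloud holds only one.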

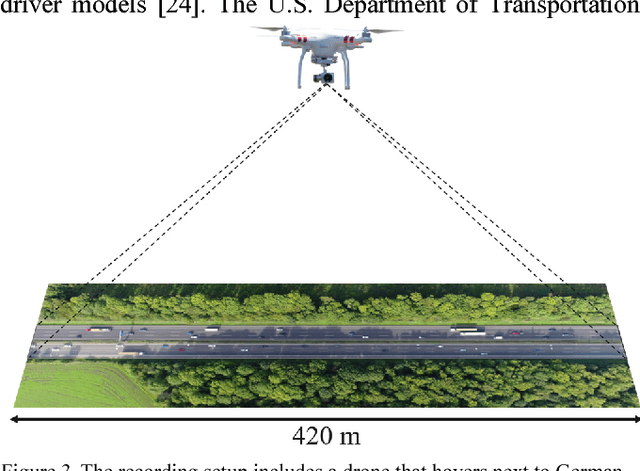

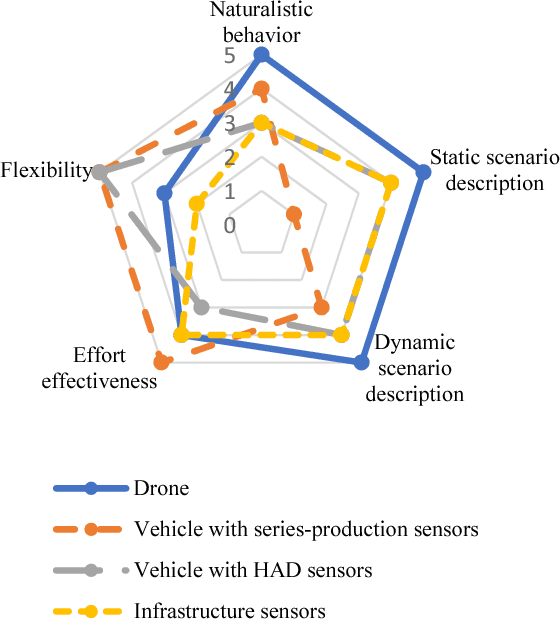

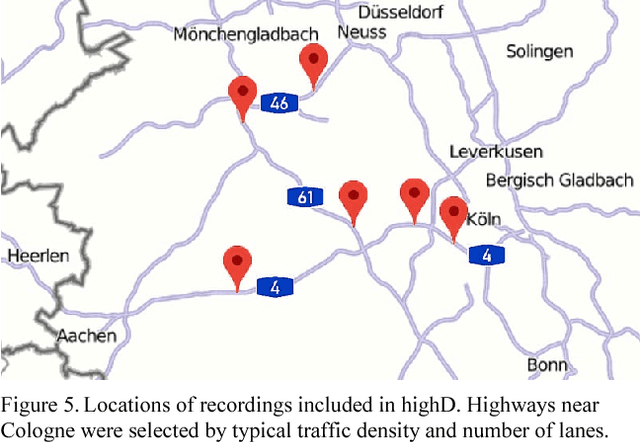

The highD Dataset: A Drone Dataset of Naturalistic Vehicle Trajectories on German Highways for Validation of Highly Automated Driving Systems

Oct 11, 2018

Abstract: Scenario-based testing for the safety validation of highly automated vehicles is a promising approach that is being examined in research and industry. This approach heavily relies on data from real-world scenarios to derive the necessary scenario information for testing. Measurement data should be collected with reasonable effort, contain naturalistic behavior of road users and include all data relevant for a description of the identified scenarios in sufficient quality. However, the current measurement methods fail to meet at least one of the requirements. Thus, we propose a novel method to measure data from an aerial perspective for scenario-based validation fulfilling the mentioned requirements. Furthermore, we provide a large-scale naturalistic vehicle trajectory dataset from German highways called highD. We evaluate the data in terms of quantity, variety and contained scenarios. Our dataset consists of 16.5 hours of measurements from six locations with 110 000 vehicles, a total driven distance of 45 000 km and 5600 recorded complete lane changes. The highD dataset is available online at: http://www.highD-dataset.com
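The headline figures can be turned into a few averages with simple arithmetic. These ratios are derived here for orientation and are not stated in the abstract. The variable names are illustrative, and the per-vehicle distance assumes the total driven distance is summed over all recorded vehicles.

```python
# Figures quoted in the abstract.
hours = 16.5
vehicles = 110_000
distance_km = 45_000
lane_changes = 5_600

# Derived averages (not reported by the authors).
vehicles_per_hour = vehicles / hours
km_per_vehicle = distance_km / vehicles
lane_changes_per_100_vehicles = 100 * lane_changes / vehicles

print(f"{vehicles_per_hour:.0f} vehicles/h, "
      f"{km_per_vehicle:.2f} km per vehicle, "
      f"{lane_changes_per_100_vehicles:.1f} lane changes per 100 vehicles")
```

This works out to roughly 6,700 vehicles per recorded hour, about 0.41 km of observed track per vehicle, and about 5 lane changes per 100 vehicles.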
