Christian Kotulla

HetroD: A High-Fidelity Drone Dataset and Benchmark for Autonomous Driving in Heterogeneous Traffic

Feb 03, 2026

Abstract: We present HetroD, a dataset and benchmark for developing autonomous driving systems in heterogeneous environments. HetroD targets the critical challenge of navigating real-world heterogeneous traffic dominated by vulnerable road users (VRUs), including pedestrians, cyclists, and motorcyclists that interact with vehicles. These mixed agent types exhibit complex behaviors such as hook turns, lane splitting, and informal right-of-way negotiation. Such behaviors pose significant challenges for autonomous vehicles but remain underrepresented in existing datasets focused on structured, lane-disciplined traffic. To bridge the gap, we collect a large-scale drone-based dataset to provide a holistic observation of traffic scenes with centimeter-accurate annotations, HD maps, and traffic signal states. We further develop a modular toolkit for extracting per-agent scenarios to support downstream task development. In total, the dataset comprises over 65.4k high-fidelity agent trajectories, 70% of which are from VRUs. HetroD supports modeling of VRU behaviors in dense, heterogeneous traffic and provides standardized benchmarks for forecasting, planning, and simulation tasks. Evaluation results reveal that state-of-the-art prediction and planning models struggle with the challenges presented by our dataset: they fail to predict lateral VRU movements, cannot handle unstructured maneuvers, and exhibit limited performance in dense and multi-agent scenarios, highlighting the need for more robust approaches to heterogeneous traffic. See our project page for more examples: https://hetroddata.github.io/HetroD/

Real-Time Point Cloud Fusion of Multi-LiDAR Infrastructure Sensor Setups with Unknown Spatial Location and Orientation

Jul 28, 2020

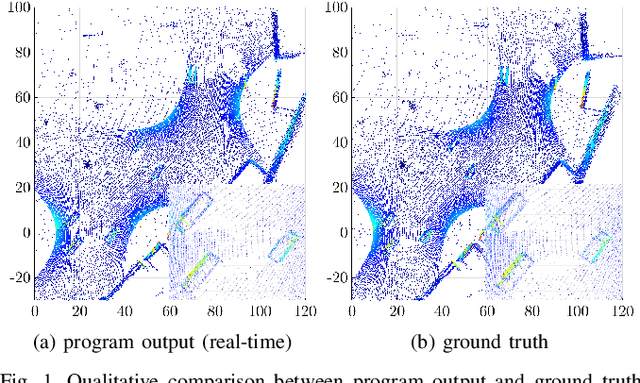

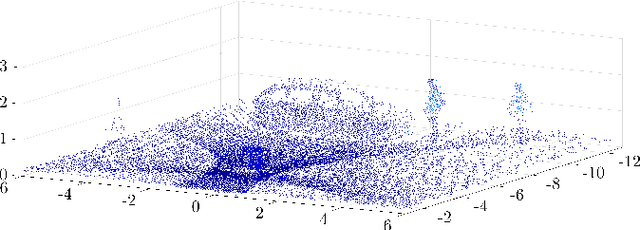

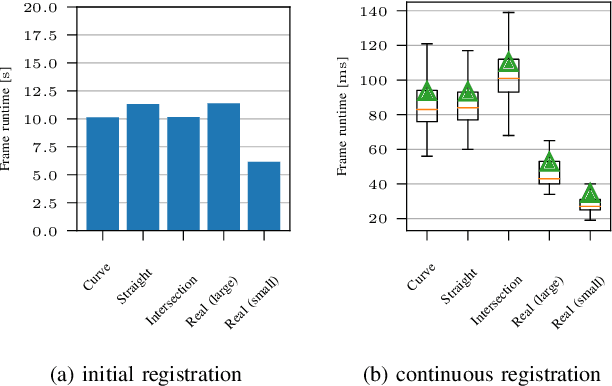

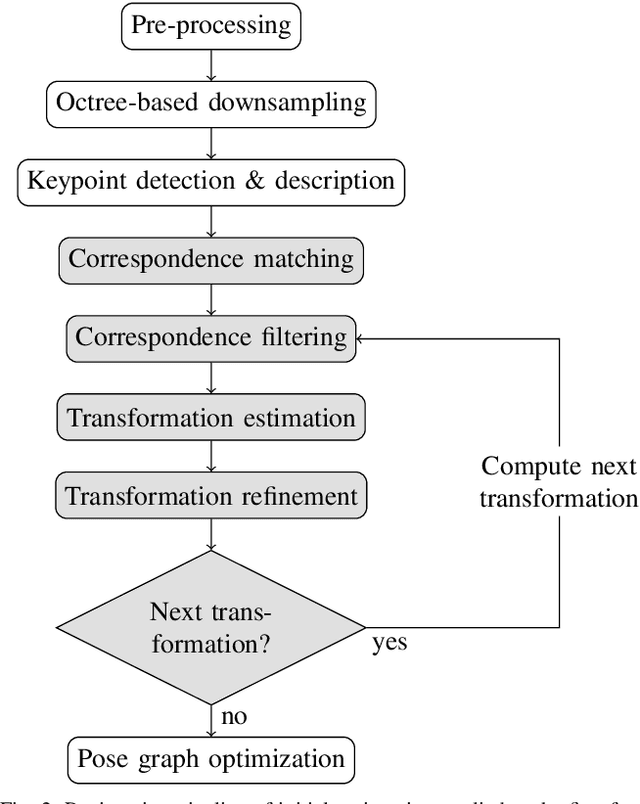

Abstract: The use of infrastructure sensor technology for traffic detection has been demonstrated repeatedly. However, extrinsic sensor calibration remains a challenge for the operator. While previous approaches cannot calibrate the sensors without reference objects in the sensor field of view (FOV), we present an algorithm that requires no external assistance and runs fully automatically. Our method focuses on the high-precision fusion of LiDAR point clouds and is evaluated in simulation as well as on real measurements. We set the LiDARs in a continuous pendulum motion in order to simulate real-world operation as closely as possible and to increase the demands on the algorithm. Throughout the entire measurement period, the algorithm receives no information about the initial spatial location and orientation of the LiDARs. Experiments in simulation as well as with real measurements show that our algorithm performs a continuous point cloud registration of up to four 64-layer LiDARs in real time. The averaged translational error is within a few centimeters and the averaged rotational error is below 0.15 degrees.
