Lam Nguyen

Guaranteeing Conservation of Integrals with Projection in Physics-Informed Neural Networks

Nov 12, 2025

Abstract: We propose a novel projection method that guarantees the conservation of integral quantities in Physics-Informed Neural Networks (PINNs). While the soft constraint that PINNs use to enforce the structure of partial differential equations (PDEs) enables necessary flexibility during training, it also permits the discovered solution to violate physical laws. To address this, we introduce a projection method that guarantees the conservation of the linear and quadratic integrals, both separately and jointly. We derived the projection formulae by solving constrained non-linear optimization problems and found that our PINN modified with the projection, which we call PINN-Proj, reduced the error in the conservation of these quantities by three to four orders of magnitude compared to the soft constraint and marginally reduced the PDE solution error. We also found evidence that the projection improved convergence by improving the conditioning of the loss landscape. Our method holds promise as a general framework to guarantee the conservation of any integral quantity in a PINN if a tractable solution exists.
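To make the idea of a conservation projection concrete, here is a minimal sketch on a discretized 1D field. It is not the paper's PINN-Proj formulae, which the abstract does not state. It assumes a uniform grid, a Riemann-sum approximation of the integrals, and the smallest L2 correction that restores each conserved quantity separately; the function names and the toy "network output" are hypothetical.

```python
import numpy as np

def project_linear(u, dx, target):
    """Smallest L2 correction so that dx * sum(u) equals target (assumed uniform grid)."""
    residual = target - dx * np.sum(u)
    # The constraint gradient is constant, so the correction is a uniform shift.
    return u + residual / (dx * u.size)

def project_quadratic(u, dx, target):
    """Rescale u so that dx * sum(u**2) equals target (requires target > 0, u != 0)."""
    current = dx * np.sum(u ** 2)
    # The nearest point on a sphere is reached by radial scaling.
    return u * np.sqrt(target / current)

# Toy setup: a reference field and a drifting stand-in for a network prediction.
x = np.linspace(0.0, 1.0, 200, endpoint=False)
dx = x[1] - x[0]
u_true = np.sin(2 * np.pi * x) + 1.0
u_pred = u_true + 0.05 * np.cos(6 * np.pi * x) + 0.02

mass = dx * np.sum(u_true)          # linear integral to conserve
energy = dx * np.sum(u_true ** 2)   # quadratic integral to conserve

u_lin = project_linear(u_pred, dx, mass)
u_quad = project_quadratic(u_pred, dx, energy)

print("linear error before/after:", abs(dx * np.sum(u_pred) - mass),
      abs(dx * np.sum(u_lin) - mass))
print("quadratic error before/after:", abs(dx * np.sum(u_pred ** 2) - energy),
      abs(dx * np.sum(u_quad ** 2) - energy))
```

The joint case, where both integrals are conserved at once, is deliberately left out of this sketch because it needs the constrained optimization that the paper describes.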

PromptGuard: An Orchestrated Prompting Framework for Principled Synthetic Text Generation for Vulnerable Populations using LLMs with Enhanced Safety, Fairness, and Controllability

Sep 10, 2025

Abstract: The proliferation of Large Language Models (LLMs) in real-world applications poses unprecedented risks of generating harmful, biased, or misleading information for vulnerable populations, including LGBTQ+ individuals, single parents, and marginalized communities. While existing safety approaches rely on post-hoc filtering or generic alignment techniques, they fail to proactively prevent harmful outputs at the generation source. This paper introduces PromptGuard, a novel modular prompting framework with our breakthrough contribution: VulnGuard Prompt, a hybrid technique that prevents harmful information generation using real-world data-driven contrastive learning. VulnGuard integrates few-shot examples from curated GitHub repositories, ethical chain-of-thought reasoning, and adaptive role-prompting to create population-specific protective barriers. Our framework employs theoretical multi-objective optimization with formal proofs demonstrating 25-30% analytical harm reduction through entropy bounds and Pareto optimality. PromptGuard orchestrates six core modules: Input Classification, VulnGuard Prompting, Ethical Principles Integration, External Tool Interaction, Output Validation, and User-System Interaction, creating an intelligent expert system for real-time harm prevention. We provide a comprehensive mathematical formalization, including convergence proofs, vulnerability analysis using information theory, and a theoretical validation framework using GitHub-sourced datasets, establishing mathematical foundations for systematic empirical research.

More for Keys, Less for Values: Adaptive KV Cache Quantization

Feb 20, 2025

Abstract: This paper introduces an information-aware quantization framework that adaptively compresses the key-value (KV) cache in large language models (LLMs). Although prior work has underscored the distinct roles of key and value cache during inference, our systematic analysis -- examining singular value distributions, spectral norms, and Frobenius norms -- reveals, for the first time, that key matrices consistently exhibit higher norm values and are more sensitive to quantization than value matrices. Furthermore, our theoretical analysis shows that matrices with higher spectral norms amplify quantization errors more significantly. Motivated by these insights, we propose a mixed-precision quantization strategy, KV-AdaQuant, which allocates more bit-width for keys and fewer for values since key matrices have higher norm values. With the same total KV bit budget, this approach effectively mitigates error propagation across transformer layers while achieving significant memory savings. Our extensive experiments on multiple LLMs (1B--70B) demonstrate that our mixed-precision quantization scheme maintains high model accuracy even under aggressive compression. For instance, using 4-bit for Key and 2-bit for Value achieves an accuracy of 75.2%, whereas reversing the assignment (2-bit for Key and 4-bit for Value) yields only 54.7% accuracy. The code is available at https://tinyurl.com/kv-adaquant
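The mixed-precision idea can be tried on toy data with the sketch below. It is not the KV-AdaQuant implementation. It assumes a simple per-tensor min-max uniform quantizer, random Gaussian matrices in which the keys are given a larger scale by construction, and a single attention head; all names are hypothetical. On such synthetic data the two error values need not mirror the accuracies reported in the abstract.

```python
import numpy as np

def quantize_uniform(x, bits):
    """Per-tensor min-max uniform quantization, returned in dequantized form."""
    levels = 2 ** bits - 1
    lo, hi = x.min(), x.max()
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = np.round((x - lo) / scale)  # integer codes in [0, levels]
    return codes * scale + lo

def attention(q, k, v):
    """Single-head scaled dot-product attention with a stable softmax."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
queries = rng.standard_normal((8, 32))
keys = 4.0 * rng.standard_normal((64, 32))   # larger norm, by assumption
values = rng.standard_normal((64, 32))

print("spectral norm of K:", np.linalg.norm(keys, 2))
print("spectral norm of V:", np.linalg.norm(values, 2))

reference = attention(queries, keys, values)
out_k4v2 = attention(queries, quantize_uniform(keys, 4), quantize_uniform(values, 2))
out_k2v4 = attention(queries, quantize_uniform(keys, 2), quantize_uniform(values, 4))

# Both allocations spend 6 bits per key-value pair; only the split differs.
err_k4v2 = np.linalg.norm(out_k4v2 - reference)
err_k2v4 = np.linalg.norm(out_k2v4 - reference)
print("output error, 4-bit K / 2-bit V:", err_k4v2)
print("output error, 2-bit K / 4-bit V:", err_k2v4)
```

Comparing the two allocations at a fixed total bit budget is the same experimental shape the abstract describes; a real evaluation would use cached keys and values from an actual model rather than random matrices.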

Count What You Want: Exemplar Identification and Few-shot Counting of Human Actions in the Wild

Dec 28, 2023

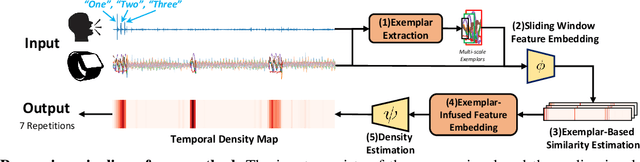

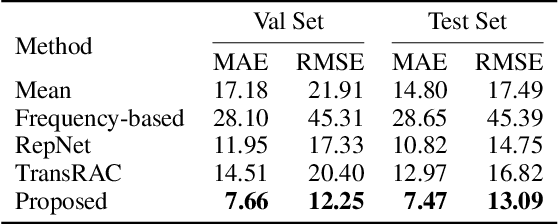

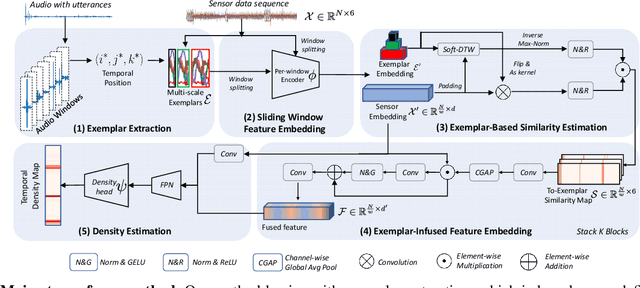

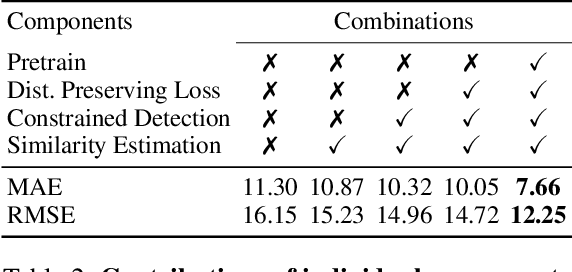

Abstract: This paper addresses the task of counting human actions of interest using sensor data from wearable devices. We propose a novel exemplar-based framework, allowing users to provide exemplars of the actions they want to count by vocalizing predefined sounds "one", "two", and "three". Our method first localizes temporal positions of these utterances from the audio sequence. These positions serve as the basis for identifying exemplars representing the action class of interest. A similarity map is then computed between the exemplars and the entire sensor data sequence, which is further fed into a density estimation module to generate a sequence of estimated density values. Summing these density values provides the final count. To develop and evaluate our approach, we introduce a diverse and realistic dataset consisting of real-world data from 37 subjects and 50 action categories, encompassing both sensor and audio data. The experiments on this dataset demonstrate the viability of the proposed method in counting instances of actions from new classes and subjects that were not part of the training data. On average, the discrepancy between the predicted count and the ground truth value is 7.47, significantly lower than the errors of the frequency-based and transformer-based methods. Our project, code and dataset can be found at https://github.com/cvlab-stonybrook/ExRAC.
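Two steps of the pipeline, the similarity map and the "sum the density to get the count" readout, can be sketched on a synthetic signal as follows. This is an illustration under stated assumptions, not the ExRAC code: the similarity is a plain normalized correlation, and the learned density estimation module is replaced by a hand-built density with one unit-mass Gaussian bump per repetition. All function names and the toy signal are hypothetical.

```python
import numpy as np

def similarity_map(signal, exemplar):
    """Normalized correlation between one exemplar and every window of the signal."""
    m = exemplar.size
    e = (exemplar - exemplar.mean()) / (exemplar.std() + 1e-8)
    sims = np.empty(signal.size - m + 1)
    for i in range(sims.size):
        window = signal[i:i + m]
        w = (window - window.mean()) / (window.std() + 1e-8)
        sims[i] = np.dot(e, w) / m
    return sims

def unit_mass_density(length, centers, sigma=3.0):
    """Stand-in density: each repetition contributes a bump that sums to 1."""
    t = np.arange(length)
    density = np.zeros(length)
    for c in centers:
        bump = np.exp(-0.5 * ((t - c) / sigma) ** 2)
        density += bump / bump.sum()
    return density

# Toy 1D sensor stream: five repetitions of the same motion pattern.
period, reps = 40, 5
one_rep = np.sin(np.linspace(0.0, 2.0 * np.pi, period, endpoint=False))
signal = np.tile(one_rep, reps)
exemplar = signal[:period]  # assumed to have been located via the spoken cue

sims = similarity_map(signal, exemplar)
centers = [period // 2 + k * period for k in range(reps)]
density = unit_mass_density(signal.size, centers)

print("similarity at repetition starts:", sims[::period])
print("estimated count:", density.sum())
```

Because each bump is normalized to unit mass, the sum of the density equals the number of repetitions, which is the property a trained density estimator is meant to approximate.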

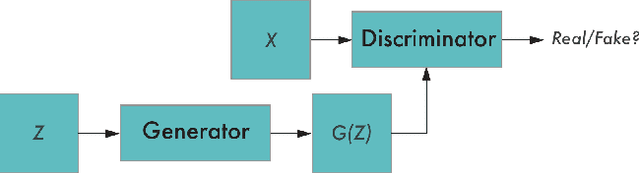

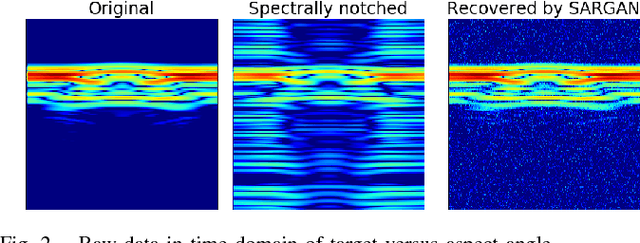

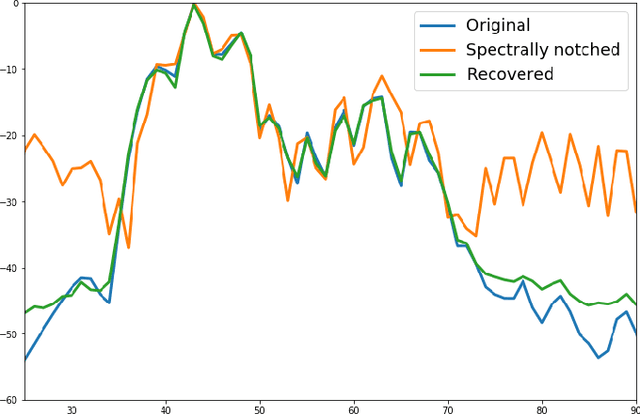

Generative Adversarial Networks for Recovering Missing Spectral Information

Dec 13, 2018

Abstract: Ultra-wideband (UWB) radar systems nowadays typically operate in the low-frequency spectrum to achieve penetration capability. However, this spectrum is also shared by many other communication systems, which causes missing information in the frequency bands. To recover this missing spectral information, we propose a generative adversarial network, called SARGAN, that learns the relationship between original and missing-band signals by observing these training pairs in a clever way. Initial results show that this approach is promising in tackling this challenging missing-band problem.
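The missing-band setting can be pictured with a small sketch that builds one (corrupted, original) training pair by zeroing frequency bins. It does not reproduce SARGAN or real radar data. It assumes a real-valued 1D signal, a hypothetical sampling rate, and a hypothetical occupied band; the helper name `remove_bands` is also an illustration.

```python
import numpy as np

def remove_bands(signal, bands, fs):
    """Zero the given frequency bands (in Hz) and return the corrupted signal and bin mask."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    keep = np.ones(freqs.size, dtype=bool)
    for lo, hi in bands:
        keep &= ~((freqs >= lo) & (freqs <= hi))
    corrupted = np.fft.irfft(spectrum * keep, n=signal.size)
    return corrupted, keep

# Hypothetical example: two tones, one of which falls in an occupied band.
fs = 1000.0
t = np.arange(1000) / fs
low_tone = np.sin(2 * np.pi * 50 * t)
original = low_tone + 0.5 * np.sin(2 * np.pi * 200 * t)

corrupted, keep = remove_bands(original, bands=[(180.0, 220.0)], fs=fs)

# (corrupted, original) is the kind of pair a recovery model would train on.
missing_energy = np.sum((original - corrupted) ** 2)
print("bins removed:", int((~keep).sum()))
print("energy lost to the missing band:", missing_energy)
```

A recovery network would take `corrupted` as input and be trained to reproduce `original`; the adversarial training itself is outside the scope of this sketch.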

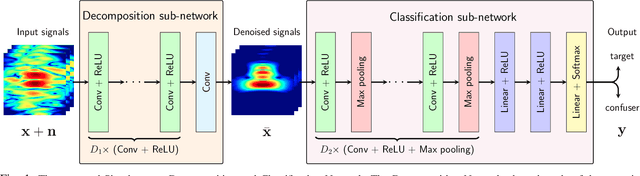

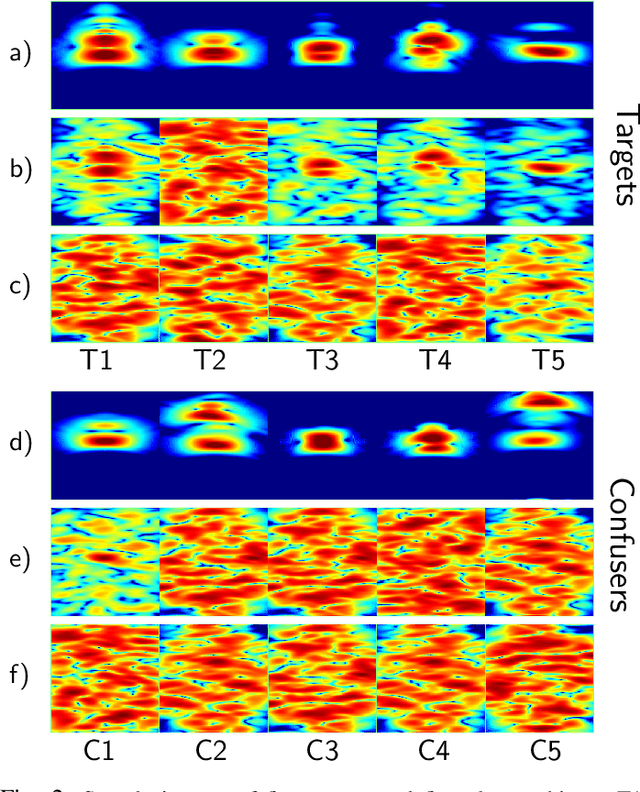

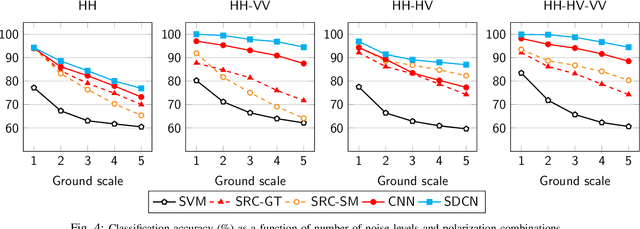

Deep Network for Simultaneous Decomposition and Classification in UWB-SAR Imagery

Feb 22, 2018

Abstract: Classifying buried and obscured targets of interest from other natural and manmade clutter objects in the scene is an important problem for the U.S. Army. Targets of interest are often represented by signals captured using low-frequency (UHF to L-band) ultra-wideband (UWB) synthetic aperture radar (SAR) technology. This technology has been used in various applications, including ground penetration and sensing-through-the-wall. However, the technology still faces significant issues: low-resolution SAR imagery in this particular frequency band, low radar cross sections (RCS), objects that are small compared to radar signal wavelengths, and heavy interference. The classification problem was first, and only partially, addressed by the sparse representation-based classification (SRC) method, which can extract noise from signals and exploit cross-channel information. Despite providing promising results, SRC-related methods have drawbacks in representing nonlinear relations and dealing with larger training sets. In this paper, we propose a Simultaneous Decomposition and Classification Network (SDCN) to alleviate noise interference and enhance classification accuracy. The network contains two jointly trained sub-networks: the decomposition sub-network handles denoising, while the classification sub-network discriminates targets from confusers. Experimental results show significant improvements over a network without decomposition and SRC-related methods.
