Kyle Johnson

Solar-powered shape-changing origami microfliers

Sep 13, 2023

Abstract:Using wind to disperse microfliers that fall like seeds and leaves can help automate large-scale sensor deployments. Here, we present battery-free microfliers that can change shape in mid-air to vary their dispersal distance. We design origami microfliers using bi-stable leaf-out structures and uncover an important property: a simple change in the shape of these origami structures causes two dramatically different falling behaviors. When unfolded and flat, the microfliers exhibit a tumbling behavior that increases lateral displacement in the wind. When folded inward, their orientation is stabilized, resulting in a downward descent that is less influenced by wind. To electronically transition between these two shapes, we designed a low-power electromagnetic actuator that produces peak forces of up to 200 millinewtons within 25 milliseconds while powered by solar cells. We fabricated a circuit directly on the folded origami structure that includes a programmable microcontroller, Bluetooth radio, solar power harvesting circuit, a pressure sensor to estimate altitude and a temperature sensor. Outdoor evaluations show that our 414 milligram origami microfliers are able to electronically change their shape mid-air, travel up to 98 meters in a light breeze, and wirelessly transmit data via Bluetooth up to 60 meters away, using only power collected from the sun.
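The abstract mentions a pressure sensor used to estimate altitude but does not say how the conversion is done. As a rough illustration only, the sketch below uses the standard international barometric formula; the function names, the sea-level reference of 1013.25 hPa, and the idea of differencing two readings are assumptions for this example, not details taken from the paper.

```python
def pressure_to_altitude_m(pressure_hpa, sea_level_hpa=1013.25):
    """Approximate altitude (meters) from pressure using the
    international barometric formula (standard atmosphere assumed)."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))


def height_above_ground_m(pressure_hpa, ground_pressure_hpa):
    """Hypothetical helper: height relative to a reading taken at ground level.
    Differencing two readings cancels most of the sea-level reference error."""
    return pressure_to_altitude_m(pressure_hpa) - pressure_to_altitude_m(ground_pressure_hpa)


if __name__ == "__main__":
    # Illustrative values only: a reading 5 hPa below the ground reading.
    print(round(height_above_ground_m(1008.25, 1013.25), 1), "m above ground")
```

A relative measurement like this is usually more useful than absolute altitude for a falling device, since weather-driven changes in the sea-level reference affect both readings equally.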

Modular machine learning-based elastoplasticity: generalization in the context of limited data

Oct 15, 2022

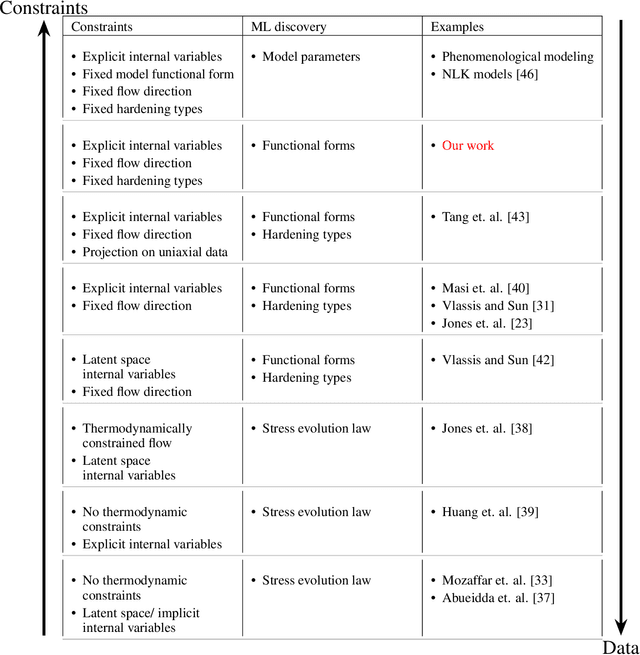

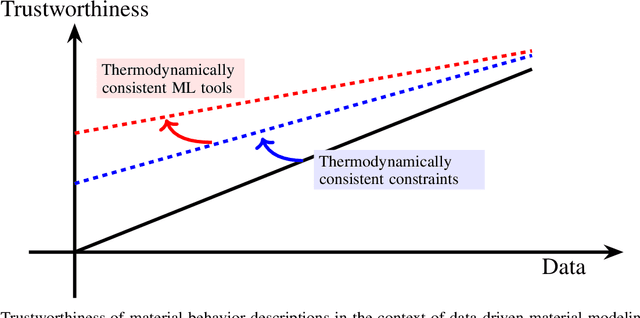

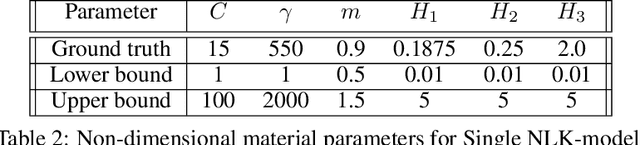

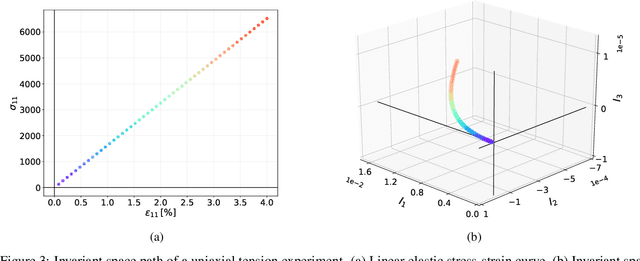

Abstract:The development of accurate constitutive models for materials that undergo path-dependent processes continues to be a complex challenge in computational solid mechanics. Challenges arise both in considering the appropriate model assumptions and from the viewpoint of data availability, verification, and validation. Recently, data-driven modeling approaches have been proposed that aim to establish stress-evolution laws that avoid user-chosen functional forms by relying on machine learning representations and algorithms. However, these approaches not only require a significant amount of data but also need data that probes the full stress space with a variety of complex loading paths. Furthermore, they rarely enforce all necessary thermodynamic principles as hard constraints. Hence, they are particularly unsuitable for low-data or limited-data regimes, where the former arises from the cost of obtaining the data and the latter from the experimental limitations of obtaining labeled data, which is commonly the case in engineering applications. In this work, we discuss a hybrid framework that can work on a variable amount of data by relying on the modularity of the elastoplasticity formulation, where each component of the model can be chosen to be either a classical phenomenological or a data-driven model depending on the amount of available information and the complexity of the response. The method is tested on synthetic uniaxial data coming from simulations as well as cyclic experimental data for structural materials. The discovered material models are found to not only interpolate well but also allow for accurate extrapolation in a thermodynamically consistent manner far outside the domain of the training data. Training aspects and details of the implementation of these models into Finite Element simulations are discussed and analyzed.

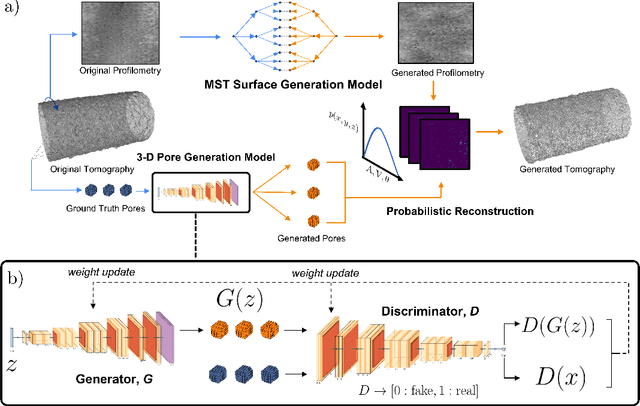

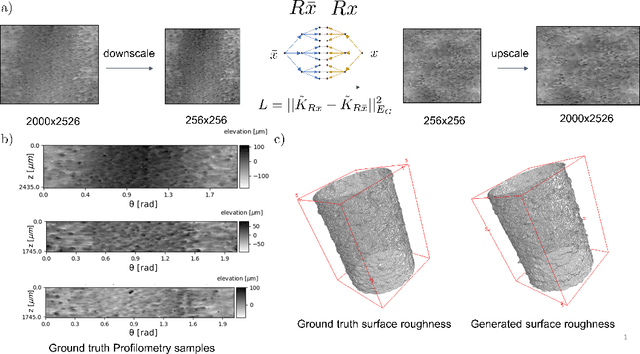

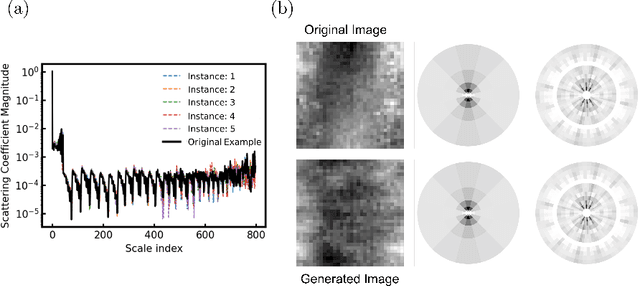

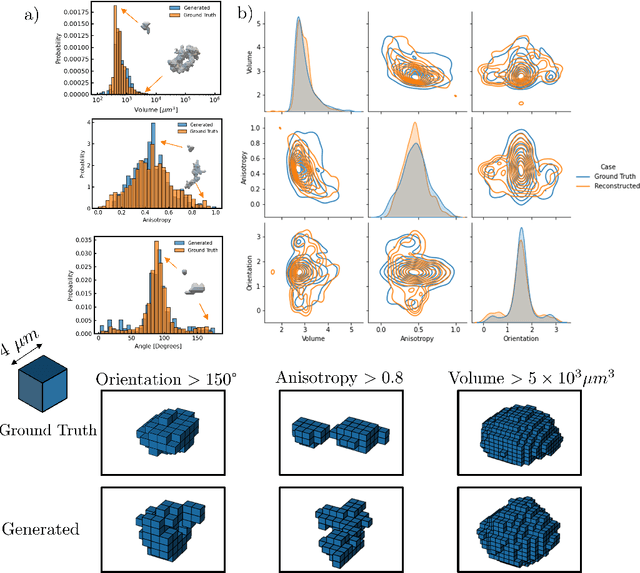

Deep-Learned Generators of Porosity Distributions Produced During Metal Additive Manufacturing

May 11, 2022

Abstract:Laser Powder Bed Fusion has become a widely adopted method for metal Additive Manufacturing (AM) due to its ability to mass produce complex parts with increased local control. However, AM-produced parts can be subject to undesirable porosity, negatively influencing the properties of printed components. Thus, controlling porosity is integral for creating effective parts. A precise understanding of the porosity distribution is crucial for accurately simulating potential fatigue and failure zones. Previous research on generating synthetic porous microstructures has succeeded in generating parts with high-density, isotropic porosity distributions but is often inapplicable to cases with sparser, boundary-dependent pore distributions. Our work bridges this gap by providing a method that considers these constraints by deconstructing the generation problem into its constituent parts. A framework is introduced that combines Generative Adversarial Networks with Mallat Scattering Transform-based autocorrelation methods to construct novel realizations of the individual pore geometries and surface roughness, then stochastically reconstruct them to form realizations of a porous printed part. The generated parts are compared to the existing experimental porosity distributions based on statistical and dimensional metrics, such as nearest neighbor distances, pore volumes, pore anisotropies and scattering transform-based autocorrelations.

Leaf-like Origami with Bistability for Self-Adaptive Grasping Motions

Nov 03, 2020

Abstract:The leaf-like origami structure was inspired by geometric patterns found in nature, exhibiting unique transitions between open and closed shapes. With a bistable energy landscape, leaf-like origami is able to replicate the autonomous grasping of objects observed in biological systems like the Venus flytrap. We show uniform grasping motions of the leaf-like origami, as well as various non-uniform grasping motions which arise from its multi-transformable nature. Grasping motions can be triggered with high tunability due to the structure's bistable energy landscape. We demonstrate the self-adaptive grasping motion by dropping a target object onto our paper prototype, which does not require an external power source to retain the capture of the object. We also explore the non-uniform grasping motions of the leaf-like structure by selectively controlling the creases, which reveals various unique grasping configurations that can be exploited for versatile, autonomous, and self-adaptive robotic operations.

Neural Network Based Reconstruction of a 3D Object from a 2D Wireframe

Jul 14, 2010

Abstract:We propose a new approach for constructing a 3D representation from a 2D wireframe drawing. A drawing is simply a parallel projection of a 3D object onto a 2D surface; humans are able to recreate mental 3D models from 2D representations very easily, yet the process is very difficult to emulate computationally. We hypothesize that our ability to perform this construction relies on the angles in the 2D scene, among other geometric properties. Being able to reproduce this reconstruction process automatically would allow for efficient and robust 3D sketch interfaces. Our research focuses on the relationship between 2D geometry observable in the sketch and 3D geometry derived from a potential 3D construction. We present a fully automated system that constructs 3D representations from 2D wireframes using a neural network in conjunction with a genetic search algorithm.
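To make the "parallel projection" in the abstract concrete, here is a minimal sketch of projecting 3D points onto a 2D drawing plane. It is not the authors' system (which pairs a neural network with a genetic search); the function name `parallel_project` and the choice of view direction are assumptions for illustration. It also shows why the inverse problem is hard: points that differ only in depth land on the same 2D location.

```python
import math


def parallel_project(points, view_dir=(0.0, 0.0, 1.0)):
    """Orthographic (parallel) projection of 3D points onto the plane
    perpendicular to view_dir. Returns a list of (x, y) tuples."""
    n = math.sqrt(sum(c * c for c in view_dir))
    d = tuple(c / n for c in view_dir)

    # Pick a helper axis that is not parallel to the view direction.
    helper = (1.0, 0.0, 0.0) if abs(d[0]) < 0.9 else (0.0, 1.0, 0.0)

    # u: helper with its component along d removed, then normalized.
    dot_hd = sum(h * c for h, c in zip(helper, d))
    u = tuple(h - dot_hd * c for h, c in zip(helper, d))
    un = math.sqrt(sum(c * c for c in u))
    u = tuple(c / un for c in u)

    # v = d x u completes the in-plane basis.
    v = (d[1] * u[2] - d[2] * u[1],
         d[2] * u[0] - d[0] * u[2],
         d[0] * u[1] - d[1] * u[0])

    # Each 2D coordinate is the dot product with an in-plane basis vector.
    return [(sum(p_i * u_i for p_i, u_i in zip(p, u)),
             sum(p_i * v_i for p_i, v_i in zip(p, v))) for p in points]


if __name__ == "__main__":
    # Two points that differ only in depth project to the same 2D point.
    print(parallel_project([(1.0, 2.0, 3.0), (1.0, 2.0, 7.0)]))
```

Because depth along the view direction is discarded, any reconstruction method has to recover it from other cues, such as the 2D angles the abstract hypothesizes about.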
