Kwok Yan Lam

Bona fide Cross Testing Reveals Weak Spot in Audio Deepfake Detection Systems

Sep 11, 2025

Abstract: Audio deepfake detection (ADD) models are commonly evaluated using datasets that combine multiple synthesizers, with performance reported as a single Equal Error Rate (EER). However, this approach disproportionately weights synthesizers with more samples, underrepresenting others and reducing the overall reliability of EER. Additionally, most ADD datasets lack diversity in bona fide speech, often featuring a single environment and speech style (e.g., clean read speech), limiting their ability to simulate real-world conditions. To address these challenges, we propose bona fide cross-testing, a novel evaluation framework that incorporates diverse bona fide datasets and aggregates EERs for more balanced assessments. Our approach improves robustness and interpretability compared to traditional evaluation methods. We benchmark over 150 synthesizers across nine bona fide speech types and release a new dataset to facilitate further research at https://github.com/cyaaronk/audio_deepfake_eval.
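The contrast between a single pooled EER and an aggregate over per-pair EERs can be sketched as follows. This is a minimal illustration, not the paper's released evaluation code: the function names, the convention that higher scores mean "more likely bona fide", and the use of an unweighted mean as the aggregate are all assumptions.

```python
import numpy as np

def equal_error_rate(bona_fide_scores, spoof_scores):
    """Approximate EER; assumes higher score = more likely bona fide."""
    bona = np.asarray(bona_fide_scores, dtype=float)
    spoof = np.asarray(spoof_scores, dtype=float)
    thresholds = np.unique(np.concatenate([bona, spoof]))
    # False rejection rate: bona fide samples scored below the threshold.
    frr = np.array([(bona < t).mean() for t in thresholds])
    # False acceptance rate: spoofed samples scored at or above the threshold.
    far = np.array([(spoof >= t).mean() for t in thresholds])
    # Take the threshold where the two error rates are closest.
    i = int(np.argmin(np.abs(far - frr)))
    return float((far[i] + frr[i]) / 2)

def pooled_eer(bona_by_type, spoof_by_synth):
    """Single EER over all samples; large synthesizers dominate."""
    bona = np.concatenate([np.asarray(v, dtype=float) for v in bona_by_type.values()])
    spoof = np.concatenate([np.asarray(v, dtype=float) for v in spoof_by_synth.values()])
    return equal_error_rate(bona, spoof)

def cross_tested_eer(bona_by_type, spoof_by_synth):
    """Unweighted mean of EERs over every (bona fide type, synthesizer) pair.

    The unweighted mean is an assumed aggregation rule for illustration.
    """
    eers = [
        equal_error_rate(bona, spoof)
        for bona in bona_by_type.values()
        for spoof in spoof_by_synth.values()
    ]
    return float(np.mean(eers))

# Hypothetical scores: two bona fide speech types, two synthesizers.
bona_by_type = {"read": [0.9, 0.8], "noisy": [0.6, 0.7]}
spoof_by_synth = {"synth_a": [0.1, 0.2], "synth_b": [0.65, 0.3]}
print(pooled_eer(bona_by_type, spoof_by_synth))
print(cross_tested_eer(bona_by_type, spoof_by_synth))
```

Because each pair contributes one EER to the mean, a synthesizer with few samples counts as much as one with many, which is the balancing effect the abstract describes.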

A Macro- and Micro-Hierarchical Transfer Learning Framework for Cross-Domain Fake News Detection

Feb 20, 2025

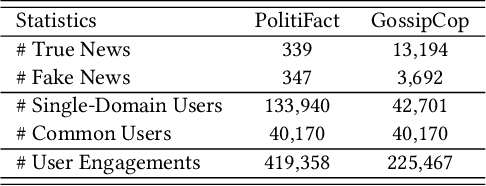

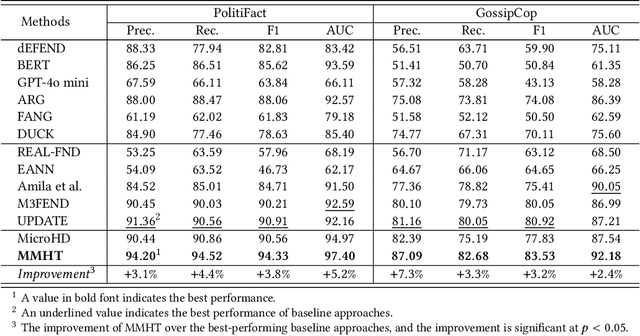

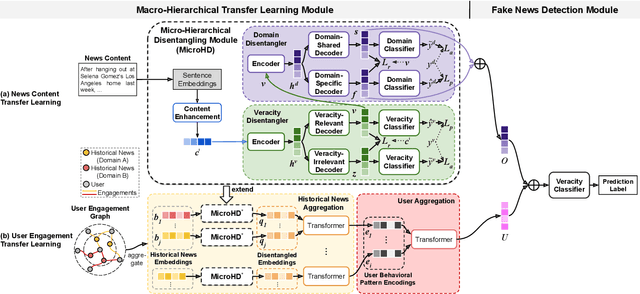

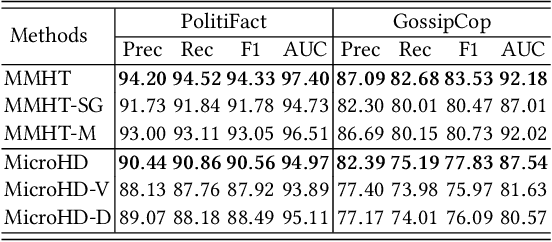

Abstract: Cross-domain fake news detection aims to mitigate domain shift and improve detection performance by transferring knowledge across domains. Existing approaches transfer knowledge based on news content and user engagements from a source domain to a target domain. However, these approaches face two main limitations that hinder effective knowledge transfer and optimal fake news detection performance. Firstly, from a micro perspective, they neglect the negative impact of veracity-irrelevant features in news content when transferring domain-shared features across domains. Secondly, from a macro perspective, existing approaches ignore the relationship between user engagement and news content, which reveals shared behaviors of common users across domains and can facilitate more effective knowledge transfer. To address these limitations, we propose a novel macro- and micro-hierarchical transfer learning framework (MMHT) for cross-domain fake news detection. Firstly, we propose a micro-hierarchical disentangling module to disentangle veracity-relevant and veracity-irrelevant features from news content in the source domain, improving fake news detection performance in the target domain. Secondly, we propose a macro-hierarchical transfer learning module to generate engagement features based on common users' shared behaviors in different domains, improving the effectiveness of knowledge transfer. Extensive experiments on real-world datasets demonstrate that our framework significantly outperforms the state-of-the-art baselines.

MPC-enabled Privacy-Preserving Neural Network Training against Malicious Attack

Aug 12, 2020

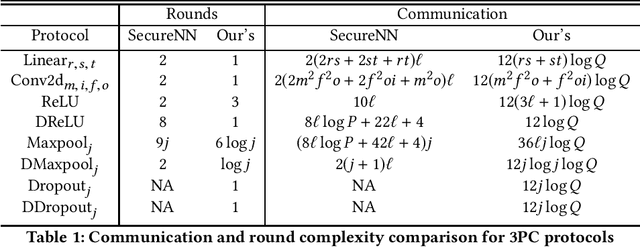

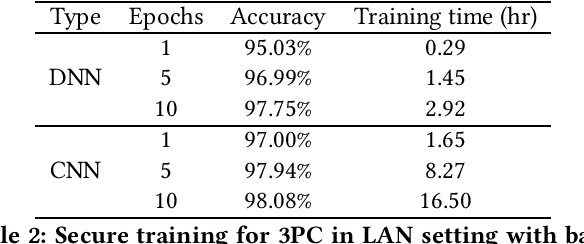

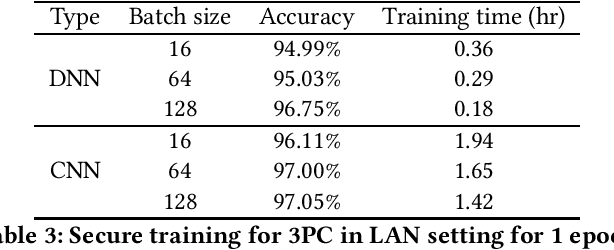

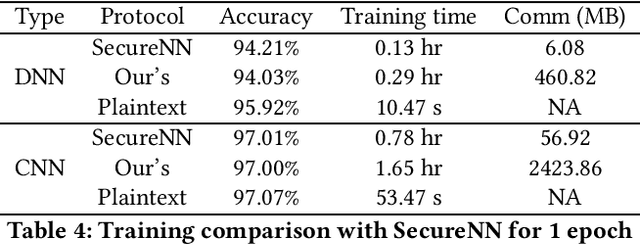

Abstract: In the past decades, the application of secure multiparty computation (MPC) to machine learning, especially privacy-preserving neural network training, has attracted tremendous attention from both academia and industry. MPC enables several data owners to jointly train a neural network while preserving their data privacy. However, most previous works focus on the semi-honest threat model, which cannot withstand fraudulent messages sent by malicious participants. In this work, we propose a construction of efficient $n$-party protocols for secure neural network training that can secure the privacy of all honest participants even when a majority of the parties are malicious. Compared to other designs that provide semi-honest security in a dishonest majority setting, our actively secure neural network training incurs affordable efficiency overheads. In addition, we propose a scheme that allows additive shares defined over an integer ring $\mathbb{Z}_N$ to be securely converted to additive shares over a finite field $\mathbb{Z}_Q$. This conversion scheme is essential for correctly converting shared Beaver triples so that the values generated in the preprocessing phase are usable in the online phase, and it may be of independent interest.
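For readers unfamiliar with the building blocks named above, the following sketch shows additive secret sharing over a ring $\mathbb{Z}_N$ and multiplication with a Beaver triple. It is a semi-honest, single-process illustration only: the trusted dealer, the modulus `N = 2**32`, and all function names are assumptions, and it includes neither the paper's protections against malicious parties nor its $\mathbb{Z}_N$ to $\mathbb{Z}_Q$ share conversion.

```python
import secrets

N = 2**32  # illustrative ring modulus (an assumption, not from the paper)

def share(x, n_parties):
    """Split x into n additive shares that sum to x modulo N."""
    shares = [secrets.randbelow(N) for _ in range(n_parties - 1)]
    shares.append((x - sum(shares)) % N)
    return shares

def reconstruct(shares):
    """Recover the secret by summing all shares modulo N."""
    return sum(shares) % N

def beaver_triple(n_parties):
    """Preprocessing by a hypothetical trusted dealer: shares of a, b, a*b."""
    a, b = secrets.randbelow(N), secrets.randbelow(N)
    return share(a, n_parties), share(b, n_parties), share(a * b % N, n_parties)

def multiply(x_shares, y_shares, triple):
    """Online phase: compute shares of x*y using one Beaver triple."""
    a_shares, b_shares, c_shares = triple
    # Parties open the masked values d = x - a and e = y - b.
    d = reconstruct([(x - a) % N for x, a in zip(x_shares, a_shares)])
    e = reconstruct([(y - b) % N for y, b in zip(y_shares, b_shares)])
    # Each party computes c_i + d*b_i + e*a_i locally.
    z_shares = [(c + d * b + e * a) % N
                for a, b, c in zip(a_shares, b_shares, c_shares)]
    # The public term d*e is added by exactly one party.
    z_shares[0] = (z_shares[0] + d * e) % N
    return z_shares

x_shares, y_shares = share(1234, 3), share(5678, 3)
product_shares = multiply(x_shares, y_shares, beaver_triple(3))
print(reconstruct(product_shares) == (1234 * 5678) % N)
```

The identity behind the online phase is $xy = (a + d)(b + e) = ab + db + ea + de$, so the triple must be shared in the same algebraic structure as the online values, which is why a conversion between share domains matters when preprocessing and online phases use different ones.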

Local Differential Privacy based Federated Learning for Internet of Things

Apr 19, 2020

Abstract: Internet of Vehicles (IoV) is a promising branch of the Internet of Things. IoV simulates a large variety of crowdsourcing applications such as Waze, Uber, and Amazon Mechanical Turk. Users of these applications report real-time traffic information to the cloud server, which trains a machine learning model on the reported information for intelligent traffic management. However, crowdsourcing application owners can easily infer users' location information, which raises severe location privacy concerns for the users. In addition, as the number of vehicles increases, the frequent communication between vehicles and the cloud server incurs an unexpected amount of communication cost. To avoid the privacy threat and reduce the communication cost, in this paper, we propose to integrate federated learning and local differential privacy (LDP) to help crowdsourcing applications obtain the machine learning model. Specifically, we propose four LDP mechanisms to perturb gradients generated by vehicles. We propose the Three-Outputs mechanism, which introduces three different output possibilities to deliver high accuracy when the privacy budget is small. The output possibilities of Three-Outputs can be encoded with two bits to reduce the communication cost. Besides, to maximize the performance when the privacy budget is large, an optimal piecewise mechanism (PM-OPT) is proposed. We further propose a suboptimal mechanism (PM-SUB) with a simple formula and comparable utility to PM-OPT. Then, we build a novel hybrid mechanism by combining Three-Outputs and PM-SUB.
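As background for how an LDP mechanism can perturb a single gradient coordinate, here is a sketch of the simpler two-output mechanism commonly attributed to Duchi et al. It is not the Three-Outputs, PM-OPT, or PM-SUB mechanism from this paper, whose output values and probabilities are not given in the abstract. The function names and the assumption that each coordinate is already clipped to $[-1, 1]$ are illustrative.

```python
import math
import random

def output_magnitude(epsilon):
    """Magnitude C of the two possible outputs, +C and -C."""
    e = math.exp(epsilon)
    return (e + 1) / (e - 1)

def prob_plus(t, epsilon):
    """Probability of reporting +C for an input t in [-1, 1]."""
    e = math.exp(epsilon)
    return 0.5 + t * (e - 1) / (2 * (e + 1))

def perturb(t, epsilon, rng=random):
    """Report +C or -C so that the expected report equals t."""
    if not -1.0 <= t <= 1.0:
        raise ValueError("input must be clipped to [-1, 1]")
    c = output_magnitude(epsilon)
    return c if rng.random() < prob_plus(t, epsilon) else -c

# Averaging many perturbed reports approximates the true value.
rng = random.Random(0)
reports = [perturb(0.3, 1.0, rng) for _ in range(100_000)]
print(sum(reports) / len(reports))
```

With two outputs, each report fits in one bit. A mechanism with three possible outputs, as described in the abstract, needs two bits per report.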
