Kumar Akash

Toward Informed AV Decision-Making: Computational Model of Well-being and Trust in Mobility

May 21, 2025

Abstract: For future human-autonomous vehicle (AV) interactions to be effective and smooth, human-aware systems that analyze and align human needs with automation decisions are essential. Achieving this requires systems that account for human cognitive states. We present a novel computational model in the form of a Dynamic Bayesian Network (DBN) that infers the cognitive states of both AV users and other road users, integrating this information into the AV's decision-making process. Specifically, our model captures the well-being of both an AV user and an interacting road user as cognitive states alongside trust. Our DBN models infer beliefs over the AV user's evolving well-being, trust, and intention states, as well as the possible well-being of other road users, based on observed interaction experiences. Using data collected from an interaction study, we refine the model parameters and empirically assess its performance. Finally, we extend our model into a causal inference model (CIM) framework for AV decision-making, enabling the AV to enhance user well-being and trust while balancing these factors with its own operational costs and the well-being of interacting road users. Our evaluation demonstrates the model's effectiveness in accurately predicting the user's states and guiding informed, human-centered AV decisions.
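
As a rough illustration of what "inferring beliefs over an evolving state from observed interactions" can look like, the sketch below runs one predict-then-update step of a discrete Bayesian filter over a two-valued trust state. It is not the DBN from the paper: the state space, the function name `forward_step`, and every probability are assumptions chosen only to make the mechanics concrete.

```python
from typing import List, Sequence


def forward_step(
    belief: Sequence[float],
    transition: Sequence[Sequence[float]],
    likelihood: Sequence[float],
) -> List[float]:
    """One predict-then-update step of a discrete Bayesian filter.

    belief[i]        : P(state = i) before the new observation
    transition[i][j] : P(next state = j | current state = i)
    likelihood[j]    : P(observation | next state = j)
    """
    n = len(belief)
    # Predict: push the current belief through the transition model.
    predicted = [sum(belief[i] * transition[i][j] for i in range(n)) for j in range(n)]
    # Update: weight each predicted state by how well it explains the observation.
    unnormalized = [predicted[j] * likelihood[j] for j in range(n)]
    total = sum(unnormalized)
    if total == 0:
        raise ValueError("observation has zero probability under every state")
    return [u / total for u in unnormalized]


# Hypothetical numbers for illustration only (states: 0 = low trust, 1 = high trust).
prior = [0.5, 0.5]
transition = [[0.8, 0.2], [0.1, 0.9]]
likelihood_user_relies_on_av = [0.3, 0.9]

posterior = forward_step(prior, transition, likelihood_user_relies_on_av)
```

Under these assumed numbers, observing reliance on the AV shifts the belief toward the high-trust state. A full DBN would track several coupled states (well-being, trust, intention) with parameters learned from data.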

Can we enhance prosocial behavior? Using post-ride feedback to improve micromobility interactions

Sep 05, 2024

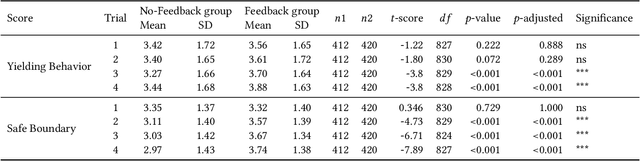

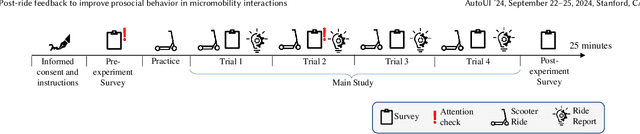

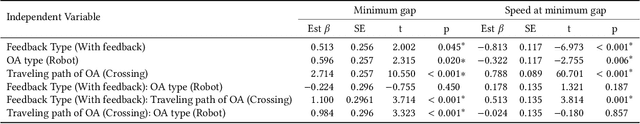

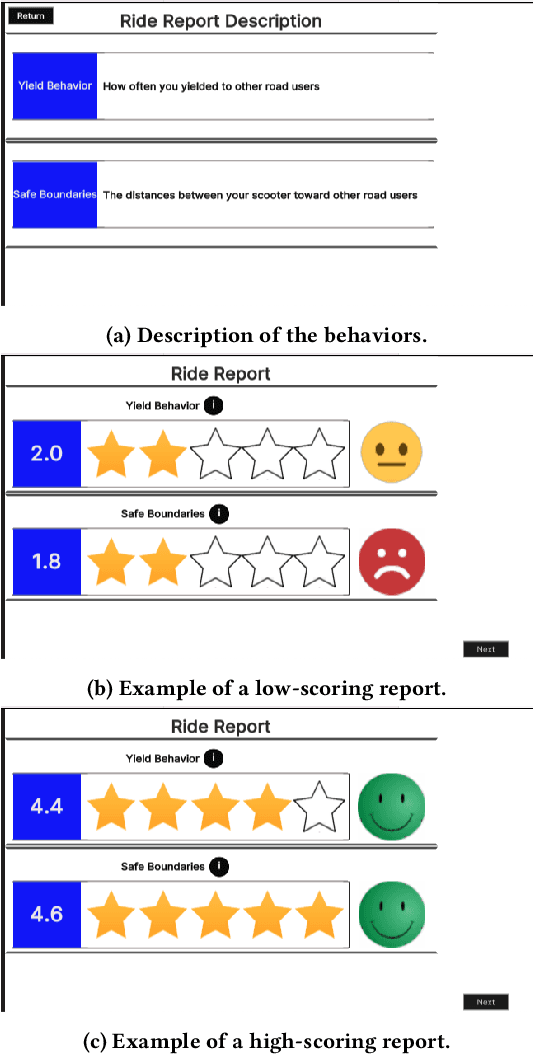

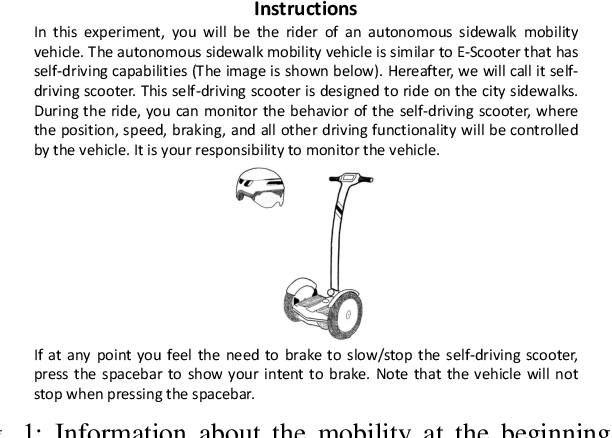

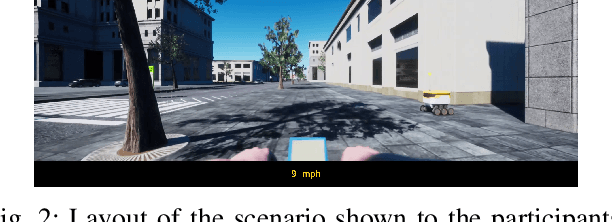

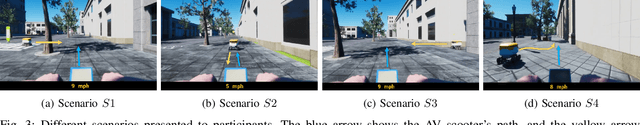

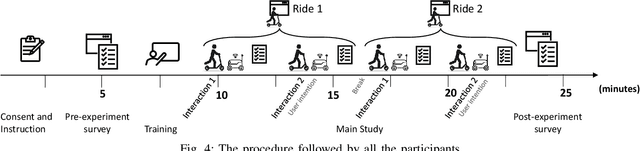

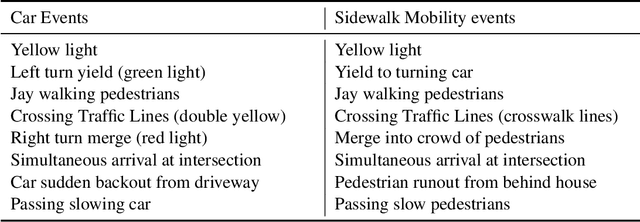

Abstract: Micromobility devices, such as e-scooters and delivery robots, hold promise as eco-friendly and cost-effective alternatives for future urban transportation. However, their lack of societal acceptance remains a challenge. Therefore, we must consider ways to promote prosocial behavior in micromobility interactions. We investigate how post-ride feedback can encourage the prosocial behavior of e-scooter riders while interacting with sidewalk users, including pedestrians and delivery robots. Using a web-based platform, we measure the prosocial behavior of e-scooter riders. Results show that post-ride feedback can successfully promote prosocial behavior, and objective measures indicate better gap behavior, lower speeds at interaction, and longer stopping time around other sidewalk actors. The findings of this study demonstrate the efficacy of post-ride feedback and provide a step toward designing methodologies to improve the prosocial behavior of mobility users.

Should I Help a Delivery Robot? Cultivating Prosocial Norms through Observations

Mar 27, 2024

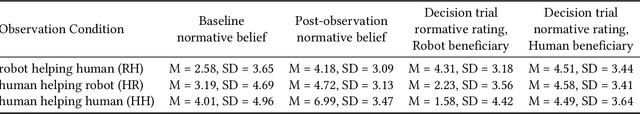

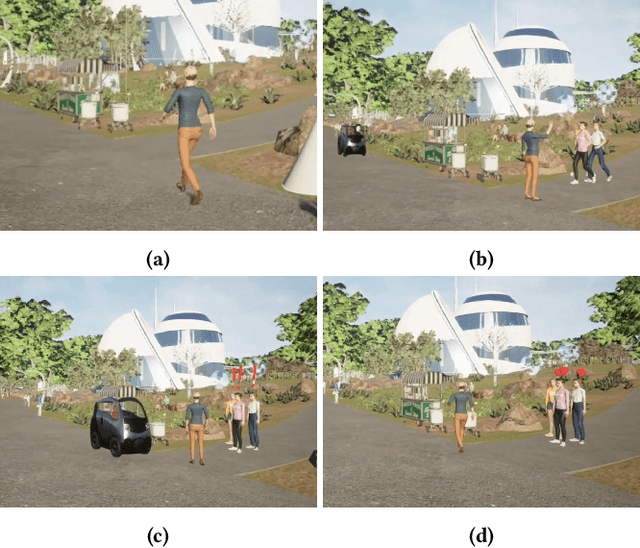

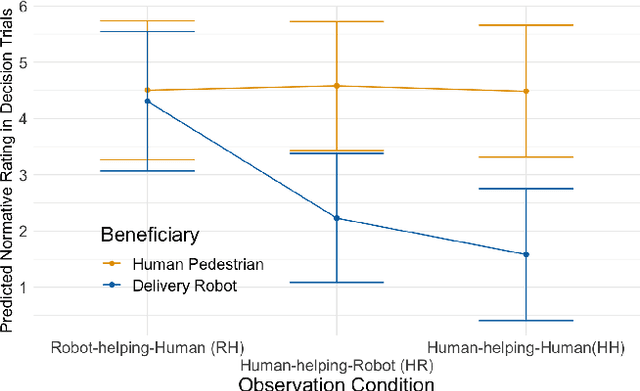

Abstract: We propose leveraging prosocial observations to cultivate new social norms that encourage prosocial behaviors toward delivery robots. With an online experiment, we quantitatively assess updates in norm beliefs regarding human-robot prosocial behaviors through observational learning. Results demonstrate that the initially perceived normativity of helping robots is influenced by familiarity with delivery robots and perceptions of robots' social intelligence. Observing human-robot prosocial interactions notably shifts people's normative beliefs about prosocial actions, thereby changing their perceived obligations to offer help to delivery robots. Additionally, we found that observing robots offering help to humans, rather than receiving help, more significantly increased participants' feelings of obligation to help robots. Our findings provide insights into prosocial design for future mobility systems. Improved familiarity with robot capabilities and portraying them as desirable social partners can help foster wider acceptance. Furthermore, robots need to be designed to exhibit higher levels of interactivity and reciprocal capabilities for prosocial behavior.

Beyond Empirical Windowing: An Attention-Based Approach for Trust Prediction in Autonomous Vehicles

Dec 15, 2023

Abstract: Humans' internal states play a key role in human-machine interaction, leading to the rise of human state estimation as a prominent field. Compared to swift state changes such as surprise and irritation, modeling gradual states like trust and satisfaction is further challenged by label sparsity: long time-series signals are usually associated with a single label, making it difficult to identify the critical span of state shifts. Windowing is a widely used technique for enabling localized analysis of long time-series data. However, the performance of downstream models can be sensitive to the window size, and determining the optimal window size demands domain expertise and extensive search. To address this challenge, we propose a Selective Windowing Attention Network (SWAN), which employs window prompts and masked attention transformation to enable the selection of attended intervals with flexible lengths. We evaluate SWAN on the task of trust prediction on a new multimodal driving simulation dataset. Experiments show that SWAN significantly outperforms an existing empirical window selection baseline and neural network baselines including CNN-LSTM and Transformer. Furthermore, it shows robustness across a wide span of windowing ranges, compared to the traditional windowing approach.
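
To make the window-size sensitivity mentioned above concrete, here is a minimal sketch of conventional fixed-length windowing, the baseline technique the abstract contrasts with and not SWAN itself. The helper names `sliding_windows` and `window_means` and the toy signal are assumptions for illustration.

```python
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], size: int, stride: int) -> List[List[float]]:
    """Split a 1-D signal into fixed-length windows; a trailing partial window is dropped."""
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    return [list(signal[i:i + size]) for i in range(0, len(signal) - size + 1, stride)]


def window_means(signal: Sequence[float], size: int, stride: int) -> List[float]:
    """Summarize each window by its mean, a stand-in for any per-window feature."""
    return [sum(w) / len(w) for w in sliding_windows(signal, size, stride)]


# Toy signal with a short burst in the middle (hypothetical data).
signal = [0.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0]

short_windows = window_means(signal, size=2, stride=2)  # [0.0, 0.5, 0.5, 0.0]
one_long_window = window_means(signal, size=8, stride=8)  # [0.25]
```

With short windows the burst stays localized to the middle segments, while a single long window averages it away. This is the kind of dependence on window size that motivates learning which intervals to attend to.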

Wellbeing in Future Mobility: Toward AV Policy Design to Increase Wellbeing through Interactions

Oct 02, 2023

Abstract: Recent advances in automated vehicle (AV) technology and micromobility devices promise a transformational change in the future of mobility usage. These advances also pose challenges concerning human-AV interactions. To ensure the smooth adoption of these new mobilities, it is essential to assess how people's past experiences and perceptions of social interactions may impact their interactions with AV mobility. This research identifies and estimates an individual's wellbeing based on their actions, prior experiences, social interaction perceptions, and dyadic interactions with other road users. An online video-based user study was designed, and responses from 300 participants were collected and analyzed to investigate the impact on individual wellbeing. A machine learning model was designed to predict the change in wellbeing. An optimal policy based on the model allows the AV to make informed decisions about its yielding behavior toward other road users in order to enhance users' wellbeing. The findings from this study have broader implications for creating human-aware systems whose policies align with an individual's state of wellbeing.

Trust in Shared Automated Vehicles: Study on Two Mobility Platforms

Mar 17, 2023

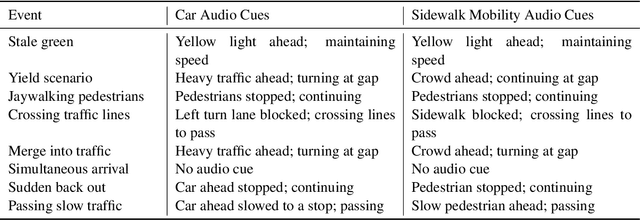

Abstract: The ever-increasing adoption of shared transportation modalities across the United States has the potential to fundamentally change the preferences and usage of different mobilities. It also raises several challenges with respect to the design and development of automated mobilities that can enable a large population to take advantage of this emergent technology. One such challenge is the lack of understanding of how trust in one automated mobility may impact trust in another. Without this understanding, it is difficult for researchers to determine whether future mobility solutions will be accepted within different population groups. This study focuses on identifying the differences in trust across different mobilities and how trust evolves across their use for participants who preferred an aggressive driving style. A dual mobility simulator study was designed in which 48 participants experienced two different automated mobilities (car and sidewalk). The results show that participants reported increasing levels of trust when they transitioned from the car to the sidewalk mobility. In comparison, participants reported decreasing levels of trust when they transitioned from the sidewalk to the car mobility. The findings from the study help identify how people can develop trust in future mobility platforms and could inform the design of interventions that may help improve the trust and acceptance of future mobility.

* https://trid.trb.org/view/2117834

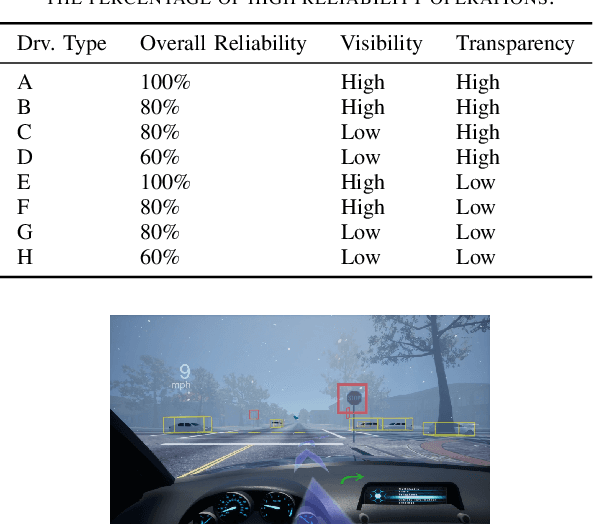

Identification of Adaptive Driving Style Preference through Implicit Inputs in SAE L2 Vehicles

Sep 21, 2022

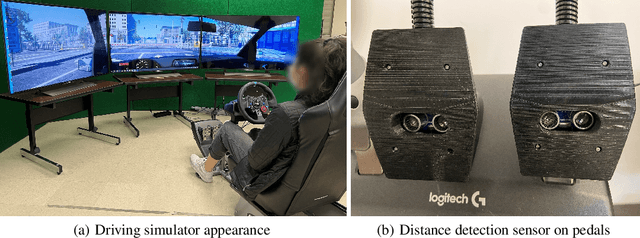

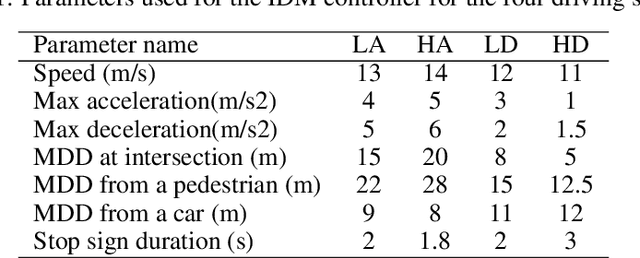

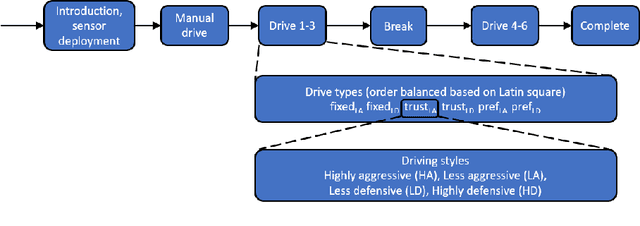

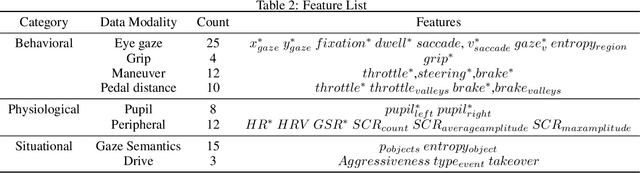

Abstract: A key factor in optimal acceptance and comfort of automated vehicle features is the driving style. Mismatches between the automated and the driver-preferred driving styles can make users take over more frequently or even disable the automation features. This work proposes identifying user driving style preference from multimodal signals, so that the vehicle can match user preference in a continuous and automatic way. We conducted a driving simulator study with 36 participants and collected extensive multimodal data including behavioral, physiological, and situational data. This includes eye gaze, steering grip force, driving maneuvers, brake and throttle pedal inputs as well as foot distance from pedals, pupil diameter, galvanic skin response, heart rate, and situational drive context. Then, we built machine learning models to identify preferred driving styles, and confirmed that all modalities are important for the identification of user preference. This work paves the way for implicit adaptive driving styles on automated vehicles.

Effects of Augmented-Reality-Based Assisting Interfaces on Drivers' Object-wise Situational Awareness in Highly Autonomous Vehicles

Jun 06, 2022

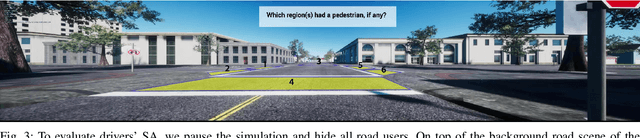

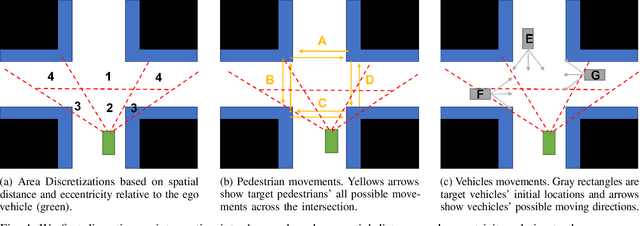

Abstract: Although partially autonomous driving (AD) systems are already available in production vehicles, drivers are still required to maintain a sufficient level of situational awareness (SA) during driving. Previous studies have shown that providing information about the AD's capability using user interfaces can improve the driver's SA. However, displaying too much information increases the driver's workload and can distract or overwhelm the driver. Therefore, to design an efficient user interface (UI), it is necessary to understand its effect under different circumstances. In this paper, we focus on a UI based on augmented reality (AR), which can highlight potential hazards on the road. To understand the effect of highlighting on drivers' SA for objects of different types and locations under various traffic densities, we conducted an in-person experiment with 20 participants on a driving simulator. Our study results show that the effects of highlighting on drivers' SA varied by traffic density, object location, and object type. We believe our study can provide guidance in selecting which objects to highlight in an AR-based driver-assistance interface to optimize SA for drivers driving and monitoring partially autonomous vehicles.

Learning Temporally and Semantically Consistent Unpaired Video-to-video Translation Through Pseudo-Supervision From Synthetic Optical Flow

Jan 15, 2022

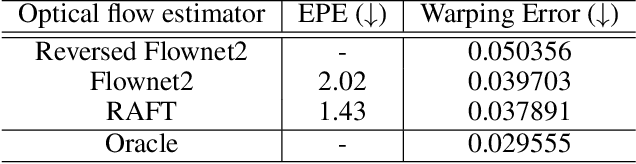

Abstract: Unpaired video-to-video translation aims to translate videos between a source and a target domain without the need for paired training data, making it more feasible for real applications. Unfortunately, the translated videos generally suffer from temporal and semantic inconsistency. To address this, many existing works adopt spatiotemporal consistency constraints incorporating temporal information based on motion estimation. However, inaccuracies in motion estimation degrade the quality of the guidance towards spatiotemporal consistency, which leads to unstable translation. In this work, we propose a novel paradigm that regularizes the spatiotemporal consistency by synthesizing motions in input videos with the generated optical flow instead of estimating them. Therefore, the synthetic motion can be applied in the regularization paradigm to keep motions consistent across domains without the risk of errors in motion estimation. Thereafter, we utilize our unsupervised recycle and unsupervised spatial loss, guided by the pseudo-supervision provided by the synthetic optical flow, to accurately enforce spatiotemporal consistency in both domains. Experiments show that our method is versatile in various scenarios and achieves state-of-the-art performance in generating temporally and semantically consistent videos. Code is available at: https://github.com/wangkaihong/Unsup_Recycle_GAN/.

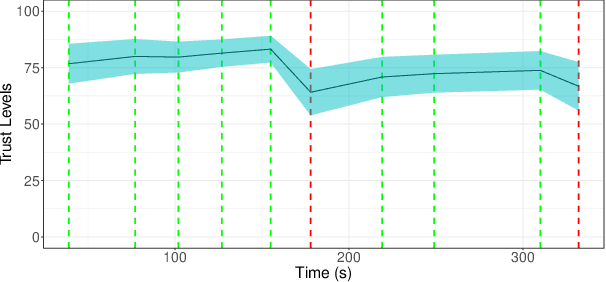

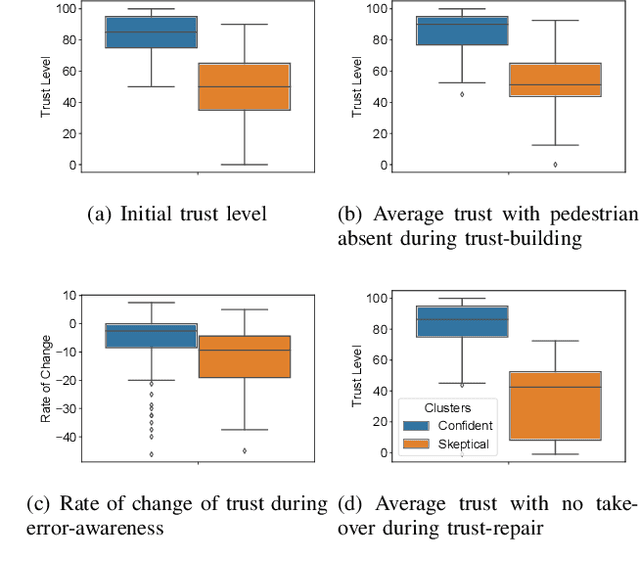

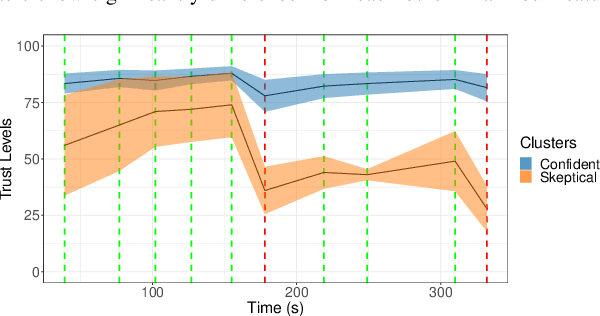

Clustering Human Trust Dynamics for Customized Real-time Prediction

Oct 09, 2021

Abstract: Trust calibration is necessary to ensure appropriate user acceptance of advanced automation technologies. A significant challenge in achieving trust calibration is to quantitatively estimate human trust in real time. Although multiple trust models exist, these models have limited predictive performance, partly due to individual differences in trust dynamics. A personalized model for each person can address this issue, but it requires a significant amount of data for each user. We present a methodology to develop customized models by clustering humans based on their trust dynamics. The clustering-based method addresses the individual differences in trust dynamics while requiring significantly less data than a personalized model. We show that our clustering-based customized models not only outperform the general model based on the entire population, but also outperform simple demographic factor-based customized models. Specifically, we propose that two models based on "confident" and "skeptical" groups of participants, respectively, can represent the trust behavior of the population. The "confident" participants, as compared to the "skeptical" participants, have higher initial trust levels, lose trust more slowly when they encounter low-reliability operations, and have higher trust levels during trust repair after the low-reliability operations. In summary, clustering-based customized models improve trust prediction performance for further trust calibration considerations.
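
The idea of grouping people by their trust dynamics can be sketched with a small two-cluster k-means over trust trajectories. The abstract does not state which clustering algorithm or features the authors used, so k-means, the function `two_means`, and the toy trajectories below are all assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_vector(rows: Sequence[Sequence[float]]) -> List[float]:
    return [sum(col) / len(rows) for col in zip(*rows)]


def two_means(
    rows: Sequence[Sequence[float]], iterations: int = 20
) -> Tuple[List[int], List[List[float]]]:
    """Two-cluster k-means with a deterministic initialization.

    Cluster 0 is seeded with the lowest-sum trajectory and cluster 1 with the
    highest-sum trajectory, so the labels are reproducible across runs.
    """
    ordered = sorted(rows, key=sum)
    centroids = [list(ordered[0]), list(ordered[-1])]
    labels = [0] * len(rows)
    for _ in range(iterations):
        # Assignment step: attach each trajectory to its nearest centroid.
        labels = [
            0 if squared_distance(r, centroids[0]) <= squared_distance(r, centroids[1]) else 1
            for r in rows
        ]
        # Update step: move each centroid to the mean of its members.
        for k in (0, 1):
            members = [r for r, label in zip(rows, labels) if label == k]
            if members:
                centroids[k] = mean_vector(members)
    return labels, centroids


# Hypothetical trust ratings at four time points for four participants.
trajectories = [
    [0.8, 0.7, 0.75, 0.8],
    [0.9, 0.8, 0.8, 0.85],
    [0.4, 0.2, 0.25, 0.3],
    [0.5, 0.2, 0.3, 0.3],
]

labels, centroids = two_means(trajectories)
```

On this toy data the first two participants fall into the higher-trust cluster and the last two into the lower-trust cluster, loosely mirroring the "confident" versus "skeptical" split described in the abstract. A separate prediction model could then be fit per cluster.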
