Zahra Zahedi

Toward Informed AV Decision-Making: Computational Model of Well-being and Trust in Mobility

May 21, 2025

Abstract:For future human-autonomous vehicle (AV) interactions to be effective and smooth, human-aware systems that analyze and align human needs with automation decisions are essential. Achieving this requires systems that account for human cognitive states. We present a novel computational model in the form of a Dynamic Bayesian Network (DBN) that infers the cognitive states of both AV users and other road users and integrates this information into the AV's decision-making process. Specifically, our model captures the well-being of both an AV user and an interacting road user as cognitive states alongside trust. Our DBN models infer beliefs over the AV user's evolving well-being, trust, and intention states, as well as the possible well-being of other road users, based on observed interaction experiences. Using data collected from an interaction study, we refine the model parameters and empirically assess the model's performance. Finally, we extend our model into a causal inference model (CIM) framework for AV decision-making, enabling the AV to enhance user well-being and trust while balancing these factors against its own operational costs and the well-being of interacting road users. Our evaluation demonstrates the model's effectiveness in accurately predicting users' states and guiding informed, human-centered AV decisions.
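The core operation behind such a model, updating a belief over a hidden cognitive state after each observed interaction, can be illustrated with a generic discrete Bayesian forward filter. This is a minimal sketch under stated assumptions, not the authors' DBN: it tracks a single hidden trust variable rather than joint well-being, trust, and intention states, and the three trust levels, the transition and observation probabilities, and the binary "user intervenes" observation are all hypothetical values chosen for illustration.

```python
import numpy as np

# Hypothetical discrete trust levels for the AV user (illustrative assumption).
STATES = ["low", "medium", "high"]

# Hypothetical transition model P(trust_t | trust_{t-1}); row = previous state.
TRANSITION = np.array([
    [0.80, 0.15, 0.05],
    [0.10, 0.80, 0.10],
    [0.05, 0.15, 0.80],
])

# Hypothetical observation model P(obs | trust); columns are
# observation 0 = "user intervenes", 1 = "user does not intervene".
EMISSION = np.array([
    [0.7, 0.3],
    [0.4, 0.6],
    [0.1, 0.9],
])


def filter_step(belief, observation):
    """One forward (predict-then-update) step of a discrete Bayesian filter."""
    predicted = belief @ TRANSITION                  # predict: marginalize the previous state
    updated = predicted * EMISSION[:, observation]   # update: weight by observation likelihood
    return updated / updated.sum()                   # normalize to a probability distribution


def filter_sequence(prior, observations):
    """Return the belief after each observation, starting with the prior."""
    belief = np.asarray(prior, dtype=float)
    history = [belief]
    for obs in observations:
        belief = filter_step(belief, obs)
        history.append(belief)
    return history


if __name__ == "__main__":
    uniform_prior = [1 / 3, 1 / 3, 1 / 3]
    for t, b in enumerate(filter_sequence(uniform_prior, [1, 1, 0, 1])):
        print(t, dict(zip(STATES, np.round(b, 3))))
```

Each step first predicts how trust may drift between interactions and then reweights that prediction by how likely the observed behavior is under each trust level. A full DBN would apply the same predict-and-update pattern over several coupled variables.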

Wellbeing in Future Mobility: Toward AV Policy Design to Increase Wellbeing through Interactions

Oct 02, 2023

Abstract:Recent advances in automated vehicle (AV) technology and micromobility devices promise a transformational change in the future of mobility usage. These advances also pose challenges concerning human-AV interactions. To ensure the smooth adoption of these new mobilities, it is essential to assess how people's past experiences and perceptions of social interactions may affect their interactions with AV mobility. This research identifies and estimates an individual's wellbeing based on their actions, prior experiences, social interaction perceptions, and dyadic interactions with other road users. An online video-based user study was designed, and responses from 300 participants were collected and analyzed to investigate the impact on individual wellbeing. A machine learning model was designed to predict the change in wellbeing. An optimal policy derived from the model lets the AV make informed yielding decisions toward other road users that enhance users' wellbeing. The findings from this study have broader implications for human-aware systems: they support policies that align with the individual's state and contribute toward designing systems that align with an individual's state of wellbeing.

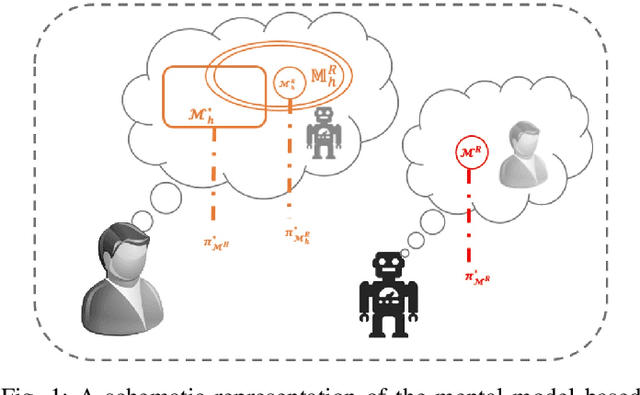

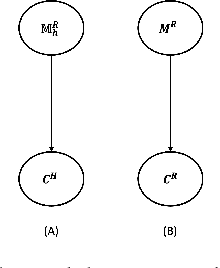

A Mental Model Based Theory of Trust

Jan 29, 2023

Abstract:Handling trust is one of the core requirements for facilitating effective interaction between the human and the AI agent. Thus, any decision-making framework designed to work with humans must be able to estimate and leverage human trust. In this paper, we propose a mental model based theory of trust that can be used not only to infer trust, providing an alternative to psychological or behavioral trust inference methods, but also as a foundation for trust-aware decision-making frameworks. First, we introduce what trust means according to our theory and then use the theory to define trust evolution, human reliance and decision making, and a formalization of the appropriate level of trust in the agent. Using human subject studies, we compare our theory against one of the most common trust scales (the Muir scale) to evaluate 1) whether the observations from the human studies match our proposed theory and 2) which aspects of trust are more aligned with our proposed theory.

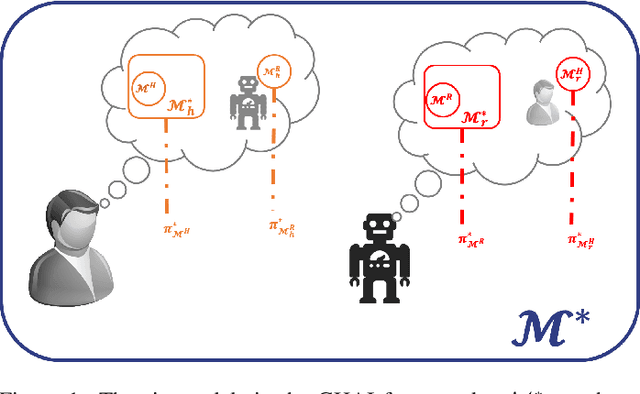

A Mental-Model Centric Landscape of Human-AI Symbiosis

Feb 18, 2022

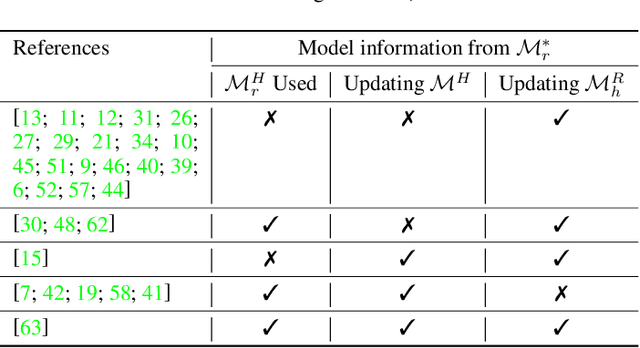

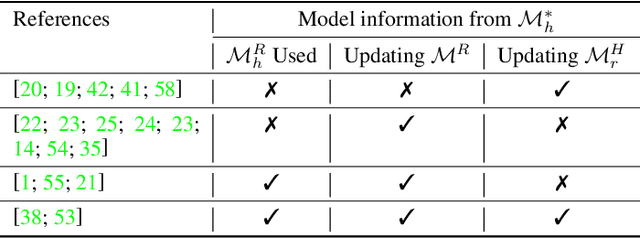

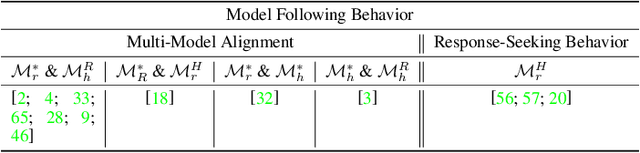

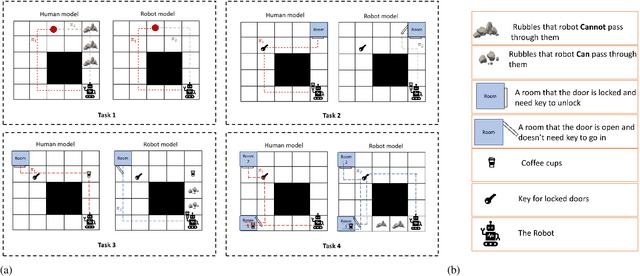

Abstract:There has been significant recent interest in developing AI agents capable of effectively interacting and teaming with humans. While each of these works tries to tackle a problem central to human-AI interaction, they tend to rely on myopic formulations that obscure the possible inter-relatedness and complementarity of many of these works. The human-aware AI framework was a recent effort to provide a unified account of human-AI interaction by casting it in terms of its relationship to various mental models. Unfortunately, the current accounts of human-aware AI are insufficient to explain the landscape of work being done in the space of human-AI interaction because they focus on limited settings. In this paper, we aim to correct this shortcoming by introducing a significantly more general version of the human-aware AI interaction scheme, called generalized human-aware interaction (GHAI), that considers six types of (mental) models. Through this paper, we will see how this new framework allows us to capture the various works done in the space of human-AI interaction and identify the fundamental behavioral patterns supported by these works. We will also use this framework to identify potential gaps in the current literature and suggest future research directions to address these shortcomings.

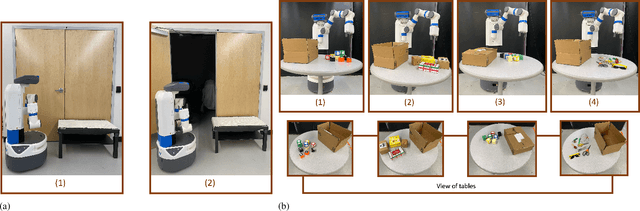

Trust-Aware Planning: Modeling Trust Evolution in Longitudinal Human-Robot Interaction

May 03, 2021

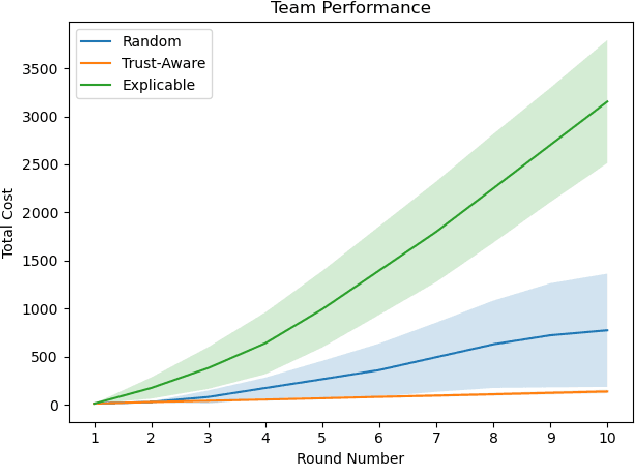

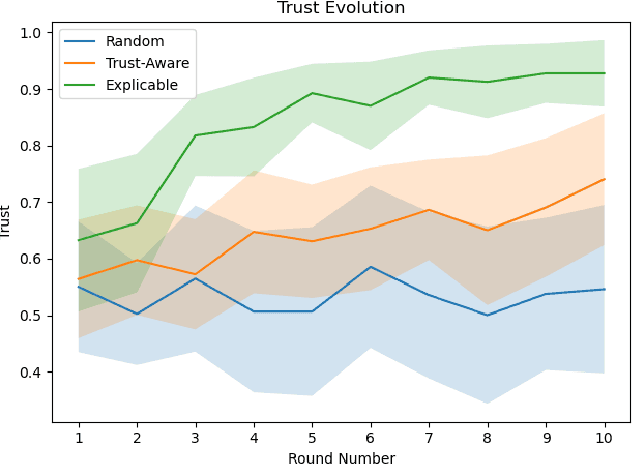

Abstract:Trust between team members is an essential requirement for any successful cooperation. Thus, engendering and maintaining fellow team members' trust becomes a central responsibility for any member trying not only to participate successfully in the task but also to ensure that the team achieves its goals. The problem of trust management is particularly challenging in mixed human-robot teams, where the human and the robot may have different models of the task at hand and thus different expectations regarding the current course of action, forcing the robot to focus on costly explicable behavior. We propose a computational model for capturing and modulating trust in such longitudinal human-robot interaction, where the human adopts a supervisory role. In our model, the robot integrates the human's trust and their expectations of the robot into its planning process to build and maintain trust over the interaction horizon. By establishing the required level of trust, the robot can focus on maximizing the team goal, eschewing explicit explanatory or explicable behavior without worrying about the human supervisor monitoring it and intervening to stop behaviors they may not necessarily understand. We model this reasoning about trust levels as a meta-reasoning process over individual planning tasks. We additionally validate our model through a human subject experiment.

Human-AI Symbiosis: A Survey of Current Approaches

Mar 18, 2021

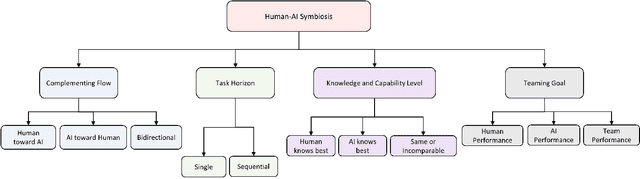

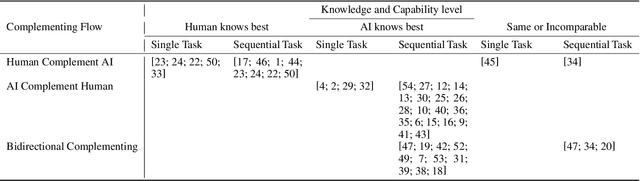

Abstract:In this paper, we aim to provide a comprehensive outline of the different threads of work in human-AI collaboration. By highlighting various aspects of work on human-AI teams, such as the flow of complementing, task horizon, model representation, knowledge level, and teaming goal, we build a taxonomy of recent works according to these dimensions. We hope that the survey will provide a clearer connection between works on human-AI teams and offer guidance to new researchers in this area.

'Why not give this work to them?' Explaining AI-Moderated Task-Allocation Outcomes using Negotiation Trees

Feb 20, 2020

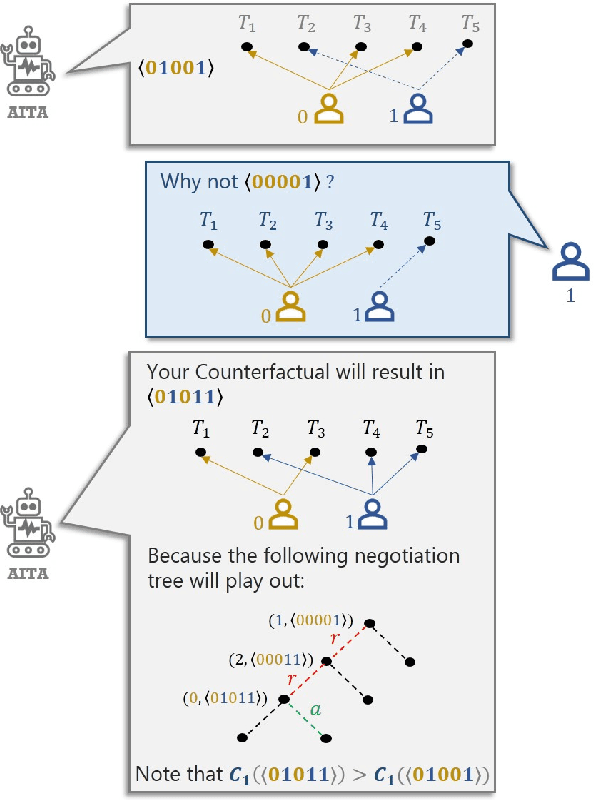

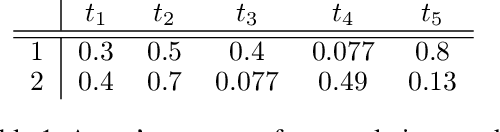

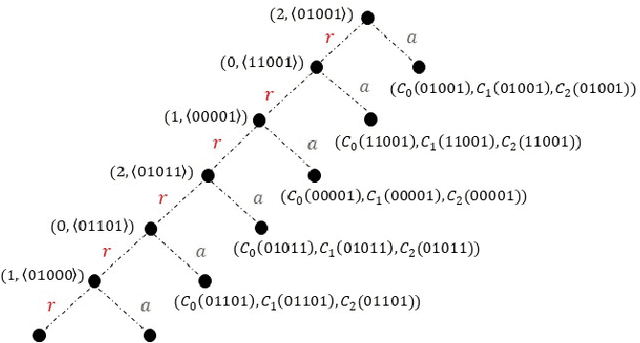

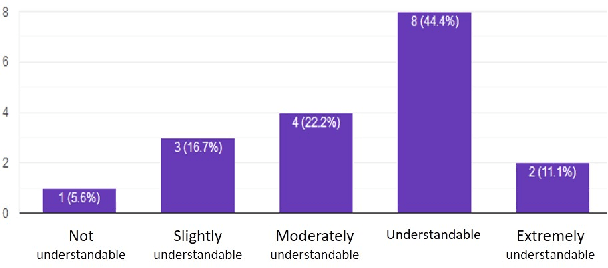

Abstract:The problem of multi-agent task allocation arises in a variety of scenarios involving human teams. In many such settings, human teammates may act with selfish motives and try to minimize their own cost metrics. In the absence of (1) complete knowledge about the rewards of other agents and (2) the team's overall cost associated with a particular allocation outcome, distributed algorithms can only arrive at sub-optimal solutions within a reasonable amount of time. To address these challenges, we introduce the notion of an AI Task Allocator (AITA) that, with complete knowledge, comes up with fair allocations that strike a balance between the individual human costs and the team's performance cost. To ensure that AITA is explicable to the humans, we allow each human agent to question AITA's proposed allocation with counterfactual allocations. In response, we design AITA to provide a replay negotiation tree that acts as an explanation showing why the counterfactual allocation, with the correct costs, will eventually result in a sub-optimal allocation. This explanation also updates a human's incomplete knowledge about their teammates' and the team's actual costs. We then use human factors studies to investigate whether humans are (1) able to understand the explanations provided and (2) convinced by them. Finally, we show the effect of various kinds of incompleteness on the length of explanations. We conclude that underestimating others' costs often leads to the need for explanations and, in turn, to longer explanations on average.

To Monitor Or Not: Observing Robot's Behavior based on a Game-Theoretic Model of Trust

Apr 06, 2019

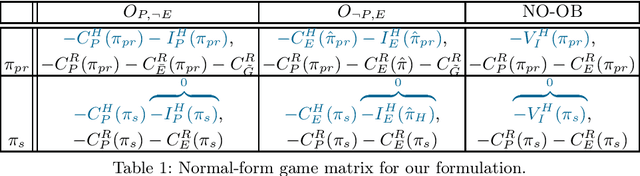

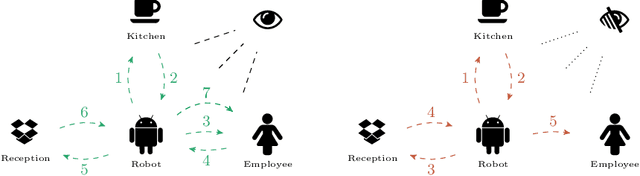

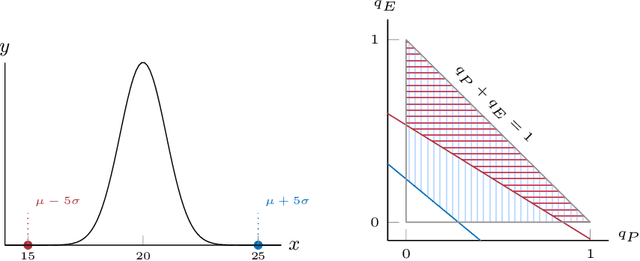

Abstract:In scenarios where a robot generates and executes a plan, the generated plan may sometimes be less costly for the robot to execute but incomprehensible to the human. When the human acts as a supervisor and is held accountable for the robot's plan, the human may be at higher risk if the incomprehensible behavior is deemed unsafe. In such cases, the robot, which may be unaware of the human's exact expectations, may choose to (1) execute the most constrained plan (i.e., one preferred by all possible supervisors), incurring the added cost of highly sub-optimal behavior, when the human is observing it and (2) deviate to a more optimal plan when the human looks away. These problems are amplified in situations where the robot has to fulfill multiple goals and cater to the needs of different human supervisors. In such settings, the robot, being a rational agent, should take any chance it gets to deviate to a lower-cost plan. On the other hand, continuous monitoring of the robot's behavior is often difficult for humans because it costs them valuable resources (e.g., time, effort, cognitive overload). To optimize the cost of constant monitoring while ensuring that the robot follows the safe behavior, we model this problem in the game-theoretic framework of trust, where the human is the agent that trusts the robot. We show that the notion of human trust, which is well-defined when there is a pure strategy equilibrium, is inversely proportional to the probability the human assigns to observing the robot's behavior. We then show that, with high probability, our game lacks a pure strategy Nash equilibrium, forcing us to define a trust boundary over the human's mixed strategies in order to guarantee safe behavior by the robot.

* First two authors contributed equally and names are ordered based on a coin flip
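The step from "no pure strategy equilibrium" to reasoning over mixed strategies can be illustrated with a generic 2x2 inspection-style game, in which the human chooses whether to monitor and the robot chooses between a costly safe plan and a cheaper deviation. This is a hedged sketch, not the paper's game: the payoff structure and every parameter value (monitoring cost, loss from unsafe behavior, plan costs, penalty for a caught deviation) are hypothetical. The code checks for pure equilibria and, when none exist, solves the standard indifference conditions for the fully mixed equilibrium.

```python
from itertools import product


def monitoring_game(monitor_cost, loss_if_unsafe, safe_cost, deviate_cost, penalty):
    """Build hypothetical payoff matrices for a human-supervisor / robot game.

    Rows are the human's actions: 0 = monitor, 1 = do not monitor.
    Columns are the robot's actions: 0 = safe plan, 1 = cheaper deviation.
    """
    human = [[-monitor_cost, -monitor_cost],          # monitoring costs effort either way
             [0.0, -loss_if_unsafe]]                  # an unmonitored deviation hurts the human
    robot = [[-safe_cost, -deviate_cost - penalty],   # a deviation is caught when monitored
             [-safe_cost, -deviate_cost]]
    return human, robot


def pure_equilibria(A, B):
    """Return every pure-strategy Nash equilibrium (row, col) of a 2x2 bimatrix game."""
    equilibria = []
    for r, c in product(range(2), range(2)):
        row_best = A[r][c] >= A[1 - r][c]   # row player cannot gain by switching rows
        col_best = B[r][c] >= B[r][1 - c]   # column player cannot gain by switching columns
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria


def mixed_equilibrium(A, B):
    """Fully mixed equilibrium of a 2x2 game from the indifference conditions.

    Returns (p, q) with p = P(row 0) and q = P(col 0). Assumes a fully mixed
    equilibrium exists (nonzero denominators, results in [0, 1]).
    """
    # p is chosen so the column player is indifferent between its two columns.
    p = (B[1][1] - B[1][0]) / (B[0][0] - B[1][0] - B[0][1] + B[1][1])
    # q is chosen so the row player is indifferent between its two rows.
    q = (A[1][1] - A[0][1]) / (A[0][0] - A[0][1] - A[1][0] + A[1][1])
    return p, q


if __name__ == "__main__":
    human, robot = monitoring_game(monitor_cost=1.0, loss_if_unsafe=10.0,
                                   safe_cost=5.0, deviate_cost=2.0, penalty=6.0)
    print("pure equilibria:", pure_equilibria(human, robot))
    p_monitor, q_safe = mixed_equilibrium(human, robot)
    print(f"human monitors with probability {p_monitor:.2f}")
    print(f"robot follows the safe plan with probability {q_safe:.2f}")
```

With these numbers no pure equilibrium exists, and in this payoff structure the human's equilibrium monitoring probability works out to (safe_cost - deviate_cost) / penalty, so a larger penalty for a caught deviation lets the human monitor less often. Read through the abstract's inverse relation between trust and monitoring probability, less monitoring corresponds to more trust; the paper's own trust boundary over mixed strategies is not reproduced here.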

A review of neuro-fuzzy systems based on intelligent control

May 06, 2018

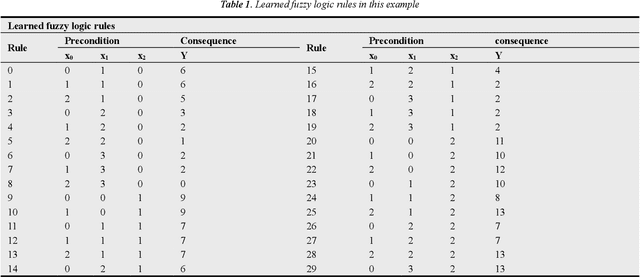

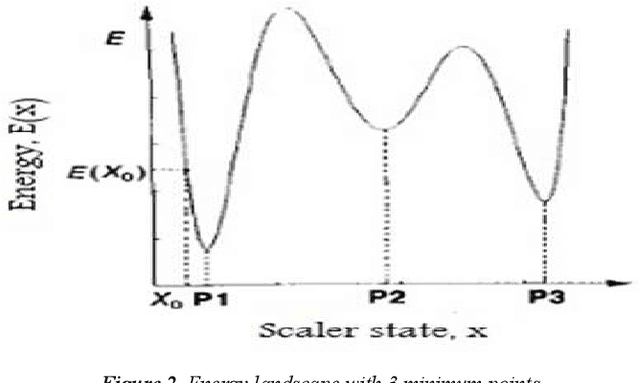

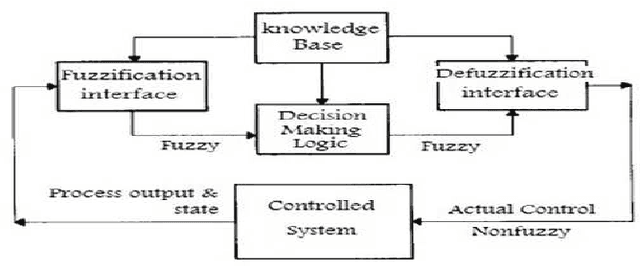

Abstract:A system's abilities to adapt and to self-organize are two key factors in how well it can survive changes to the environment and the plant it works within. Intelligent control improves these two abilities in controllers. Given the increasing complexity of dynamic systems and their need for feedback control, more sophisticated controllers have become necessary, and intelligent control can be a suitable response to this need. This paper briefly describes the structure of intelligent control and reviews fuzzy logic and neural networks, which are among the base methods for intelligent control. The two methods are then compared, and an example of a combined method is presented.

* 4 pages, 7 figures, 1 table, Journal of Electrical and Electronic Engineering
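The basic mechanics of a fuzzy logic controller (fuzzification, a rule base, and defuzzification) can be sketched in a few lines. This is an illustrative example, not the combined method presented in the paper: it uses three hypothetical triangular membership functions over a normalized error, a hypothetical three-rule table, and zero-order Sugeno-style weighted-average defuzzification.

```python
def triangular(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical fuzzy sets over the normalized error e = setpoint - measurement, e in [-1, 1].
FUZZY_SETS = {
    "negative": (-2.0, -1.0, 0.0),
    "zero": (-1.0, 0.0, 1.0),
    "positive": (0.0, 1.0, 2.0),
}

# Hypothetical rule base: IF error is <set> THEN control action is <value>.
RULES = {"negative": -1.0, "zero": 0.0, "positive": 1.0}


def fuzzy_control(error):
    """Fuzzify the error, fire every rule, and defuzzify by weighted average."""
    e = max(-1.0, min(1.0, error))  # clip to the universe of discourse
    weights = {name: triangular(e, *abc) for name, abc in FUZZY_SETS.items()}
    total = sum(weights.values())
    if total == 0.0:
        return 0.0  # no rule fired (cannot happen with these overlapping sets)
    return sum(w * RULES[name] for name, w in weights.items()) / total


if __name__ == "__main__":
    for err in (-1.5, -0.5, 0.0, 0.25, 0.75):
        print(f"error={err:+.2f} -> action={fuzzy_control(err):+.3f}")
```

Because these evenly spaced triangles overlap so that their memberships always sum to one, this particular controller reduces to linear interpolation between rule outputs; reshaping the membership functions or the rule table is what produces nonlinear control behavior. Neuro-fuzzy methods such as ANFIS learn parameters of this kind from data instead of fixing them by hand.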