'Why not give this work to them?' Explaining AI-Moderated Task-Allocation Outcomes using Negotiation Trees

Feb 20, 2020

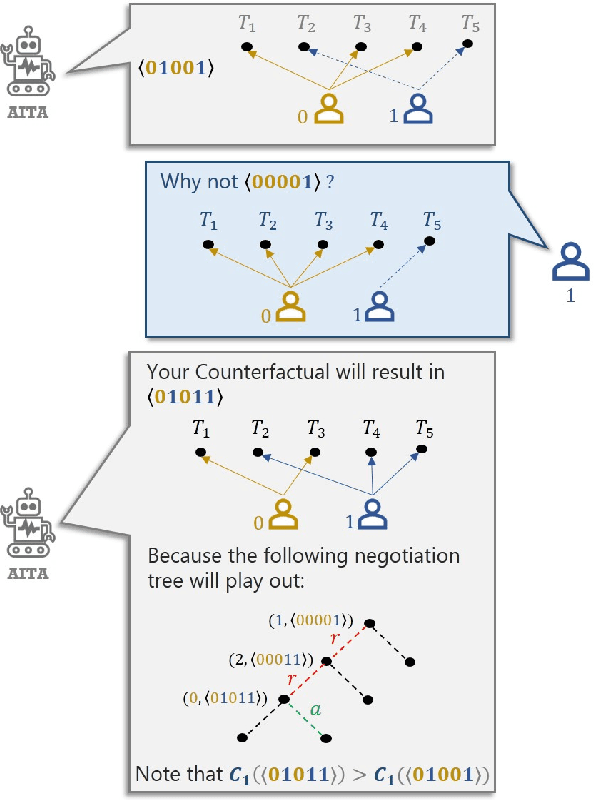

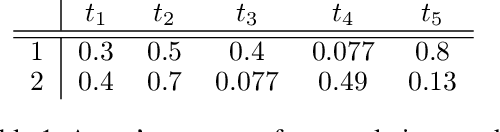

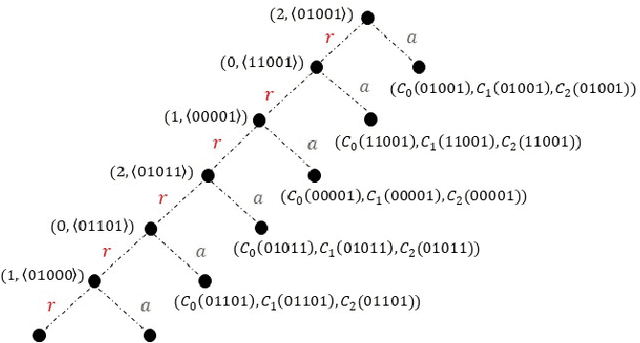

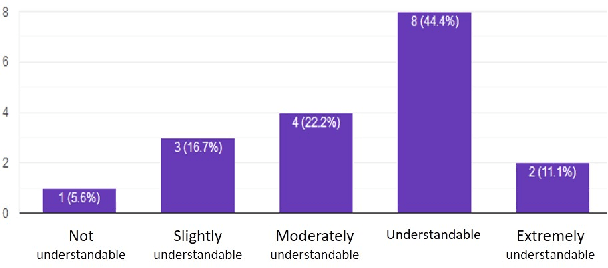

The problem of multi-agent task allocation arises in a variety of scenarios involving human teams. In many such settings, human teammates may act with selfish motives and try to minimize their own cost metrics. In the absence of complete knowledge about (1) the rewards of other agents and (2) the team's overall cost associated with a particular allocation outcome, distributed algorithms can only arrive at sub-optimal solutions within a reasonable amount of time. To address these challenges, we introduce the notion of an AI Task Allocator (AITA) that uses complete knowledge to come up with fair allocations that strike a balance between the individual human costs and the team's performance cost. To ensure that AITA is explicable to the humans, we allow each human agent to question AITA's proposed allocation with counterfactual allocations. In response, AITA is designed to provide a replay negotiation tree that serves as an explanation, showing why the counterfactual allocation, evaluated with the correct costs, will eventually result in a sub-optimal allocation. This explanation also updates a human's incomplete knowledge about their teammates' and the team's actual costs. Using human factor studies, we then investigate whether humans (1) are able to understand the explanations provided and (2) are convinced by them. Finally, we show the effect of various kinds of incompleteness on the length of explanations. We conclude that underestimating others' costs often creates the need for explanations and, in turn, leads to longer explanations on average.
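To make the trade-off between individual costs and team cost concrete, here is a minimal, hypothetical sketch. It is *not* the negotiation-tree method described above. Instead it swaps in a plain brute-force search over an assumed objective: the team cost is taken to be the sum of all assigned costs, and fairness is modeled as a penalty `lam` times the gap between the most- and least-burdened agents. All names (`cost`, `lam`, `best_allocation`, `explain_counterfactual`) and the example numbers are illustrative assumptions. A counterfactual is "explained" only by comparing its score and per-agent cost changes against the proposal under the true costs.

```python
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical data model (assumption): cost[agent][i] is that agent's cost
# for task i; an allocation is a tuple whose i-th entry names task i's agent.
Costs = Dict[str, List[float]]
Allocation = Tuple[str, ...]


def individual_costs(alloc: Allocation, cost: Costs) -> Dict[str, float]:
    """Total cost each agent incurs under an allocation."""
    totals = {agent: 0.0 for agent in cost}
    for task, agent in enumerate(alloc):
        totals[agent] += cost[agent][task]
    return totals


def score(alloc: Allocation, cost: Costs, lam: float) -> float:
    """Assumed objective: team cost (sum of all costs) plus lam times the
    spread between the most- and least-burdened agents (fairness penalty)."""
    per_agent = individual_costs(alloc, cost)
    team = sum(per_agent.values())
    spread = max(per_agent.values()) - min(per_agent.values())
    return team + lam * spread


def best_allocation(cost: Costs, n_tasks: int, lam: float) -> Allocation:
    """Exhaustive search over every assignment (exponential; toy sizes only)."""
    agents = sorted(cost)
    return min(product(agents, repeat=n_tasks), key=lambda a: score(a, cost, lam))


def explain_counterfactual(proposed: Allocation, counterfactual: Allocation,
                           cost: Costs, lam: float) -> Dict[str, object]:
    """Compare a human's counterfactual with the proposal using true costs."""
    before = individual_costs(proposed, cost)
    after = individual_costs(counterfactual, cost)
    return {
        "proposed_score": score(proposed, cost, lam),
        "counterfactual_score": score(counterfactual, cost, lam),
        "cost_change": {agent: after[agent] - before[agent] for agent in cost},
    }


# Illustrative (made-up) costs for two agents and three tasks.
cost = {"alice": [2, 4, 3], "bob": [4, 2, 5]}
proposal = best_allocation(cost, n_tasks=3, lam=1.0)
print(proposal)  # ('alice', 'bob', 'alice')

# Bob asks: "Why not give task 1 to Alice?"
result = explain_counterfactual(proposal, ("alice", "alice", "alice"), cost, lam=1.0)
print(result)
# {'proposed_score': 10.0, 'counterfactual_score': 18.0,
#  'cost_change': {'alice': 4.0, 'bob': -2.0}}
```

In this toy run, Bob's counterfactual lowers his own cost by 2 but raises Alice's by 4 and worsens the combined score from 10 to 18. That is the kind of hidden information about a teammate's costs that an explanation would surface. The exhaustive search is chosen only for clarity; it does not scale beyond a handful of tasks.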
