Xingwei Wu

Effects of Augmented-Reality-Based Assisting Interfaces on Drivers' Object-wise Situational Awareness in Highly Autonomous Vehicles

Jun 06, 2022

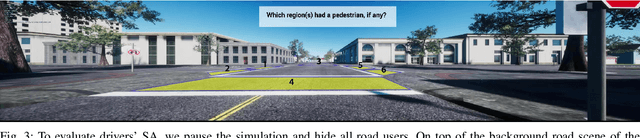

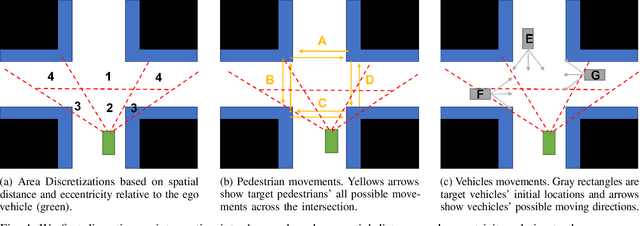

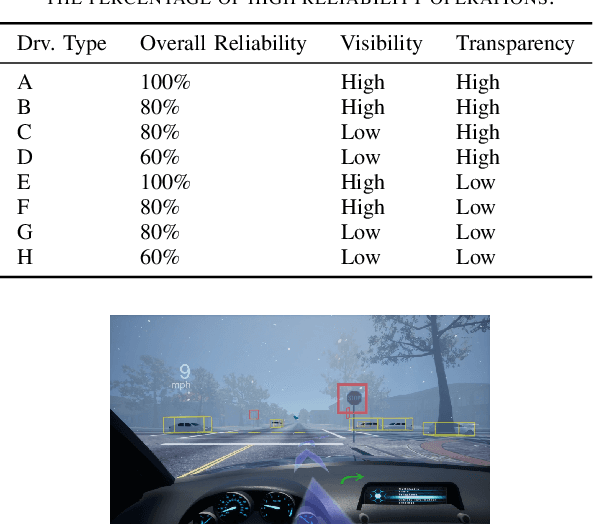

Abstract: Although partially autonomous driving (AD) systems are already available in production vehicles, drivers are still required to maintain a sufficient level of situational awareness (SA) while driving. Previous studies have shown that providing information about the AD system's capability through user interfaces can improve the driver's SA. However, displaying too much information increases the driver's workload and can distract or overwhelm the driver. Therefore, to design an efficient user interface (UI), it is necessary to understand its effect under different circumstances. In this paper, we focus on a UI based on augmented reality (AR), which can highlight potential hazards on the road. To understand the effect of highlighting on drivers' SA for objects of different types and locations under various traffic densities, we conducted an in-person experiment with 20 participants on a driving simulator. Our results show that the effects of highlighting on drivers' SA varied by traffic density, object location, and object type. We believe our study can provide guidance in selecting which objects to highlight in an AR-based driver-assistance interface to optimize SA for drivers who are driving and monitoring partially autonomous vehicles.

Clustering Human Trust Dynamics for Customized Real-time Prediction

Oct 09, 2021

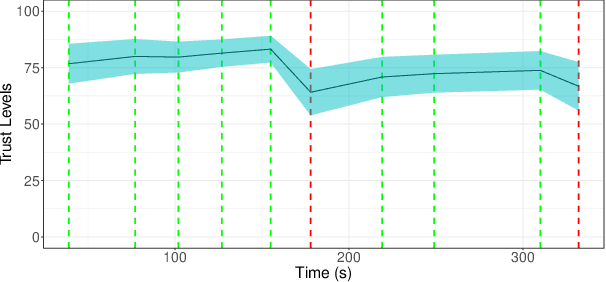

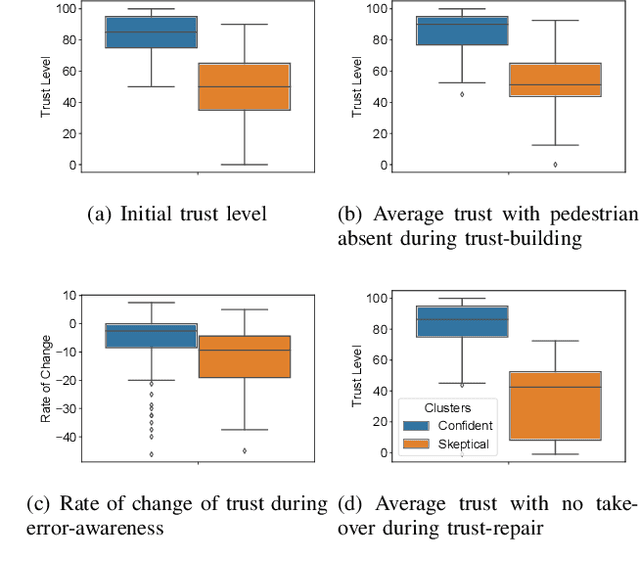

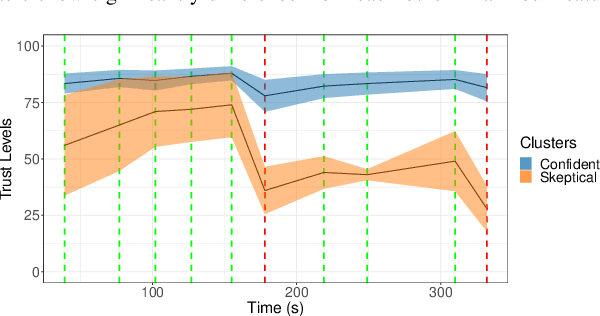

Abstract: Trust calibration is necessary to ensure appropriate user acceptance of advanced automation technologies. A significant challenge in achieving trust calibration is quantitatively estimating human trust in real time. Although multiple trust models exist, these models have limited predictive performance, partly due to individual differences in trust dynamics. A personalized model for each person can address this issue, but it requires a significant amount of data for each user. We present a methodology for developing customized models by clustering humans based on their trust dynamics. The clustering-based method addresses the individual differences in trust dynamics while requiring significantly less data than a personalized model. We show that our clustering-based customized models not only outperform the general model based on the entire population, but also outperform simple demographic factor-based customized models. Specifically, we propose that two models based on "confident" and "skeptical" groups of participants, respectively, can represent the trust behavior of the population. The "confident" participants, as compared to the "skeptical" participants, have higher initial trust levels, lose trust more slowly when they encounter low-reliability operations, and have higher trust levels during trust repair after the low-reliability operations. In summary, clustering-based customized models improve trust prediction performance for further trust calibration considerations.
