Anil Kumar

A Multitask Deep Learning Model for Classification and Regression of Hyperspectral Images: Application to the large-scale dataset

Jul 23, 2024

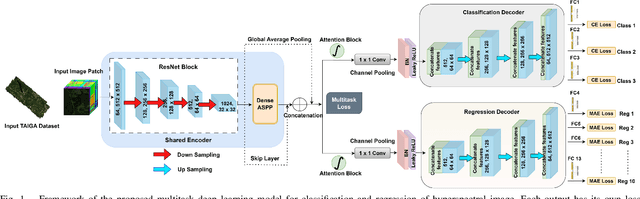

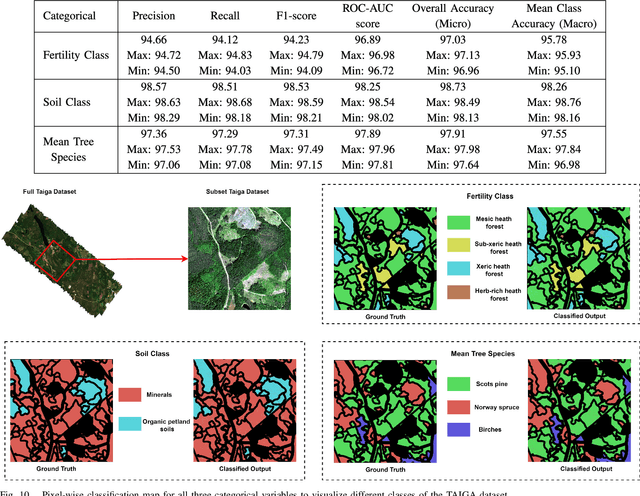

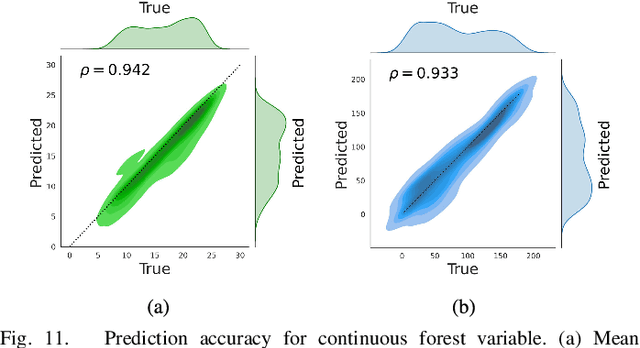

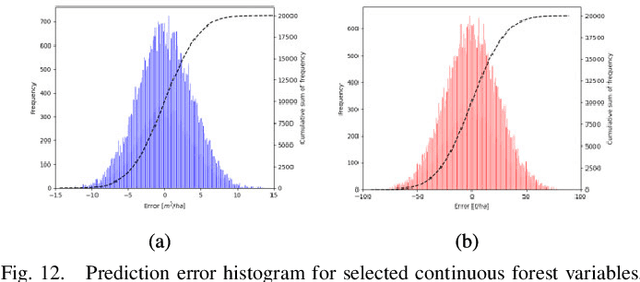

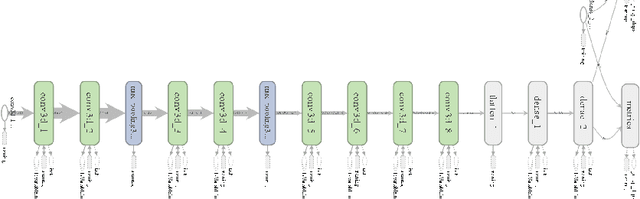

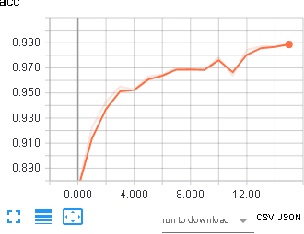

Abstract: Multitask learning is a widely recognized technique in computer vision and deep learning. However, it remains an open research question in remote sensing, particularly for hyperspectral imaging. Moreover, most research in the remote sensing domain focuses on small, single-task annotated datasets, which limits the generalizability and scalability of the developed models to more diverse and complex real-world scenarios. Thus, in this study, we propose a multitask deep learning model designed to perform multiple classification and regression tasks simultaneously on hyperspectral images. We validated our approach on a large hyperspectral dataset called TAIGA, which contains 13 forest variables: three categorical variables and ten continuous variables with different biophysical parameters. We design a shared encoder and task-specific decoder network to streamline feature learning while allowing each task-specific decoder to focus on the unique aspects of its respective task. Additionally, a dense atrous pyramid pooling layer and an attention network were integrated to extract multi-scale contextual information and enable selective information processing by prioritizing task-specific features. Further, we computed a multitask loss and optimized its parameters for the proposed framework to improve model performance and efficiency across diverse tasks. A comprehensive qualitative and quantitative analysis of the results shows that the proposed method significantly outperforms other state-of-the-art methods. We trained our model across 10 seeds/trials to ensure robustness. Our proposed model demonstrates higher mean performance while maintaining lower or equivalent variability. To make the work reproducible, the code will be available at https://github.com/Koushikey4596/Multitask-Deep-Learning-Model-for-Taiga-datatset.
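As a rough illustration of the multitask loss mentioned in the abstract, the sketch below combines cross-entropy terms for the classification tasks with squared-error terms for the regression tasks as a weighted sum. The weighted-sum form, the function names, the task weights, and the toy values are all assumptions made for illustration; the abstract does not state how the paper's loss is formulated or how its parameters are optimized.

```python
import math

def cross_entropy(probs, label):
    """Negative log-likelihood of the true class under predicted probabilities."""
    return -math.log(max(probs[label], 1e-12))

def squared_error(pred, target):
    """Squared error for one continuous variable."""
    return (pred - target) ** 2

def multitask_loss(cls_outputs, cls_labels, reg_outputs, reg_targets,
                   cls_weights, reg_weights):
    """Weighted sum of per-task losses (hypothetical weighting scheme)."""
    total = 0.0
    for probs, label, w in zip(cls_outputs, cls_labels, cls_weights):
        total += w * cross_entropy(probs, label)
    for pred, target, w in zip(reg_outputs, reg_targets, reg_weights):
        total += w * squared_error(pred, target)
    return total

# Toy single-pixel example: 3 categorical and 10 continuous variables,
# matching the task counts given for TAIGA. All values are made up.
cls_outputs = [[0.7, 0.2, 0.1], [0.5, 0.5], [0.1, 0.9]]
cls_labels = [0, 1, 1]
reg_outputs = [0.5] * 10
reg_targets = [0.4] * 10
loss = multitask_loss(cls_outputs, cls_labels, reg_outputs, reg_targets,
                      cls_weights=[1.0] * 3, reg_weights=[1.0] * 10)
```

In a trainable model the per-task weights would typically be tuned or learned rather than fixed at 1.0, which is one plausible reading of "optimized its parameters".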

Intelligent fault diagnosis of worm gearbox based on adaptive CNN using amended gorilla troop optimization with quantum gate mutation strategy

Mar 19, 2024

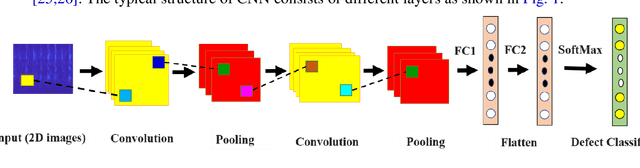

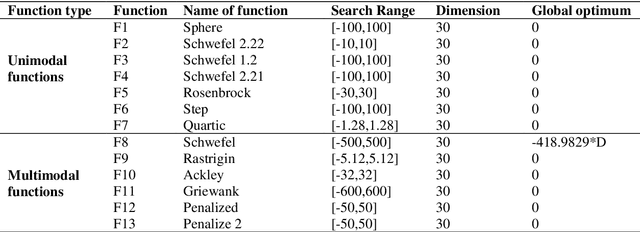

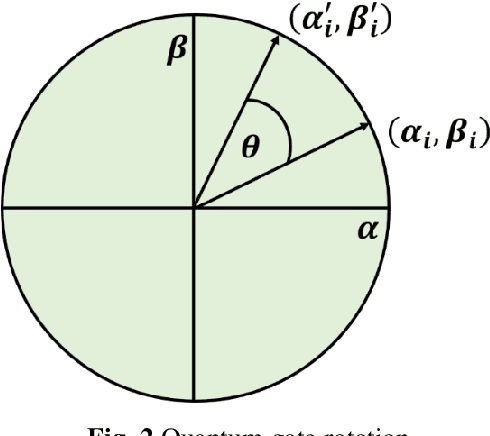

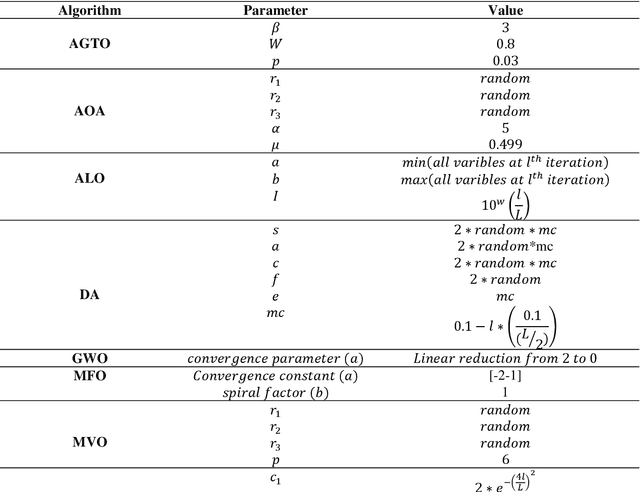

Abstract: The worm gearbox is a high-speed transmission system that plays a vital role in various industries. It is therefore necessary to develop a robust fault diagnosis scheme for worm gearboxes. Owing to advancements in sensor technology, researchers in academia and industry prefer deep learning models for fault diagnosis. The optimal selection of hyperparameters (HPs) of deep learning models plays a significant role in stable performance. Existing methods have mainly focused on manual tuning of these parameters, which is a troublesome process and sometimes leads to inaccurate results. Thus, exploring more sophisticated methods to optimize the HPs automatically is important. In this work, a novel optimization algorithm, amended gorilla troop optimization (AGTO), is proposed to make the convolutional neural network (CNN) adaptive in extracting the features that identify worm gearbox defects. Initially, the vibration and acoustic signals are converted into 2D images by the Morlet wavelet function. Then, the initial CNN model is developed by setting its hyperparameters. Further, the search space of each HP is identified and optimized by the developed AGTO algorithm. The classification accuracy of AGTO-CNN is evaluated and further validated by the confusion matrix. The performance of the developed model is also compared with other models. The AGTO algorithm is examined on twenty-three classical benchmark functions and with the Wilcoxon test, which demonstrate the effectiveness and dominance of the developed optimization algorithm. The results suggest that AGTO-CNN has the highest diagnostic accuracy and is stable and robust in diagnosing the worm gearbox.
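The signal-to-image step described in the abstract can be sketched as a continuous wavelet transform with a complex Morlet wavelet, whose magnitude forms a 2D time-frequency image (a scalogram). This is a minimal pure-Python sketch under stated assumptions: the centre parameter `w0 = 6`, the sampling rate, the frequency grid, and the test tone are illustrative choices, not values taken from the paper, and the direct summation is written for clarity rather than speed.

```python
import cmath
import math

def morlet(t, w0=6.0):
    """Complex Morlet wavelet evaluated at dimensionless time t."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-0.5 * t * t)

def scalogram(signal, fs, freqs, w0=6.0):
    """Return |CWT| as a 2D list: one row per frequency, one column per sample."""
    dt = 1.0 / fs
    image = []
    for f in freqs:
        scale = w0 / (2.0 * math.pi * f)  # scale whose centre frequency is f
        row = []
        for b in range(len(signal)):
            acc = 0j
            for n, x in enumerate(signal):
                acc += x * morlet((n - b) * dt / scale, w0).conjugate()
            row.append(abs(acc) * dt / math.sqrt(scale))
        image.append(row)
    return image

# Illustrative input: a 20 Hz tone sampled at 200 Hz for one second.
fs = 200.0
signal = [math.sin(2.0 * math.pi * 20.0 * n / fs) for n in range(200)]
freqs = [5.0, 10.0, 20.0, 40.0]
image = scalogram(signal, fs, freqs)
row_energy = [sum(v * v for v in row) for row in image]
```

For this tone the energy concentrates in the 20 Hz row of `image`; in a diagnosis pipeline each such image would be rescaled and passed to the CNN as input.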

Non-parametric Ensemble Empirical Mode Decomposition for extracting weak features to identify bearing defects

Oct 02, 2023

Abstract: A non-parametric complementary ensemble empirical mode decomposition (NPCEEMD) is proposed for identifying bearing defects using weak features. NPCEEMD is non-parametric because, unlike existing decomposition methods such as ensemble empirical mode decomposition, it does not require the ideal SNR of the noise and the number of ensembles to be defined each time a signal is processed. The simulation results show that mode mixing in NPCEEMD is lower than in existing decomposition methods. After in-depth simulation analysis, the proposed method is applied to experimental data. The proposed NPCEEMD method works in the following steps. First, the raw signal is obtained. Second, the obtained signal is decomposed. Then, the mutual information (MI) of the raw signal with the NPCEEMD-generated IMFs is computed. Further, IMFs with MI above 0.1 are selected and combined to form a resulting signal. Finally, the envelope spectrum of the resulting signal is computed to confirm the presence of a defect.
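The IMF selection step listed above can be sketched as follows. The decomposition itself is not implemented here: the IMFs are assumed to be given. The histogram-based MI estimator, the bin count, the use of nats, and the names `mutual_information` and `select_imfs` are assumptions for illustration; the abstract only states the 0.1 threshold, not how MI is estimated or normalized.

```python
import math
import random

def mutual_information(x, y, bins=8):
    """Histogram estimate of mutual information (in nats) between two sequences."""
    def to_bins(v):
        lo, hi = min(v), max(v)
        width = (hi - lo) / bins or 1.0  # guard against constant signals
        return [min(int((a - lo) / width), bins - 1) for a in v]
    bx, by = to_bins(x), to_bins(y)
    n = len(x)
    joint, px, py = {}, [0] * bins, [0] * bins
    for i, j in zip(bx, by):
        joint[(i, j)] = joint.get((i, j), 0) + 1
        px[i] += 1
        py[j] += 1
    return sum(c / n * math.log(c * n / (px[i] * py[j]))
               for (i, j), c in joint.items())

def select_imfs(raw, imfs, threshold=0.1):
    """Keep IMFs whose MI with the raw signal exceeds the threshold, then sum them."""
    scores = [mutual_information(raw, imf) for imf in imfs]
    keep = [k for k, s in enumerate(scores) if s > threshold]
    combined = [sum(imfs[k][n] for k in keep) for n in range(len(raw))]
    return keep, scores, combined

# Stand-in "IMFs": a dominant tone plus weak noise (not a real decomposition).
rng = random.Random(0)
tone = [math.sin(2.0 * math.pi * 0.013 * n) for n in range(2000)]
noise = [0.01 * rng.uniform(-1.0, 1.0) for _ in range(2000)]
raw = [a + b for a, b in zip(tone, noise)]
keep, scores, combined = select_imfs(raw, [tone, noise])
```

The `combined` signal would then go to the envelope-spectrum step, which is omitted here.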

Trust in Shared Automated Vehicles: Study on Two Mobility Platforms

Mar 17, 2023

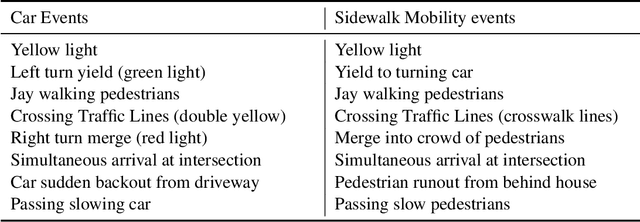

Abstract: The ever-increasing adoption of shared transportation modalities across the United States has the potential to fundamentally change the preferences for and usage of different mobilities. It also raises several challenges with respect to the design and development of automated mobilities that can enable a large population to take advantage of this emergent technology. One such challenge is the lack of understanding of how trust in one automated mobility may impact trust in another. Without this understanding, it is difficult for researchers to determine whether future mobility solutions will be accepted within different population groups. This study focuses on identifying the differences in trust across different mobilities and how trust evolves across their use for participants who preferred an aggressive driving style. A dual mobility simulator study was designed in which 48 participants experienced two different automated mobilities (car and sidewalk). The results show that participants reported increasing levels of trust when they transitioned from the car to the sidewalk mobility. In comparison, participants reported decreasing levels of trust when they transitioned from the sidewalk to the car mobility. The findings help identify how people can develop trust in future mobility platforms and could inform the design of interventions that may improve the trust in and acceptance of future mobility.

* https://trid.trb.org/view/2117834

Deep 3D Convolutional Neural Network for Automated Lung Cancer Diagnosis

May 04, 2019

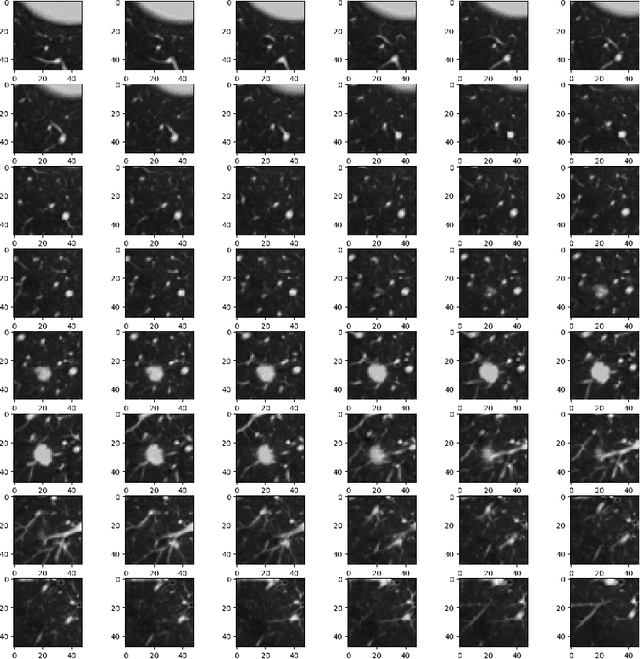

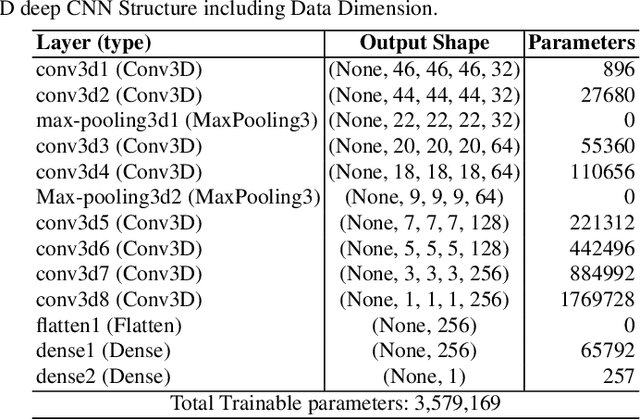

Abstract: Computer-aided diagnosis has emerged as an indispensable technique for validating the opinion of radiologists in CT interpretation. This paper presents a deep 3D Convolutional Neural Network (CNN) architecture for an automated CT scan-based lung cancer detection system. It utilizes three-dimensional spatial information to learn highly discriminative 3D features instead of 2D features such as texture or geometric shape, which need to be generated manually. The proposed deep learning method automatically extracts the 3D features on the basis of spatio-temporal statistics. The developed model is end-to-end and is able to predict the malignancy of each voxel for a given input scan. Simulation results demonstrate the effectiveness of the proposed 3D CNN for classification of lung nodules in spite of limited computational capabilities.
