Krishna Mohan Buddhiraju

A Multitask Deep Learning Model for Classification and Regression of Hyperspectral Images: Application to the large-scale dataset

Jul 23, 2024

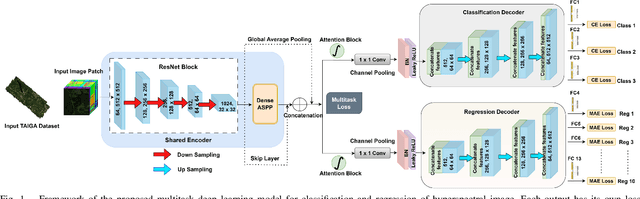

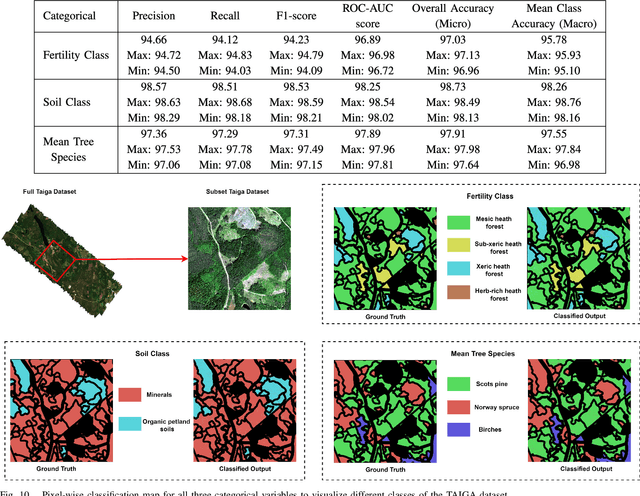

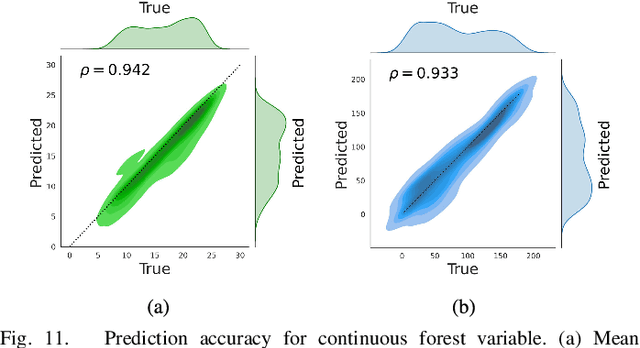

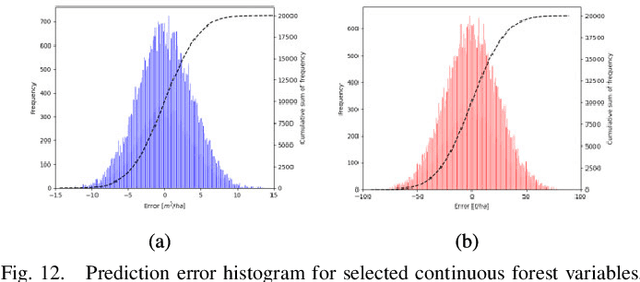

Abstract: Multitask learning is a widely recognized technique in computer vision and deep learning. However, it remains an open research question in remote sensing, particularly for hyperspectral imaging. Moreover, most research in the remote sensing domain focuses on small, single-task annotated datasets, which limits the generalizability and scalability of the resulting models to more diverse and complex real-world scenarios. In this study, we therefore propose a multitask deep learning model that performs multiple classification and regression tasks simultaneously on hyperspectral images. We validate our approach on a large hyperspectral dataset called TAIGA, which contains 13 forest variables: three categorical variables and ten continuous variables with different biophysical parameters. We design a shared encoder and task-specific decoders to streamline feature learning while allowing each decoder to focus on the unique aspects of its task. Additionally, a dense atrous pyramid pooling layer and an attention network are integrated to extract multi-scale contextual information and to enable selective information processing by prioritizing task-specific features. Further, we compute a multitask loss and optimize its parameters for the proposed framework to improve model performance and efficiency across diverse tasks. A comprehensive qualitative and quantitative analysis of the results shows that the proposed method significantly outperforms other state-of-the-art methods. We trained our model across 10 seeds/trials to ensure robustness; it demonstrates higher mean performance while maintaining lower or equivalent variability. To make the work reproducible, the code will be available at https://github.com/Koushikey4596/Multitask-Deep-Learning-Model-for-Taiga-datatset.
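The abstract does not spell out the form of the multitask loss, so the following is only a minimal sketch of one common choice: a weighted sum of per-task cross-entropy terms (classification) and squared-error terms (regression). The function names and the weights `cls_weights` and `reg_weights` are hypothetical and are not taken from the paper or its repository.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for a single sample, computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def squared_error(pred, target):
    """Squared error for a single regression output."""
    return (pred - target) ** 2


def multitask_loss(cls_logits, cls_labels, reg_preds, reg_targets,
                   cls_weights, reg_weights):
    """Weighted sum of per-task losses (assumed form, not the paper's).

    cls_logits:  one list of logits per classification task
    cls_labels:  one integer class label per classification task
    reg_preds:   one predicted value per regression task
    reg_targets: one target value per regression task
    """
    total = 0.0
    for w, logits, label in zip(cls_weights, cls_logits, cls_labels):
        total += w * cross_entropy(logits, label)
    for w, pred, target in zip(reg_weights, reg_preds, reg_targets):
        total += w * squared_error(pred, target)
    return total


# One classification task and one regression task with illustrative weights.
loss = multitask_loss(
    cls_logits=[[0.0, 0.0]], cls_labels=[0],
    reg_preds=[1.0], reg_targets=[3.0],
    cls_weights=[1.0], reg_weights=[0.5],
)
```

In a setup like the one described, each task-specific decoder would produce one of these outputs from the shared encoder's features, and the weights would be among the loss parameters that get tuned.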

LEt-SNE: A Hybrid Approach To Data Embedding and Visualization of Hyperspectral Imagery

Oct 19, 2019

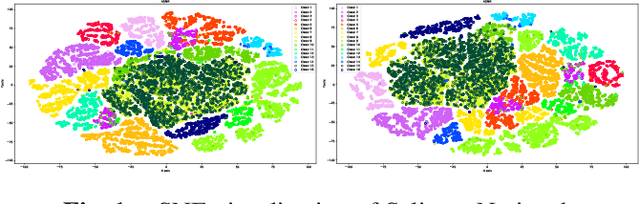

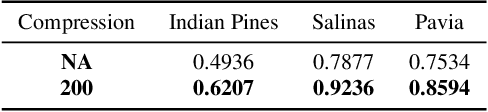

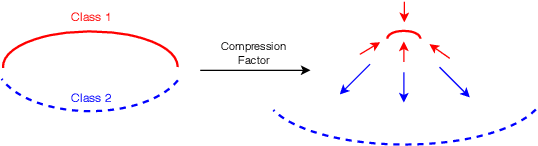

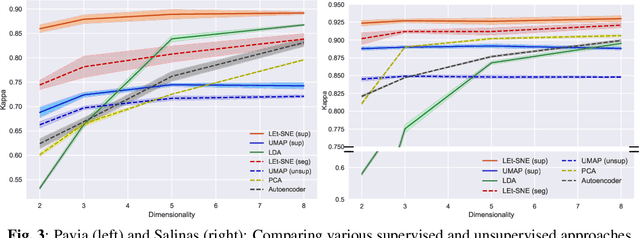

Abstract: Hyperspectral imagery (and remote sensing data in general) captured from UAVs or satellites is highly voluminous because of the large spatial extent and the number of wavelengths captured. Since analyzing these images requires a huge amount of computational time and power, various dimensionality reduction techniques have been used for feature reduction. Some popular techniques falter when applied to hyperspectral imagery because of the well-known curse of dimensionality. In this paper, we propose a novel approach, LEt-SNE, which combines graph-based algorithms such as t-SNE and Laplacian Eigenmaps into a model parameterized by a shallow feedforward network. We introduce a new term, Compression Factor, that enables our method to combat the curse of dimensionality. The proposed algorithm is suitable for manifold visualization and sample clustering with labelled or unlabelled data. We demonstrate that our method is competitive with current state-of-the-art methods on publicly available hyperspectral remote sensing datasets.
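For orientation, the two standard ingredients named in the abstract can be sketched as follows: the Student-t similarity that t-SNE uses in the embedding space, and the Laplacian Eigenmaps objective over a weighted graph. This is not the LEt-SNE algorithm itself. How the paper combines these terms, how the feedforward network produces the embedding, and how the Compression Factor enters are not stated in the abstract and are deliberately left out. The function names and the toy inputs are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def student_t_similarities(Y):
    """t-SNE low-dimensional similarities q_ij.

    q_ij is proportional to 1 / (1 + ||y_i - y_j||^2), with q_ii = 0,
    normalized so that all entries sum to 1.
    """
    n = len(Y)
    kernel = [
        [0.0 if i == j else 1.0 / (1.0 + sq_dist(Y[i], Y[j]))
         for j in range(n)]
        for i in range(n)
    ]
    total = sum(sum(row) for row in kernel)
    return [[k / total for k in row] for row in kernel]


def laplacian_eigenmaps_objective(Y, W):
    """Laplacian Eigenmaps cost: 0.5 * sum_ij W_ij * ||y_i - y_j||^2."""
    n = len(Y)
    return 0.5 * sum(
        W[i][j] * sq_dist(Y[i], Y[j])
        for i in range(n) for j in range(n)
    )


# Toy embedding of two points joined by a single graph edge of weight 1.
Y = [[0.0, 0.0], [1.0, 0.0]]
W = [[0.0, 1.0], [1.0, 0.0]]
Q = student_t_similarities(Y)
cost = laplacian_eigenmaps_objective(Y, W)
```

Both quantities depend only on pairwise distances in the embedding, which is what makes it natural to optimize them through a single parametric mapping.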
