Neera Jain

Trust in Shared Automated Vehicles: Study on Two Mobility Platforms

Mar 17, 2023

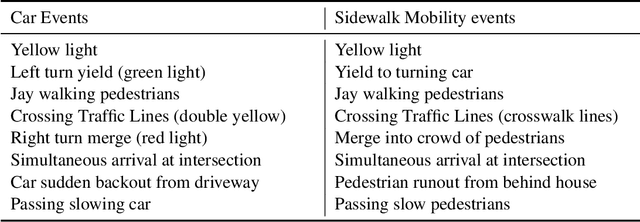

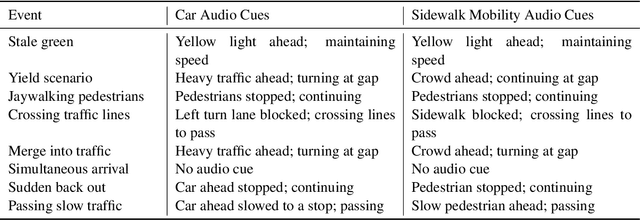

Abstract: The ever-increasing adoption of shared transportation modalities across the United States has the potential to fundamentally change people's preferences for, and usage of, different mobilities. It also raises several challenges for the design and development of automated mobilities that can enable a large population to take advantage of this emerging technology. One such challenge is the lack of understanding of how trust in one automated mobility may affect trust in another. Without this understanding, it is difficult for researchers to determine whether future mobility solutions will be accepted by different population groups. This study focuses on identifying differences in trust across mobilities, and on how trust evolves across their use, for participants who preferred an aggressive driving style. A dual mobility simulator study was designed in which 48 participants experienced two different automated mobilities (car and sidewalk). The results showed that participants' trust increased when they transitioned from the car to the sidewalk mobility. In contrast, participants' trust decreased when they transitioned from the sidewalk to the car mobility. The findings help identify how people develop trust in future mobility platforms and could inform the design of interventions that improve the trust and acceptance of future mobilities.

* https://trid.trb.org/view/2117834

Toward Adaptive Trust Calibration for Level 2 Driving Automation

Sep 24, 2020

Abstract: Properly calibrated human trust is essential for successful interaction between humans and automation. However, while human trust calibration can be improved by increasing automation transparency, too much transparency can overwhelm the human by increasing workload. To address this tradeoff, we present a probabilistic framework using a partially observable Markov decision process (POMDP) to model the coupled trust-workload dynamics of human behavior in an action-automation context. We specifically consider hands-off Level 2 driving automation in a city environment involving multiple intersections, where the human chooses whether or not to rely on the automation. We consider automation reliability, automation transparency, and scene complexity, along with human reliance and eye-gaze behavior, to model the dynamics of human trust and workload. We demonstrate that our framework can appropriately vary automation transparency based on real-time belief estimates of human trust and workload to achieve trust calibration.
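To make the "belief estimates" in a POMDP concrete, here is a minimal sketch of the standard discrete Bayes-filter belief update over a joint (trust, workload) state, written in Python. Everything specific below is a hypothetical placeholder rather than the authors' model: the two-level trust and workload states, the two transparency actions (`"low_transparency"`, `"high_transparency"`), the use of reliance as the only observation (the abstract also lists eye gaze), and all probabilities. For brevity the sketch also factors trust and workload independently, whereas the paper models them as coupled.

```python
from itertools import product

# Hypothetical discrete model (placeholders, NOT the authors' parameters):
# hidden state = (trust, workload), action = transparency level,
# observation = whether the human relied on the automation.
TRUST_LEVELS = ("low", "high")
WORKLOAD_LEVELS = ("low", "high")
STATES = list(product(TRUST_LEVELS, WORKLOAD_LEVELS))


def transition(s, a, s_next):
    """Hypothetical P(s_next | s, a). Trust and workload are factored
    independently here for brevity; the paper models them as coupled."""
    trust, _workload = s
    trust_next, workload_next = s_next
    # Illustrative only: more transparency raises both trust and workload.
    p_trust_high = 0.7 if a == "high_transparency" else 0.5
    if trust == "high":
        p_trust_high = min(1.0, p_trust_high + 0.2)  # trust is somewhat persistent
    p_work_high = 0.6 if a == "high_transparency" else 0.3
    p_t = p_trust_high if trust_next == "high" else 1.0 - p_trust_high
    p_w = p_work_high if workload_next == "high" else 1.0 - p_work_high
    return p_t * p_w


def observation(o, s_next, a):
    """Hypothetical P(o | s_next, a): here reliance depends only on trust."""
    trust_next, _ = s_next
    p_rely = 0.85 if trust_next == "high" else 0.3
    return p_rely if o == "rely" else 1.0 - p_rely


def belief_update(belief, a, o):
    """Discrete POMDP Bayes filter:
    b'(s') is proportional to O(o | s', a) * sum_s T(s' | s, a) * b(s)."""
    unnormalized = {}
    for s_next in STATES:
        predicted = sum(transition(s, a, s_next) * belief[s] for s in STATES)
        unnormalized[s_next] = observation(o, s_next, a) * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s: p / total for s, p in unnormalized.items()}


# Usage: start from a uniform belief, show high transparency, observe reliance.
b0 = {s: 1.0 / len(STATES) for s in STATES}
b1 = belief_update(b0, "high_transparency", "rely")
p_trust_high = sum(p for (trust, _), p in b1.items() if trust == "high")
print(round(p_trust_high, 3))  # 0.919
```

Observing reliance shifts probability mass toward high trust, while the workload marginal is unchanged because, in this simplified sketch, the observation carries no workload information; adding an eye-gaze observation would be the natural place to let workload be inferred as well.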

Human Trust-based Feedback Control: Dynamically varying automation transparency to optimize human-machine interactions

Jun 29, 2020

Abstract: Human trust in automation plays an essential role in interactions between humans and automation. While a lack of trust can lead to a human's disuse of automation, over-trust can result in a human trusting a faulty autonomous system, which could have negative consequences for the human. Therefore, human trust should be calibrated to optimize human-machine interactions with respect to context-specific performance objectives. In this article, we present a probabilistic framework to model and calibrate a human's trust and workload dynamics during their interaction with an intelligent decision-aid system. This calibration is achieved by varying the automation's transparency, that is, the amount and utility of information provided to the human. The model is parameterized using behavioral data collected through human-subject experiments, and three feedback control policies are experimentally validated and compared against a non-adaptive decision-aid system. The results show that human-automation team performance can be optimized when transparency is dynamically updated based on the proposed control policy. This framework is a first step toward the widespread design and implementation of real-time adaptive automation for human-machine interactions.
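The idea of choosing transparency from a trust-workload belief can be illustrated with a deliberately simple stand-in: a myopic (one-step greedy) policy that picks the transparency level with the highest expected immediate reward under the current belief. This is not one of the three policies evaluated in the article, which are not described here; the states, action names, and reward values below are hypothetical placeholders chosen only to show the mechanics.

```python
# Hypothetical (trust, workload) states and transparency actions; placeholders only.
STATES = [("low", "low"), ("low", "high"), ("high", "low"), ("high", "high")]
ACTIONS = ("low_transparency", "high_transparency")

# Hypothetical immediate reward R(s, a): extra information pays off when trust is
# low and workload has headroom; less information pays off when the human is
# overloaded or already trusts the aid. Values are illustrative, not fitted to data.
REWARD = {
    ("low", "low"): {"low_transparency": 0.0, "high_transparency": 1.0},
    ("low", "high"): {"low_transparency": 0.5, "high_transparency": 0.3},
    ("high", "low"): {"low_transparency": 0.8, "high_transparency": 0.6},
    ("high", "high"): {"low_transparency": 1.0, "high_transparency": 0.0},
}


def expected_reward(belief, action):
    """Expected immediate reward of an action under a belief over STATES."""
    return sum(p * REWARD[s][action] for s, p in belief.items())


def choose_transparency(belief):
    """Myopic policy: argmax over actions of expected immediate reward."""
    return max(ACTIONS, key=lambda a: expected_reward(belief, a))


# Usage: a belief concentrated on low trust and low workload.
belief = {("low", "low"): 0.7, ("low", "high"): 0.1,
          ("high", "low"): 0.1, ("high", "high"): 0.1}
print(choose_transparency(belief))  # high_transparency
```

A myopic rule like this ignores how today's transparency shapes future trust and workload; a full POMDP solution instead optimizes expected cumulative reward over the belief dynamics, which is the more appropriate tool when those long-run effects matter.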
