Kiriakos N. Kutulakos

Generating the Past, Present and Future from a Motion-Blurred Image

Dec 22, 2025

Abstract: We seek to answer the question: what can a motion-blurred image reveal about a scene's past, present, and future? Although motion blur obscures image details and degrades visual quality, it also encodes information about scene and camera motion during an exposure. Previous techniques leverage this information to estimate a sharp image from an input blurry one, or to predict a sequence of video frames showing what might have occurred at the moment of image capture. However, they rely on handcrafted priors or network architectures to resolve ambiguities in this inverse problem, and do not incorporate image and video priors on large-scale datasets. As such, existing methods struggle to reproduce complex scene dynamics and do not attempt to recover what occurred before or after an image was taken. Here, we introduce a new technique that repurposes a pre-trained video diffusion model trained on internet-scale datasets to recover videos revealing complex scene dynamics during the moment of capture and what might have occurred immediately into the past or future. Our approach is robust and versatile; it outperforms previous methods for this task, generalizes to challenging in-the-wild images, and supports downstream tasks such as recovering camera trajectories, object motion, and dynamic 3D scene structure. Code and data are available at https://blur2vid.github.io

Nexels: Neurally-Textured Surfels for Real-Time Novel View Synthesis with Sparse Geometries

Dec 15, 2025

Abstract: Though Gaussian splatting has achieved impressive results in novel view synthesis, it requires millions of primitives to model highly textured scenes, even when the geometry of the scene is simple. We propose a representation that goes beyond point-based rendering and decouples geometry and appearance in order to achieve a compact representation. We use surfels for geometry and a combination of a global neural field and per-primitive colours for appearance. The neural field textures a fixed number of primitives for each pixel, ensuring that the added compute is low. Our representation matches the perceptual quality of 3D Gaussian splatting while using $9.7\times$ fewer primitives and $5.5\times$ less memory on outdoor scenes and using $31\times$ fewer primitives and $3.7\times$ less memory on indoor scenes. Our representation also renders twice as fast as existing textured primitives while improving upon their visual quality.

Super Resolved Imaging with Adaptive Optics

Aug 06, 2025

Abstract: Astronomical telescopes suffer from a tradeoff between field of view (FoV) and image resolution: increasing the FoV leads to an optical field that is under-sampled by the science camera. This work presents a novel computational imaging approach to overcome this tradeoff by leveraging the existing adaptive optics (AO) systems in modern ground-based telescopes. Our key idea is to use the AO system's deformable mirror to apply a series of learned, precisely controlled distortions to the optical wavefront, producing a sequence of images that exhibit distinct, high-frequency, sub-pixel shifts. These images can then be jointly upsampled to yield the final super-resolved image. Crucially, we show this can be done while simultaneously maintaining the core AO operation--correcting for the unknown and rapidly changing wavefront distortions caused by Earth's atmosphere. To achieve this, we incorporate end-to-end optimization of both the induced mirror distortions and the upsampling algorithm, such that telescope-specific optics and temporal statistics of atmospheric wavefront distortions are accounted for. Our experimental results with a hardware prototype, as well as simulations, demonstrate significant SNR improvements of up to 12 dB over non-AO super-resolution baselines, using only existing telescope optics and no hardware modifications. Moreover, by using a precise bench-top replica of a complete telescope and AO system, we show that our methodology can be readily transferred to an operational telescope. Project webpage: https://www.cs.toronto.edu/~robin/aosr/

Coherent Optical Modems for Full-Wavefield Lidar

Jun 12, 2024

Abstract: The advent of the digital age has driven the development of coherent optical modems -- devices that modulate the amplitude and phase of light in multiple polarization states. These modems transmit data through fiber optic cables that are thousands of kilometers in length at data rates exceeding one terabit per second. This remarkable technology is made possible through near-THz-rate programmable control and sensing of the full optical wavefield. While coherent optical modems form the backbone of telecommunications networks around the world, their extraordinary capabilities also provide unique opportunities for imaging. Here, we introduce full-wavefield lidar: a new imaging modality that repurposes off-the-shelf coherent optical modems to simultaneously measure distance, axial velocity, and polarization. We demonstrate this modality by combining a 74 GHz-bandwidth coherent optical modem with free-space coupling optics and scanning mirrors. We develop a time-resolved image formation model for this system and formulate a maximum-likelihood reconstruction algorithm to recover depth, velocity, and polarization information at each scene point from the modem's raw transmitted and received symbols. Compared to existing lidars, full-wavefield lidar promises improved mm-scale ranging accuracy from brief, microsecond exposure times, reliable velocimetry, and robustness to interference from ambient light or other lidar signals.

Flying with Photons: Rendering Novel Views of Propagating Light

Apr 10, 2024

Abstract: We present an imaging and neural rendering technique that seeks to synthesize videos of light propagating through a scene from novel, moving camera viewpoints. Our approach relies on a new ultrafast imaging setup to capture a first-of-its-kind, multi-viewpoint video dataset with picosecond-level temporal resolution. Combined with this dataset, we introduce an efficient neural volume rendering framework based on the transient field. This field is defined as a mapping from a 3D point and 2D direction to a high-dimensional, discrete-time signal that represents time-varying radiance at ultrafast timescales. Rendering with transient fields naturally accounts for effects due to the finite speed of light, including viewpoint-dependent appearance changes caused by light propagation delays to the camera. We render a range of complex effects, including scattering, specular reflection, refraction, and diffraction. Additionally, we demonstrate removing viewpoint-dependent propagation delays using a time warping procedure, rendering of relativistic effects, and video synthesis of direct and global components of light transport.

Learning Lens Blur Fields

Oct 17, 2023

Abstract: Optical blur is an inherent property of any lens system and is challenging to model in modern cameras because of their complex optical elements. To tackle this challenge, we introduce a high-dimensional neural representation of blur (the lens blur field) and a practical method for acquiring it. The lens blur field is a multilayer perceptron (MLP) designed to (1) accurately capture variations of the lens 2D point spread function over image plane location, focus setting and, optionally, depth and (2) represent these variations parametrically as a single, sensor-specific function. The representation models the combined effects of defocus, diffraction, and aberration, and accounts for sensor features such as pixel color filters and pixel-specific micro-lenses. To learn the real-world blur field of a given device, we formulate a generalized non-blind deconvolution problem that directly optimizes the MLP weights using a small set of focal stacks as the only input. We also provide a first-of-its-kind dataset of 5D blur fields for smartphone cameras, camera bodies equipped with a variety of lenses, etc. Lastly, we show that acquired 5D blur fields are expressive and accurate enough to reveal, for the first time, differences in optical behavior of smartphone devices of the same make and model.

Transient Neural Radiance Fields for Lidar View Synthesis and 3D Reconstruction

Jul 14, 2023

Abstract: Neural radiance fields (NeRFs) have become a ubiquitous tool for modeling scene appearance and geometry from multiview imagery. Recent work has also begun to explore how to use additional supervision from lidar or depth sensor measurements in the NeRF framework. However, previous lidar-supervised NeRFs focus on rendering conventional camera imagery and use lidar-derived point cloud data as auxiliary supervision; thus, they fail to incorporate the underlying image formation model of the lidar. Here, we propose a novel method for rendering transient NeRFs that take as input the raw, time-resolved photon count histograms measured by a single-photon lidar system, and we seek to render such histograms from novel views. Different from conventional NeRFs, the approach relies on a time-resolved version of the volume rendering equation to render the lidar measurements and capture transient light transport phenomena at picosecond timescales. We evaluate our method on a first-of-its-kind dataset of simulated and captured transient multiview scans from a prototype single-photon lidar. Overall, our work brings NeRFs to a new dimension of imaging at transient timescales, newly enabling rendering of transient imagery from novel views. Additionally, we show that our approach recovers improved geometry and conventional appearance compared to point cloud-based supervision when training on few input viewpoints. Transient NeRFs may be especially useful for applications which seek to simulate raw lidar measurements for downstream tasks in autonomous driving, robotics, and remote sensing.

A Neural Rendering Framework for Free-Viewpoint Relighting

Nov 26, 2019

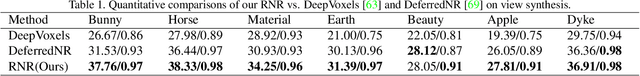

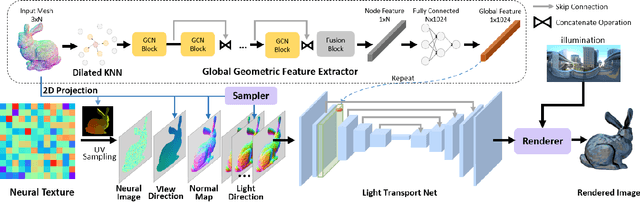

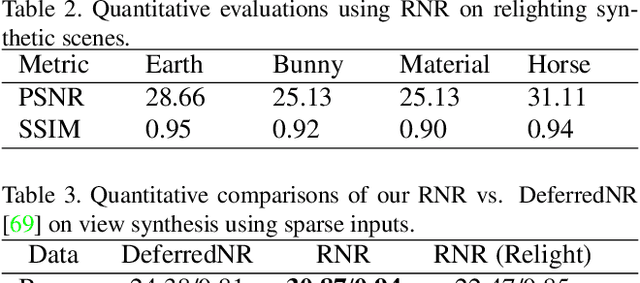

Abstract: We present a novel Relightable Neural Renderer (RNR) for simultaneous view synthesis and relighting using multi-view image inputs. Existing neural rendering (NR) does not explicitly model the physical rendering process and hence has limited capabilities on relighting. RNR instead models image formation in terms of environment lighting, object intrinsic attributes, and the light transport function (LTF), each corresponding to a learnable component. In particular, the incorporation of a physically based rendering process not only enables relighting but also improves the quality of novel view synthesis. Comprehensive experiments on synthetic and real data show that RNR provides a practical and effective solution for conducting free-viewpoint relighting.
