Keisuke Nonaka

Graph-based Scalable Sampling of 3D Point Cloud Attributes

Oct 01, 2024

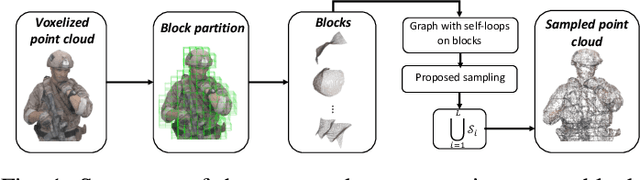

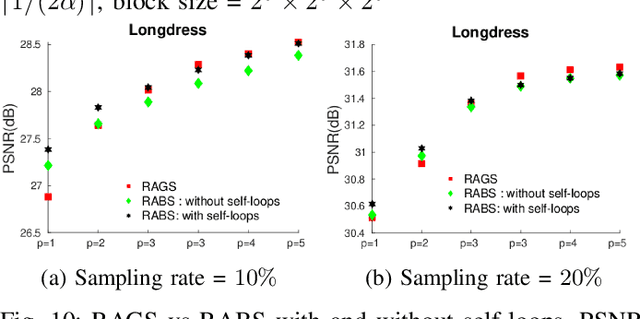

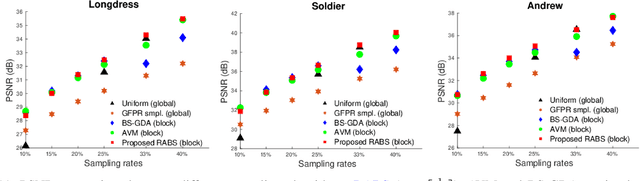

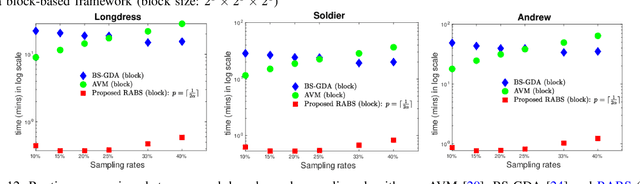

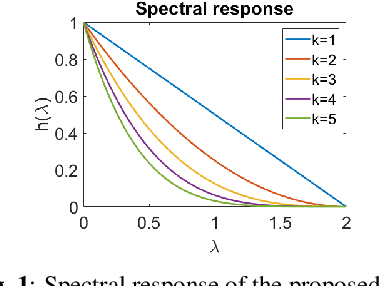

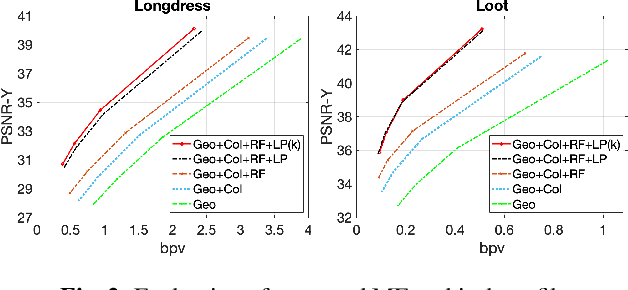

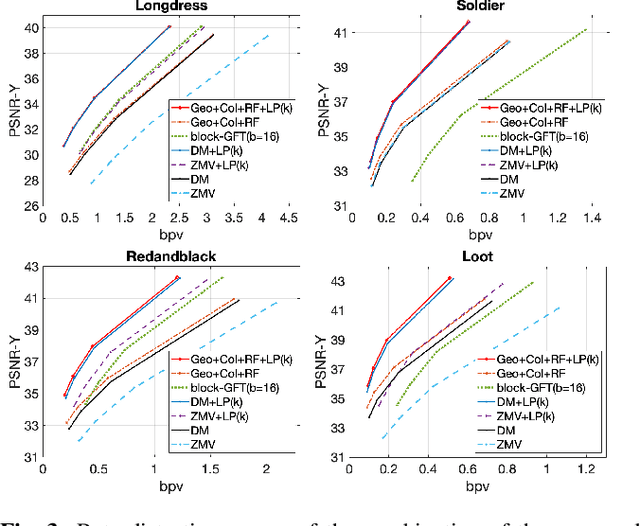

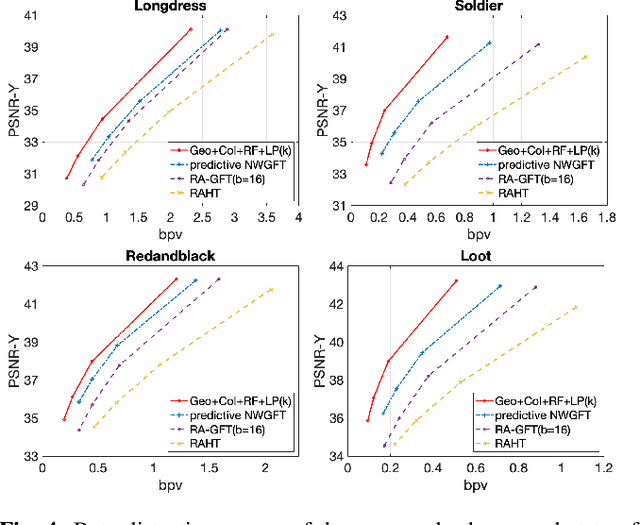

Abstract: 3D point clouds (PCs) are commonly used to represent 3D scenes. They can have millions of points, making downstream tasks such as compression and streaming computationally expensive. PC sampling (selecting a subset of points) can be used to reduce complexity. Existing PC sampling algorithms focus on preserving geometry features and often do not scale to large PCs. In this work, we develop scalable graph-based sampling algorithms for PC color attributes, assuming the full geometry is available. Our sampling algorithms are optimized for a signal reconstruction method that minimizes the graph Laplacian quadratic form. We first develop a global sampling algorithm that can be applied to PCs with millions of points by exploiting sparsity and sampling-rate-adaptive parameter selection. Further, we propose a block-based sampling strategy where each block is sampled independently. We show that sampling the corresponding sub-graphs with optimally chosen self-loop weights (node weights) will produce a sampling set that approximates the results of global sampling while reducing complexity by an order of magnitude. Our empirical results on two large PC datasets show that our algorithms outperform the existing fast PC subsampling techniques (uniform and geometry-feature-preserving random sampling) by 2 dB. Our algorithm is up to 50 times faster than existing graph signal sampling algorithms while providing better reconstruction accuracy. Finally, we illustrate the efficacy of PC attribute sampling within a compression scenario, showing that pre-compression sampling of PC attributes can lower the bitrate by 11% while having minimal effect on reconstruction.
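
To make the reconstruction criterion concrete, here is a minimal sketch (not the authors' implementation) of recovering a graph signal from a few samples by minimizing the graph Laplacian quadratic form with the sampled values held fixed. The minimizer is harmonic on the unsampled nodes, so each unsampled value equals the weighted average of its neighbors; the sketch reaches that solution with simple Gauss-Seidel sweeps. The function name `reconstruct`, the dictionary-based graph layout, and the iteration count are illustrative assumptions, and the graph is assumed to be connected with positive weights.

```python
def reconstruct(adj, samples, iters=500):
    """Fill in unsampled node values by minimizing x^T L x with samples fixed.

    adj:     dict node -> dict neighbor -> positive weight (symmetric)
    samples: dict node -> known value at the sampled nodes
    Assumes a connected graph, so every unsampled node has neighbors.
    """
    # Start unsampled nodes at the mean of the known samples.
    mean = sum(samples.values()) / len(samples)
    x = {v: samples.get(v, mean) for v in adj}
    for _ in range(iters):
        for v in adj:
            if v in samples:
                continue  # sampled values stay fixed
            total_w = sum(adj[v].values())
            # Stationarity condition of x^T L x: weighted neighbor average.
            x[v] = sum(w * x[u] for u, w in adj[v].items()) / total_w
    return x


# Toy example: an unweighted path graph with only the two endpoints sampled.
path = {i: {} for i in range(5)}
for i in range(4):
    path[i][i + 1] = 1.0
    path[i + 1][i] = 1.0

recovered = reconstruct(path, {0: 0.0, 4: 4.0})
print([round(recovered[i], 6) for i in range(5)])
```

On this path graph the result is a linear interpolation between the two sampled endpoints. For point clouds with millions of points a sparse linear solver would replace the sweeps; the sketch only illustrates the objective.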

Full reference point cloud quality assessment using support vector regression

Jun 15, 2024

Abstract: Point clouds are a general format for representing realistic 3D objects in diverse 3D applications. Since point clouds have large data sizes, developing efficient point cloud compression methods is crucial. However, excessive compression leads to various distortions, which deteriorate the point cloud quality perceived by end users. Thus, establishing reliable point cloud quality assessment (PCQA) methods is essential as a benchmark to develop efficient compression methods. This paper presents an accurate full-reference point cloud quality assessment (FR-PCQA) method called full-reference quality assessment using support vector regression (FRSVR) for various types of degradations such as compression distortion, Gaussian noise, and down-sampling. The proposed method demonstrates accurate PCQA by integrating five FR-based metrics covering various types of errors (e.g., geometric distortion, color distortion, and point count) using support vector regression (SVR). Moreover, the proposed method achieves a superior trade-off between accuracy and calculation speed because it requires only the calculation of these five simple metrics and SVR, which can perform fast prediction. Experimental results with three types of open datasets show that the proposed method is more accurate than conventional FR-PCQA methods. In addition, the proposed method is faster than state-of-the-art methods that utilize complicated features such as curvature and multi-scale features. Thus, the proposed method provides excellent performance in terms of both PCQA accuracy and processing speed. Our method is available from https://github.com/STAC-USC/FRSVR-PCQA.

Fast graph-based denoising for point cloud color information

Jan 19, 2024

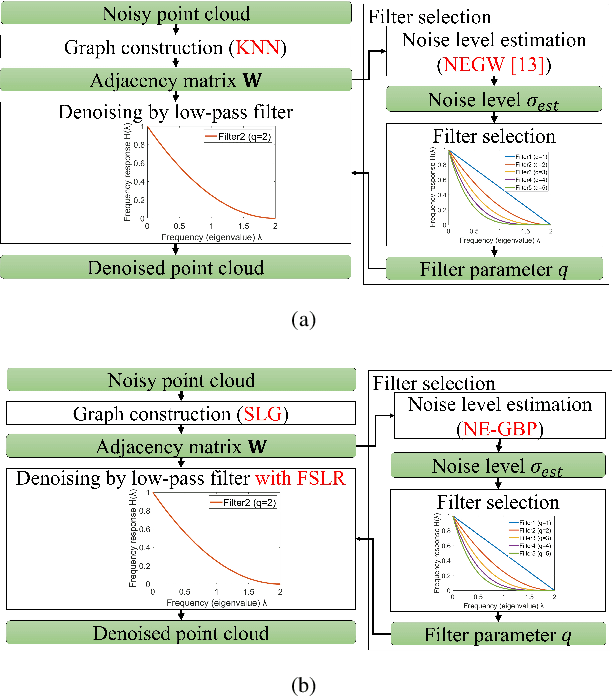

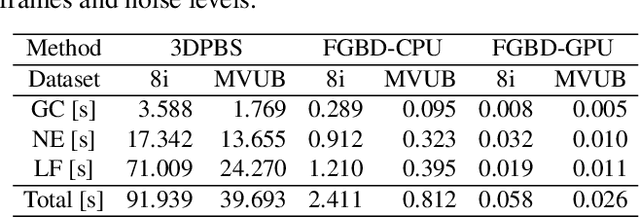

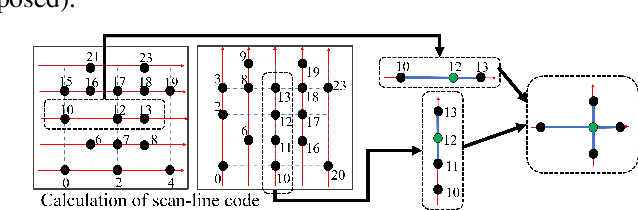

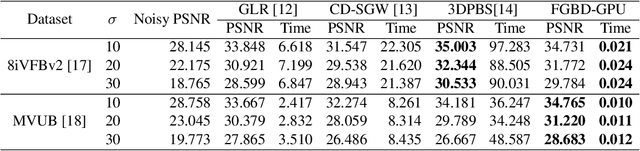

Abstract: Point clouds are utilized in various 3D applications such as cross-reality (XR) and realistic 3D displays. In some applications, e.g., live streaming using a 3D point cloud, real-time point cloud denoising methods are required to enhance the visual quality. However, conventional high-precision denoising methods cannot be executed in real time for large-scale point clouds owing to the complexity of graph construction with K nearest neighbors and noise level estimation. This paper proposes a fast graph-based denoising (FGBD) method for large-scale point clouds. First, high-speed graph construction is achieved by scanning a point cloud in various directions and searching adjacent neighborhoods on the scanning lines. Second, we propose a fast noise level estimation method using eigenvalues of the covariance matrix on a graph. Finally, we propose a new low-cost filter selection method that enhances denoising accuracy to compensate for the degradation caused by the acceleration algorithms. In our experiments, we succeeded in reducing the processing time dramatically while maintaining accuracy relative to conventional denoising methods. Denoising was performed at 30 fps, with frames containing approximately 1 million points.

SCP: Spherical-Coordinate-based Learned Point Cloud Compression

Aug 24, 2023

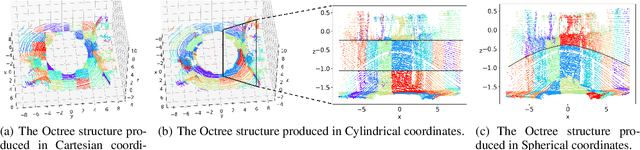

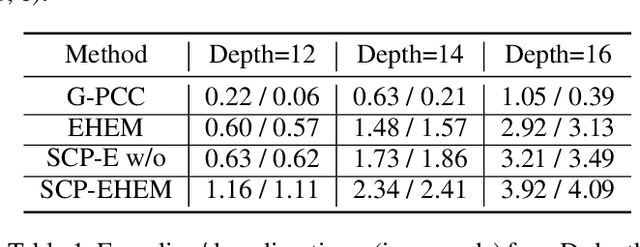

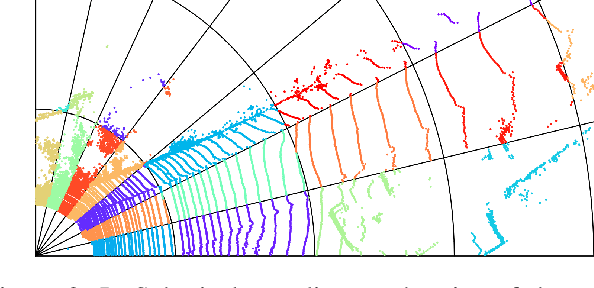

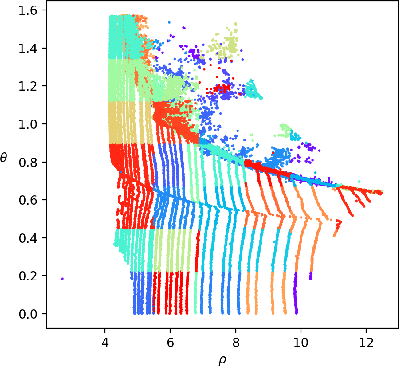

Abstract: In recent years, the task of learned point cloud compression has gained prominence. An important type of point cloud, the spinning LiDAR point cloud, is generated by spinning LiDAR on vehicles. This process results in numerous circular shapes and azimuthal angle invariance features within the point clouds. However, these two features have been largely overlooked by previous methodologies. In this paper, we introduce a model-agnostic method called Spherical-Coordinate-based learned Point cloud compression (SCP), designed to fully leverage these features. Additionally, we propose a multi-level Octree for SCP to mitigate the reconstruction error for distant areas within the Spherical-coordinate-based Octree. SCP exhibits excellent universality, making it applicable to various learned point cloud compression techniques. Experimental results demonstrate that SCP surpasses previous state-of-the-art methods by up to 29.14% in point-to-point PSNR BD-Rate.
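
As a small illustration of why spherical coordinates suit spinning LiDAR data, the sketch below converts Cartesian points to (radius, azimuth, polar angle) and back. It is not taken from the SCP code: the function names and the angle convention (azimuth measured in the x-y plane, polar angle measured from the +z axis) are assumptions made for this example. Points lying on one circular scan ring around the sensor share the same radius and polar angle and differ only in azimuth.

```python
import math


def cartesian_to_spherical(x, y, z):
    """Return (radius, azimuth, polar) for a point, with the sensor at the origin."""
    r = math.sqrt(x * x + y * y + z * z)
    azimuth = math.atan2(y, x)  # angle in the x-y plane, in (-pi, pi]
    # Clamp guards against tiny floating-point overshoot outside [-1, 1].
    polar = math.acos(max(-1.0, min(1.0, z / r))) if r > 0 else 0.0
    return r, azimuth, polar


def spherical_to_cartesian(r, azimuth, polar):
    """Inverse of cartesian_to_spherical under the same angle convention."""
    x = r * math.sin(polar) * math.cos(azimuth)
    y = r * math.sin(polar) * math.sin(azimuth)
    z = r * math.cos(polar)
    return x, y, z


# Hypothetical scan ring: 8 points on a circle of radius 10 at height -2.
ring = [(10 * math.cos(k * math.pi / 4), 10 * math.sin(k * math.pi / 4), -2.0)
        for k in range(8)]
for point in ring:
    r, azimuth, polar = cartesian_to_spherical(*point)
    print(f"r={r:.4f} azimuth={azimuth:+.4f} polar={polar:.4f}")
```

In the printed output only the azimuth column varies, which is the kind of redundancy a spherical-coordinate representation exposes.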

Motion estimation and filtered prediction for dynamic point cloud attribute compression

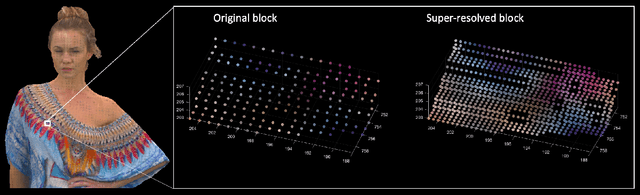

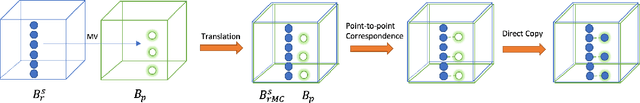

Oct 15, 2022

Abstract: In point cloud compression, exploiting temporal redundancy for inter-predictive coding is challenging because of the irregular geometry. This paper proposes an efficient block-based inter-coding scheme for color attribute compression. The scheme includes integer-precision motion estimation and an adaptive graph-based in-loop filtering scheme for improved attribute prediction. The proposed block-based motion estimation scheme consists of an initial motion search that exploits geometric and color attributes, followed by a motion refinement that only minimizes color prediction error. To further improve color prediction, we propose a vertex-domain low-pass graph filtering scheme that can adaptively remove noise from predictors computed from motion estimation with different accuracy. Our experiments demonstrate significant coding gain over state-of-the-art coding methods.
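
For readers unfamiliar with vertex-domain graph filtering, the following is a generic one-hop low-pass filter, offered as a hedged sketch rather than the adaptive in-loop filter proposed in the paper. Each node's value is blended with the weighted mean of its neighbors, which is equivalent to x - alpha * D^{-1} L x for the combinatorial Laplacian L = D - W. The function name `lowpass_filter`, the fixed blending factor `alpha`, and the dictionary graph layout are assumptions for illustration.

```python
def lowpass_filter(adj, signal, alpha=0.5):
    """One-hop vertex-domain smoothing of a graph signal.

    adj:    dict node -> dict neighbor -> non-negative weight
    signal: dict node -> value (e.g., one color channel of a predictor)
    alpha:  blending factor in [0, 1]; 0 leaves the signal unchanged
    """
    filtered = {}
    for v, neighbors in adj.items():
        total_w = sum(neighbors.values())
        if total_w == 0:
            filtered[v] = signal[v]  # isolated node: nothing to average with
            continue
        neighbor_mean = sum(w * signal[u] for u, w in neighbors.items()) / total_w
        filtered[v] = (1 - alpha) * signal[v] + alpha * neighbor_mean
    return filtered


# Toy example: a 3-node path with a spike on the middle node.
path = {0: {1: 1.0}, 1: {0: 1.0, 2: 1.0}, 2: {1: 1.0}}
print(lowpass_filter(path, {0: 0.0, 1: 3.0, 2: 0.0}))
```

A constant signal passes through unchanged, while the spike is attenuated; an adaptive scheme would vary the filter strength according to how reliable each predictor is.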

Fractional Motion Estimation for Point Cloud Compression

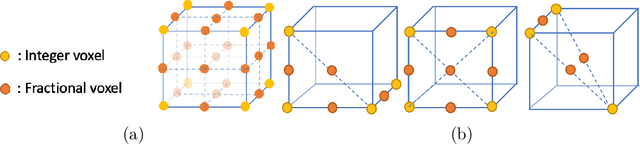

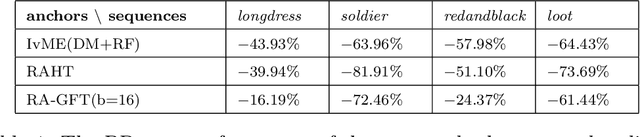

Feb 01, 2022

Abstract: Motivated by the success of fractional pixel motion in video coding, we explore the design of motion estimation with fractional-voxel resolution for compression of color attributes of dynamic 3D point clouds. Our proposed block-based fractional-voxel motion estimation scheme takes into account the fundamental differences between point clouds and videos, i.e., the irregularity of the distribution of voxels within a frame and across frames. We show that motion compensation can benefit from the higher resolution reference and more accurate displacements provided by fractional precision. Our proposed scheme significantly outperforms comparable methods that only use integer motion. The proposed scheme can be combined with and add sizeable gains to state-of-the-art systems that use transforms such as Region Adaptive Graph Fourier Transform and Region Adaptive Haar Transform.

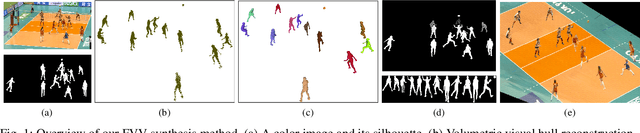

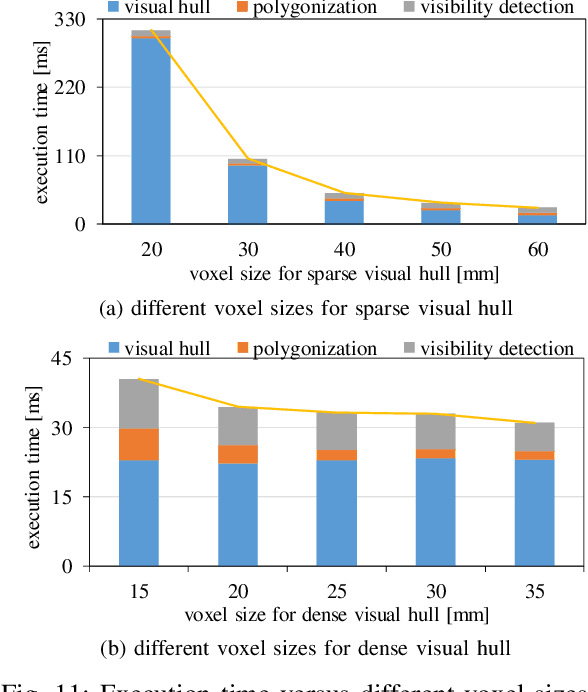

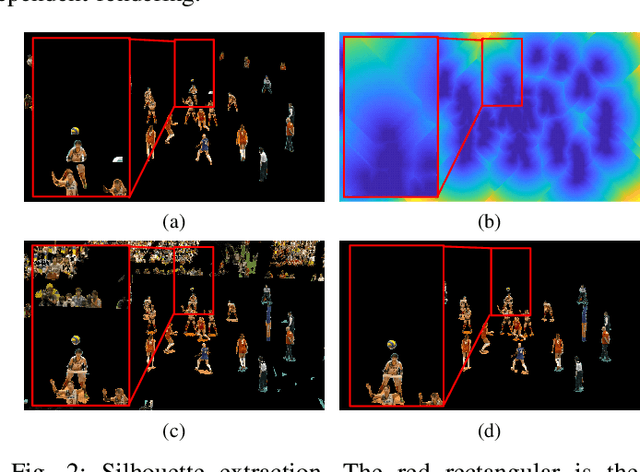

A Fast Free-viewpoint Video Synthesis Algorithm for Sports Scenes

Mar 28, 2019

Abstract: In this paper, we report on a parallel free-viewpoint video synthesis algorithm that can efficiently reconstruct a high-quality 3D scene representation of sports scenes. The proposed method focuses on a scene that is captured by multiple synchronized cameras with wide baselines. The following strategies are introduced to accelerate the production of a free-viewpoint video while also improving visual quality: (1) a sparse point cloud is reconstructed using a volumetric visual hull approach, and an exact 3D ROI is found for each object using an efficient connected components labeling algorithm. Next, the reconstruction of a dense point cloud is accelerated by implementing visual hull only in the ROIs; (2) an accurate polyhedral surface mesh is built by estimating the exact intersections between grid cells and the visual hull; (3) the appearance of the reconstructed presentation is reproduced in a view-dependent manner that renders the non-occluded and occluded regions with the nearest camera and its neighboring cameras, respectively. The production for volleyball and judo sequences demonstrates the effectiveness of our method in terms of both execution time and visual quality.

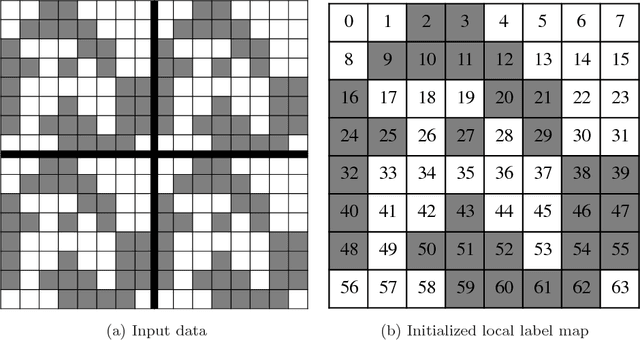

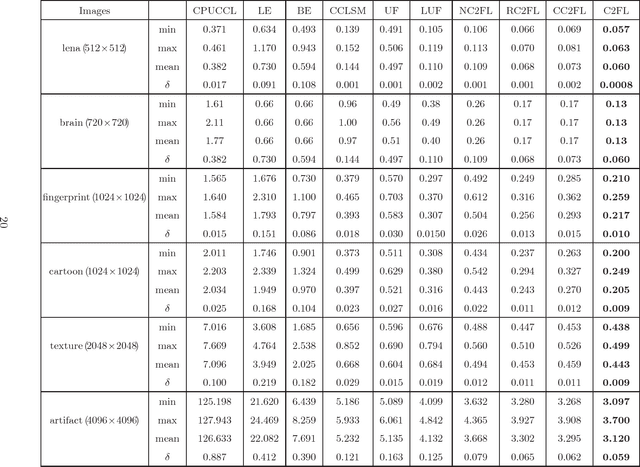

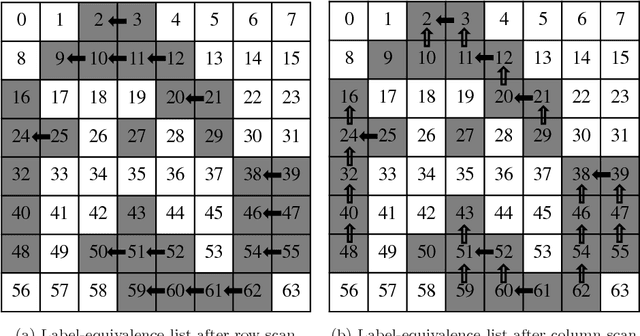

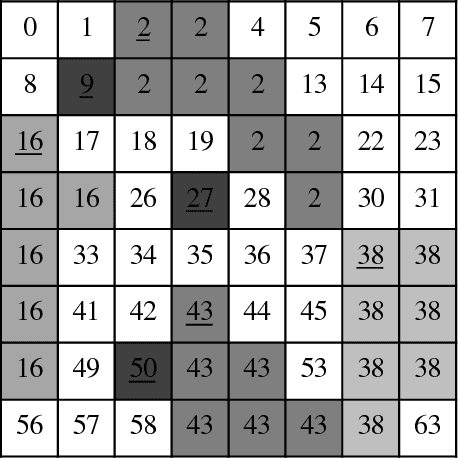

Efficient Parallel Connected Components Labeling with a Coarse-to-fine Strategy

Jan 26, 2018

Abstract: This paper proposes a new parallel approach to solve connected components labeling on a 2D binary image implemented with CUDA. We employ the following strategies to accelerate neighborhood exploration after dividing an input image into independent blocks. In the local labeling stage, a coarse-labeling algorithm, including row-column connection and label-equivalence list unification, is applied first to sort out the mess of an initialized local label map; a refinement algorithm is then introduced to merge separated sub-regions from a single component. In the block merge stage, we scan the pixels located on the boundary of each block instead of solving the connectivity of all the pixels. With the proposed method, the length of label-equivalence lists is compressed, and the number of memory accesses is reduced. Thus, the efficiency of connected components labeling is improved. Experimental results show that our method outperforms the other approaches by between $29\%$ and $80\%$ on average.

An Optimized Union-Find Algorithm for Connected Components Labeling Using GPUs

Aug 29, 2017

Abstract: In this paper, we report an optimized union-find (UF) algorithm that can label the connected components on a 2D image efficiently by employing the GPU architecture. The proposed method contains three phases: UF-based local merge, boundary analysis, and link. The coarse labeling in local merge reduces the number of atomic operations, while the boundary analysis only manages the pixels on the boundary of each block. Evaluation results showed that the proposed algorithm speeds up the average running time by more than 1.3X.
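
The union-find idea behind connected components labeling can be sketched sequentially as follows. This is a plain single-threaded Python illustration, not the GPU algorithm described above: there is no block decomposition, boundary analysis, or atomic operation, and the 4-connectivity rule, function names, and label numbering are assumptions for this example.

```python
def find(parent, i):
    """Return the root of i, halving the path along the way."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def union(parent, a, b):
    """Merge the sets containing a and b, keeping the smaller index as root."""
    ra, rb = find(parent, a), find(parent, b)
    if ra != rb:
        parent[max(ra, rb)] = min(ra, rb)


def label_components(image):
    """Label 4-connected foreground regions of a 2D binary image (list of rows)."""
    h, w = len(image), len(image[0])
    parent = list(range(h * w))  # each pixel starts as its own set
    for y in range(h):
        for x in range(w):
            if not image[y][x]:
                continue
            if x > 0 and image[y][x - 1]:      # merge with left neighbor
                union(parent, y * w + x, y * w + x - 1)
            if y > 0 and image[y - 1][x]:      # merge with upper neighbor
                union(parent, y * w + x, (y - 1) * w + x)
    labels = [[0] * w for _ in range(h)]
    root_to_label = {}
    for y in range(h):
        for x in range(w):
            if image[y][x]:
                root = find(parent, y * w + x)
                # Assign consecutive labels 1, 2, ... in scan order.
                labels[y][x] = root_to_label.setdefault(root, len(root_to_label) + 1)
    return labels


image = [
    [1, 1, 0, 0],
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 0, 0, 1],
]
for row in label_components(image):
    print(row)
```

A block-based parallel variant would run the merge step independently inside each block and then union only the pixel pairs that straddle block boundaries.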
