Kathrin Krieger

Motion Analysis of Upper Limb and Hand in a Haptic Rotation Task

Nov 17, 2024

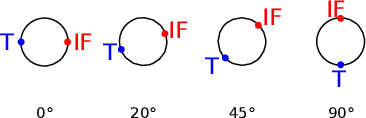

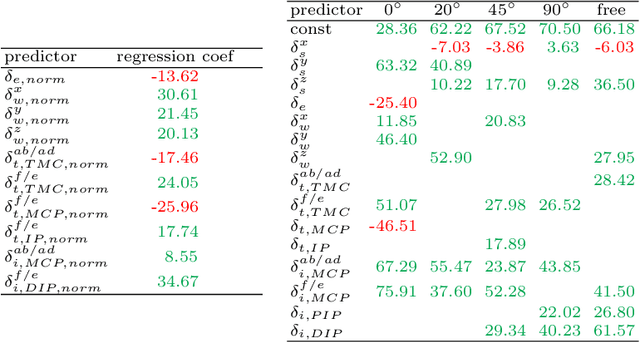

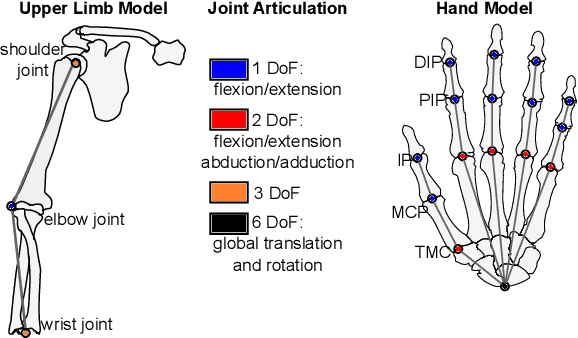

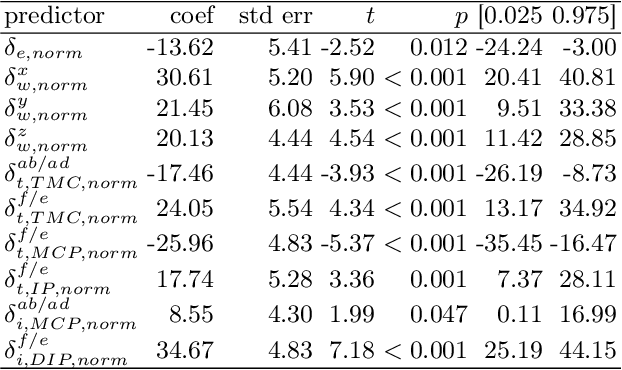

Abstract: Humans seem to be biased toward overshooting when rotating a rotary knob blindfolded by a specified target angle (i.e., during haptic rotation). Although some factors that strengthen or weaken this effect are known, the underlying reasons for the overshoot are not. This work approaches haptic rotation by analyzing a detailed recording of the movement. We propose an experimental framework and an approach for investigating, based on the acquired data, which upper limb and hand joint movements contribute significantly to a haptic rotation task and to the angle overshoot. Using stepwise regression with backward elimination, we analyze a 90-degree counterclockwise rotation performed with two fingers under different grasping orientations. Our results show that the wrist joint, the sideways finger movement in the proximal joints, and the distal finger joints contributed significantly to overshooting. This suggests that two phenomena underlie the overshoot: 1) the significant contribution of the wrist joint indicates a bias of a hand-centered egocentric reference frame; 2) the significant contribution of the finger joints indicates that the fingertips roll over the surface of the rotary knob, changing the contact point, a change for which the human probably does not compensate.
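As a rough illustration of the stepwise regression with backward elimination mentioned in the abstract, the following is a minimal sketch and not the authors' implementation. It fits an ordinary least-squares model and repeatedly drops the predictor with the weakest t statistic. The predictor names (`wrist`, `finger_abduction`, `elbow`), the synthetic data, and the `|t| < 2` stopping rule are all assumptions made for illustration; the paper may use p-values or another criterion.

```python
import random


def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * c for x, c in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols_t_stats(columns, y):
    """Fit y ~ intercept + columns; return one t statistic per column."""
    n = len(y)
    design = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(design, y)) for i in range(p)]
    beta = solve(xtx, xty)
    resid = [yi - sum(b * x for b, x in zip(beta, r)) for r, yi in zip(design, y)]
    sigma2 = sum(e * e for e in resid) / (n - p)  # residual variance
    t_stats = []
    for j in range(1, p):  # skip the intercept
        unit = [1.0 if k == j else 0.0 for k in range(p)]
        inv_jj = solve(xtx, unit)[j]  # j-th diagonal entry of (X^T X)^-1
        t_stats.append(beta[j] / (sigma2 * inv_jj) ** 0.5)
    return t_stats


def backward_elimination(predictors, y, t_threshold=2.0):
    """Drop the weakest predictor until all remaining have |t| >= t_threshold."""
    kept = dict(predictors)
    while kept:
        names = list(kept)
        t_stats = ols_t_stats([kept[name] for name in names], y)
        weakest = min(range(len(names)), key=lambda i: abs(t_stats[i]))
        if abs(t_stats[weakest]) >= t_threshold:
            break
        del kept[names[weakest]]
    return list(kept)


# Synthetic example: two joint angles drive the overshoot, one does not.
random.seed(0)
n = 200
wrist = [random.gauss(0, 1) for _ in range(n)]
finger = [random.gauss(0, 1) for _ in range(n)]
elbow = [random.gauss(0, 1) for _ in range(n)]  # no true effect (assumed)
overshoot = [2.0 * w + 1.0 * f + random.gauss(0, 0.5) for w, f in zip(wrist, finger)]

selected = backward_elimination(
    {"wrist": wrist, "finger_abduction": finger, "elbow": elbow}, overshoot
)
print(selected)
```

In this sketch the predictors that survive elimination are the ones interpreted as contributing significantly. A library such as statsmodels would normally replace the hand-written least-squares code, which is kept here only so the example has no dependencies.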

Adaptive Kinematic Modeling for Improved Hand Posture Estimates Using a Haptic Glove

Nov 10, 2024

Abstract: Most commercially available haptic gloves compromise the accuracy of hand-posture measurements in favor of a simpler design with fewer sensors. While inaccurate posture data is often sufficient for the task at hand, in biomedical settings such as VR-therapy-aided rehabilitation, measurements should be as precise as possible so that hand postures can be digitally recreated accurately. With these applications in mind, we have added extra sensors to the commercially available Dexmo haptic glove by Dexta Robotics and applied kinematic models of the haptic glove and the user's hand to improve the accuracy of hand-posture measurements. In this work, we describe the augmentations and the kinematic modeling approach. Additionally, we present and discuss an evaluation of hand-posture measurements as a proof of concept.

MedShapeNet -- A Large-Scale Dataset of 3D Medical Shapes for Computer Vision

Sep 12, 2023

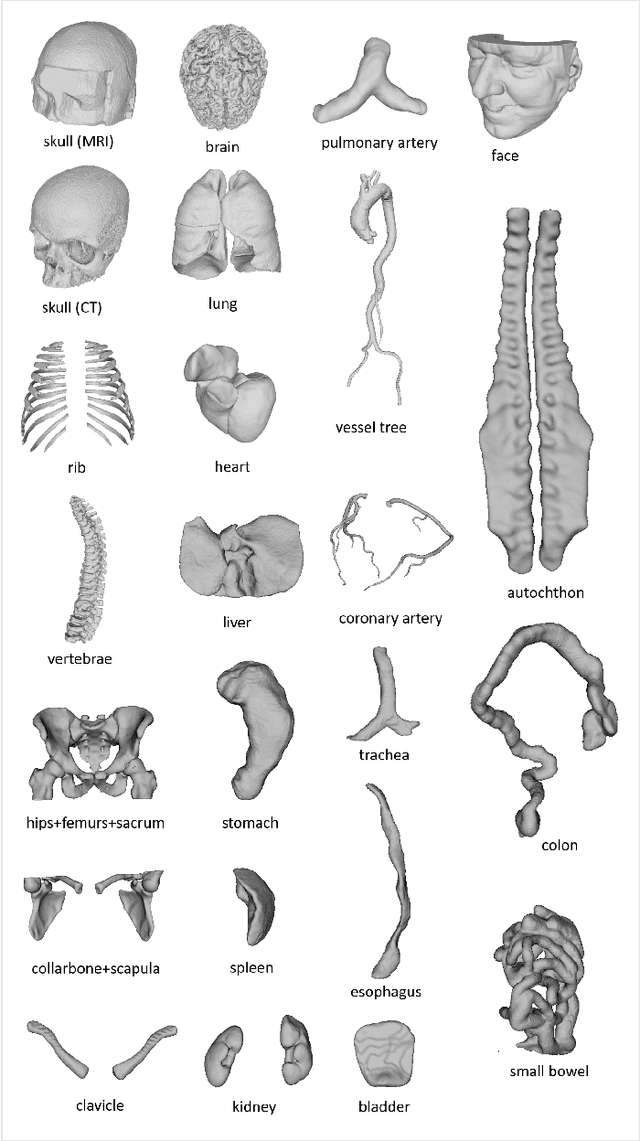

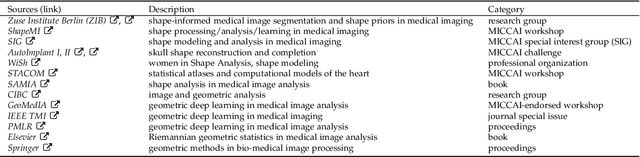

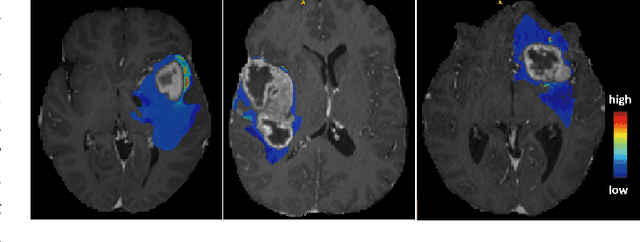

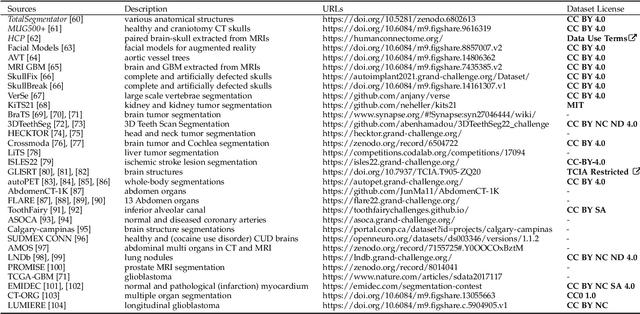

Abstract: We present MedShapeNet, a large collection of anatomical shapes (e.g., bones, organs, vessels) and 3D surgical instrument models. Before the deep learning era, the broad application of statistical shape models (SSMs) in medical image analysis showed that shapes were commonly used to describe medical data. Today, however, state-of-the-art (SOTA) deep learning algorithms in medical imaging are predominantly voxel-based. In computer vision, by contrast, shapes (including voxel occupancy grids, meshes, point clouds, and implicit surface models) are the preferred data representations in 3D, as seen from the numerous shape-related publications at premier vision conferences such as the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), and from the increasing popularity of ShapeNet (about 51,300 models) and Princeton ModelNet (127,915 models) in computer vision research. MedShapeNet was created as an alternative to these commonly used shape benchmarks to facilitate the translation of data-driven vision algorithms to medical applications, and it extends the opportunities to adapt SOTA vision algorithms to critical medical problems. Moreover, most of the medical shapes in MedShapeNet are modeled directly on imaging data of real patients, so it complements existing shape benchmarks composed of computer-aided design (CAD) models. MedShapeNet currently includes more than 100,000 medical shapes and provides annotations in the form of paired data. It is therefore also a freely available repository of 3D models for extended reality (virtual reality, VR; augmented reality, AR; mixed reality, MR) and medical 3D printing. This white paper describes in detail the motivations behind MedShapeNet, the shape acquisition procedures, the use cases, and the usage of the online shape search portal: https://medshapenet.ikim.nrw/
