Karine Miras

Unconventional Hexacopters via Evolution and Learning: Performance Gains and New Insights

May 20, 2025

Abstract:Evolution and learning have historically been interrelated topics, and their interplay is attracting increased interest lately. The emerging new factor in this trend is morphological evolution, the evolution of physical forms within embodied AI systems such as robots. In this study, we investigate a system of hexacopter-type drones with evolvable morphologies and learnable controllers and make contributions to two fields. For aerial robotics, we demonstrate that the combination of evolution and learning can deliver non-conventional drones that significantly outperform the traditional hexacopter on several tasks that are more complex than previously considered in the literature. For the field of Evolutionary Computing, we introduce novel metrics and perform new analyses into the interaction of morphological evolution and learning, uncovering hitherto unidentified effects. Our analysis tools are domain-agnostic, making a methodological contribution towards building solid foundations for embodied AI systems that integrate evolution and learning.

AMaze: An intuitive benchmark generator for fast prototyping of generalizable agents

Nov 20, 2024

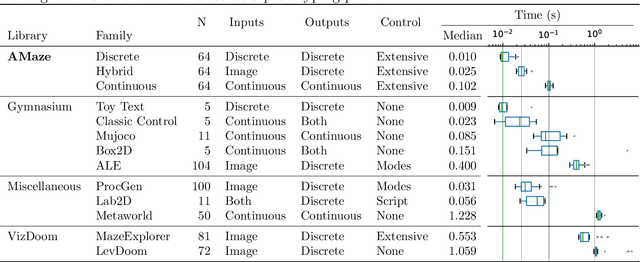

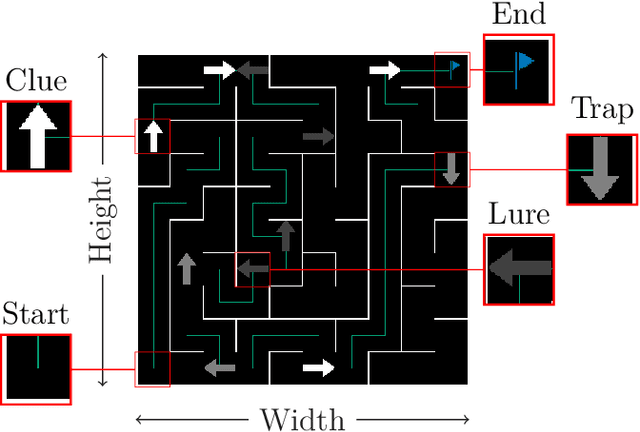

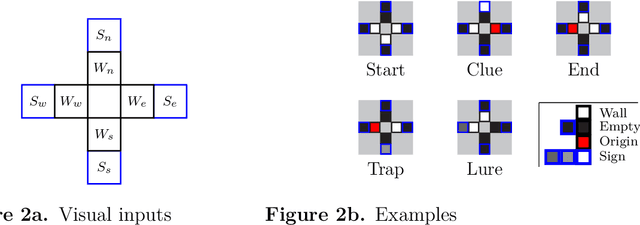

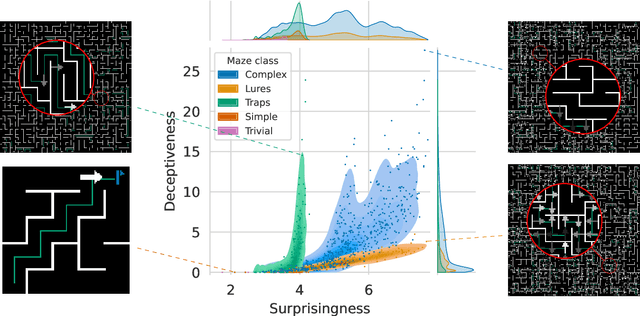

Abstract:Traditional approaches to training agents have generally involved a single, deterministic environment of minimal complexity to solve various tasks such as robot locomotion or computer vision. However, agents trained in static environments lack generalization capabilities, limiting their potential in broader scenarios. Thus, recent benchmarks frequently rely on multiple environments, for instance, by providing stochastic noise, simple permutations, or altogether different settings. In practice, such collections result mainly from costly human-designed processes or the liberal use of random number generators. In this work, we introduce AMaze, a novel benchmark generator in which embodied agents must navigate a maze by interpreting visual signs of arbitrary complexities and deceptiveness. This generator promotes human interaction through the easy generation of feature-specific mazes and an intuitive understanding of the resulting agents' strategies. As a proof-of-concept, we demonstrate the capabilities of the generator in a simple, fully discrete case with limited deceptiveness. Agents were trained under three different regimes (one-shot, scaffolding, interactive), and the results showed that the latter two cases outperform direct training in terms of generalization capabilities. Indeed, depending on the combination of generalization metric, training regime, and algorithm, the median gain ranged from 50% to 100% and maximal performance was achieved through interactive training, thereby demonstrating the benefits of a controllable human-in-the-loop benchmark generator.

Interactive embodied evolution for socially adept Artificial General Creatures

Jul 31, 2024

Abstract:We introduce here the concept of Artificial General Creatures (AGC) which encompasses "robotic or virtual agents with a wide enough range of capabilities to ensure their continued survival". With this in mind, we propose a research line aimed at incrementally building both the technology and the trustworthiness of AGC. The core element in this approach is that trust can only be built over time, through demonstrably mutually beneficial interactions. To this end, we advocate starting from unobtrusive, nonthreatening artificial agents that would explicitly collaborate with humans, similarly to what domestic animals do. By combining multiple research fields, from Evolutionary Robotics to Neuroscience, from Ethics to Human-Machine Interaction, we aim at creating embodied, self-sustaining Artificial General Creatures that would form social and emotional connections with humans. Although they would not be able to play competitive online games or generate poems, we argue that creatures akin to artificial pets would be invaluable stepping stones toward symbiotic Artificial General Intelligence.

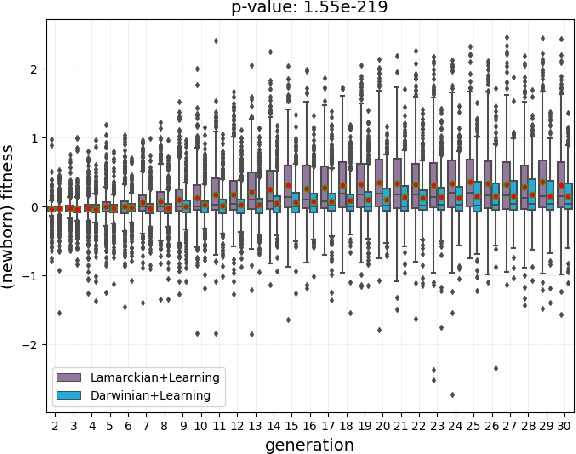

Lamarckian Inheritance Improves Robot Evolution in Dynamic Environments

Mar 28, 2024

Abstract:This study explores the integration of a Lamarckian system into evolutionary robotics (ER), comparing it with the traditional Darwinian model across various environments. By adopting Lamarckian principles, where robots inherit learned traits, alongside Darwinian learning without inheritance, we investigate adaptation in dynamic settings. Our research, conducted in six distinct environmental setups, demonstrates that Lamarckian systems outperform Darwinian ones in adaptability and efficiency, particularly in challenging conditions. Our analysis highlights the critical role of the interplay between controller & morphological evolution and environment adaptation, with parent-offspring similarities and newborns & survivors before and after learning providing insights into the effectiveness of trait inheritance. Our findings suggest Lamarckian principles could significantly advance autonomous system design, highlighting the potential for more adaptable and robust robotic solutions in complex, real-world applications. These theoretical insights were validated using real physical robots, bridging the gap between simulation and practical application.

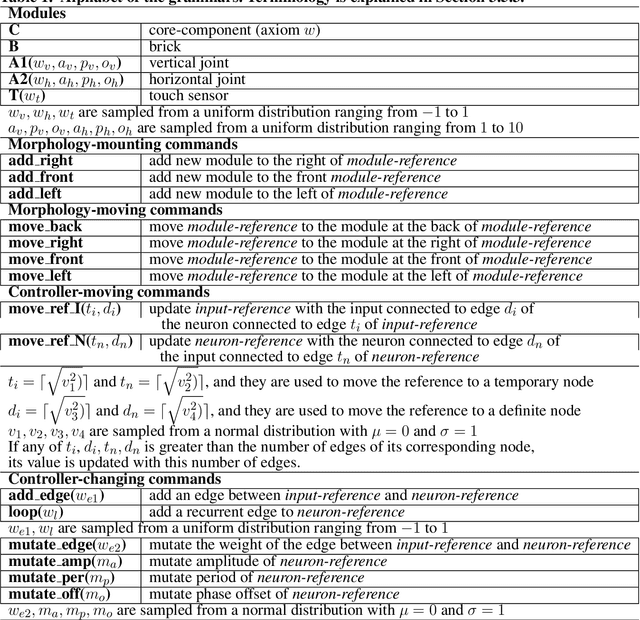

A comparison of controller architectures and learning mechanisms for arbitrary robot morphologies

Sep 25, 2023

Abstract:The main question this paper addresses is: What combination of a robot controller and a learning method should be used, if the morphology of the learning robot is not known in advance? Our interest is rooted in the context of morphologically evolving modular robots, but the question is also relevant in general, for system designers interested in widely applicable solutions. We perform an experimental comparison of three controller-and-learner combinations: one approach where controllers are based on modelling animal locomotion (Central Pattern Generators, CPG) and the learner is an evolutionary algorithm, a completely different method using Reinforcement Learning (RL) with a neural network controller architecture, and a combination 'in-between' where controllers are neural networks and the learner is an evolutionary algorithm. We apply these three combinations to a test suite of modular robots and compare their efficacy, efficiency, and robustness. Surprisingly, the usual CPG-based and RL-based options are outperformed by the in-between combination that is more robust and efficient than the other two setups.

Lamarck's Revenge: Inheritance of Learned Traits Can Make Robot Evolution Better

Sep 22, 2023

Abstract:Evolutionary robot systems offer two principal advantages: an advanced way of developing robots through evolutionary optimization and a special research platform to conduct what-if experiments regarding questions about evolution. Our study sits at the intersection of these. We investigate the question "What if the 18th-century biologist Lamarck was not completely wrong and individual traits learned during a lifetime could be passed on to offspring through inheritance?" We research this issue through simulations with an evolutionary robot framework where morphologies (bodies) and controllers (brains) of robots are evolvable and robots also can improve their controllers through learning during their lifetime. Within this framework, we compare a Lamarckian system, where learned bits of the brain are inheritable, with a Darwinian system, where they are not. Analyzing simulations based on these systems, we obtain new insights about Lamarckian evolution dynamics and the interaction between evolution and learning. Specifically, we show that Lamarckism amplifies the emergence of 'morphological intelligence', the ability of a given robot body to acquire a good brain by learning, and identify the source of this success: 'newborn' robots have a higher fitness because their inherited brains match their bodies better than those in a Darwinian system.

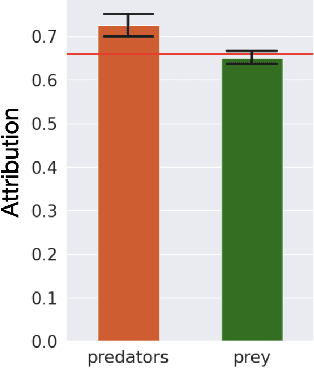

Open-endedness induced through a predator-prey scenario using modular robots

Sep 20, 2023

Abstract:This work investigates how a predator-prey scenario can induce the emergence of Open-Ended Evolution (OEE). We utilize modular robots of fixed morphologies whose controllers are subject to evolution. In both species, robots can send and receive signals and perceive the relative positions of other robots in the environment. Specifically, we introduce a feature we call a tagging system: it modifies how individuals can perceive each other and is expected to increase behavioral complexity. Our results show the emergence of adaptive strategies, demonstrating the viability of inducing OEE through predator-prey dynamics using modular robots. Such emergence, nevertheless, seemed to depend on conditioning reproduction on an explicit behavioral criterion.

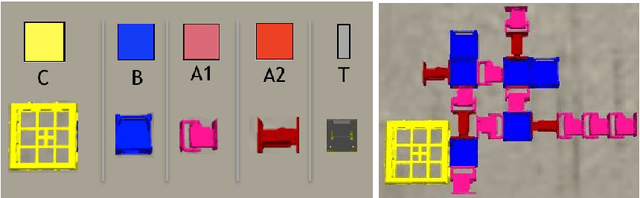

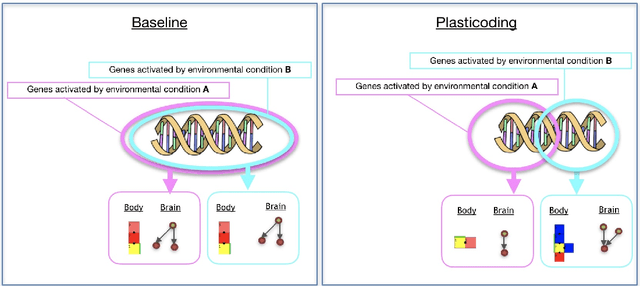

Environmental regulation using Plasticoding for the evolution of robots

May 29, 2020

Abstract:Evolutionary robot systems are usually affected by the properties of the environment indirectly through selection. In this paper, we present and investigate a system where the environment also has a direct effect: through regulation. We propose a novel robot encoding method where a genotype encodes multiple possible phenotypes, and the incarnation of a robot depends on the environmental conditions at a given moment of its life. This means that the morphology, controller, and behavior of a robot can change according to the environment. Importantly, this process of development can happen at any moment of a robot's lifetime, according to its experienced environmental stimuli. We provide an empirical proof-of-concept, and the analysis of the experimental results shows that Plasticoding improves adaptation (task performance) while leading to different evolved morphologies, controllers, and behavior.

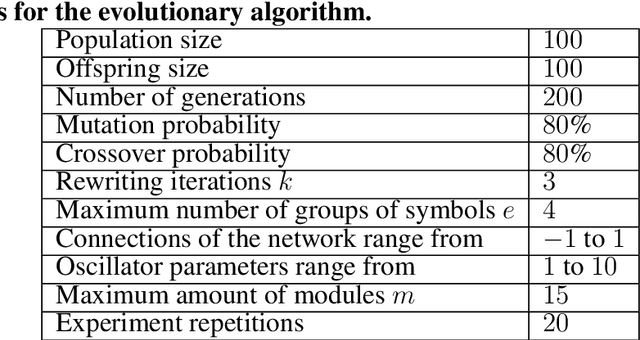

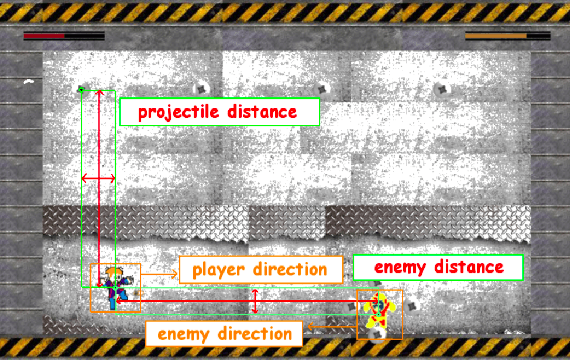

EvoMan: Game-playing Competition

Jan 04, 2020

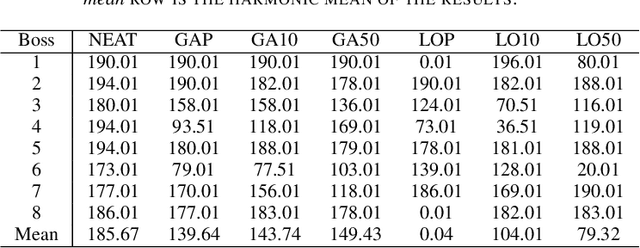

Abstract:This paper describes a competition proposal for evolving Intelligent Agents for the game-playing framework called EvoMan. The framework is based on the boss fights of the game called Mega Man II developed by Capcom. For this particular competition, the main goal is to beat all of the eight bosses using a generalist strategy. In other words, the competitors should train the agent to beat a set of the bosses and then the agent will be evaluated by its performance against all eight bosses. At the end of this paper, the competitors are provided with baseline results so that they can have an intuition on how good their results are.
