Fabricio Olivetti de Franca

Federal University of ABC

Improving Genetic Programming for Symbolic Regression with Equality Graphs

Jan 29, 2025

Abstract: The search for symbolic regression models with genetic programming (GP) tends to revisit expressions in their original or equivalent forms. Repeatedly evaluating equivalent expressions is inefficient, as it does not immediately lead to better solutions. However, evolutionary algorithms require diversity and should allow the accumulation of inactive building blocks that can play an important role at a later point. The equality graph (e-graph) is a data structure capable of compactly storing expressions and their equivalent forms, allowing an efficient check of whether an expression has been visited in any of its stored equivalent forms. We exploit the e-graph to adapt the subtree operators to reduce the chances of revisiting expressions. Our adaptation, called eggp, stores every visited expression in the e-graph, allowing us to filter out from the available selection of subtrees all the combinations that would create already visited expressions. Results show that, for small expressions, this approach improves the performance of a simple GP algorithm enough to compete with PySR and Operon without increasing computational cost. As a highlight, eggp was capable of reliably delivering short and, at the same time, accurate models for a selected set of benchmarks from SRBench and a set of real-world datasets.

rEGGression: an Interactive and Agnostic Tool for the Exploration of Symbolic Regression Models

Jan 29, 2025

Abstract: Regression analysis is used for prediction and to understand the effect of independent variables on dependent variables. Symbolic regression (SR) automates the search for non-linear regression models, delivering a set of hypotheses that balances accuracy with the possibility of understanding the phenomena. Many SR implementations return a Pareto front, allowing the choice of the best trade-off. However, this hides alternatives that are close to non-domination, limiting these choices. Equality graphs (e-graphs) make it possible to represent large sets of expressions compactly by efficiently handling duplicated parts occurring in multiple expressions. E-graphs can efficiently store and query all SR solution candidates visited in one or multiple GP runs, opening the possibility of analysing much larger sets of SR solution candidates. We introduce rEGGression, a tool that uses e-graphs to enable the exploration of a large set of symbolic expressions. It provides querying, filtering, and pattern matching features, creating an interactive experience to gain insights about SR models. The main highlight is its focus on the exploration of the building blocks found during the search, which can help experts gain insights about the studied phenomena. This is possible by exploiting the pattern matching capability of the e-graph data structure.

Alleviating Overfitting in Transformation-Interaction-Rational Symbolic Regression with Multi-Objective Optimization

Jan 03, 2025

Abstract: Transformation-Interaction-Rational is a representation for symbolic regression that limits the search space of functions to the ratio of two nonlinear functions, each one defined as the linear regression of transformed variables. The main objective of this representation is to bias the search towards simpler expressions while keeping the approximation power of standard approaches. The performance of Genetic Programming with this representation was substantially better than with its predecessor (Interaction-Transformation) and ranked close to the state-of-the-art on a contemporary Symbolic Regression benchmark. On a closer look at these results, we observed that the performance could be further improved with an additional selective pressure for smaller expressions when the dataset contains just a few data points. The introduction of a penalization term applied to the fitness measure improved the results on these smaller datasets. One problem with this approach is that it introduces two additional hyperparameters: i) a criterion for when the penalization should be activated and ii) the amount of penalization applied to the fitness function. In this paper, we extend Transformation-Interaction-Rational to support multi-objective optimization, specifically the NSGA-II algorithm, and apply that to the same benchmark. A detailed analysis of the results shows that the use of multi-objective optimization benefits the overall performance on a subset of the benchmarks while keeping the results similar to the single-objective approach on the remainder of the datasets. Specifically for the small datasets, we observe a small (and statistically insignificant) improvement of the results, suggesting that further strategies must be explored.

* 25 pages, 8 figures, 4 tables, Genetic Programming and Evolvable Machines, vol 24, no 2

The Inefficiency of Genetic Programming for Symbolic Regression -- Extended Version

Apr 26, 2024

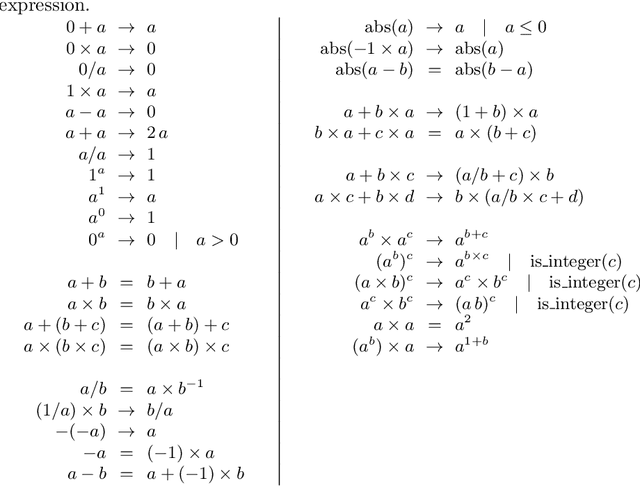

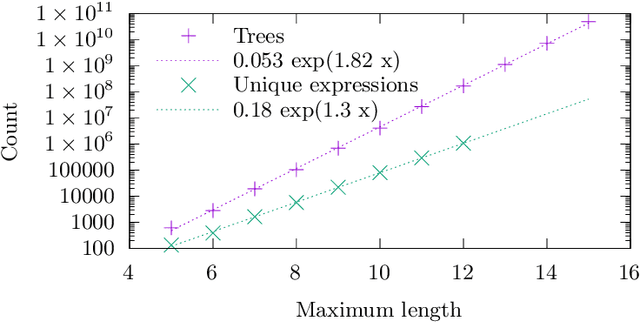

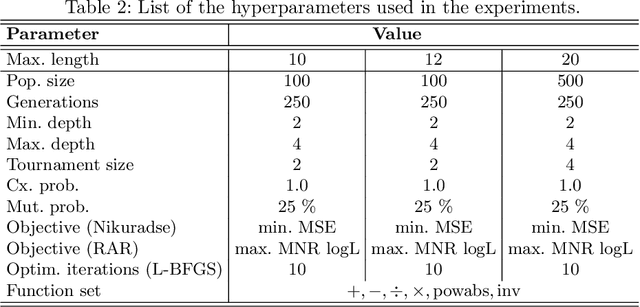

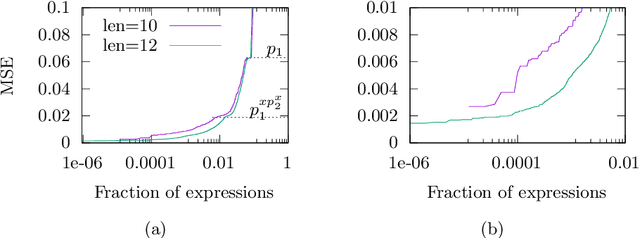

Abstract: We analyse the search behaviour of genetic programming for symbolic regression in practically relevant but limited settings, allowing exhaustive enumeration of all solutions. This enables us to quantify the success probability of finding the best possible expressions, and to compare the search efficiency of genetic programming to random search in the space of semantically unique expressions. This analysis is made possible by improved algorithms for equality saturation, which we use to improve the Exhaustive Symbolic Regression algorithm; this produces the set of semantically unique expression structures, which is orders of magnitude smaller than the full symbolic regression search space. We compare the efficiency of random search in the set of unique expressions with that of genetic programming. For our experiments we use two real-world datasets where symbolic regression has been used to produce well-fitting univariate expressions: the Nikuradse dataset of flow in rough pipes and the Radial Acceleration Relation of galaxy dynamics. The results show that genetic programming in such limited settings explores only a small fraction of all unique expressions, and repeatedly evaluates expressions that are congruent to already visited expressions.

Minimum variance threshold for epsilon-lexicase selection

Apr 08, 2024

Abstract: Parent selection plays an important role in evolutionary algorithms, and many strategies exist to select the parent pool before breeding the next generation. Methods often rely on the average error over the entire dataset as a criterion to select the parents, which can lead to information loss due to the aggregation of all test cases. Under epsilon-lexicase selection, the population goes to a selection pool that is iteratively reduced by using each test case individually, discarding individuals with an error higher than the elite error plus the median absolute deviation (MAD) of errors for that particular test case. In an attempt to better capture differences in the performance of individuals on cases, we propose a new criterion that splits errors into two partitions that minimize the total variance within partitions. Our method was embedded into the FEAT symbolic regression algorithm and evaluated with the SRBench framework, containing 122 black-box synthetic and real-world regression problems. The empirical results show a better performance of our approach compared to traditional epsilon-lexicase selection on the real-world datasets while showing equivalent performance on the synthetic datasets.

* 9 pages, 13 figures, accepted in Genetic and Evolutionary Computation Conference (GECCO '24)
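The traditional epsilon-lexicase procedure summarized in the abstract above can be illustrated with a minimal Python sketch. It is not the FEAT implementation and does not include the proposed minimum-variance criterion; the function names, the `errors` matrix layout, and the choice to compute the MAD over the current pool (rather than the whole population) are assumptions made for illustration.

```python
import random
import statistics


def mad(values):
    """Median absolute deviation of a sequence of numbers."""
    med = statistics.median(values)
    return statistics.median([abs(v - med) for v in values])


def epsilon_lexicase_select(errors, rng=random):
    """Select one parent index with epsilon-lexicase selection.

    errors[i][j] is the absolute error of individual i on test case j
    (hypothetical layout chosen for this sketch).
    """
    pool = list(range(len(errors)))
    cases = list(range(len(errors[0])))
    rng.shuffle(cases)  # test cases are visited in random order
    for case in cases:
        case_errors = [errors[i][case] for i in pool]
        # Keep individuals within "elite error + MAD" on this case.
        threshold = min(case_errors) + mad(case_errors)
        pool = [i for i in pool if errors[i][case] <= threshold]
        if len(pool) == 1:
            break
    # Ties that survive every case are broken at random.
    return rng.choice(pool)
```

Calling `epsilon_lexicase_select(errors)` once per required parent yields the parent pool; because the case order is reshuffled on every call, different individuals can win on different calls.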

Inexact Simplification of Symbolic Regression Expressions with Locality-sensitive Hashing

Apr 08, 2024

Abstract: Symbolic regression (SR) searches for parametric models that accurately fit a dataset, prioritizing simplicity and interpretability. Despite this secondary objective, studies point out that the models are often overly complex due to redundant operations, introns, and bloat that arise during the iterative process, and that this can hinder the search with repeated exploration of bloated segments. Applying a fast heuristic algebraic simplification may not fully simplify the expression, and exact methods can be infeasible depending on the size or complexity of the expressions. We propose a novel agnostic simplification and bloat control for SR employing an efficient memoization with locality-sensitive hashing (LHS). The idea is that expressions and their sub-expressions traversed during the iterative simplification process are stored in a dictionary using LHS, enabling efficient retrieval of similar structures. We iterate through the expression, replacing subtrees with others of the same hash if they result in a smaller expression. Empirical results show that applying this simplification during evolution performs as well as or better than no simplification in minimizing the error, while significantly reducing the number of nonlinear functions. This technique can learn simplification rules that work in general or for a specific problem, and improves convergence while reducing model complexity.

* 9 pages, 10 figures, accepted to GECCO-24
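The memoization idea in the abstract above (store every traversed sub-expression under a hash, then swap a subtree for a smaller one with the same hash) can be sketched in Python as follows. This is only an illustration under strong assumptions: expressions are nested tuples over `add` and `mul`, and the hash is simply the tuple of rounded outputs on a few hypothetical probe inputs, used here as a stand-in for the locality-sensitive hash of the paper.

```python
SAMPLE_XS = [0.5, 1.0, 2.0, 3.0]  # hypothetical probe inputs


def evaluate(expr, x):
    """Evaluate "x", a numeric constant, or an (op, left, right) tuple."""
    if expr == "x":
        return x
    if isinstance(expr, (int, float)):
        return float(expr)
    op, left, right = expr
    a, b = evaluate(left, x), evaluate(right, x)
    return a + b if op == "add" else a * b


def size(expr):
    """Number of nodes in the expression tree."""
    if isinstance(expr, tuple):
        return 1 + size(expr[1]) + size(expr[2])
    return 1


def signature(expr, digits=6):
    """Stand-in hash: rounded outputs on the probe inputs."""
    return tuple(round(evaluate(expr, x), digits) for x in SAMPLE_XS)


def simplify(expr, table):
    """Bottom-up replacement of subtrees by the smallest known equivalent."""
    if isinstance(expr, tuple):
        op, left, right = expr
        expr = (op, simplify(left, table), simplify(right, table))
    key = signature(expr)
    best = table.get(key)
    if best is None or size(expr) < size(best):
        table[key] = expr  # remember the smallest form seen for this hash
        return expr
    return best
```

For example, with an empty `table`, `simplify(("add", ("mul", "x", 0), "x"), table)` reduces `x * 0 + x` to `"x"`. The simplification is inexact by construction: two expressions that merely agree on the probe inputs are treated as interchangeable.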

Interpretability in Symbolic Regression: a benchmark of Explanatory Methods using the Feynman data set

Apr 08, 2024

Abstract: In some situations, the interpretability of machine learning models plays a role as important as the model accuracy. Interpretability comes from the need to trust the prediction model, verify some of its properties, or even enforce them to improve fairness. Many model-agnostic explanatory methods exist to provide explanations for black-box models. In the regression task, the practitioner can use white-box or gray-box models to achieve more interpretable results, which is the case of symbolic regression. When using an explanatory method, and since interpretability lacks a rigorous definition, there is a need to evaluate and compare the quality of different explainers. This paper proposes a benchmark scheme to evaluate explanatory methods for regression models, mainly symbolic regression models. Experiments were performed using 100 physics equations with different interpretable and non-interpretable regression methods and popular explanation methods, evaluating the performance of the explainers with several explanation measures. In addition, we further analyzed four benchmarks from the GP community. The results have shown that Symbolic Regression models can be an interesting alternative to white-box and black-box models, capable of returning accurate models with appropriate explanations. Regarding the explainers, we observed that Partial Effects and SHAP were the most robust explanation models, with Integrated Gradients being unstable only with tree-based models. This benchmark is publicly available for further experiments.

* 47 pages, 10 figures. This is a post peer-review, pre-copyedit version of an article published in Genetic Programming and Evolvable Machines Volume 23, pages 309-349, (2022). The final version is available on https://link.springer.com/article/10.1007/s10710-022-09435-x

Origami: (un)folding the abstraction of recursion schemes for program synthesis

Feb 27, 2024

Abstract: Program synthesis with Genetic Programming searches for a correct program that satisfies the input specification, which is usually provided as input-output examples. One particular challenge is how to effectively handle loops and recursion while avoiding programs that never terminate. A helpful abstraction that can alleviate this problem is the use of Recursion Schemes, which generalize the combination of data production and consumption. Recursion Schemes are very powerful, as they allow the construction of programs that can summarize data, create sequences, and perform advanced calculations. The main advantage of writing a program using Recursion Schemes is that the programs are composed of well-defined templates with only a few parts that need to be synthesized. In this paper we make an initial study of the benefits of using program synthesis with fold and unfold templates, and outline some preliminary experimental results. To highlight the advantages and disadvantages of this approach, we manually solved the entire GPSB benchmark using recursion schemes, highlighting the parts that should be evolved compared to alternative implementations. We noticed that, once the recursion scheme is chosen, the synthesis process can be simplified, as each of the missing parts of the template is reduced to a simpler function, which is further constrained by its own input and output types.
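To make the fold and unfold templates mentioned in the abstract concrete, here is a minimal Python sketch restricted to lists. It is an illustration only, not the Origami implementation; the names `fold`, `unfold`, `combine`, `done`, and `step` are hypothetical. The arguments passed to each template are the "missing parts" that a synthesizer would have to produce.

```python
def fold(combine, initial, items):
    """Consume a list into a single value (right fold).

    `combine` and `initial` are the parts left to be synthesized.
    """
    acc = initial
    for item in reversed(items):
        acc = combine(item, acc)
    return acc


def unfold(done, step, seed):
    """Produce a list from a seed.

    `done` decides when to stop; `step` returns (value, next_seed).
    Both are the parts left to be synthesized.
    """
    out = []
    while not done(seed):
        value, seed = step(seed)
        out.append(value)
    return out


# A sum of squares only needs a small non-recursive `combine`.
sum_of_squares = fold(lambda x, acc: x * x + acc, 0, [1, 2, 3])

# A countdown only needs `done` and `step`.
countdown = unfold(lambda n: n == 0, lambda n: (n, n - 1), 3)
```

In this sketch `fold` always terminates on a finite list, because the recursion is fixed by the template and `combine` contains no loop of its own. `unfold` still depends on `done` eventually returning true.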

Prediction Intervals and Confidence Regions for Symbolic Regression Models based on Likelihood Profiles

Sep 14, 2022

Abstract: Symbolic regression is a nonlinear regression method which is commonly performed by an evolutionary computation method such as genetic programming. Quantification of the uncertainty of regression models is important for the interpretation of models and for decision making. The linear approximation and so-called likelihood profiles are well-known possibilities for the calculation of confidence and prediction intervals for nonlinear regression models. These simple and effective techniques have been completely ignored so far in the genetic programming literature. In this work we describe the calculation of likelihood profiles in detail and also provide some illustrative examples with models created with three different symbolic regression algorithms on two different datasets. The examples highlight the importance of the likelihood profiles for understanding the limitations of symbolic regression models and for helping the user make an informed post-prediction decision.

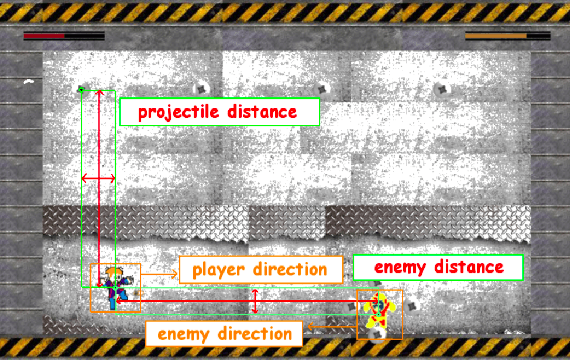

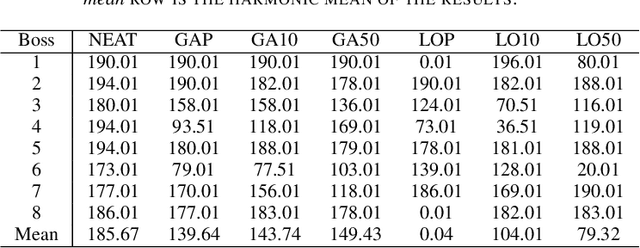

EvoMan: Game-playing Competition

Jan 04, 2020

Abstract:This paper describes a competition proposal for evolving Intelligent Agents for the game-playing framework called EvoMan. The framework is based on the boss fights of the game called Mega Man II developed by Capcom. For this particular competition, the main goal is to beat all of the eight bosses using a generalist strategy. In other words, the competitors should train the agent to beat a set of the bosses and then the agent will be evaluated by its performance against all eight bosses. At the end of this paper, the competitors are provided with baseline results so that they can have an intuition on how good their results are.
