Karan Singh

Learning to Build Shapes by Extrusion

Jan 30, 2026

Abstract:We introduce Text Encoded Extrusion (TEE), a text-based representation that expresses mesh construction as sequences of face extrusions rather than polygon lists, and a method for generating 3D meshes from TEE using a large language model (LLM). By learning extrusion sequences that assemble a mesh, similar to the way artists create meshes, our approach naturally supports arbitrary output face counts and produces manifold meshes by design, in contrast to recent transformer-based models. The learnt extrusion sequences can also be applied to existing meshes - enabling editing in addition to generation. To train our model, we decompose a library of quadrilateral meshes with non-self-intersecting face loops into constituent loops, which can be viewed as their building blocks, and finetune an LLM on the steps for reassembling the meshes by performing a sequence of extrusions. We demonstrate that our representation enables reconstruction, novel shape synthesis, and the addition of new features to existing meshes.

Model See Model Do: Speech-Driven Facial Animation with Style Control

May 02, 2025

Abstract:Speech-driven 3D facial animation plays a key role in applications such as virtual avatars, gaming, and digital content creation. While existing methods have made significant progress in achieving accurate lip synchronization and generating basic emotional expressions, they often struggle to capture and effectively transfer nuanced performance styles. We propose a novel example-based generation framework that conditions a latent diffusion model on a reference style clip to produce highly expressive and temporally coherent facial animations. To address the challenge of accurately adhering to the style reference, we introduce a novel conditioning mechanism called style basis, which extracts key poses from the reference and additively guides the diffusion generation process to fit the style without compromising lip synchronization quality. This approach enables the model to capture subtle stylistic cues while ensuring that the generated animations align closely with the input speech. Extensive qualitative, quantitative, and perceptual evaluations demonstrate the effectiveness of our method in faithfully reproducing the desired style while achieving superior lip synchronization across various speech scenarios.

Sample-Optimal Agnostic Boosting with Unlabeled Data

Mar 06, 2025

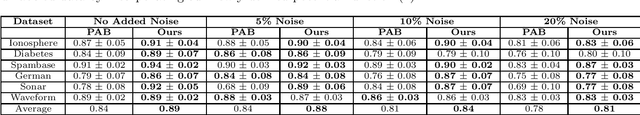

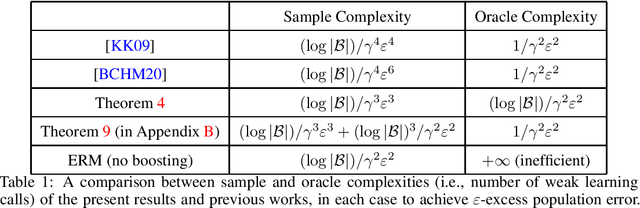

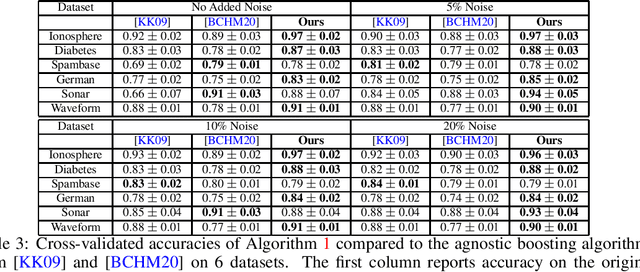

Abstract:Boosting provides a practical and provably effective framework for constructing accurate learning algorithms from inaccurate rules of thumb. It extends the promise of sample-efficient learning to settings where direct Empirical Risk Minimization (ERM) may not be implementable efficiently. In the realizable setting, boosting is known to offer this computational reprieve without compromising on sample efficiency. However, in the agnostic case, existing boosting algorithms fall short of achieving the optimal sample complexity. This paper highlights an unexpected and previously unexplored avenue of improvement: unlabeled samples. We design a computationally efficient agnostic boosting algorithm that matches the sample complexity of ERM, given polynomially many additional unlabeled samples. In fact, we show that the total number of samples needed, unlabeled and labeled inclusive, is never more than that for the best known agnostic boosting algorithm -- so this result is never worse -- while only a vanishing fraction of these need to be labeled for the algorithm to succeed. This is particularly fortuitous for learning-theoretic applications of agnostic boosting, which often take place in the distribution-specific setting, where unlabeled samples can be availed for free. We detail other applications of this result in reinforcement learning.

Motion Modes: What Could Happen Next?

Nov 29, 2024

Abstract:Predicting diverse object motions from a single static image remains challenging, as current video generation models often entangle object movement with camera motion and other scene changes. While recent methods can predict specific motions from motion arrow input, they rely on synthetic data and predefined motions, limiting their application to complex scenes. We introduce Motion Modes, a training-free approach that explores a pre-trained image-to-video generator's latent distribution to discover various distinct and plausible motions focused on selected objects in static images. We achieve this by employing a flow generator guided by energy functions designed to disentangle object and camera motion. Additionally, we use an energy inspired by particle guidance to diversify the generated motions, without requiring explicit training data. Experimental results demonstrate that Motion Modes generates realistic and varied object animations, surpassing previous methods and even human predictions regarding plausibility and diversity. Project Webpage: https://motionmodes.github.io/

Sample-Efficient Agnostic Boosting

Oct 31, 2024

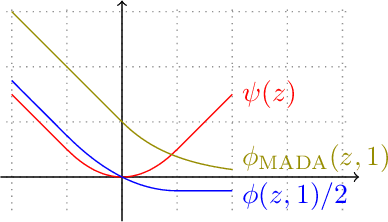

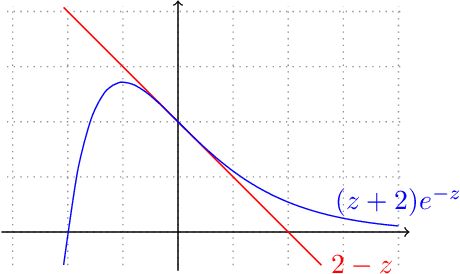

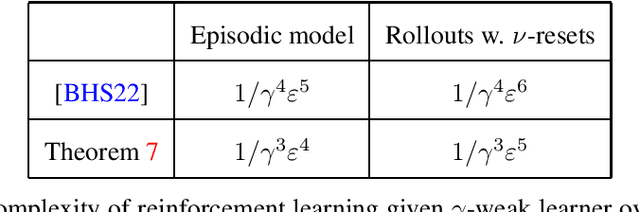

Abstract:The theory of boosting provides a computational framework for aggregating approximate weak learning algorithms, which perform marginally better than a random predictor, into an accurate strong learner. In the realizable case, the success of the boosting approach is underscored by a remarkable fact that the resultant sample complexity matches that of a computationally demanding alternative, namely Empirical Risk Minimization (ERM). This in particular implies that the realizable boosting methodology has the potential to offer computational relief without compromising on sample efficiency. Despite recent progress, in agnostic boosting, where assumptions on the conditional distribution of labels given feature descriptions are absent, ERM outstrips the agnostic boosting methodology in being quadratically more sample efficient than all known agnostic boosting algorithms. In this paper, we make progress on closing this gap, and give a substantially more sample efficient agnostic boosting algorithm than those known, without compromising on the computational (or oracle) complexity. A key feature of our algorithm is that it leverages the ability to reuse samples across multiple rounds of boosting, while guaranteeing a generalization error strictly better than those obtained by blackbox applications of uniform convergence arguments. We also apply our approach to other previously studied learning problems, including boosting for reinforcement learning, and demonstrate improved results.

Improved Differentially Private and Lazy Online Convex Optimization

Dec 20, 2023

Abstract:We study the task of $(\epsilon, \delta)$-differentially private online convex optimization (OCO). In the online setting, the release of each distinct decision or iterate carries with it the potential for privacy loss. This problem has a long history of research starting with Jain et al. [2012], and the best known results for the regime where $\epsilon$ is not very small are presented in Agarwal et al. [2023]. In this paper, we improve upon the results of Agarwal et al. [2023] in terms of the dimension factors and remove the requirement of smoothness. Our results are now the best known rates for DP-OCO in this regime. Our algorithm builds upon the work of Asi et al. [2023], which introduced the idea of explicitly limiting the number of switches via rejection sampling. The main innovation in our algorithm is the use of sampling from a strongly log-concave density, which allows us to trade off the dimension factors better, leading to improved results.

Diffusion Handles: Enabling 3D Edits for Diffusion Models by Lifting Activations to 3D

Dec 06, 2023

Abstract:Diffusion Handles is a novel approach to enabling 3D object edits on diffusion images. We accomplish these edits using existing pre-trained diffusion models and 2D image depth estimation, without any fine-tuning or 3D object retrieval. The edited results remain plausible and photo-real, and preserve object identity. Diffusion Handles addresses a critically missing facet of generative image-based creative design, and significantly advances the state-of-the-art in generative image editing. Our key insight is to lift diffusion activations for an object to 3D using a proxy depth, 3D-transform the depth and associated activations, and project them back to image space. The diffusion process applied to the manipulated activations with identity control produces plausible edited images showing complex 3D occlusion and lighting effects. We evaluate Diffusion Handles quantitatively on a large synthetic data benchmark and qualitatively through a user study, showing our output to be more plausible, and better than prior art at both 3D editing and identity control. Project Webpage: https://diffusionhandles.github.io/

Online Nonstochastic Model-Free Reinforcement Learning

May 27, 2023

Abstract:In this work, we explore robust model-free reinforcement learning algorithms for environments that may be dynamic or even adversarial. Conventional state-based policies fail to accommodate the challenge imposed by the presence of unmodeled disturbances in such settings. Additionally, optimizing linear state-based policies poses an obstacle to efficient optimization, leading to nonconvex objectives even in benign environments like linear dynamical systems. Drawing inspiration from recent advancements in model-based control, we introduce a novel class of policies centered on disturbance signals. We define several categories of these signals, referred to as pseudo-disturbances, and corresponding policy classes based on them. We provide efficient and practical algorithms for optimizing these policies. Next, we examine the task of online adaptation of reinforcement learning agents to adversarial disturbances. Our methods can be integrated with any black-box model-free approach, resulting in provable regret guarantees if the underlying dynamics are linear. We evaluate our method over different standard RL benchmarks and demonstrate improved robustness.

Variance-Reduced Conservative Policy Iteration

Dec 12, 2022

Abstract:We study the sample complexity of reducing reinforcement learning to a sequence of empirical risk minimization problems over the policy space. Such reduction-based algorithms exhibit local convergence in the function space, as opposed to the parameter space for policy gradient algorithms, and thus are unaffected by the possibly non-linear or discontinuous parameterization of the policy class. We propose a variance-reduced variant of Conservative Policy Iteration that improves the sample complexity of producing an $\varepsilon$-functional local optimum from $O(\varepsilon^{-4})$ to $O(\varepsilon^{-3})$. Under state-coverage and policy-completeness assumptions, the algorithm enjoys $\varepsilon$-global optimality after sampling $O(\varepsilon^{-2})$ times, improving upon the previously established $O(\varepsilon^{-3})$ sample requirement.

Best of Both Worlds in Online Control: Competitive Ratio and Policy Regret

Nov 21, 2022

Abstract:We consider the fundamental problem of online control of a linear dynamical system from two different viewpoints: regret minimization and competitive analysis. We prove that the optimal competitive policy is well-approximated by a convex parameterized policy class, known as disturbance-action control (DAC) policies. Using this structural result, we show that several recently proposed online control algorithms achieve the best of both worlds: sublinear regret vs. the best DAC policy selected in hindsight, and optimal competitive ratio, up to an additive correction which grows sublinearly in the time horizon. We further conclude that sublinear regret vs. the optimal competitive policy is attainable when the linear dynamical system is unknown, and even when a stabilizing controller for the dynamics is not available a priori.
