Kangyi Liu

xbench: Tracking Agents Productivity Scaling with Profession-Aligned Real-World Evaluations

Jun 16, 2025

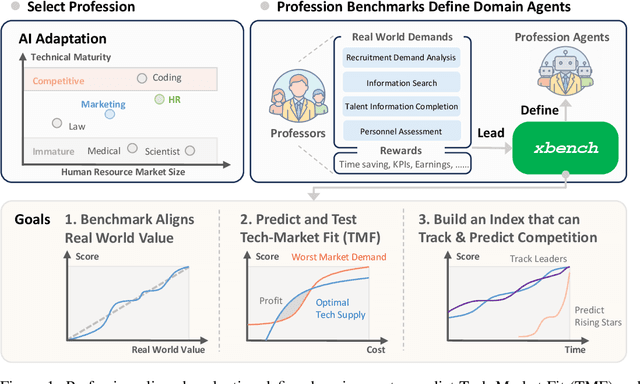

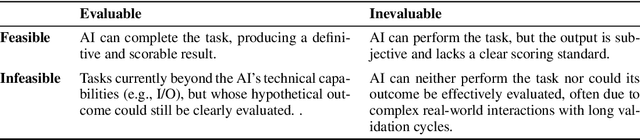

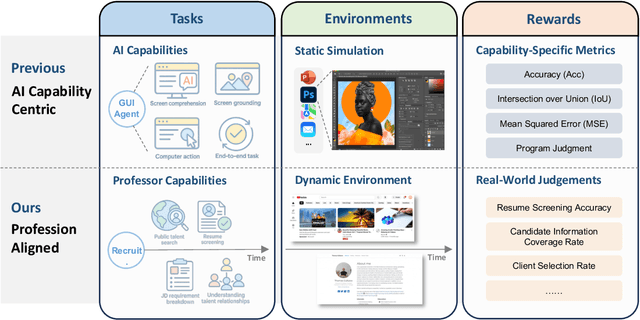

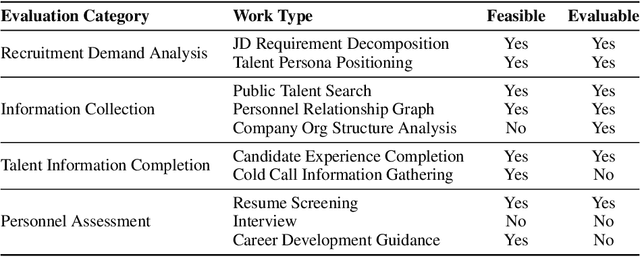

Abstract: We introduce xbench, a dynamic, profession-aligned evaluation suite designed to bridge the gap between AI agent capabilities and real-world productivity. While existing benchmarks often focus on isolated technical skills, they may not accurately reflect the economic value agents deliver in professional settings. To address this, xbench targets commercially significant domains with evaluation tasks defined by industry professionals. Our framework creates metrics that strongly correlate with productivity value, enables prediction of Technology-Market Fit (TMF), and facilitates tracking of product capabilities over time. As our initial implementations, we present two benchmarks: Recruitment and Marketing. For Recruitment, we collect 50 tasks from real-world headhunting business scenarios to evaluate agents' abilities in company mapping, information retrieval, and talent sourcing. For Marketing, we assess agents' ability to match influencers with advertiser needs, evaluating their performance across 50 advertiser requirements using a curated pool of 836 candidate influencers. We present initial evaluation results for leading contemporary agents, establishing a baseline for these professional domains. Our continuously updated evalsets and evaluations are available at https://xbench.org.

Labeled-to-Unlabeled Distribution Alignment for Partially-Supervised Multi-Organ Medical Image Segmentation

Sep 05, 2024

Abstract: Partially-supervised multi-organ medical image segmentation aims to develop a unified semantic segmentation model by utilizing multiple partially-labeled datasets, with each dataset providing labels for a single class of organs. However, the limited availability of labeled foreground organs and the absence of supervision to distinguish unlabeled foreground organs from the background pose a significant challenge, which leads to a distribution mismatch between labeled and unlabeled pixels. Although existing pseudo-labeling methods can be employed to learn from both labeled and unlabeled pixels, they are prone to performance degradation in this task, as they rely on the assumption that labeled and unlabeled pixels have the same distribution. In this paper, to address the problem of distribution mismatch, we propose a labeled-to-unlabeled distribution alignment (LTUDA) framework that aligns feature distributions and enhances discriminative capability. Specifically, we introduce a cross-set data augmentation strategy, which performs region-level mixing between labeled and unlabeled organs to reduce distribution discrepancy and enrich the training set. Besides, we propose a prototype-based distribution alignment method that implicitly reduces intra-class variation and increases the separation between the unlabeled foreground and background. This can be achieved by encouraging consistency between the outputs of two prototype classifiers and a linear classifier. Extensive experimental results on the AbdomenCT-1K dataset and a union of four benchmark datasets (including LiTS, MSD-Spleen, KiTS, and NIH82) demonstrate that our method outperforms the state-of-the-art partially-supervised methods by a considerable margin, and even surpasses the fully-supervised methods. The source code is publicly available at https://github.com/xjiangmed/LTUDA.

Dual-view Correlation Hybrid Attention Network for Robust Holistic Mammogram Classification

Jun 19, 2023

Abstract: Mammogram image is important for breast cancer screening, and typically obtained in a dual-view form, i.e., cranio-caudal (CC) and mediolateral oblique (MLO), to provide complementary information. However, previous methods mostly learn features from the two views independently, which violates the clinical knowledge and ignores the importance of dual-view correlation. In this paper, we propose a dual-view correlation hybrid attention network (DCHA-Net) for robust holistic mammogram classification. Specifically, DCHA-Net is carefully designed to extract and reinvent deep features for the two views, and meanwhile to maximize the underlying correlations between them. A hybrid attention module, consisting of local relation and non-local attention blocks, is proposed to alleviate the spatial misalignment of the paired views in the correlation maximization. A dual-view correlation loss is introduced to maximize the feature similarity between corresponding strip-like regions with equal distance to the chest wall, motivated by the fact that their features represent the same breast tissues, and thus should be highly-correlated. Experimental results on two public datasets, i.e., INbreast and CBIS-DDSM, demonstrate that DCHA-Net can well preserve and maximize feature correlations across views, and thus outperforms the state-of-the-arts for classifying a whole mammogram as malignant or not.
