Kaizheng Wang

Learning Credal Ensembles via Distributionally Robust Optimization

Feb 09, 2026

Abstract: Credal predictors are models that are aware of epistemic uncertainty and produce a convex set of probabilistic predictions. They offer a principled way to quantify predictive epistemic uncertainty (EU) and have been shown to improve model robustness in various settings. However, most state-of-the-art methods mainly define EU as disagreement caused by random training initializations, which mostly reflects sensitivity to optimization randomness rather than uncertainty from deeper sources. To address this, we define EU as disagreement among models trained with varying relaxations of the i.i.d. assumption between training and test data. Based on this idea, we propose CreDRO, which learns an ensemble of plausible models through distributionally robust optimization. As a result, CreDRO captures EU not only from training randomness but also from meaningful disagreement due to potential distribution shifts between training and test data. Empirical results show that CreDRO consistently outperforms existing credal methods on tasks such as out-of-distribution detection across multiple benchmarks and selective classification in medical applications.

The Nonstationarity-Complexity Tradeoff in Return Prediction

Dec 29, 2025

Abstract: We investigate machine learning models for stock return prediction in non-stationary environments, revealing a fundamental nonstationarity-complexity tradeoff: complex models reduce misspecification error but require longer training windows that introduce stronger non-stationarity. We resolve this tension with a novel model selection method that jointly optimizes model class and training window size using a tournament procedure that adaptively evaluates candidates on non-stationary validation data. Our theoretical analysis demonstrates that this approach balances misspecification error, estimation variance, and non-stationarity, performing close to the best model in hindsight. Applying our method to 17 industry portfolio returns, we consistently outperform standard rolling-window benchmarks, improving out-of-sample $R^2$ by 14-23% on average. During NBER-designated recessions, improvements are substantial: our method achieves positive $R^2$ during the Gulf War recession while benchmarks are negative, improves $R^2$ in absolute terms by at least 80 bps during the 2001 recession, and delivers superior performance during the 2008 Financial Crisis. Economically, a trading strategy based on our selected model generates 31% higher cumulative returns averaged across the industries.

Credal Ensemble Distillation for Uncertainty Quantification

Nov 14, 2025

Abstract: Deep ensembles (DE) have emerged as a powerful approach for quantifying predictive uncertainty and distinguishing its aleatoric and epistemic components, thereby enhancing model robustness and reliability. However, their high computational and memory costs during inference pose significant challenges for wide practical deployment. To overcome this issue, we propose credal ensemble distillation (CED), a novel framework that compresses a DE into a single model, CREDIT, for classification tasks. Instead of a single softmax probability distribution, CREDIT predicts class-wise probability intervals that define a credal set, a convex set of probability distributions, for uncertainty quantification. Empirical results on out-of-distribution detection benchmarks demonstrate that CED achieves superior or comparable uncertainty estimation compared to several existing baselines, while substantially reducing inference overhead compared to DE.
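
As a rough illustration of how class-wise probability intervals can define a credal set, the sketch below takes the softmax outputs of a few ensemble members, forms per-class lower and upper bounds, and checks whether a candidate distribution lies inside the resulting set. This is not the CED training procedure or the CREDIT model: taking the element-wise min and max over ensemble members is an assumption made here for illustration, and the function names and example numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def probability_intervals(
    ensemble_probs: Sequence[Sequence[float]],
) -> Tuple[List[float], List[float]]:
    """Per-class lower/upper bounds across ensemble members (illustrative assumption)."""
    n_classes = len(ensemble_probs[0])
    lower = [min(p[k] for p in ensemble_probs) for k in range(n_classes)]
    upper = [max(p[k] for p in ensemble_probs) for k in range(n_classes)]
    return lower, upper


def is_nonempty(lower: Sequence[float], upper: Sequence[float], tol: float = 1e-9) -> bool:
    """Intervals admit at least one distribution iff sum(lower) <= 1 <= sum(upper)."""
    ordered = all(l <= u + tol for l, u in zip(lower, upper))
    return ordered and sum(lower) <= 1 + tol and sum(upper) >= 1 - tol


def contains(
    dist: Sequence[float],
    lower: Sequence[float],
    upper: Sequence[float],
    tol: float = 1e-9,
) -> bool:
    """True if `dist` sums to one and respects every class-wise interval."""
    sums_to_one = abs(sum(dist) - 1.0) <= 1e-6
    in_bounds = all(l - tol <= p <= u + tol for p, l, u in zip(dist, lower, upper))
    return sums_to_one and in_bounds


# Hypothetical softmax outputs of three ensemble members on one input.
ensemble_probs = [
    [0.7, 0.2, 0.1],
    [0.5, 0.3, 0.2],
    [0.6, 0.1, 0.3],
]
lower, upper = probability_intervals(ensemble_probs)
mean_pred = [sum(p[k] for p in ensemble_probs) / len(ensemble_probs) for k in range(3)]
print(lower, upper, is_nonempty(lower, upper), contains(mean_pred, lower, upper))
```

Wider intervals indicate more disagreement among members, which is one simple way to read epistemic uncertainty from such a set.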

Epistemic Wrapping for Uncertainty Quantification

May 04, 2025

Abstract: Uncertainty estimation is pivotal in machine learning, especially for classification tasks, as it improves the robustness and reliability of models. We introduce a novel 'Epistemic Wrapping' methodology aimed at improving uncertainty estimation in classification. Our approach uses Bayesian Neural Networks (BNNs) as a baseline and transforms their outputs into belief function posteriors, effectively capturing epistemic uncertainty and offering an efficient and general methodology for uncertainty quantification. Comprehensive experiments employing a Bayesian Neural Network (BNN) baseline and an Interval Neural Network for inference on the MNIST, Fashion-MNIST, CIFAR-10 and CIFAR-100 datasets demonstrate that our Epistemic Wrapper significantly enhances generalisation and uncertainty quantification.

Uncertainty Quantification for LLM-Based Survey Simulations

Feb 25, 2025

Abstract: We investigate the reliable use of simulated survey responses from large language models (LLMs) through the lens of uncertainty quantification. Our approach converts synthetic data into confidence sets for population parameters of human responses, addressing the distribution shift between the simulated and real populations. A key innovation lies in determining the optimal number of simulated responses: too many produce overly narrow confidence sets with poor coverage, while too few yield excessively loose estimates. To resolve this, our method adaptively selects the simulation sample size, ensuring valid average-case coverage guarantees. It is broadly applicable to any LLM, irrespective of its fidelity, and any procedure for constructing confidence sets. Additionally, the selected sample size quantifies the degree of misalignment between the LLM and the target human population. We illustrate our method on real datasets and LLMs.

A Unified Evaluation Framework for Epistemic Predictions

Jan 28, 2025

Abstract: Predictions of uncertainty-aware models are diverse, ranging from single point estimates (often averaged over prediction samples) to predictive distributions, to set-valued or credal-set representations. We propose a novel unified evaluation framework for uncertainty-aware classifiers, applicable to a wide range of model classes, which allows users to tailor the trade-off between accuracy and precision of predictions via a suitably designed performance metric. This makes possible the selection of the most suitable model for a particular real-world application as a function of the desired trade-off. Our experiments, concerning Bayesian, ensemble, evidential, deterministic, credal and belief function classifiers on the CIFAR-10, MNIST and CIFAR-100 datasets, show that the metric behaves as desired.

A Similarity Measure Between Functions with Applications to Statistical Learning and Optimization

Jan 14, 2025

Abstract: In this note, we present a novel measure of similarity between two functions. It quantifies how the sub-optimality gaps of two functions convert to each other, and unifies several existing notions of functional similarity. We show that it has convenient operation rules, and illustrate its use in empirical risk minimization and non-stationary online optimization.

A Particle Algorithm for Mean-Field Variational Inference

Dec 29, 2024

Abstract: Variational inference is a fast and scalable alternative to Markov chain Monte Carlo and has been widely applied to posterior inference tasks in statistics and machine learning. A traditional approach for implementing mean-field variational inference (MFVI) is coordinate ascent variational inference (CAVI), which relies crucially on parametric assumptions on complete conditionals. In this paper, we introduce a novel particle-based algorithm for mean-field variational inference, which we term PArticle VI (PAVI). Notably, our algorithm does not rely on parametric assumptions on complete conditionals, and it applies to the nonparametric setting. We provide a non-asymptotic finite-particle convergence guarantee for our algorithm. To our knowledge, this is the first end-to-end guarantee for particle-based MFVI.

Localized exploration in contextual dynamic pricing achieves dimension-free regret

Dec 26, 2024

Abstract: We study the problem of contextual dynamic pricing with a linear demand model. We propose a novel localized exploration-then-commit (LetC) algorithm which starts with a pure exploration stage, followed by a refinement stage that explores near the learned optimal pricing policy, and finally enters a pure exploitation stage. The algorithm is shown to achieve a minimax optimal, dimension-free regret bound when the time horizon exceeds a polynomial of the covariate dimension. Furthermore, we provide a general theoretical framework that encompasses the entire time spectrum, demonstrating how to balance exploration and exploitation when the horizon is limited. The analysis is powered by a novel critical inequality that depicts the exploration-exploitation trade-off in dynamic pricing, mirroring its existing counterpart for the bias-variance trade-off in regularized regression. Our theoretical results are validated by extensive experiments on synthetic and real-world data.

Adaptive Transfer Clustering: A Unified Framework

Oct 28, 2024

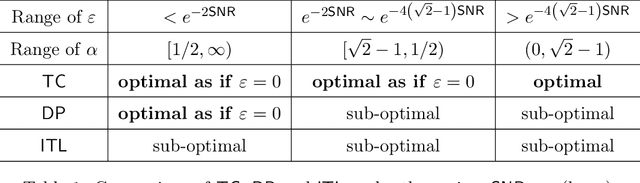

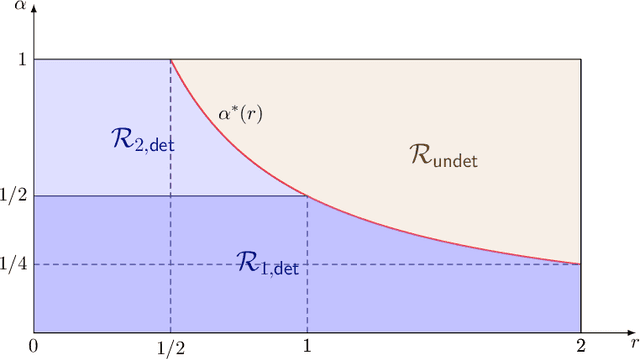

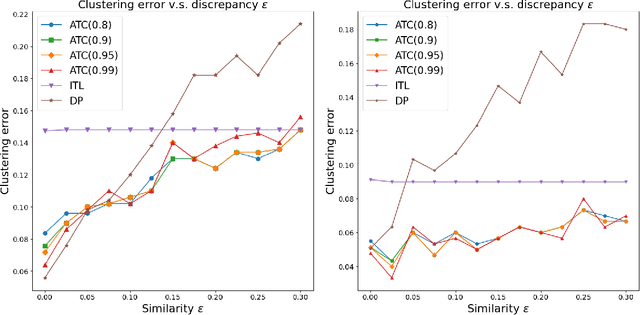

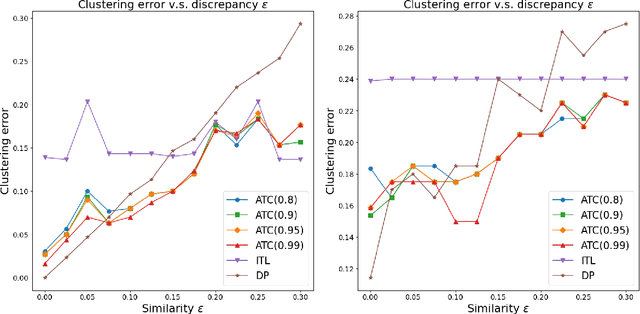

Abstract: We propose a general transfer learning framework for clustering given a main dataset and an auxiliary one about the same subjects. The two datasets may reflect similar but different latent grouping structures of the subjects. We propose an adaptive transfer clustering (ATC) algorithm that automatically leverages the commonality in the presence of unknown discrepancy, by optimizing an estimated bias-variance decomposition. It applies to a broad class of statistical models including Gaussian mixture models, stochastic block models, and latent class models. A theoretical analysis proves the optimality of ATC under the Gaussian mixture model and explicitly quantifies the benefit of transfer. Extensive simulations and real data experiments confirm our method's effectiveness in various scenarios.
