Kagan Tumer

Safe Multiagent Coordination via Entropic Exploration

Dec 29, 2024

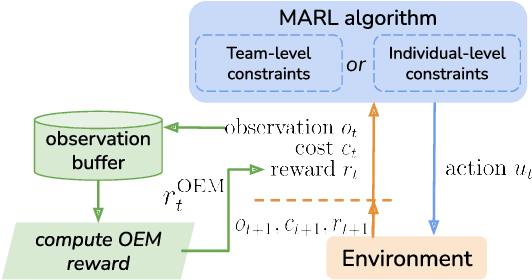

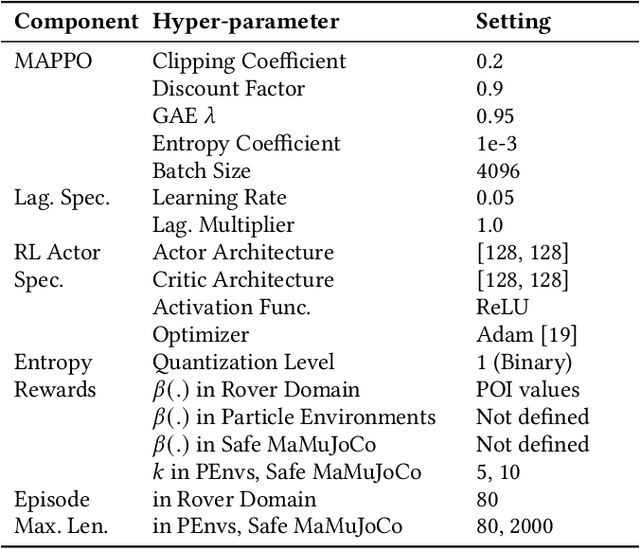

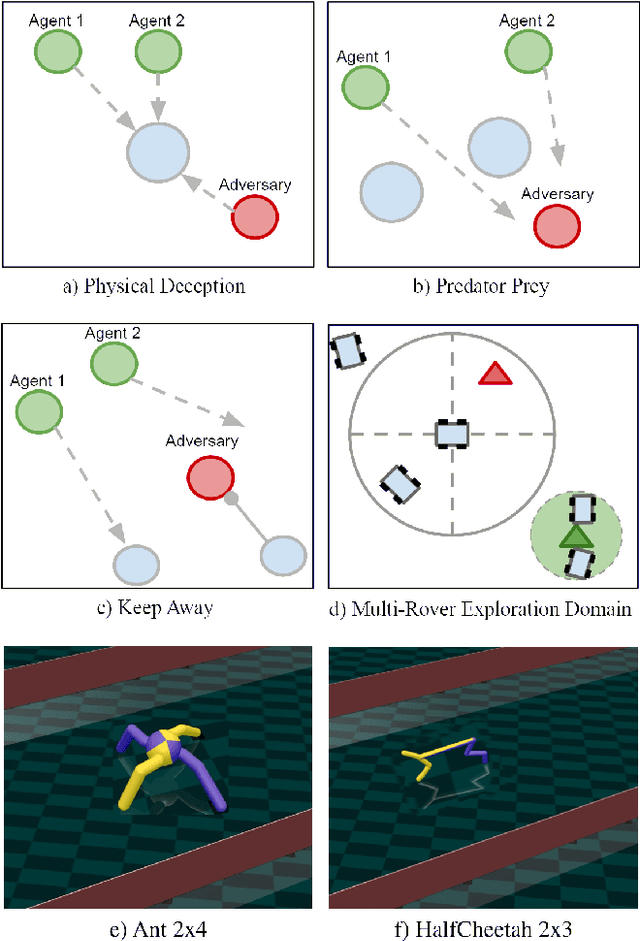

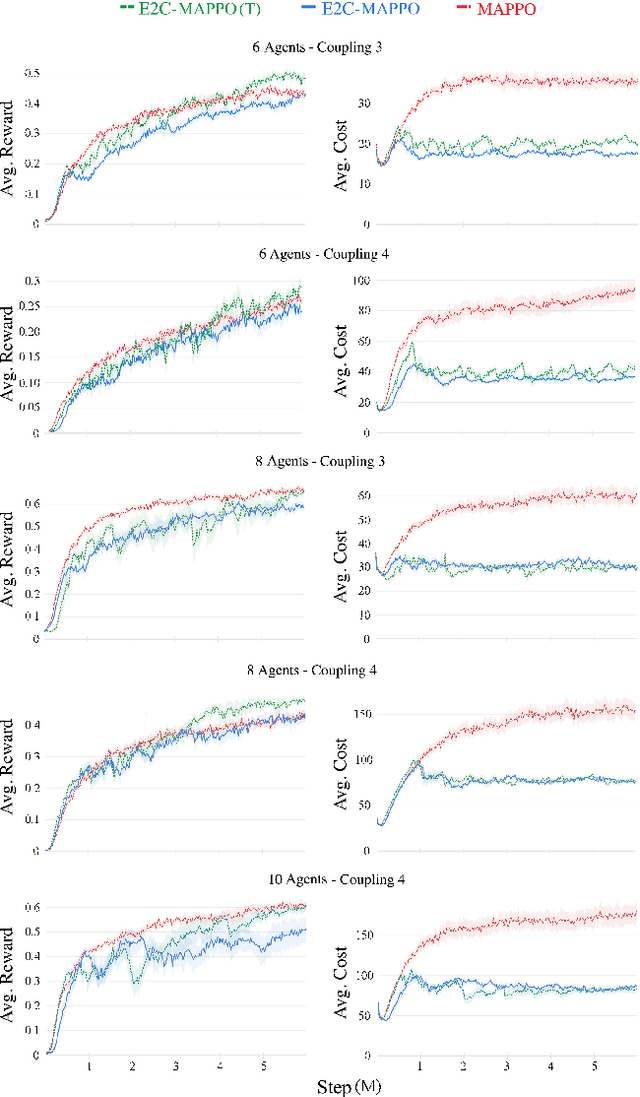

Abstract: Many real-world multiagent learning problems involve safety concerns. In these setups, typical safe reinforcement learning algorithms constrain agents' behavior, limiting exploration, a crucial component for discovering effective cooperative multiagent behaviors. Moreover, the multiagent literature typically models individual constraints for each agent and has yet to investigate the benefits of using joint team constraints. In this work, we analyze these team constraints from a theoretical and practical perspective and propose entropic exploration for constrained multiagent reinforcement learning (E2C) to address the exploration issue. E2C leverages observation entropy maximization to incentivize exploration and facilitate learning safe and effective cooperative behaviors. Experiments across increasingly complex domains show that E2C agents match or surpass common unconstrained and constrained baselines in task performance while reducing unsafe behaviors by up to 50%.
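
As a rough illustration of why observation entropy can act as an exploration signal, the sketch below computes the Shannon entropy of the empirical distribution over discretized observations. This is not E2C's estimator: the function name `observation_entropy` and the assumption that observations are hashable discrete symbols are introduced here only for illustration.

```python
from collections import Counter
from math import log

def observation_entropy(observations):
    """Shannon entropy (in nats) of the empirical observation distribution.

    Assumption: observations are already discretized into hashable symbols.
    """
    counts = Counter(observations)
    total = len(observations)
    return -sum((c / total) * log(c / total) for c in counts.values())

# An agent that keeps seeing the same observation has zero entropy,
# while one that visits four observations equally often has entropy log(4).
narrow = ["a"] * 8
broad = ["a", "b", "c", "d"] * 2
narrow_entropy = observation_entropy(narrow)
broad_entropy = observation_entropy(broad)
```

Rewarding higher values of such a quantity pushes agents toward a wider spread of observations, which is the intuition the abstract appeals to.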

Evolutionary Reinforcement Learning for Sample-Efficient Multiagent Coordination

Jun 18, 2019

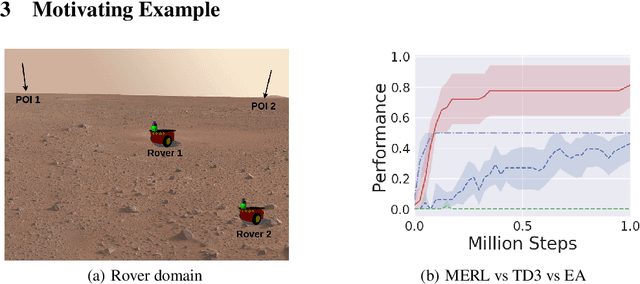

Abstract: A key challenge for Multiagent RL (Reinforcement Learning) is the design of agent-specific, local rewards that are aligned with sparse global objectives. In this paper, we introduce MERL (Multiagent Evolutionary RL), a hybrid algorithm that does not require an explicit alignment between local and global objectives. MERL uses fast, policy-gradient-based learning for each agent by utilizing their dense local rewards. Concurrently, an evolutionary algorithm is used to recruit agents into a team by directly optimizing the sparser global objective. We explore problems that require coupling (a minimum number of agents required to coordinate for success), where the degree of coupling is not known to the agents. We demonstrate that MERL's integrated approach is more sample-efficient and retains performance better with increasing coupling orders compared to MADDPG, the state-of-the-art policy-gradient algorithm for multiagent coordination.

Collaborative Evolutionary Reinforcement Learning

May 06, 2019

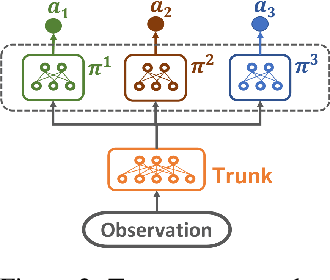

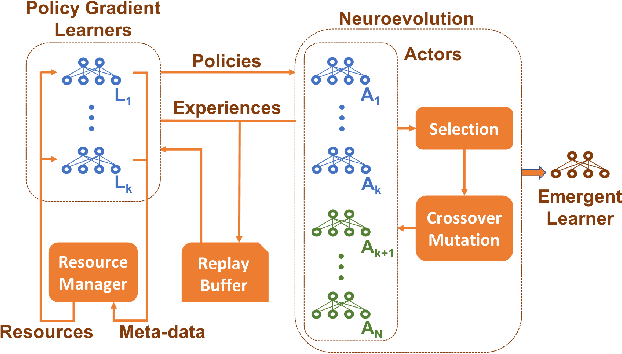

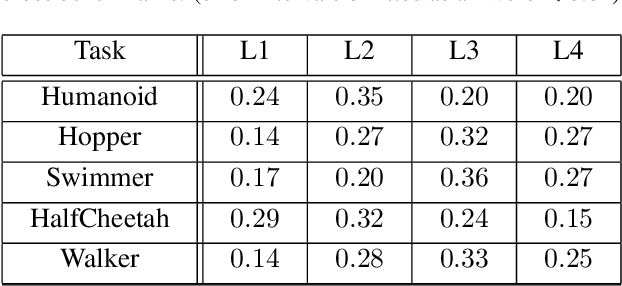

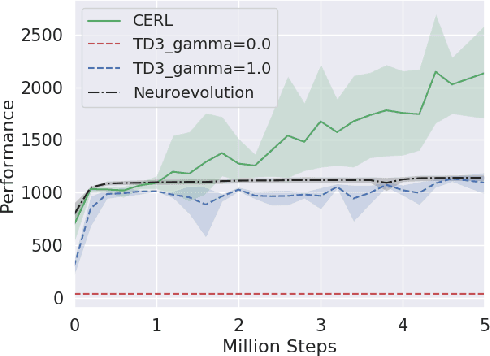

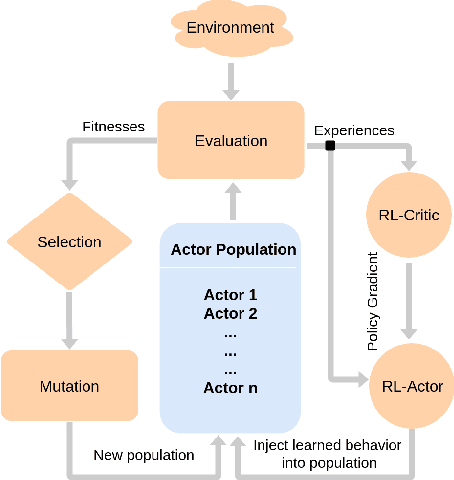

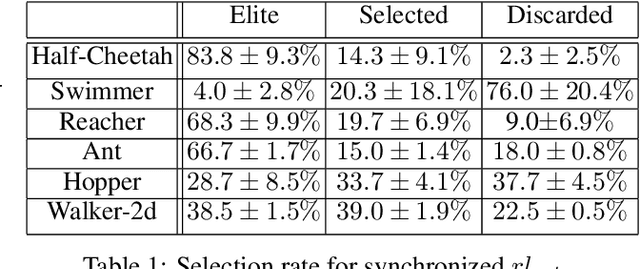

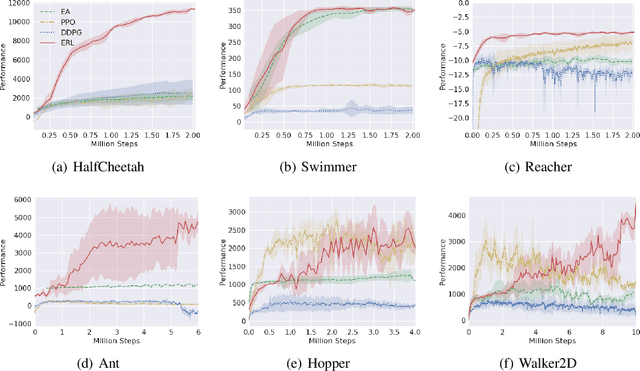

Abstract: Deep reinforcement learning algorithms have been successfully applied to a range of challenging control tasks. However, these methods typically struggle with achieving effective exploration and are extremely sensitive to the choice of hyperparameters. One reason is that most approaches use a noisy version of their operating policy to explore, thereby limiting the range of exploration. In this paper, we introduce Collaborative Evolutionary Reinforcement Learning (CERL), a scalable framework that comprises a portfolio of policies that simultaneously explore and exploit diverse regions of the solution space. A collection of learners (typically proven algorithms like TD3) optimize over varying time-horizons, leading to this diverse portfolio. All learners contribute to and use a shared replay buffer to achieve greater sample efficiency. Computational resources are dynamically distributed to favor the best learners as a form of online algorithm selection. Neuroevolution binds this entire process to generate a single emergent learner that exceeds the capabilities of any individual learner. Experiments in a range of continuous control benchmarks demonstrate that the emergent learner significantly outperforms its composite learners while remaining overall more sample-efficient, notably solving the MuJoCo Humanoid benchmark where all of its composite learners (TD3) fail entirely in isolation.

Evolution-Guided Policy Gradient in Reinforcement Learning

Oct 27, 2018

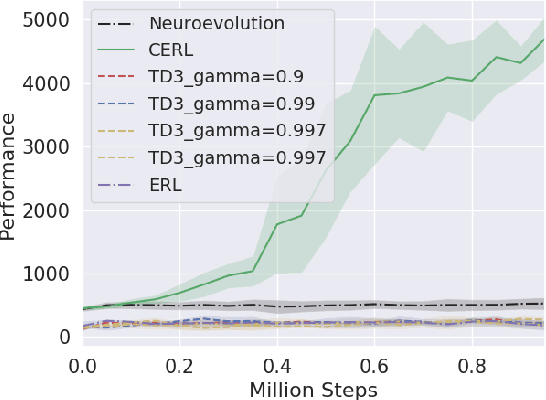

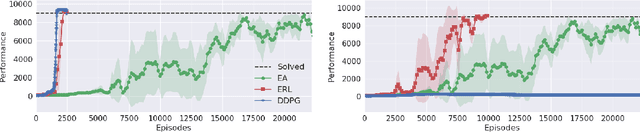

Abstract: Deep Reinforcement Learning (DRL) algorithms have been successfully applied to a range of challenging control tasks. However, these methods typically suffer from three core difficulties: temporal credit assignment with sparse rewards, lack of effective exploration, and brittle convergence properties that are extremely sensitive to hyperparameters. Collectively, these challenges severely limit the applicability of these approaches to real-world problems. Evolutionary Algorithms (EAs), a class of black-box optimization techniques inspired by natural evolution, are well suited to address each of these three challenges. However, EAs typically suffer from high sample complexity and struggle to solve problems that require optimization of a large number of parameters. In this paper, we introduce Evolutionary Reinforcement Learning (ERL), a hybrid algorithm that leverages the population of an EA to provide diversified data to train an RL agent, and reinserts the RL agent into the EA population periodically to inject gradient information into the EA. ERL inherits EA's ability of temporal credit assignment with a fitness metric, effective exploration with a diverse set of policies, and stability of a population-based approach, and complements it with off-policy DRL's ability to leverage gradients for higher sample efficiency and faster learning. Experiments in a range of challenging continuous control benchmarks demonstrate that ERL significantly outperforms prior DRL and EA methods.

An Introduction to Collective Intelligence

Aug 17, 1999

Abstract: This paper surveys the emerging science of how to design a "COllective INtelligence" (COIN). A COIN is a large multi-agent system where: (i) there is little to no centralized communication or control; and (ii) there is a provided world utility function that rates the possible histories of the full system. In particular, we are interested in COINs in which each agent runs a reinforcement learning (RL) algorithm. Rather than use a conventional modeling approach (e.g., model the system dynamics, and hand-tune agents to cooperate), we aim to solve the COIN design problem implicitly, via the "adaptive" character of the RL algorithms of each of the agents. This approach introduces an entirely new, profound design problem: Assuming the RL algorithms are able to achieve high rewards, what reward functions for the individual agents will, when pursued by those agents, result in high world utility? In other words, what reward functions will best ensure that we do not have phenomena like the tragedy of the commons, Braess's paradox, or the liquidity trap? Although still very young, research specifically concentrating on the COIN design problem has already resulted in successes in artificial domains, in particular in packet-routing, the leader-follower problem, and in variants of Arthur's El Farol bar problem. It is expected that as it matures and draws upon other disciplines related to COINs, this research will greatly expand the range of tasks addressable by human engineers. Moreover, in addition to drawing on them, such a fully developed science of COIN design may provide much insight into other already established scientific fields, such as economics, game theory, and population biology.

Collective Intelligence for Control of Distributed Dynamical Systems

Aug 17, 1999

Abstract: We consider the El Farol bar problem, also known as the minority game (W. B. Arthur, The American Economic Review, 84(2):406-411 (1994); D. Challet and Y.C. Zhang, Physica A, 256:514 (1998)). We view it as an instance of the general problem of how to configure the nodal elements of a distributed dynamical system so that they do not "work at cross purposes", in that their collective dynamics avoids frustration and thereby achieves a provided global goal. We summarize a mathematical theory for such configuration applicable when (as in the bar problem) the global goal can be expressed as minimizing a global energy function and the nodes can be expressed as minimizers of local free energy functions. We show that a system designed with that theory performs nearly optimally for the bar problem.

Robust Combining of Disparate Classifiers through Order Statistics

May 20, 1999

Abstract: Integrating the outputs of multiple classifiers via combiners or meta-learners has led to substantial improvements in several difficult pattern recognition problems. In the typical setting investigated so far, each classifier is trained on data taken or resampled from a common data set, or (almost) randomly selected subsets thereof, and thus experiences similar quality of training data. However, in certain situations where data is acquired and analyzed on-line at several geographically distributed locations, the quality of data may vary substantially, leading to large discrepancies in performance of individual classifiers. In this article we introduce and investigate a family of classifiers based on order statistics, for robust handling of such cases. Based on a mathematical modeling of how the decision boundaries are affected by order statistic combiners, we derive expressions for the reductions in error expected when such combiners are used. We show analytically that the selection of the median, the maximum and, in general, the $i^{th}$ order statistic improves classification performance. Furthermore, we introduce the trim and spread combiners, both based on linear combinations of the ordered classifier outputs, and show that they are quite beneficial in the presence of outliers or uneven classifier performance. Experimental results on several public domain data sets corroborate these findings.
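
To make the idea of combining on ordered outputs concrete, here is a minimal sketch of an order-statistic combiner and a trimmed-mean combiner. The function names, the 1-based rank convention, and the specific trimming rule (drop the `k` lowest and `k` highest scores per class) are assumptions made for illustration; the paper's exact definitions of the trim and spread combiners may differ.

```python
def order_statistic_combine(outputs, i):
    """Per class, return the i-th smallest score across classifiers.

    outputs: one score list per classifier (hypothetical layout).
    i: 1-based rank, so i=1 is the minimum and i=len(outputs) the maximum.
    """
    n_classes = len(outputs[0])
    return [sorted(clf[c] for clf in outputs)[i - 1] for c in range(n_classes)]

def trim_combine(outputs, k):
    """Per class, average the ordered scores after dropping k from each end."""
    n = len(outputs)
    combined = []
    for c in range(len(outputs[0])):
        kept = sorted(clf[c] for clf in outputs)[k:n - k]
        combined.append(sum(kept) / len(kept))
    return combined

def predict(scores):
    """Index of the class with the highest combined score."""
    return max(range(len(scores)), key=scores.__getitem__)

# Three hypothetical classifiers scoring two classes; the third disagrees strongly.
outputs = [[0.7, 0.3], [0.6, 0.4], [0.1, 0.9]]
mean_scores = [sum(clf[c] for clf in outputs) / len(outputs) for c in range(2)]
median_scores = order_statistic_combine(outputs, 2)
max_scores = order_statistic_combine(outputs, 3)
trim_scores = trim_combine(outputs, 1)
```

In this toy case the plain average is pulled toward class 1 by the single dissenting classifier, whereas the median and the trimmed mean still select class 0.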

Linear and Order Statistics Combiners for Pattern Classification

May 20, 1999

Abstract: Several researchers have experimentally shown that substantial improvements can be obtained in difficult pattern recognition problems by combining or integrating the outputs of multiple classifiers. This chapter provides an analytical framework to quantify the improvements in classification results due to combining. The results apply to both linear combiners and order statistics combiners. We first show that, to a first-order approximation, the error rate obtained over and above the Bayes error rate is directly proportional to the variance of the actual decision boundaries around the Bayes optimum boundary. Combining classifiers in output space reduces this variance, and hence reduces the "added" error. If N unbiased classifiers are combined by simple averaging, the added error rate can be reduced by a factor of N if the individual errors in approximating the decision boundaries are uncorrelated. Expressions are then derived for linear combiners which are biased or correlated, and the effect of output correlations on ensemble performance is quantified. For order-statistics-based nonlinear combiners, we derive expressions that indicate how much the median, the maximum and, in general, the $i^{th}$ order statistic can improve classifier performance. The analysis presented here facilitates the understanding of the relationships among error rates, classifier boundary distributions, and combining in output space. Experimental results on several public domain data sets are provided to illustrate the benefits of combining and to support the analytical results.

* 31 pages
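
The factor-of-N statement in the abstract above rests on a standard fact: the mean of N uncorrelated, zero-mean errors has 1/N of the variance of a single error. The small simulation below checks this numerically. Modeling the boundary errors as independent Gaussian offsets, along with the function name and default parameters, is an assumption made here for illustration and is not taken from the chapter.

```python
import random
from statistics import pvariance

def averaged_offset_variance(n_classifiers, n_trials=20000, sigma=1.0, seed=0):
    """Empirical variance of the mean boundary offset of n_classifiers.

    Assumption: each classifier's boundary offset is an independent,
    zero-mean Gaussian draw with standard deviation sigma.
    """
    rng = random.Random(seed)
    averaged = []
    for _ in range(n_trials):
        offsets = [rng.gauss(0.0, sigma) for _ in range(n_classifiers)]
        averaged.append(sum(offsets) / n_classifiers)
    return pvariance(averaged)

single = averaged_offset_variance(1)
combined = averaged_offset_variance(5)
ratio = single / combined  # expected to be close to N = 5
```

Because the added error is stated to be proportional to the boundary variance, a variance ratio near N corresponds to the factor-of-N reduction in added error for uncorrelated, unbiased classifiers.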

Ensembles of Radial Basis Function Networks for Spectroscopic Detection of Cervical Pre-Cancer

May 20, 1999

Abstract: The mortality related to cervical cancer can be substantially reduced through early detection and treatment. However, current detection techniques, such as Pap smear and colposcopy, fail to achieve a concurrently high sensitivity and specificity. In vivo fluorescence spectroscopy is a technique which quickly, non-invasively and quantitatively probes the biochemical and morphological changes that occur in pre-cancerous tissue. A multivariate statistical algorithm was used to extract clinically useful information from tissue spectra acquired from 361 cervical sites from 95 patients at 337, 380 and 460 nm excitation wavelengths. The multivariate statistical analysis was also employed to reduce the number of fluorescence excitation-emission wavelength pairs required to discriminate healthy tissue samples from pre-cancerous tissue samples. The use of connectionist methods such as multilayer perceptrons, radial basis function (RBF) networks, and ensembles of such networks was investigated. RBF ensemble algorithms based on fluorescence spectra potentially provide automated, near-real-time implementation of pre-cancer detection in the hands of non-experts. The results are more reliable, direct and accurate than those achieved by either human experts or multivariate statistical algorithms.

* 23 pages

General Principles of Learning-Based Multi-Agent Systems

May 10, 1999

Abstract: We consider the problem of how to design large decentralized multi-agent systems (MAS's) in an automated fashion, with little or no hand-tuning. Our approach has each agent run a reinforcement learning algorithm. This converts the problem into one of how to automatically set/update the reward functions for each of the agents so that the global goal is achieved. In particular we do not want the agents to "work at cross-purposes" as far as the global goal is concerned. We use the term artificial COllective INtelligence (COIN) to refer to systems that embody solutions to this problem. In this paper we present a summary of a mathematical framework for COINs. We then investigate the real-world applicability of the core concepts of that framework via two computer experiments: we show that our COINs perform near optimally in a difficult variant of Arthur's bar problem (and in particular avoid the tragedy of the commons for that problem), and we also illustrate optimal performance for our COINs in the leader-follower problem.

* 7 pages, 6 figures
