Junzheng Wu

A Benchmark for Multi-Lingual Vision-Language Learning in Remote Sensing Image Captioning

Mar 06, 2025

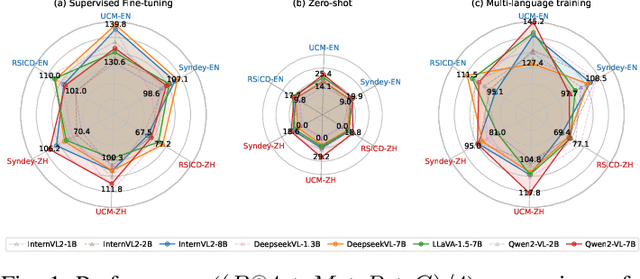

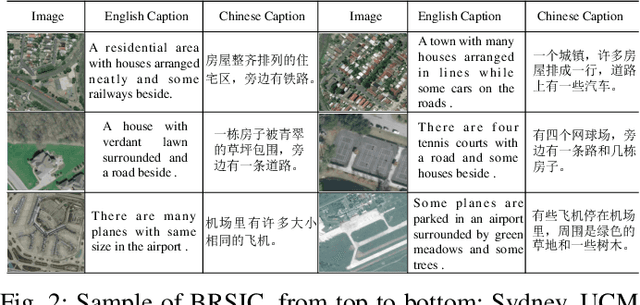

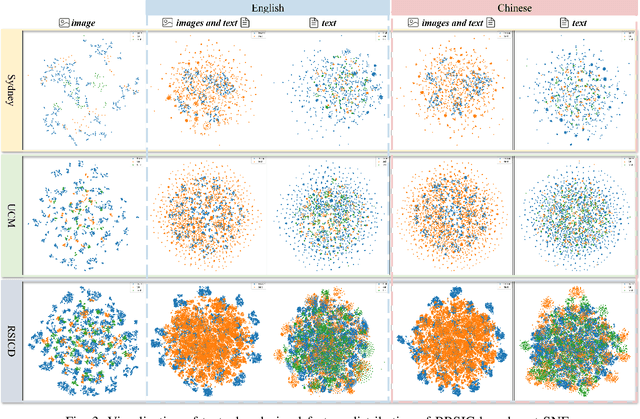

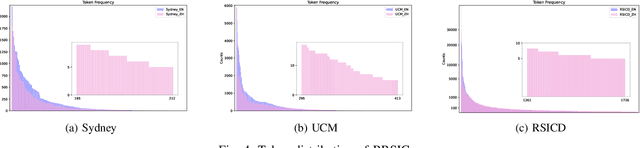

Abstract: Remote Sensing Image Captioning (RSIC) is a cross-modal field bridging vision and language, aimed at automatically generating natural language descriptions of features and scenes in remote sensing imagery. Despite significant advances in developing sophisticated methods and large-scale datasets for training vision-language models (VLMs), two critical challenges persist: the scarcity of non-English descriptive datasets and the lack of multilingual capability evaluation for models. These limitations fundamentally impede the progress and practical deployment of RSIC, particularly in the era of large VLMs. To address these challenges, this paper makes several contributions. First, we introduce and analyze BRSIC (Bilingual Remote Sensing Image Captioning), a comprehensive bilingual dataset that enriches three established English RSIC datasets with Chinese descriptions, encompassing 13,634 images paired with 68,170 bilingual captions. Building upon this foundation, we develop a systematic evaluation framework that addresses the prevalent inconsistency in evaluation protocols, enabling rigorous assessment of model performance through standardized retraining procedures on BRSIC. Furthermore, we present an extensive empirical study of eight state-of-the-art large vision-language models (LVLMs), examining their capabilities across multiple paradigms, including zero-shot inference, supervised fine-tuning, and multilingual training. This comprehensive evaluation provides crucial insights into the strengths and limitations of current LVLMs in handling multilingual remote sensing tasks. Additionally, our cross-dataset transfer experiments reveal interesting findings. The code and data will be available at https://github.com/mrazhou/BRSIC.

A Dual Neighborhood Hypergraph Neural Network for Change Detection in VHR Remote Sensing Images

Feb 27, 2022

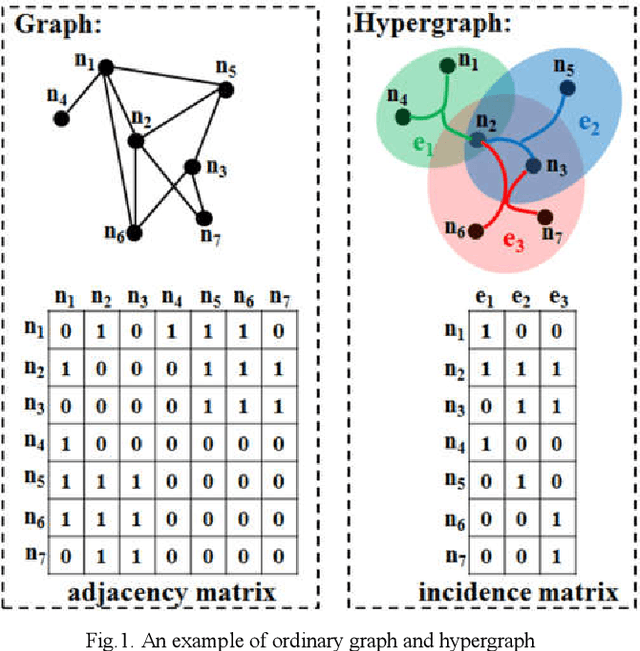

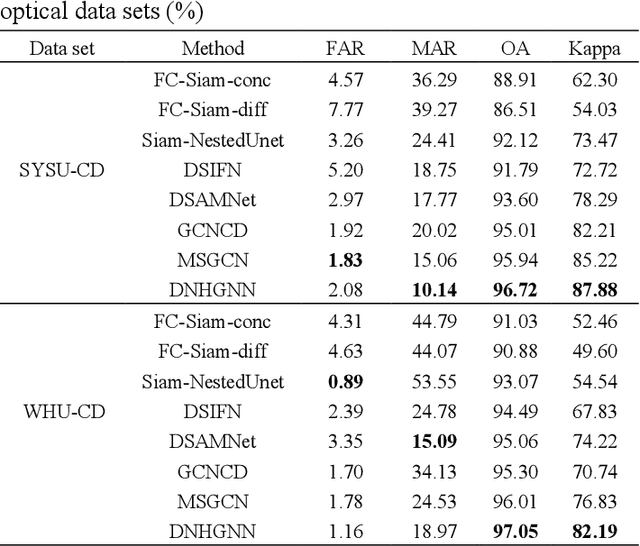

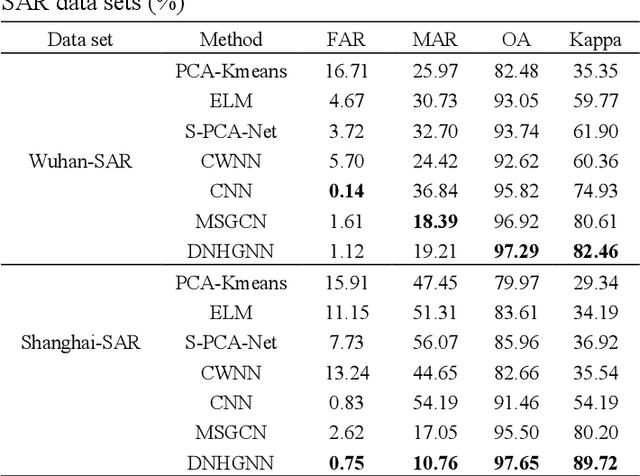

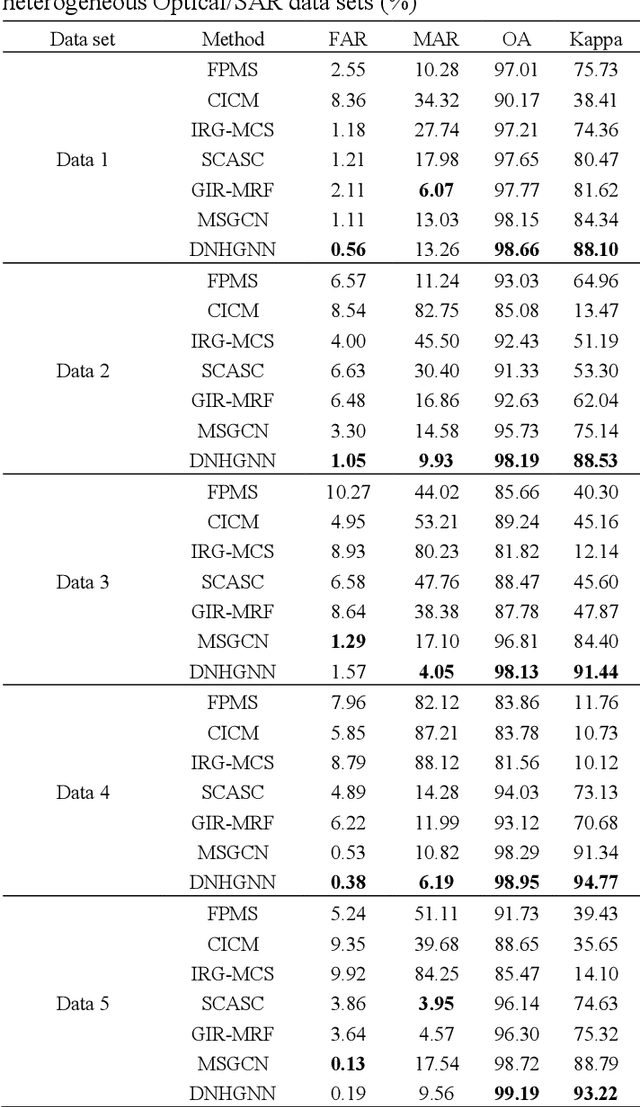

Abstract: Very high spatial resolution (VHR) remote sensing images are an extremely valuable source for monitoring changes occurring on the Earth's surface. However, precisely detecting relevant changes in VHR images remains a challenge because of the complex relationships among ground objects. To address this limitation, this article proposes a dual neighborhood hypergraph neural network that combines multiscale superpixel segmentation and hypergraph convolution to model and exploit these relationships. First, the bi-temporal image pairs are segmented at two scales and fed to a pre-trained U-net to obtain node features, treating each object at the fine scale as a node. The dual neighborhood is then defined using the father-child and adjacency relationships of the segmented objects to construct the hypergraph, which lets the model represent higher-order structured information that is far more complex than pairwise relationships. Hypergraph convolutions are then applied to the constructed hypergraph to propagate label information from a small number of labeled nodes to the unlabeled ones through the node-edge-node transform. Moreover, to alleviate the problem of imbalanced samples, the focal loss function is adopted to train the hypergraph neural network. Experimental results on optical, SAR, and heterogeneous optical/SAR data sets demonstrate that the proposed method achieves better effectiveness and robustness than many state-of-the-art methods.
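
The node-edge-node transform mentioned in the abstract can be illustrated with a minimal NumPy sketch. It assumes the widely used normalized hypergraph convolution, X' = Dv^(-1/2) H W De^(-1) H^T Dv^(-1/2) X Θ; the abstract does not state which formulation the paper uses, so the normalization, the function name `hypergraph_conv`, and the toy data are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def hypergraph_conv(X, H, Theta, edge_weights=None):
    """One hypergraph convolution step (assumed normalized form, no activation).

    X:     (n_nodes, n_features) node features
    H:     (n_nodes, n_edges) incidence matrix, H[v, e] = 1 if node v is in hyperedge e
    Theta: (n_features, n_out) learnable projection
    """
    H = np.asarray(H, dtype=float)
    n_edges = H.shape[1]
    w = np.ones(n_edges) if edge_weights is None else np.asarray(edge_weights, dtype=float)

    node_degree = H @ w            # weighted number of hyperedges per node
    edge_degree = H.sum(axis=0)    # number of nodes per hyperedge

    # Safe inverses: isolated nodes and empty hyperedges contribute zero.
    dv_inv_sqrt = np.zeros_like(node_degree)
    dv_inv_sqrt[node_degree > 0] = node_degree[node_degree > 0] ** -0.5
    de_inv = np.zeros_like(edge_degree)
    de_inv[edge_degree > 0] = 1.0 / edge_degree[edge_degree > 0]

    # Node -> edge: gather normalized node features into each hyperedge.
    edge_features = (H * dv_inv_sqrt[:, None]).T @ X
    edge_features = (w * de_inv)[:, None] * edge_features

    # Edge -> node: scatter hyperedge features back to member nodes.
    node_features = dv_inv_sqrt[:, None] * (H @ edge_features)
    return node_features @ Theta


# Toy example: three nodes that all belong to a single hyperedge.
X_demo = np.array([[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]])
H_demo = np.array([[1.0], [1.0], [1.0]])
out_demo = hypergraph_conv(X_demo, H_demo, np.eye(2))
```

In this toy case every node ends up with the mean feature of its hyperedge, which shows how information from a few labeled nodes can reach unlabeled nodes that share a hyperedge with them.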

An Improved Discriminative Optimization for 3D Rigid Point Cloud Registration

Apr 18, 2021

Abstract: The Discriminative Optimization (DO) algorithm has proven highly successful in 3D point cloud registration. In the original DO, the feature (descriptor) of two point clouds is defined as a histogram whose elements indicate the weights of scene points on the "front" or "back" side of a model point. In this paper, we extend the histogram so that it indicates sides along three directions instead of one: "front-back", "up-down", and "clockwise-anticlockwise". In addition, we reweight the extended histogram according to the distribution of the model points. We evaluate the proposed Improved DO on the Stanford Bunny and Oxford SensatUrban datasets and compare it with six classical state-of-the-art point cloud registration algorithms. The experimental results demonstrate that our algorithm achieves comparable performance in point registration accuracy and root-mean-square error.
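
As a rough illustration of the "front"/"back" histogram described for the original DO, the sketch below accumulates a Gaussian weight for each scene point into the front or back bin of every model point, depending on which side of the model point's normal the scene point lies. The Gaussian weighting, the `sigma` parameter, the normalization to unit sum, and the function name `front_back_histogram` are assumptions made for illustration; the abstract does not specify them, and the extended "up-down" and "clockwise-anticlockwise" bins are not reproduced here.

```python
import numpy as np


def front_back_histogram(model_pts, model_normals, scene_pts, sigma=1.0):
    """Front/back feature histogram (hypothetical sketch).

    model_pts:     (M, 3) model points
    model_normals: (M, 3) unit normals at the model points
    scene_pts:     (S, 3) scene points
    Returns a vector of length 2*M: M "front" bins followed by M "back" bins.
    """
    # Offsets from every model point to every scene point: shape (M, S, 3).
    diff = scene_pts[None, :, :] - model_pts[:, None, :]

    # Assumed Gaussian weight: closer scene points contribute more.
    weights = np.exp(-np.sum(diff ** 2, axis=2) / sigma ** 2)

    # Signed distance along the normal decides front (> 0) or back (<= 0).
    side = np.einsum("msk,mk->ms", diff, model_normals)
    front = np.sum(weights * (side > 0), axis=1)
    back = np.sum(weights * (side <= 0), axis=1)

    h = np.concatenate([front, back])
    total = h.sum()
    return h / total if total > 0 else h


# Toy example: one model point at the origin with normal +z,
# one scene point in front of it and one behind it at equal distance.
model_demo = np.array([[0.0, 0.0, 0.0]])
normal_demo = np.array([[0.0, 0.0, 1.0]])
scene_demo = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0]])
h_demo = front_back_histogram(model_demo, normal_demo, scene_demo)
```

With the two scene points placed symmetrically, the front and back bins receive equal weight. An extension along the lines of the abstract would add further bin pairs for the other two directions.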

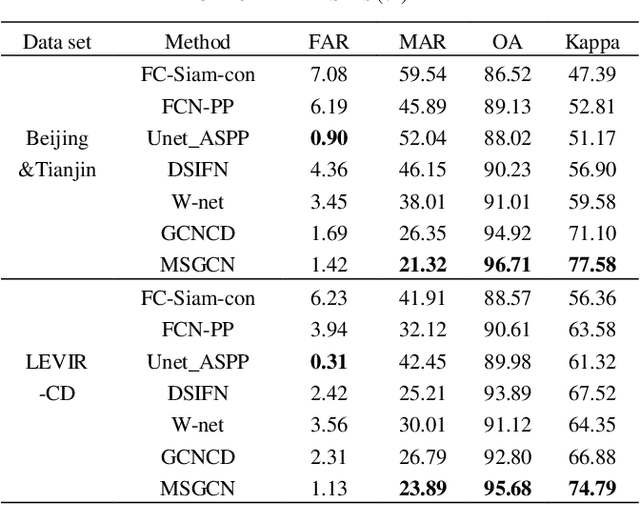

A Multiscale Graph Convolutional Network for Change Detection in Homogeneous and Heterogeneous Remote Sensing Images

Feb 16, 2021

Abstract: Change detection (CD) in remote sensing images has been an ever-expanding area of research. Although many methods using various techniques have been proposed to date, accurately identifying changes is still a great challenge, especially in high-resolution or heterogeneous situations, because it is difficult to effectively model the features of ground objects with different patterns. In this paper, a novel CD method based on the graph convolutional network (GCN) and a multiscale object-based technique is proposed for both homogeneous and heterogeneous images. First, object-wise high-level features are obtained through a pre-trained U-net and multiscale segmentations. Treating each parcel as a node, graph representations are formed and then fed into the proposed multiscale graph convolutional network, with each channel corresponding to one scale. The multiscale GCN propagates label information from a small number of labeled nodes to the unlabeled ones. Further, to comprehensively incorporate the information from the output channels of the multiscale GCN, a fusion strategy is designed using the father-child relationships between scales. Extensive experiments on optical, SAR, and heterogeneous optical/SAR data sets demonstrate that the proposed method outperforms some state-of-the-art methods in both qualitative and quantitative evaluations. In addition, the influences of some factors are also discussed.
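
The label propagation described above rests on graph convolution over parcel nodes. The following NumPy sketch shows a single graph convolution layer for one scale, assuming the common symmetric-normalization rule Z = ReLU(D^(-1/2) (A + I) D^(-1/2) X W). The abstract does not specify the propagation rule, the graph construction, or the fusion strategy, so the function names and the toy graph are illustrative assumptions.

```python
import numpy as np


def normalized_adjacency(A):
    """Symmetrically normalize an adjacency matrix after adding self-loops."""
    A_hat = np.asarray(A, dtype=float) + np.eye(A.shape[0])
    degree = A_hat.sum(axis=1)          # >= 1 because of the self-loops
    d_inv_sqrt = degree ** -0.5
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(A_norm, X, W, activation=True):
    """One graph convolution: aggregate neighbor features, then project."""
    Z = A_norm @ X @ W
    return np.maximum(Z, 0.0) if activation else Z


# Toy example: two connected parcels (nodes) with one feature each.
A_demo = np.array([[0.0, 1.0], [1.0, 0.0]])
X_demo = np.array([[1.0], [3.0]])
W_demo = np.array([[1.0]])
Z_demo = gcn_layer(normalized_adjacency(A_demo), X_demo, W_demo)
```

Here each node's output is the average of its own feature and its neighbor's. In a multiscale setup, one such channel per segmentation scale would be run in parallel and its outputs fused afterward.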
