Junaid Malik

Department of Computing Sciences, Tampere University, Finland

2D Self-Organized ONN Model For Handwritten Text Recognition

Jul 17, 2022

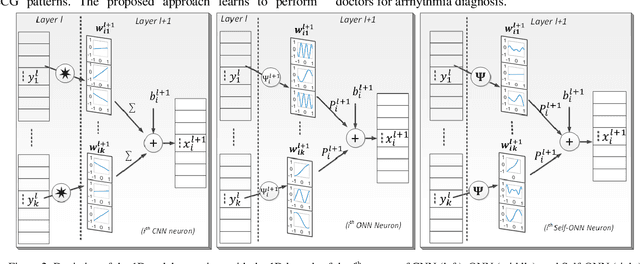

Abstract: Deep Convolutional Neural Networks (CNNs) have recently reached state-of-the-art Handwritten Text Recognition (HTR) performance. However, recent research has shown that the learning performance of typical CNNs is limited since they are homogeneous networks with a simple (linear) neuron model. With their heterogeneous network structure incorporating non-linear neurons, Operational Neural Networks (ONNs) have recently been proposed to address this drawback. Self-ONNs are self-organized variations of ONNs with the generative neuron model that can generate any non-linear function using the Taylor approximation. In this study, to improve the state-of-the-art performance level in HTR, 2D Self-organized ONNs (Self-ONNs) are proposed as the core of a novel network model. Moreover, deformable convolutions, which have recently been shown to handle variations in writing styles better, are utilized in this study. The results over the IAM English dataset and HADARA80P Arabic dataset show that the proposed model with the operational layers of Self-ONNs significantly improves Character Error Rate (CER) and Word Error Rate (WER). Compared with their CNN counterparts, Self-ONNs reduce CER and WER by 1.2% and 3.4% on the HADARA80P dataset and by 0.199% and 1.244% on the IAM dataset. The results over the benchmark IAM demonstrate that the proposed model with the operational layers of Self-ONNs outperforms recent deep CNN models by a significant margin while the use of Self-ONNs with deformable convolutions demonstrates exceptional results.
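The generative neuron mentioned in the abstract can be illustrated with a small sketch. It assumes the power-series form commonly used in the Self-ONN literature, where the output is a sum of ordinary convolutions applied to element-wise powers of the input; the function name `generative_neuron_1d`, the 1D setting, and the parameter layout are illustrative choices here, not the authors' implementation.

```python
def generative_neuron_1d(x, weights, bias=0.0):
    """Sketch of a 1D generative neuron (assumed power-series form).

    x       : list of input samples
    weights : list of Q kernels of equal length K; weights[q-1] is applied to x**q
    bias    : scalar offset

    Computes y[i] = bias + sum_{q=1..Q} sum_{j=0..K-1} weights[q-1][j] * x[i+j]**q
    over all valid positions (no padding).
    """
    k = len(weights[0])
    out = []
    for i in range(len(x) - k + 1):
        acc = bias
        for q, kernel in enumerate(weights, start=1):
            for j in range(k):
                acc += kernel[j] * x[i + j] ** q
        out.append(acc)
    return out


# With Q = 1 the neuron reduces to an ordinary (linear) convolutional neuron.
linear = generative_neuron_1d([1.0, 2.0, 3.0], [[1.0, 0.0]])

# With Q = 2 a second kernel acts on the squared input, adding non-linearity.
quadratic = generative_neuron_1d([1.0, 2.0, 3.0], [[0.0, 0.0], [1.0, 1.0]])
```

Because each power has its own learnable kernel, the non-linearity is shaped during training rather than picked from a fixed operator library, which is the self-organization property the abstract refers to.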

Blind ECG Restoration by Operational Cycle-GANs

Jan 29, 2022

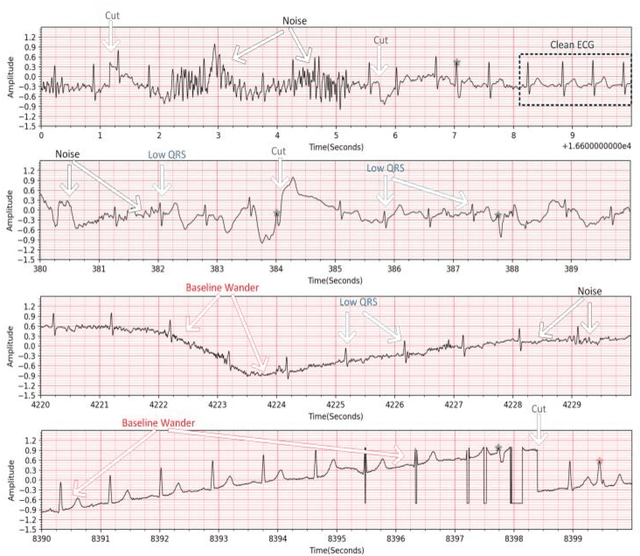

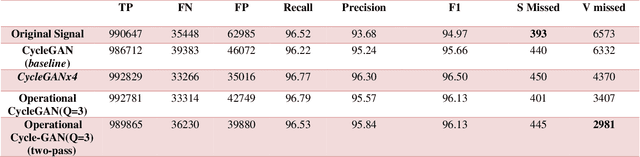

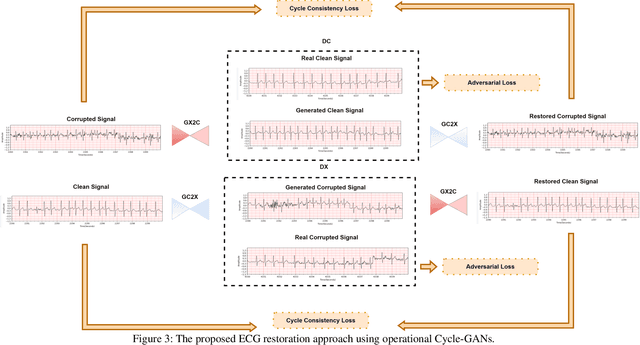

Abstract: Continuous long-term monitoring of electrocardiography (ECG) signals is crucial for the early detection of cardiac abnormalities such as arrhythmia. Non-clinical ECG recordings acquired by Holter and wearable ECG sensors often suffer from severe artifacts such as baseline wander, signal cuts, motion artifacts, variations in QRS amplitude, noise, and other interferences. Usually, a set of such artifacts occurs on the same ECG signal with varying severity and duration, and this makes an accurate diagnosis by machines or medical doctors extremely difficult. Although numerous studies have attempted ECG denoising, they naturally fail to restore the actual ECG signal corrupted with such artifacts due to their simple and naive noise model. In this study, we propose a novel approach for blind ECG restoration using cycle-consistent generative adversarial networks (Cycle-GANs) where the quality of the signal can be improved to a clinical level ECG regardless of the type and severity of the artifacts corrupting the signal. To further boost the restoration performance, we propose 1D operational Cycle-GANs with the generative neuron model. The proposed approach has been evaluated extensively using one of the largest benchmark ECG datasets from the China Physiological Signal Challenge (CPSC-2020) with more than one million beats. Besides the quantitative and qualitative evaluations, a group of cardiologists performed medical evaluations to validate the quality and usability of the restored ECG, especially for an accurate arrhythmia diagnosis.

Image denoising by Super Neurons: Why go deep?

Nov 29, 2021

Abstract: Classical image denoising methods utilize the non-local self-similarity principle to effectively recover image content from noisy images. Current state-of-the-art methods use deep convolutional neural networks (CNNs) to effectively learn the mapping from noisy to clean images. Deep denoising CNNs manifest a high learning capacity and integrate non-local information owing to the large receptive field yielded by a cascade of numerous hidden layers. However, deep networks are also computationally complex and require large data for training. To address these issues, this study focuses on Self-organized Operational Neural Networks (Self-ONNs) empowered by a novel neuron model that can achieve a similar or better denoising performance with a compact and shallow model. Recently, the concept of super neurons has been introduced, which augments the non-linear transformations of generative neurons by utilizing non-localized kernel locations for an enhanced receptive field size. This is the key accomplishment which removes the need for a deep network configuration. As the integration of non-local information is known to benefit denoising, in this work we investigate the use of super neurons for both synthetic and real-world image denoising. We also discuss the practical issues in implementing the super neuron model on GPUs and propose a trade-off between the heterogeneity of non-localized operations and computational complexity. Our results demonstrate that with the same width and depth, Self-ONNs with super neurons provide a significant boost of denoising performance over the networks with generative and convolutional neurons for both denoising tasks. Moreover, results demonstrate that Self-ONNs with super neurons can achieve competitive and superior denoising performance compared with well-known deep CNN denoisers for synthetic and real-world denoising, respectively.

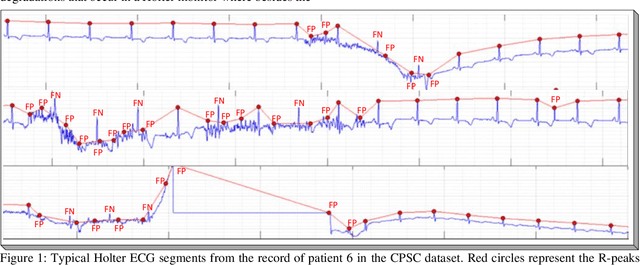

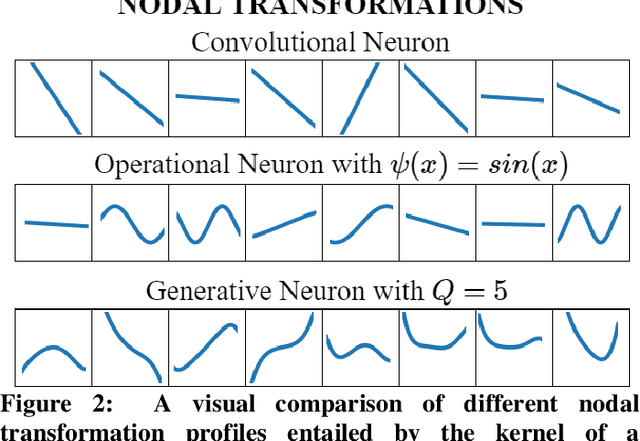

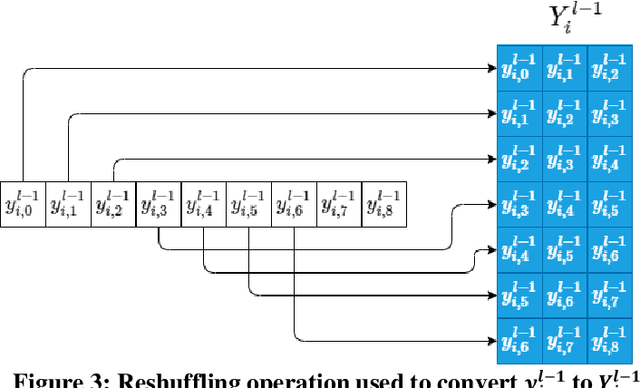

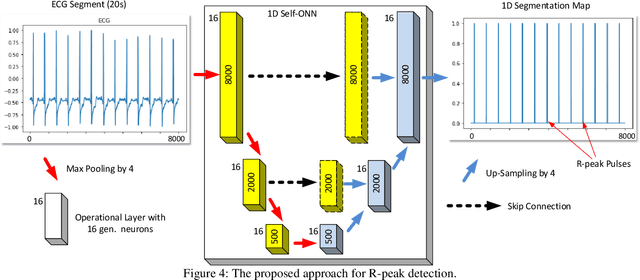

Robust Peak Detection for Holter ECGs by Self-Organized Operational Neural Networks

Sep 30, 2021

Abstract: Although numerous R-peak detectors have been proposed in the literature, their robustness and performance levels may significantly deteriorate in low quality and noisy signals acquired from mobile ECG sensors such as Holter monitors. Recently, this issue has been addressed by deep 1D Convolutional Neural Networks (CNNs) that have achieved state-of-the-art performance levels in Holter monitors; however, they pose a high complexity level that requires special parallelized hardware setup for real-time processing. On the other hand, their performance deteriorates when a compact network configuration is used instead. This is an expected outcome as recent studies have demonstrated that the learning performance of CNNs is limited due to their strictly homogeneous configuration with the sole linear neuron model. This has been addressed by Operational Neural Networks (ONNs) with their heterogeneous network configuration encapsulating neurons with various non-linear operators. In this study, to further boost the peak detection performance along with an elegant computational efficiency, we propose 1D Self-Organized Operational Neural Networks (Self-ONNs) with generative neurons. The most crucial advantage of 1D Self-ONNs over the ONNs is their self-organization capability that removes the need to search for the best operator set per neuron since each generative neuron has the ability to create the optimal operator during training. The experimental results over the China Physiological Signal Challenge-2020 (CPSC) dataset with more than one million ECG beats show that the proposed 1D Self-ONNs can significantly surpass the state-of-the-art deep CNN with less computational complexity. Results demonstrate that the proposed solution achieves 99.10% F1-score, 99.79% sensitivity, and 98.42% positive predictivity in the CPSC dataset which is the best R-peak detection performance ever achieved.
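The three figures reported above are mutually consistent, since the F1-score is the harmonic mean of sensitivity (recall) and positive predictivity (precision). A short check, with the helper name `f1_from_se_ppv` chosen here purely for illustration:

```python
def f1_from_se_ppv(sensitivity, ppv):
    """F1-score as the harmonic mean of sensitivity and positive predictivity."""
    return 2 * sensitivity * ppv / (sensitivity + ppv)


# Values quoted in the abstract for the CPSC dataset.
f1 = f1_from_se_ppv(0.9979, 0.9842)
print(f"{100 * f1:.2f}%")  # prints 99.10%
```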

Real-Time Patient-Specific ECG Classification by 1D Self-Operational Neural Networks

Sep 30, 2021

Abstract: Despite the proliferation of numerous deep learning methods proposed for generic ECG classification and arrhythmia detection, compact systems with the real-time ability and high accuracy for classifying patient-specific ECG are still few. Particularly, the scarcity of patient-specific data poses an ultimate challenge to any classifier. Recently, compact 1D Convolutional Neural Networks (CNNs) have achieved the state-of-the-art performance level for the accurate classification of ventricular and supraventricular ectopic beats. However, several studies have demonstrated that the learning performance of the conventional CNNs is limited because they are homogeneous networks with a basic (linear) neuron model. In order to address this deficiency and further boost the patient-specific ECG classification performance, in this study, we propose 1D Self-organized Operational Neural Networks (1D Self-ONNs). Due to their self-organization capability, Self-ONNs have a clear advantage over conventional ONNs, since the prior search within the operator set library for the best possible set of operators is entirely avoided. As the first study where 1D Self-ONNs are proposed for a classification task, our results over the MIT-BIH arrhythmia benchmark database demonstrate that 1D Self-ONNs can surpass 1D CNNs by a significant margin while having a similar computational complexity. Under AAMI recommendations and with minimal common training data used, over the entire MIT-BIH dataset 1D Self-ONNs have achieved 98% and 99.04% average accuracies, 76.6% and 93.7% average F1 scores on supraventricular and ventricular ectopic beat (VEB) classifications, respectively, which is the highest performance level ever reported.

Early Bearing Fault Diagnosis of Rotating Machinery by 1D Self-Organized Operational Neural Networks

Sep 30, 2021

Abstract: Preventive maintenance of modern electric rotating machinery (RM) is critical for ensuring reliable operation, preventing unpredicted breakdowns and avoiding costly repairs. Recently, many studies have investigated machine learning monitoring methods, especially those based on Deep Learning networks, focusing mostly on detecting bearing faults; however, none of them addressed bearing fault severity classification for early fault diagnosis with high enough accuracy. 1D Convolutional Neural Networks (CNNs) have indeed achieved good performance for detecting RM bearing faults from raw vibration and current signals but did not classify fault severity. Furthermore, recent studies have demonstrated the limited learning capability of conventional CNNs, which is attributed to the basic underlying linear neuron model. Recently, Operational Neural Networks (ONNs) were proposed to enhance the learning capability of CNNs by introducing non-linear neuron models and further heterogeneity in the network configuration. In this study, we propose 1D Self-organized ONNs (Self-ONNs) with generative neurons for bearing fault severity classification and continuous condition monitoring. Experimental results over the benchmark NSF/IMS bearing vibration dataset using both x- and y-axis vibration signals for inner race and rolling element faults demonstrate that the proposed 1D Self-ONNs achieve a significant performance gap over the state-of-the-art (1D CNNs) with similar computational complexity.

Real-Time Glaucoma Detection from Digital Fundus Images using Self-ONNs

Sep 28, 2021

Abstract: Glaucoma leads to permanent vision disability by damaging the optic nerve that transmits visual images to the brain. The fact that glaucoma does not show any symptoms as it progresses and cannot be stopped at the later stages makes it critical to diagnose it in its early stages. Although various deep learning models have been applied for detecting glaucoma from digital fundus images, due to the scarcity of labeled data, their generalization performance was limited along with high computational complexity and special hardware requirements. In this study, compact Self-Organized Operational Neural Networks (Self-ONNs) are proposed for early detection of glaucoma in fundus images and their performance is compared against the conventional (deep) Convolutional Neural Networks (CNNs) over three benchmark datasets: ACRIMA, RIM-ONE, and ESOGU. The experimental results demonstrate that Self-ONNs not only achieve superior detection performance but can also significantly reduce the computational complexity, making them a potentially suitable network model for biomedical datasets, especially when the data is scarce.

Self-Organized Residual Blocks for Image Super-Resolution

May 31, 2021

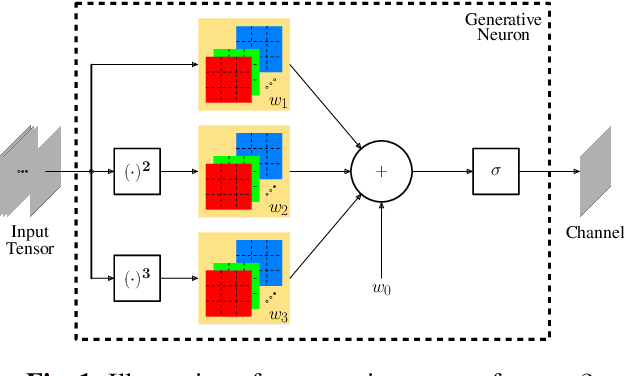

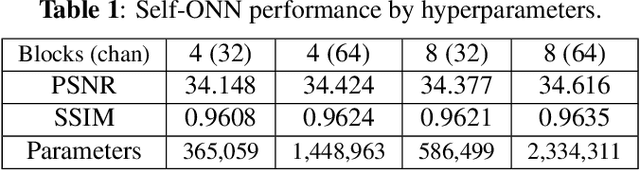

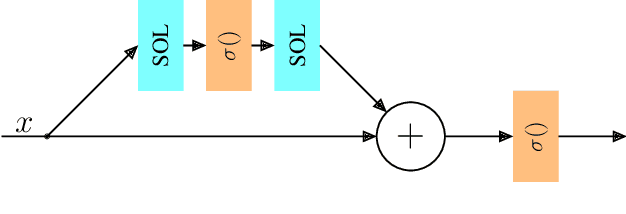

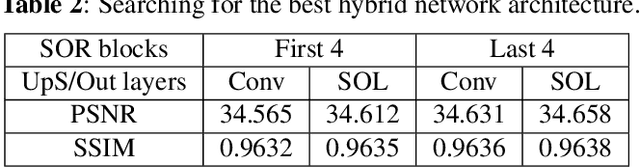

Abstract: It has become a standard practice to use the convolutional networks (ConvNet) with ReLU non-linearity in image restoration and super-resolution (SR). Although the universal approximation theorem states that a multi-layer neural network can approximate any non-linear function with the desired precision, it does not reveal the best network architecture to do so. Recently, operational neural networks (ONNs) that choose the best non-linearity from a set of alternatives, and their "self-organized" variants (Self-ONN) that approximate any non-linearity via Taylor series have been proposed to address the well-known limitations and drawbacks of conventional ConvNets such as network homogeneity using only the McCulloch-Pitts neuron model. In this paper, we propose the concept of self-organized operational residual (SOR) blocks, and present hybrid network architectures combining regular residual and SOR blocks to strike a balance between the benefits of stronger non-linearity and the overall number of parameters. The experimental results demonstrate that the proposed architectures yield performance improvements in both PSNR and perceptual metrics.

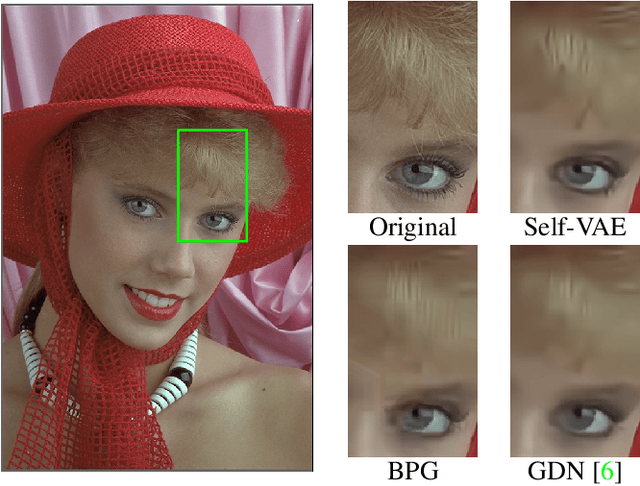

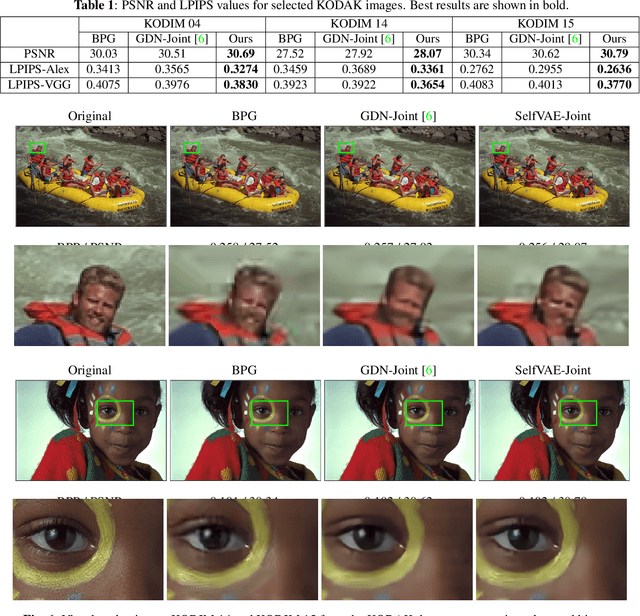

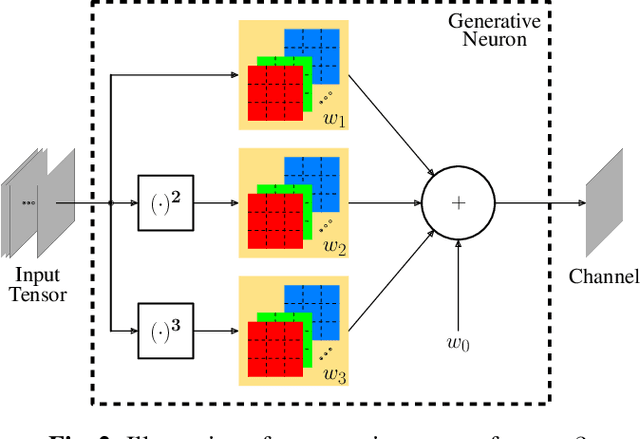

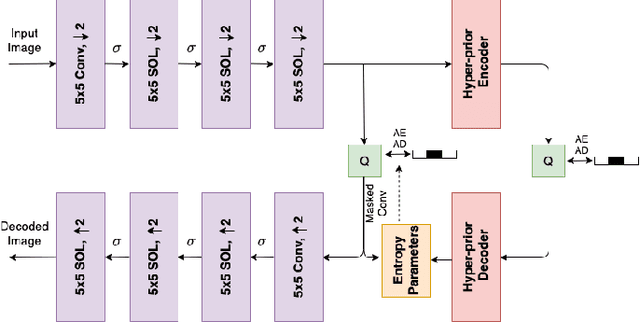

Self-Organized Variational Autoencoders (Self-VAE) for Learned Image Compression

May 28, 2021

Abstract: In end-to-end optimized learned image compression, it is standard practice to use a convolutional variational autoencoder with generalized divisive normalization (GDN) to transform images into a latent space. Recently, Operational Neural Networks (ONNs) that learn the best non-linearity from a set of alternatives, and their self-organized variants, Self-ONNs, that approximate any non-linearity via Taylor series have been proposed to address the limitations of convolutional layers and a fixed nonlinear activation. In this paper, we propose to replace the convolutional and GDN layers in the variational autoencoder with self-organized operational layers, and propose a novel self-organized variational autoencoder (Self-VAE) architecture that benefits from stronger non-linearity. The experimental results demonstrate that the proposed Self-VAE yields improvements in both rate-distortion performance and perceptual image quality.

Convolutional versus Self-Organized Operational Neural Networks for Real-World Blind Image Denoising

Mar 04, 2021

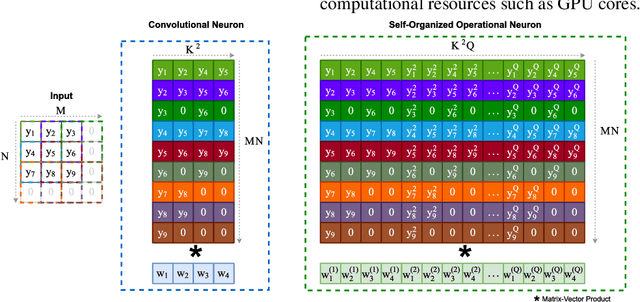

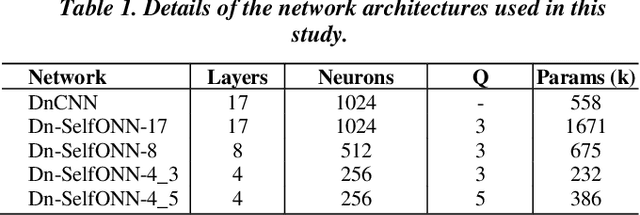

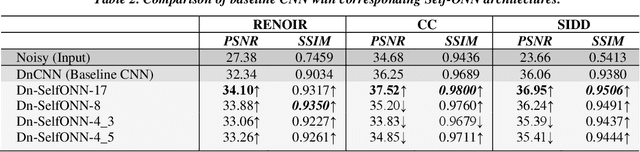

Abstract: Real-world blind denoising poses a unique image restoration challenge due to the non-deterministic nature of the underlying noise distribution. Prevalent discriminative networks trained on synthetic noise models have been shown to generalize poorly to real-world noisy images. While curating real-world noisy images and improving ground truth estimation procedures remain key points of interest, a potential research direction is to explore extensions to the widely used convolutional neuron model to enable better generalization with less data and lower network complexity, as opposed to simply using deeper Convolutional Neural Networks (CNNs). Operational Neural Networks (ONNs) and their recent variant, Self-organized ONNs (Self-ONNs), propose to embed enhanced non-linearity into the neuron model and have been shown to outperform CNNs across a variety of regression tasks. However, all such comparisons have been made for compact networks and the efficacy of deploying operational layers as a drop-in replacement for convolutional layers in contemporary deep architectures remains to be seen. In this work, we tackle the real-world blind image denoising problem by employing, for the first time, a deep Self-ONN. Extensive quantitative and qualitative evaluations spanning multiple metrics and four high-resolution real-world noisy image datasets against the state-of-the-art deep CNN, DnCNN, reveal that deep Self-ONNs consistently achieve superior results with performance gains of up to 1.76 dB in PSNR. Furthermore, Self-ONNs with half or even a quarter of the layers, requiring only a fraction of the computational resources of DnCNN, can still achieve similar or better results compared to the state-of-the-art.
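For readers less familiar with the metric, PSNR is a logarithmic function of the mean squared error (MSE) between a reference and an estimate. The sketch below uses the standard definition on flat lists of pixel values; the function name `psnr` and the default peak value of 255 (8-bit images) are assumptions made for illustration and are not taken from the paper.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(peak ** 2 / mse)


# A PSNR gain of g dB at a fixed peak value corresponds to an MSE ratio of 10**(g / 10).
mse_ratio = 10 ** (1.76 / 10)
```

Under this definition, a 1.76 dB gain corresponds to an MSE roughly 1.5 times lower than that of the baseline.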
